MRDIMM: The Wrong Tool for Today’s Memory Challenges

Jul 09, 2025

Skip the “MRDIMM” for now: Simple, Efficient, Standard Solutions for Scaling the “Memory Wall”

As enterprise workloads become increasingly data intensive and CPU cores proliferate, much attention has been given to the need for memory innovation to fuel advancements in both system memory capacity and performance. Analysts and press coined the term “memory wall” to describe how the rapid scaling of CPUs into the hundreds of high-performance cores per socket was hindered by much more incremental advancement in system memory (typically SDRAM) technology.

Put simply, doubling processor cores without increasing memory means each core gets only half as much memory bandwidth and capacity. For servers, this was becoming a real choke point as high-performance 64-core processors such as the AMD EPYC™ 7003 series (formerly code-named “Milan”) pushed DDR4 to its limits: the 64GB/DIMM capacity limit and 3200MT/s data transfer rates just could not keep up.

Fortunately, CPU vendors responded by increasing the number of memory channels per CPU and worked with JEDEC, the industry consortium driving memory standards, to deliver faster, more scalable DDR5 technology. In addition to numerous security, reliability and energy efficiency enhancements, JEDEC-standard DDR5 would allow for more scale, with definitions of DIMMs of up to 512GB capacity and, eventually, 3rd generation MRDIMM roadmaps reaching data transfer rates of 17,600MT/s. This would allow adequate headroom to support ongoing processor performance gains for years to come. The initial releases of DDR5 started at 4800MT/s and now reach 6400MT/s, a necessary complement to the ongoing expansion of CPU memory channel counts and multiple DIMMs per channel, both of which come with their own limitations.

Today, customers have more choices than ever to find the balance of memory performance, cost and capacity to meet almost any workload need while retaining the multi-sourcing and interoperability that have been hallmarks of x86 computing. These innovative options include:

- Non-binary DIMMs – DRAM historically came in binary capacity points: 8GB/16GB/32GB/64GB/128GB and more. Prior to 2024, doubling capacity to very large (128/256GB) DIMMs involved new approaches to module development such as 3D stacking, which made the largest capacity DIMMs quite expensive in terms of cost/GB. In recent years, DRAM vendors have created non-binary capacity points: 24GB, 48GB and 96GB DIMMs that meet higher capacity and bandwidth needs at more affordable costs and with less need for over-provisioning.

- Faster DIMMs – Current JEDEC standard DDR5 DIMMs in the market range from 4800-6400 MT/s. There is often little/no premium for the higher performance modules thanks largely to the fiercely competitive DRAM market. While there are faster versions on the way, the DDR5 rate is already 2X the throughput rate of the prior DDR4 generation.

- Memory channels – Memory channels provide added memory bandwidth and capacity. AMD has been the leader in memory channel counts for generations. The AMD EPYC 7001 series processors topped the market with 8 memory channels, while the 9004 family debuted with a whopping 12 memory channels per CPU, and the AMD EPYC 9005 offers that same number. The 12 memory channels on the EPYC 9005 family allow scaling to over a terabyte of memory per socket using inexpensive 96GB DIMMs while reaching 614GB/s of total memory bandwidth per socket. That is enough affordable memory and memory bandwidth per socket to support up to 128 single-core VMs with up to 8GB of DRAM each!

- DIMMs/channel – Many CPUs let OEMs design system boards that populate each memory channel with either 1 or 2 DIMMs. This is often referred to as 1 DIMM per channel (1DPC) or 2 DIMMs per channel (2DPC). While it can be challenging for system designers to physically accommodate up to 48 DIMM slots on a system board (2 DIMMs x 12 channels x 2 CPUs), it is not uncommon. 2DPC allows for scaling of capacity and the ability to use less costly small capacity DIMMs to reach target capacity points, but it comes at the cost of reduced memory bandwidth. For example, you will often see platform memory specs list one MT/s figure for a “1DPC” platform config and a different, lower rate for the “2DPC” configuration. The AMD EPYC team recently posted a blog that shares more insight on the 1DPC/2DPC decision here.

- Compute Express Link (CXL)-based memory – AMD and server partners also offer CXL lanes for CXL-attached memory expansion, providing standards-based flexibility for a range of configurations that balance cost, performance, and capacity. Among other things, CXL provides an industry standard mechanism for using PCIe Gen5 resources to expand memory capacity and bandwidth. This option is relatively new, and as a result workload memory profiling is somewhat scarce, but it opens many new options for customers, including the re-use of DDR4 DIMMs to increase memory capacity in a cost-effective way.
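The per-socket figures quoted in the memory channels bullet above follow from simple arithmetic. The sketch below reproduces them, assuming a 64-bit (8-byte) data path per memory channel and decimal units; the function names are illustrative only, and the results are theoretical peaks rather than measured throughput.

```python
# Assumption: each DDR5 memory channel moves 8 bytes of data per transfer
# (64 data bits, ECC bits excluded). Units are decimal (1 GB/s = 1000 MB/s).
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gb_s(channels: int, mt_per_s: int) -> float:
    """Theoretical peak memory bandwidth per socket in GB/s."""
    return channels * mt_per_s * BYTES_PER_TRANSFER / 1000


def socket_capacity_gb(channels: int, dimms_per_channel: int, dimm_gb: int) -> int:
    """Total memory capacity per socket in GB."""
    return channels * dimms_per_channel * dimm_gb


# 12 channels of DDR5-6400 populated 1DPC with 96GB DIMMs, as in the text.
bandwidth = peak_bandwidth_gb_s(channels=12, mt_per_s=6400)
capacity = socket_capacity_gb(channels=12, dimms_per_channel=1, dimm_gb=96)

# The article's example: 128 single-core VMs with 8GB of DRAM each.
vm_count, vm_gb = 128, 8
fits = vm_count * vm_gb <= capacity
bandwidth_per_vm = bandwidth / vm_count

print(f"Peak bandwidth: {bandwidth:.1f} GB/s")
print(f"Capacity: {capacity} GB")
print(f"{vm_count} x {vm_gb}GB VMs fit: {fits}, ~{bandwidth_per_vm:.1f} GB/s peak per VM")
```

Under these assumptions, twelve 96GB DIMMs give 1,152GB per socket, which leaves room for the 1,024GB that 128 VMs at 8GB each would need.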

With all these options, customers have never had so many ways to meet their needs for affordable memory or to reach incredibly high system memory capacities while using industry standard technology that ensures longevity of investment, including the ability to swap DIMMs among vendors and, often, across several server generations.

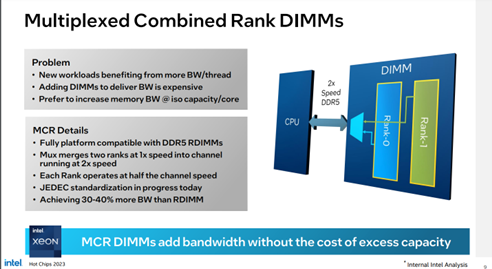

Despite all these easy options, some have opted to enable different approaches that expose customers to unnecessary downsides. One such approach is Intel’s advocacy for and support of new, non-standard technology on select SKUs of its “Granite Rapids” Intel Xeon 6 platforms. This memory comes with a lot of promises: improved system performance through enhanced prefetch and multiplexing to achieve up to 8,800MT/s, and memory capacity gains with tall DIMMs. Intel had been driving this technology with selected memory partners under the name “MCRDIMM” until the summer of 2023, as shown in this slide from an Intel presentation at the Hot Chips conference in 2023.

More recently, and confusingly, Intel and its partners started marketing these modules as “MRDIMM”, the same label JEDEC initially gave its forthcoming higher speed standard. While this sounds attractive (and philosophically aligned to JEDEC goals for next-gen memory), there are some challenges and implications with Intel’s strategy that warrant concerns about its use with Xeon 6 systems.

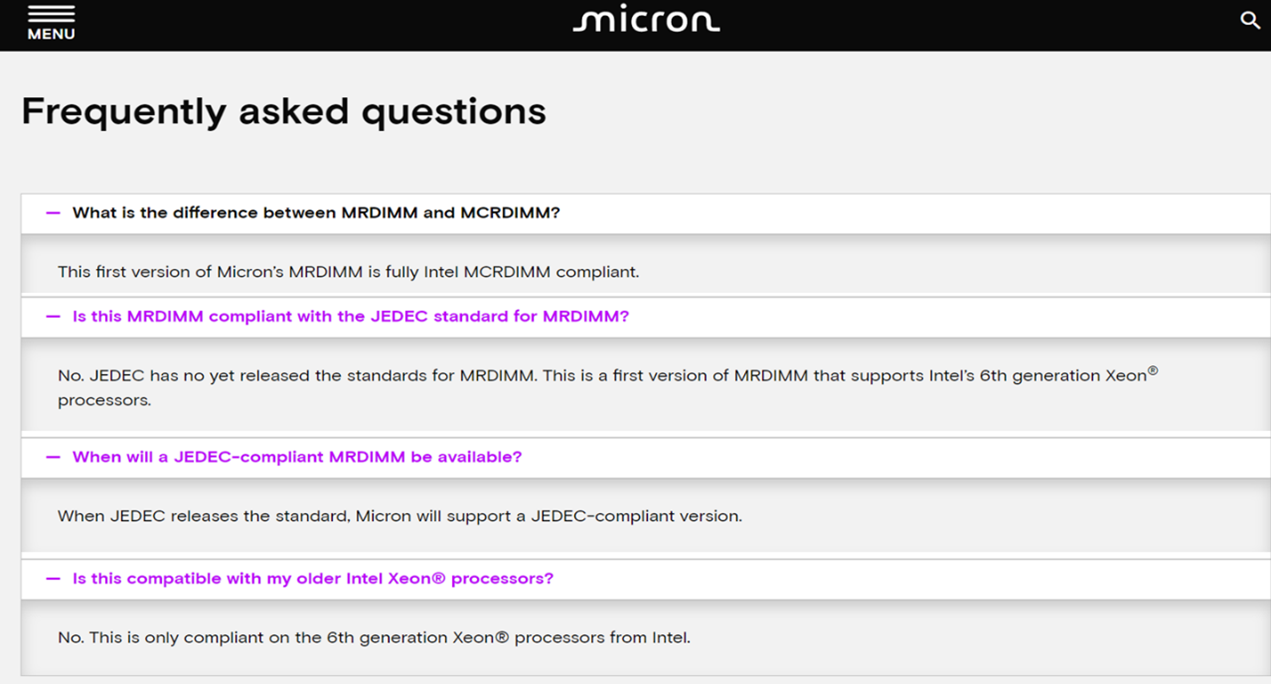

- “One and done” proprietary technology: Many of us remember Intel’s investment and promotion of proprietary “Intel® Optane™” persistent memory as an example of proprietary memory technology delivering a lot of potential. Similarly, the new Intel “MRDIMM” memory developed by Micron and others was touted as another proprietary technology, bringing its value into question in a field that is dominated by the scale and value of industry standard solutions. Consider the snapshot below from the Micron web site regarding the “MRDIMM” positioning and use.

What’s more, Intel has announced that only selected SKUs within the Xeon 6 “Granite Rapids” family will utilize this memory. Lastly, industry standard JEDEC high speed memory is expected to be available for use with next generation platforms. If true, this means that any MRDIMMs purchased for today’s systems may not be re-usable at the time of a platform upgrade, limiting the useful life of the media to a very narrow set of server offerings.

- High cost: Given that the Intel “MRDIMM” memory is supported uniquely on selected “Granite Rapids” systems, with no other sources of the volume that drives commodity pricing, these DIMMs initially hit the market at up to 2X premiums vs. standard 6400MT/s DDR5 memory modules of equivalent capacity. These premiums are logical as DIMM vendors seek to recoup design and manufacturing costs across a narrow base of supported systems. A full 6 months after DRAM vendors announced full production of these parts, they are still quite scarce in the market. Ongoing channel checks with leading memory distributors show limited to no availability. While DRAM pricing is historically quite volatile, in recent months costs can still range from 10-30% higher on a cost/gigabyte basis compared to standard 6400MT/s DIMMs. Limited availability of these parts (as of June 2025, several leading server OEMs do not seem to offer them for general sale on their web sites) can only further postpone cost parity. Higher memory cost, of course, translates to higher system cost, with memory being one of the highest-cost subsystems of many server solutions.

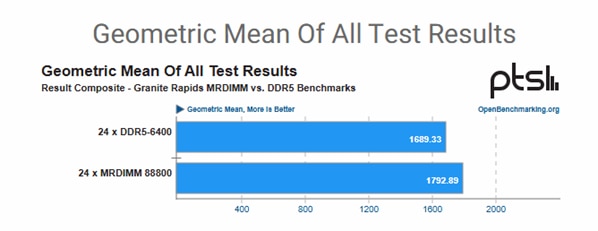

- Questionable performance and TCO benefits: While Intel’s “MRDIMM” memory may offer some potential performance benefits, it is important to understand the fine print. For example, Intel and memory vendors will point to the comparisons of specifications (6400MT/s vs up to 8800MT/s) to cite “up to 37% performance gains” comparing standard DDR5 vs the “MRDIMM”. But the theoretical max data transfer rates of memory may not accurately indicate real workload performance in this situation. Independent testing by Phoronix shown below closely confirms AMD testing—the performance gains of the “MRDIMM”-equipped platform are well below the “theoretical” gains.

The Geomean results of more than 50 tests in the Phoronix suite show a roughly 6% performance uplift when using MRDIMM at 8800MT/s compared to standard DDR5 at 6400MT/s on the same “Granite Rapids” platform. A deeper look into the Phoronix results shows that a set of primarily high-performance computing workloads drives low double-digit performance gains. Many other workloads demonstrate no measurable performance benefit—and a small number are less performant with the MRDIMMs. Given the cost premium and limited availability and re-usability for this technology, it is logical to question whether there are TCO benefits for enterprise adoption.
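To make the gap between specification and measurement concrete, the sketch below compares the theoretical transfer-rate uplift with the roughly 6% geomean uplift cited above, then divides by the 10-30% cost/GB premium mentioned earlier. It is a deliberately simplified illustration: the performance-per-memory-dollar ratio is a hypothetical metric introduced here, it ignores every system cost other than memory, and it is not a TCO model.

```python
def theoretical_uplift(fast_mt_s: float, base_mt_s: float) -> float:
    """Fractional uplift implied by the transfer-rate specs alone."""
    return fast_mt_s / base_mt_s - 1


def perf_per_memory_dollar(perf_uplift: float, cost_premium: float) -> float:
    """Relative performance per memory dollar versus the baseline (1.0 = parity).

    Hypothetical metric for illustration; assumes only memory cost changes.
    """
    return (1 + perf_uplift) / (1 + cost_premium)


spec_uplift = theoretical_uplift(8800, 6400)  # from the spec sheet rates
measured_uplift = 0.06  # approximate geomean uplift cited in the text

print(f"Spec-sheet uplift: {spec_uplift:.1%}")
print(f"Measured geomean uplift: {measured_uplift:.0%}")

# Cost/GB premium range quoted in the text for recent months.
for premium in (0.10, 0.30):
    ratio = perf_per_memory_dollar(measured_uplift, premium)
    print(f"Premium {premium:.0%}: relative perf per memory dollar = {ratio:.2f}")
```

Under these assumptions the ratio stays below 1.0 across the quoted premium range, which is the arithmetic behind questioning the value of the faster modules for general enterprise workloads. Workloads with larger measured gains would need to be evaluated separately.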

AMD has long recognized the critical role of memory to support leadership AMD EPYC CPU performance and has been at the forefront of enabling more memory channels to complement the growing number of high-performance cores in our CPU portfolio. As noted, AMD EPYC 9005 platforms support up to 12 memory channels with support for up to 6 terabytes of standard high performance 6400MT/s DDR5 memory per processor—offering strong memory capacity and bandwidth levels for demanding workloads while honoring the value proposition of standard, non-proprietary memory.

Not standing still, AMD is also committed to working with the memory industry to deliver a next generation of multiplexed, multi-ranked buffered DIMMs (as noted, JEDEC is also currently using the term “MRDIMM”), an innovation that takes industry standard DRAM and DDR5 interfaces to new levels of performance and capacity by supporting simultaneous access to two ranks of memory. A slightly deeper discussion on this point can be found here. The industry-standard JEDEC MRDIMM is a very compelling concept with a strong roadmap of future enhancements, and JEDEC is working to finalize these specifications now for a first generation to debut at 12,800MT/s. That is double today’s standard 6400MT/s rate, a far more sizeable advantage than the step from 6400MT/s to the proprietary 8,800MT/s parts Intel offers.

With these challenges considered, it’s clear that for most customers, Intel’s latest memory foray is a costly ingredient that should be avoided when specifying infrastructure. Customers can reduce risk and gain more value using industry standard solutions. Gaining extended lifespan across multiple server generations, reaping cost benefits of large volume market adoption, and having a broad choice of vendor and system compatibility provides a more rational alternative for many enterprise customers.

To learn more about how AMD EPYC processors support standard DDR5 and CXL memory and to learn more about our upcoming support for industry standard MR memory, please visit our website.