Build AI Anywhere with ROCm™ Software: AMD and Microsoft Bring Cloud-to-Client Power to Developers

May 19, 2025

AMD empowers developers to explore innovation without limits

In today’s rapidly evolving AI landscape, innovation is only limited by the imagination of developers and fueled by powerful partnerships, cutting-edge hardware and an open software ecosystem.

AMD and Microsoft collaborate to empower innovation across Cloud to Client

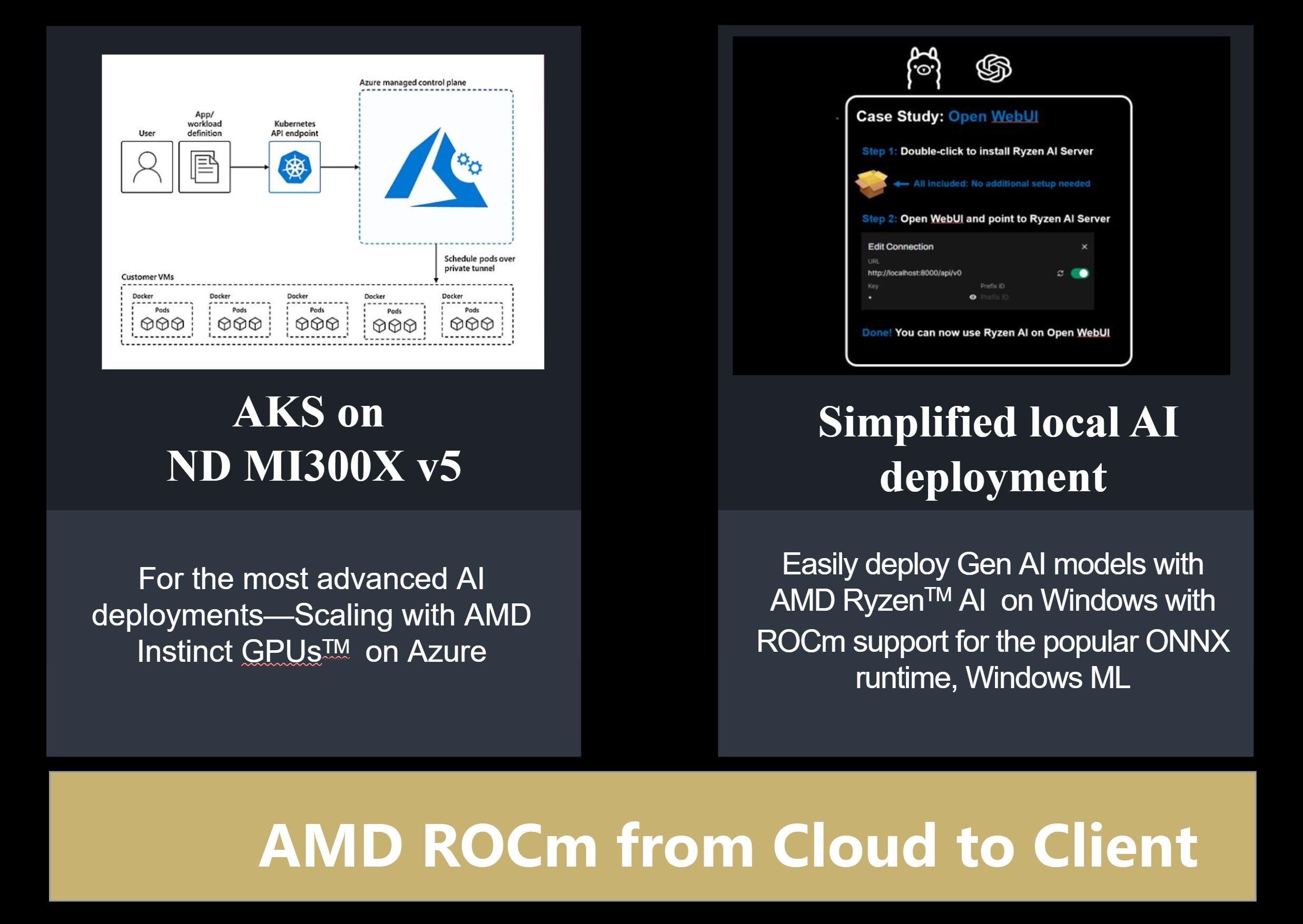

Our longstanding collaboration with Microsoft continues to push the boundaries of AI innovation across Cloud to Client. This joint effort accelerates innovation and gives the AI developer community high-performance, secure platforms and open-source tools. With ROCm support for both Cloud and Windows ML powered by ONNX Runtime, developers can move development and deployment from Azure cloud on AMD Instinct platforms to Client devices with AMD Ryzen and Radeon.

“We are excited about our shared vision with AMD to bring the best AI experiences to developers and customers from Cloud to Client. Our collaboration on the Ryzen AI Execution Provider and Windows ML make Windows 11 PCs powered by Ryzen AI APUs an ideal platform for developers to drive rapid AI innovation. And for Cloud, we look forward to our continued partnership in Azure across AMD Instinct and EPYC based infrastructures,” said Logan Iyer, Distinguished Engineer, Windows Platform + Developer.

AMD ROCm Everywhere – Build without Barriers

The scale and complexity of modern AI workloads continue to grow, and so do the expectations around performance and ease of deployment. AMD ROCm is a major step towards democratizing high-performance computing and AI development. ROCm is an open-source software stack that spans development and deployment from cloud to client with minimal code changes.

ROCm supports all popular frameworks and provides Day 0 support for top models, including:

- Llama 4 Maverick and Scout: Meta's latest multimodal intelligence models

- Gemma 3: Optimized for low-latency inference

- DeepSeek-R1/V3: Enhanced throughput and efficiency

- Llama: Support for various configurations such as 8B, 70B, and 405B

The recent ROCm 6.4 release delivers sweeping performance improvements across major AI frameworks like PyTorch, Megatron-LM, JAX, and SGLang—enabling faster, memory-efficient LLM training and inference on AMD Instinct™ GPUs. Whether you’re building next-gen AI applications, optimizing LLM inference, or managing containerized GPU clusters, ROCm integrates seamlessly with Microsoft Azure, enabling powerful AI and HPC workloads.

ROCm on Radeon supports machine learning development on Radeon GPUs. These GPUs offer significant improvements in AI performance, with up to 24GB or 48GB of GPU memory, making them suitable for large ML models. A local PC or workstation equipped with these GPUs provides a cost-effective way to develop and train ML models. ROCm on Radeon can run on Windows through the Windows Subsystem for Linux (WSL), which lets developers use the hardware acceleration of AMD Radeon cards. The same software stack also supports the AMD CDNA™ GPU architecture, so developers can migrate applications from their preferred framework into the datacenter. AMD makes various ROCm components available on Windows today and is working to enable additional support for ROCm on Windows 11.
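A quick way to confirm that a PyTorch install inside WSL is using the ROCm backend is to inspect the build and device information. The sketch below assumes a ROCm build of PyTorch, which exposes AMD GPUs through the `torch.cuda` namespace and sets `torch.version.hip`. The helper name `describe_backend` is illustrative, and the function returns a plain message when PyTorch is not installed.

```python
def describe_backend() -> str:
    """Report which accelerator backend, if any, PyTorch can see."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # ROCm builds of PyTorch set torch.version.hip; other builds leave it as None.
    hip_version = getattr(torch.version, "hip", None)
    if not torch.cuda.is_available():
        return "PyTorch is installed, but no GPU is visible"

    # ROCm builds expose AMD GPUs through the torch.cuda API.
    name = torch.cuda.get_device_name(0)
    backend = f"ROCm/HIP {hip_version}" if hip_version else "non-ROCm build"
    return f"{name} ({backend})"


if __name__ == "__main__":
    print(describe_backend())
```

Because ROCm builds reuse the `torch.cuda` API, model code that selects a device with `torch.device("cuda")` typically runs unchanged on a Radeon workstation and on Instinct GPUs in the datacenter.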

The collaboration between AMD and Microsoft ensures that AI workloads on Windows 11 are executed with high performance and accuracy. With ROCm adding support for Windows ML, powered by ONNX Runtime, and popular frameworks like PyTorch, developers can accelerate and optimize inference of machine learning models on PCs. For example, a Ryzen AI Copilot+ PC with up to 96GB of shared system memory for AI models can run 70B+ LLMs locally without the performance penalty of paging to system RAM that discrete graphics cards incur. Users can also run cloud-quality image generation models like SD3.5 Large and Flux locally on a Windows 11 PC, and enjoy seamless execution of cloud-based models on client Windows 11 PCs with ROCm.
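To put the 96GB figure in context, the weight footprint of a model can be estimated as parameter count times bytes per parameter. The sketch below is a rough estimate only: the precision table and helper names are illustrative assumptions, and it ignores the KV cache, activations, and runtime overhead, which all need memory on top of the weights.

```python
from typing import List

# Bytes per parameter for common weight precisions (illustrative assumption).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_footprint_gb(params_billion: float, precision: str) -> float:
    """Approximate weight size in GB (1 GB = 1e9 bytes), weights only."""
    # (params_billion * 1e9 params) * bytes / 1e9 bytes-per-GB simplifies to this.
    return params_billion * BYTES_PER_PARAM[precision]


def precisions_that_fit(params_billion: float, budget_gb: float) -> List[str]:
    """Return the precisions whose weights alone fit in the memory budget."""
    return [
        p for p in BYTES_PER_PARAM
        if weight_footprint_gb(params_billion, p) <= budget_gb
    ]


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        print(precision, weight_footprint_gb(70, precision), "GB")
    print(precisions_that_fit(70, 96))  # ['int8', 'int4']
```

Under these assumptions, a 70B model needs about 140 GB at 16-bit precision, about 70 GB at 8-bit, and about 35 GB at 4-bit, so fitting it in 96GB of shared memory implies running a quantized model.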

The AMD Advantage for AI development – Full Spectrum Compute Infrastructure with Open Ecosystem to build and deploy AI anywhere.

Build High Performance applications on Azure

AMD Instinct™ GPUs are purpose-built to handle the demands of next-gen models. As frontier models grow in both size and complexity, you need a solution that can keep up with the constantly evolving AI landscape. This is why AMD and Microsoft Azure partner to build infrastructure solutions like the ND MI300X v5 virtual machine (VM), ideal for AI applications that need to quickly process vast amounts of data. The AMD Instinct™ MI300X GPUs powering the Azure ND MI300X v5 VM can run today’s gigantic frontier models on one node. For example, all 671B parameters of DeepSeek-R1 fit in a single VM. The massive HBM memory capacity of the ND MI300X v5 VM also enables support for extended context lengths, well suited for rich input modalities and chain-of-thought reasoning models.

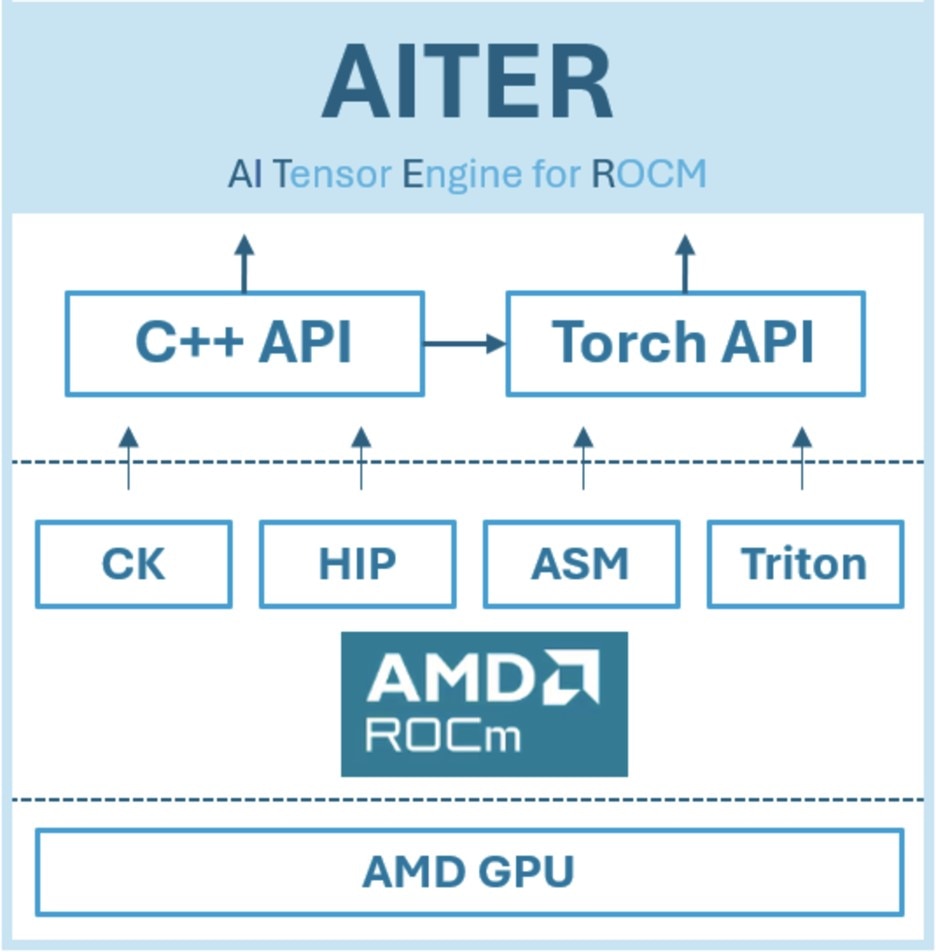
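The single-node claim for DeepSeek-R1 can be sanity-checked with back-of-the-envelope arithmetic. The figures below are assumptions not stated in this article: eight MI300X GPUs per VM, 192 GB of HBM per GPU, and one byte per parameter (FP8 weights). Confirm them against the current Azure VM specification and the model card before relying on them.

```latex
\begin{align*}
M_{\text{HBM}} &= 8 \times 192\ \text{GB} = 1536\ \text{GB} \\
M_{\text{weights}} &\approx 671 \times 10^{9}\ \text{params} \times 1\ \tfrac{\text{byte}}{\text{param}} = 671\ \text{GB} \\
M_{\text{free}} &= M_{\text{HBM}} - M_{\text{weights}} \approx 865\ \text{GB}
\end{align*}
```

Under these assumptions, more than half of the node's HBM remains after loading the weights. That remainder is what the KV cache and activations draw on, which is where the room for extended context lengths comes from.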

When working with GPUs, performance optimization is critical, especially for tasks involving artificial intelligence, which can be extremely demanding. To fully leverage the capabilities of advanced and specialized hardware, it is essential to master optimization strategies and ensure every available resource is utilized efficiently. That is why AMD provides the AI Tensor Engine for ROCm (AITER), a centralized repository filled with high-performance AI operators [1] designed to accelerate various AI workloads. AITER serves as a unified platform where customers can easily find and integrate optimized operators into their existing frameworks.

Build efficiently and securely with Azure VMs

Microsoft today offers over 40 different AMD-powered VM types to customers across the world. To reap the full benefits of AI to drive efficiency and innovation, organizations look to AMD on Azure to:

- First build a foundation of infrastructure capable of supporting everyday workloads while making room in the budget to invest in AI;

- Work with sensitive customer or financial data, regulated data, or highly sensitive intellectual property; and

- Interact with key partners and collaborators securely.

For those building on Azure, modernizing to these VMs, now widely available across Azure regions, means more room in the IT budget to invest in Azure AI services. In addition, Azure Confidential Clean Rooms, enabled by Confidential Containers on Azure Container Instances, a serverless offering, provide a strong cloud foundation to enable multiparty access while preserving data confidentiality and integrity in financial, governmental, retail, healthcare and other important use cases.

Together, these AMD on Azure offerings enable builders to get ready for AI.

Create compelling user experiences on Client Devices

AMD is working closely with Microsoft and app developers (ISVs) to create compelling user experiences. Windows ML simplifies the development of AI-enhanced applications across various hardware platforms. Its promise of write once, run anywhere enables ISVs and developers to scale their AI applications across CPUs, GPUs, and NPUs while balancing performance and battery life. Powered by ONNX Runtime and the standard ONNX model format, Windows ML lowers the barrier for developers to onboard their AI workloads, accelerating innovation and deployment and enhancing the overall user experience.
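The idea of targeting CPUs, GPUs, and NPUs from one model file can be illustrated with ONNX Runtime's execution provider list. The sketch below uses the ONNX Runtime Python API directly rather than the Windows ML API. The preference order and the helper names `pick_providers` and `create_session` are assumptions for illustration, and which providers are present depends on the installed ONNX Runtime package and hardware.

```python
from typing import List, Sequence

# Preference order is an assumption for illustration: NPU, then GPU, then CPU.
PREFERRED = (
    "VitisAIExecutionProvider",  # AMD NPU path used by Ryzen AI
    "DmlExecutionProvider",      # DirectML GPU path on Windows
    "CPUExecutionProvider",      # always-available fallback
)


def pick_providers(
    available: Sequence[str], preferred: Sequence[str] = PREFERRED
) -> List[str]:
    """Keep preferred providers that are available, in preference order."""
    chosen = [p for p in preferred if p in available]
    # Ensure the CPU provider is present so unsupported operators can still run.
    if "CPUExecutionProvider" not in chosen:
        chosen.append("CPUExecutionProvider")
    return chosen


def create_session(model_path: str):
    """Open an ONNX model with the best providers found on this machine."""
    import onnxruntime as ort  # lazy import so pick_providers works without it

    providers = pick_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)


if __name__ == "__main__":
    print(pick_providers(["DmlExecutionProvider", "CPUExecutionProvider"]))
```

The same `.onnx` file is passed to `create_session` on every machine; only the provider list changes, which is the portability property the paragraph above describes.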

The collaboration between AMD and Microsoft ensures that AI workloads on Windows 11 PCs are executed with high performance and accuracy, thanks to the ONNX Execution Provider (EP) certification process that guarantees quality across all Windows 11 platforms. The automatic installation of Windows ML runtime and EPs through the Microsoft Store on Windows ensures that developers and end users always have access to the latest, high-quality code components in the Windows ML stack. This standardized development platform reduces fragmentation in the Windows 11 PC landscape, accelerating the adoption of AI and bringing new, engaging experiences to Windows 11 clients. The deep partnership between AMD and Microsoft has been marked by a strong sense of co-engineering and mutual support, driving the pace of innovation and delivering great app experiences to the Windows ecosystem.

AMD mantra for innovation: Open software ecosystem built for developers, by developers

It is a commitment to open, collaborative AI progress. Please visit the AMD Developer Portal to find the resources you need for developing on AMD platforms.

Training: Access tutorials, blogs, open-source projects, and other resources for AI development with the ROCm™ software platform. This site provides an end-to-end journey for all AI developers who want to develop AI applications and optimize them on AMD GPUs.

- https://www.amd.com/en/developer/resources/rocm-hub/dev-ai.html

- https://www.amd.com/en/developer/resources/rocm-hub/dev-ai.html#blogs

Access to GPUs:

Developer Contest: If you enjoy a challenge, join the inference contest and win prizes.

DISCLAIMERS:

[1] AI operators: optimized mathematical functions or computational kernels that perform fundamental AI and machine learning tasks, such as matrix multiplications, convolutions, and activations, which are crucial for accelerating AI workloads.

The information contained herein is for informational purposes only and is subject to change without notice. While every precaution has been taken in the preparation of this document, it may contain technical inaccuracies, omissions and typographical errors, and AMD is under no obligation to update or otherwise correct this information. Advanced Micro Devices, Inc. makes no representations or warranties with respect to the accuracy or completeness of the contents of this document, and assumes no liability of any kind, including the implied warranties of noninfringement, merchantability or fitness for particular purposes, with respect to the operation or use of AMD hardware, software or other products described herein. No license, including implied or arising by estoppel, to any intellectual property rights is granted by this document. Terms and limitations applicable to the purchase or use of AMD products are as set forth in a signed agreement between the parties or in AMD's Standard Terms and Conditions of Sale. GD-18u.

© 2025 Advanced Micro Devices, Inc. All rights reserved. AMD, the AMD Arrow logo, and combinations thereof are trademarks of Advanced Micro Devices, Inc. Other product names used in this publication are for identification purposes only and may be trademarks of their respective owners. Certain AMD technologies may require third-party enablement or activation. Supported features may vary by operating system. Please confirm with the system manufacturer for specific features. No technology or product can be completely secure.