Local Tiny Agents: MCP Agents on Ryzen AI with Lemonade Server

Jun 10, 2025

We’re excited to announce that Model Context Protocol (MCP) is now supported on AMD Ryzen AI™ PCs. You can get started today by installing AMD Lemonade Server and connecting it to projects like Hugging Face’s Tiny Agents via streaming tool calls.

If you’re new to Lemonade, it's a lightweight open-source local LLM server designed to showcase the capabilities of AMD AI PCs. Think of it as a docking station for LLMs, letting you plug powerful models directly into apps like Open WebUI and run them locally, with no cloud needed. Developers can also use Lemonade to integrate with modern projects that rely on advanced features of the OpenAI API standard. This includes awesome projects like Tiny Agents, created by Hugging Face, which makes local tool calling remarkably smooth. 🚀

What Are Tiny Agents?

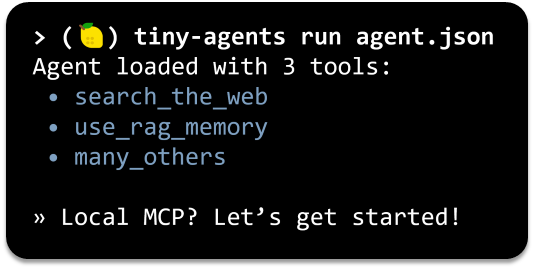

With MCP (Model Context Protocol), you can connect tools like web search, memory, and filesystem access directly to LLMs. Building on this, Tiny Agents makes it easy to create lightweight autonomous agents using these MCP-connected tools. The LLM simply loops between conversation and tool use on its own until the task is complete.
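The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the Tiny Agents implementation: `fake_llm`, the `TOOLS` registry, and the `add` tool are all hypothetical stand-ins for a real model and real MCP tools.

```python
import json
from typing import Callable

# Hypothetical tool registry: plain Python functions stand in for MCP tools.
TOOLS: dict[str, Callable[..., str]] = {
    "add": lambda a, b: str(a + b),
}


def fake_llm(messages: list[dict]) -> dict:
    """Stand-in for a real model: request one tool call, then answer."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {
            "role": "assistant",
            "content": None,
            "tool_calls": [
                {"id": "call_0", "name": "add",
                 "arguments": json.dumps({"a": 2, "b": 3})}
            ],
        }
    return {"role": "assistant",
            "content": f"The result is {tool_results[-1]['content']}."}


def run_agent(prompt: str, llm=fake_llm, max_turns: int = 5) -> str:
    """Alternate between model replies and tool execution until done."""
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_turns):
        reply = llm(messages)
        messages.append(reply)
        calls = reply.get("tool_calls")
        if not calls:
            # No tool requested: the model considers the task complete.
            return reply["content"]
        for call in calls:
            args = json.loads(call["arguments"])
            result = TOOLS[call["name"]](**args)
            # Feed the tool output back so the model can use it next turn.
            messages.append({"role": "tool",
                             "tool_call_id": call["id"],
                             "content": result})
    raise RuntimeError("agent did not finish within max_turns")


if __name__ == "__main__":
    print(run_agent("What is 2 + 3?"))
```

The `max_turns` cap is a design choice for the sketch: it keeps a misbehaving model from looping through tool calls forever.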

With Lemonade's latest update, you can now enable these tool interactions using your favorite LLM. That means low-friction access to external tools, and more dynamic, context-aware behavior for your application—with minimal setup.
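To give a feel for what streaming tool calls involve on the client side, here is a rough sketch of how streamed fragments can be stitched back into complete calls. It assumes the OpenAI-style chunk layout, where each chunk carries `choices[].delta.tool_calls[]` entries keyed by `index`. The sample chunks and the `run_sql` tool name are made up for illustration.

```python
import json


def merge_tool_call_deltas(chunks: list[dict]) -> list[dict]:
    """Accumulate streamed tool-call fragments into complete calls."""
    calls: dict[int, dict] = {}
    for chunk in chunks:
        for choice in chunk.get("choices", []):
            deltas = choice.get("delta", {}).get("tool_calls") or []
            for delta in deltas:
                call = calls.setdefault(
                    delta["index"], {"id": None, "name": "", "arguments": ""}
                )
                if delta.get("id"):
                    call["id"] = delta["id"]
                fn = delta.get("function") or {}
                # Name and arguments arrive as string fragments to concatenate.
                call["name"] += fn.get("name") or ""
                call["arguments"] += fn.get("arguments") or ""
    return [calls[i] for i in sorted(calls)]


# Hypothetical chunks: one tool call whose JSON arguments arrive in pieces.
example_chunks = [
    {"choices": [{"delta": {"tool_calls": [
        {"index": 0, "id": "call_0",
         "function": {"name": "run_sql", "arguments": ""}}]}}]},
    {"choices": [{"delta": {"tool_calls": [
        {"index": 0, "function": {"arguments": "{\"query\": \"SELECT"}}]}}]},
    {"choices": [{"delta": {"tool_calls": [
        {"index": 0, "function": {"arguments": " 1\"}"}}]}}]},
]

if __name__ == "__main__":
    for call in merge_tool_call_deltas(example_chunks):
        print(call["name"], json.loads(call["arguments"]))
```

The arguments are only valid JSON once the last fragment has arrived, so parsing is deferred until the stream for that call is complete.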

QuickStart: Run a Lemonade-Powered Tiny Agent

First, download and install Lemonade Server 7.0.2 or above using the installer, then launch the server by double-clicking the desktop icon or running the command below.

lemonade-server serve
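Before moving on, it can help to confirm that the server is reachable. The sketch below assumes the server exposes an OpenAI-style model listing at `/v1/models` under the `http://localhost:8000/api/` base URL used in the agent config later in this post. That route and the response shape (`data[].id`) are assumptions here, so adjust them if your version differs.

```python
import json
import urllib.request


def models_url(endpoint_url: str) -> str:
    """Build the (assumed) OpenAI-style model-listing URL from a base URL."""
    return endpoint_url.rstrip("/") + "/v1/models"


def list_models(endpoint_url: str = "http://localhost:8000/api/",
                timeout: float = 5.0) -> list[str]:
    """Return the model ids reported by the server."""
    with urllib.request.urlopen(models_url(endpoint_url), timeout=timeout) as resp:
        payload = json.load(resp)
    return [model["id"] for model in payload.get("data", [])]


if __name__ == "__main__":
    try:
        print(list_models())
    except OSError as exc:  # URLError and timeouts are OSError subclasses
        print(f"Server not reachable: {exc}")
```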

Install Node.js (available from nodejs.org) and the latest huggingface_hub with the MCP extra:

pip install "huggingface_hub[mcp]>=0.32.4"

Save this sample database into your current folder as test.db, the filename the agent config references. Then, save the agent config below to a new file named agent.json:

{
  "model": "Qwen3-8B-GGUF",
  "endpointUrl": "http://localhost:8000/api/",
  "servers": [
    {
      "type": "stdio",
      "config": {
        "command": "C:\\Program Files\\nodejs\\npx.cmd",
        "args": [
          "-y",
          "mcp-server-sqlite-npx",
          "test.db"
        ]
      }
    }
  ]
}
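The config above hardcodes the default Windows install path for `npx`. If Node.js lives somewhere else on your machine, a small script can generate the same file with the path resolved from your `PATH`. This is only a sketch: `build_agent_config` and `write_agent_config` are hypothetical helper names, and the field values simply mirror the config above.

```python
import json
import shutil
from pathlib import Path


def build_agent_config(npx_path: str, db_file: str = "test.db") -> dict:
    """Return the same structure as the agent.json shown above."""
    return {
        "model": "Qwen3-8B-GGUF",
        "endpointUrl": "http://localhost:8000/api/",
        "servers": [
            {
                "type": "stdio",
                "config": {
                    "command": npx_path,
                    "args": ["-y", "mcp-server-sqlite-npx", db_file],
                },
            }
        ],
    }


def write_agent_config(path: str = "agent.json") -> Path:
    """Locate npx on PATH and write the config next to the database."""
    npx = shutil.which("npx")
    if npx is None:
        raise FileNotFoundError("npx not found on PATH; install Node.js first")
    out = Path(path)
    out.write_text(json.dumps(build_agent_config(npx), indent=2),
                   encoding="utf-8")
    return out


if __name__ == "__main__":
    print(f"Wrote {write_agent_config()}")
```

Writing the file with `json.dumps` also takes care of escaping backslashes in Windows paths, which is easy to get wrong by hand.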

Run your agent with the Command Line Interface (CLI):

tiny-agents run ./agent.json

This sample SQLite tool allows LLMs to reason about and edit SQLite databases. The MCP server exposes the tool, and the agent discovers and connects to it automatically. Once connected, the LLM can use it during inference, looping through tool calls as needed. You can try this out with prompts like “Which tables are available?” and “What is the most expensive product?”
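To make those prompts concrete, here is roughly the kind of SQL that sits behind them, run directly with Python's built-in `sqlite3` module. The `products` table and its rows are invented for this sketch, since the schema of the sample database is not shown here. The queries the model actually issues may differ.

```python
import sqlite3


def demo_queries() -> tuple[list[str], tuple[str, float]]:
    """Answer both example prompts against a small in-memory database."""
    conn = sqlite3.connect(":memory:")
    # Hypothetical schema and data, standing in for the sample database.
    conn.execute("CREATE TABLE products (name TEXT, price REAL)")
    conn.executemany(
        "INSERT INTO products VALUES (?, ?)",
        [("Widget", 9.99), ("Gadget", 24.50), ("Gizmo", 14.25)],
    )
    # "Which tables are available?"
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    )]
    # "What is the most expensive product?"
    top = conn.execute(
        "SELECT name, price FROM products ORDER BY price DESC LIMIT 1"
    ).fetchone()
    conn.close()
    return tables, top


if __name__ == "__main__":
    tables, top = demo_queries()
    print("Tables:", tables)
    print("Most expensive:", top)
```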

In this example, the Qwen3 model is accelerated using Vulkan, a low-overhead, cross-platform API for high-performance graphics and compute. Vulkan lets the model run on your AMD Radeon™ GPU or integrated GPU, which keeps local inference fast and responsive.

Beyond Basic Examples: Stepping up your MCP Game

There are hundreds of MCP servers available: from basic calculators to image generation and editing frameworks. This flexibility makes it easy to find (or build) an MCP setup that fits your workflow. Some good resources to get started with MCP servers are awesome-mcp-servers and mcpservers.org.

If your application is designed for concise context usage and you're interested in exploring NPU + iGPU acceleration, check out the Hybrid models available on Lemonade for the AMD Ryzen AI 300 series PCs. These include models like Llama-xLAM-2-8b-fc-r-Hybrid, which has been fine-tuned for tool calling and delivers snappy responses!

Why This Matters

We’re entering a new chapter where AI agents don’t just respond: they act. Running Tiny Agents locally with Lemonade Server makes it easier to build practical, tool-using LLM applications without relying on the cloud.

You get a setup that’s:

- Private: Everything stays on your machine, which can be important when working with sensitive data.

- Free: Since everything happens locally, you’re not paying per API call or usage-minute. This makes it more sustainable for experimentation, development, or long-running tasks.

- Powerful: MCP tools for many tasks and services are broadly available, enabling you to extend the capabilities of your LLM with ease.

Shoutout to the Hugging Face team for pioneering Tiny Agents and pushing open tooling forward. Lemonade is proud to build on top of this amazing foundation.

Try It Yourself

Install Lemonade Server, configure a Tiny Agent, and see what you can build. If you run into something interesting or want to contribute, check out our GitHub, open an issue, or send us an email at lemonade@amd.com. We’d love to hear what you're working on.

Try it out, tweak it, or build your own. Your next tiny agent is just a loop away!

👉 github.com/lemonade-sdk/lemonade