Hybrid NPU/iGPU Optimized Agent on AMD Ryzen AI Powered PC

Jun 11, 2025

Introduction

HP Xiaohui, an NPU/iGPU (integrated GPU) hybrid optimized agent, is a generative AI service model designed for HP AI PCs built on AMD Ryzen™ AI 5 340 and Ryzen™ AI 7 350 processors. It helps users solve problems related to computer use, covering technical support, software assistance, hardware guidance, performance optimization, and personalized recommendations. The agent comes pre-installed on HP Windows laptops that ship with these processors. In this blog, we discuss the strengths of the AMD NPU/iGPU hybrid solution in both the prefill and token generation phases of AI model inference, and show how it works in the HP AI agent.

Hybrid Method

The hybrid solution, built on the ONNX Runtime GenAI (OGA) framework, combines the AI acceleration of the AMD NPU with that of the iGPU to improve performance on Ryzen AI powered laptops.

Developed using AMD Ryzen AI OGA Hybrid, the HP Xiaohui agent operates in a hybrid execution mode to deliver a more responsive and efficient user experience.

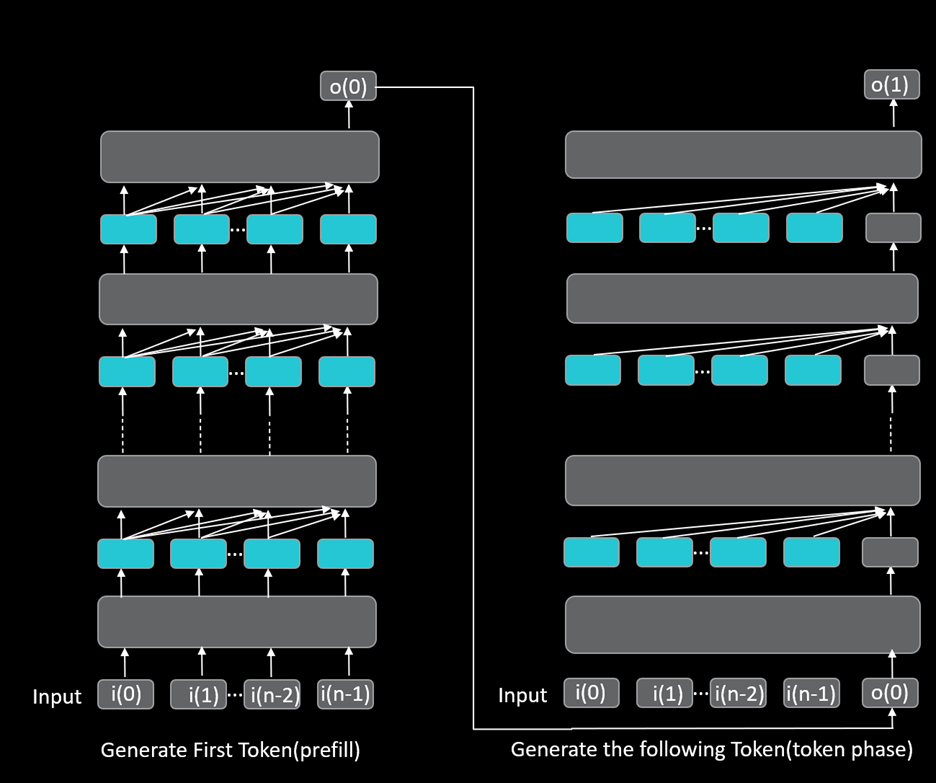

Figure 1: Workload of the Prefill and Token Generation Phases

Ryzen AI powered PCs achieve strong performance by combining the strengths of the NPU and the iGPU. The prefill phase is compute-intensive and benefits from running on the NPU, whose AI Engine delivers up to 50 TOPS (tera operations per second). The decode phase, by contrast, is memory-intensive and runs on the iGPU, which is well suited to high-bandwidth operations. Hybrid execution mode partitions the model so that each operation is scheduled on the better-suited device, NPU or iGPU. This minimizes time-to-first-token (TTFT) in the prefill phase and maximizes token generation speed (tokens per second, TPS) in the decode phase.
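
To make the two metrics concrete, here is a minimal Python sketch of how TTFT and TPS can be measured for any streaming token source. It is an illustration only: `measure_stream` and `fake_stream` are hypothetical names introduced here, and the fake stream merely sleeps to imitate a prefill delay followed by per-token decode delays; it is not a Ryzen AI API.

```python
import time
from typing import Callable, Iterable, Iterator, Tuple


def measure_stream(tokens: Iterable[str],
                   clock: Callable[[], float] = time.perf_counter
                   ) -> Tuple[float, float, int]:
    """Return (ttft_seconds, decode_tokens_per_second, token_count).

    TTFT is the delay until the first token arrives (dominated by prefill).
    TPS is computed over the tokens after the first one (the decode phase).
    """
    start = clock()
    first_token_time = None
    last_token_time = start
    count = 0
    for _ in tokens:
        now = clock()
        if first_token_time is None:
            first_token_time = now  # prefill has finished at this point
        last_token_time = now
        count += 1
    if first_token_time is None:
        raise ValueError("the stream produced no tokens")
    ttft = first_token_time - start
    decode_time = last_token_time - first_token_time
    tps = (count - 1) / decode_time if decode_time > 0 else float("nan")
    return ttft, tps, count


def fake_stream(prefill_s: float, per_token_s: float, n: int) -> Iterator[str]:
    """Hypothetical stand-in for a model: sleeps to imitate prefill and decode."""
    time.sleep(prefill_s)
    for i in range(n):
        if i:
            time.sleep(per_token_s)
        yield f"tok{i}"


if __name__ == "__main__":
    ttft, tps, n = measure_stream(fake_stream(0.05, 0.01, 20))
    print(f"tokens={n}  TTFT={ttft:.3f}s  decode TPS={tps:.1f}")
```

Separating the first token from the rest mirrors the split described above: a faster prefill device lowers the first number, while a faster decode device raises the second.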

Additionally, Ryzen AI OGA provides both C++ and Python APIs, along with pre-optimized models that developers in the Ryzen AI ecosystem can reuse and extend for their applications.
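
As a rough idea of what the Python API looks like, the sketch below runs a simple generation loop with the `onnxruntime_genai` package. It assumes a recent OGA release (0.6 or later, where `Generator.append_tokens` is available; earlier releases use a different call sequence) and a model folder already prepared for hybrid execution. The function name `generate` and its parameters are ours, and no chat template is applied to the prompt, so treat it as a starting point and not as the agent's actual implementation.

```python
def generate(model_dir: str, prompt: str, max_length: int = 256) -> str:
    """Generate text with ONNX Runtime GenAI (assumes onnxruntime-genai >= 0.6)."""
    # Imported lazily so this file can be loaded without the package installed.
    import onnxruntime_genai as og

    model = og.Model(model_dir)          # loads genai_config.json and the weights
    tokenizer = og.Tokenizer(model)
    stream = tokenizer.create_stream()   # incremental detokenizer for streaming

    params = og.GeneratorParams(model)
    params.set_search_options(max_length=max_length)

    generator = og.Generator(model, params)
    generator.append_tokens(tokenizer.encode(prompt))  # feeds the prompt (prefill)

    pieces = []
    while not generator.is_done():       # decode loop, one token per iteration
        generator.generate_next_token()
        pieces.append(stream.decode(generator.get_next_tokens()[0]))
    return "".join(pieces)
```

A call would look like `generate(model_dir, "What is an NPU?")`, where `model_dir` points at the model folder on your machine.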

The steps below show how to set up and run a model on a Ryzen AI powered PC:

1. Quantize FP32 ONNX model to FP16a/INT4w using Quark

    cd examples/torch/language_modeling/llm_ptq/
    python quantize_quark.py \
        --model_dir "meta-llama/Llama-2-7b-chat-hf" \
        --output_dir <quantized safetensor output dir> \
        --quant_scheme w_uint4_per_group_asym \
        --num_calib_data 128 \
        --quant_algo awq \
        --dataset pileval_for_awq_benchmark \
        --model_export hf_format \
        --data_type <datatype> \
        --exclude_layers

2. Ryzen AI compatibility post processing

    conda activate ryzen-ai-<version>
    model_generate --hybrid <output_dir> <quantized_model_path>

3. Run with Hybrid mode

    # To list the available options
    .\model_benchmark.exe -h

    # To run with default settings
    .\model_benchmark.exe -i $path_to_model_dir -f $prompt_file -l $list_of_prompt_lengths

    # To show more informational output
    .\model_benchmark.exe -i $path_to_model_dir -f $prompt_file --verbose

    # To run with given number of generated tokens
    .\model_benchmark.exe -i $path_to_model_dir -f $prompt_file -l $list_of_prompt_lengths -g $num_tokens

    # To run with given number of warmup iterations
    .\model_benchmark.exe -i $path_to_model_dir -f $prompt_file -l $list_of_prompt_lengths -w $num_warmup

    # To run with given number of iterations
    .\model_benchmark.exe -i $path_to_model_dir -f $prompt_file -l $list_of_prompt_lengths -r $num_iterations
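
When the benchmark is driven from a script, the command line can be assembled programmatically. The Python sketch below uses only the flags shown above (`-i`, `-f`, `-l`, `-g`, `-w`, `-r`, `--verbose`); the helper name `build_benchmark_cmd` is hypothetical, the prompt-length list is passed through as an opaque string, and combining several optional flags in one invocation is an assumption on our part.

```python
from typing import List, Optional


def build_benchmark_cmd(model_dir: str,
                        prompt_file: str,
                        prompt_lengths: Optional[str] = None,
                        num_tokens: Optional[int] = None,
                        num_warmup: Optional[int] = None,
                        num_iterations: Optional[int] = None,
                        verbose: bool = False,
                        exe: str = r".\model_benchmark.exe") -> List[str]:
    """Build an argument list for model_benchmark.exe from the flags shown above."""
    cmd = [exe, "-i", model_dir, "-f", prompt_file]
    if prompt_lengths is not None:
        cmd += ["-l", prompt_lengths]        # passed through unchanged
    if num_tokens is not None:
        cmd += ["-g", str(num_tokens)]       # number of generated tokens
    if num_warmup is not None:
        cmd += ["-w", str(num_warmup)]       # warmup iterations
    if num_iterations is not None:
        cmd += ["-r", str(num_iterations)]   # measured iterations
    if verbose:
        cmd.append("--verbose")
    return cmd
```

The returned list can be handed to `subprocess.run(cmd, check=True)` from the directory that contains the executable; building a list instead of a single string avoids quoting problems with paths that contain spaces.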

Additionally, AMD provides a set of pre-optimized LLMs that are ready to deploy with Ryzen AI software and the supporting runtime for hybrid execution. These models can be found on Hugging Face:

- https://huggingface.co/amd/Phi-3-mini-4k-instruct-awq-g128-int4-asym-fp16-onnx-hybrid

- https://huggingface.co/amd/Phi-3.5-mini-instruct-awq-g128-int4-asym-fp16-onnx-hybrid

- https://huggingface.co/amd/Mistral-7B-Instruct-v0.3-awq-g128-int4-asym-fp16-onnx-hybrid

- https://huggingface.co/amd/Qwen1.5-7B-Chat-awq-g128-int4-asym-fp16-onnx-hybrid

- https://huggingface.co/amd/chatglm3-6b-awq-g128-int4-asym-fp16-onnx-hybrid

- https://huggingface.co/amd/Llama-2-7b-hf-awq-g128-int4-asym-fp16-onnx-hybrid

- https://huggingface.co/amd/Llama-2-7b-chat-hf-awq-g128-int4-asym-fp16-onnx-hybrid

- https://huggingface.co/amd/Llama-3-8B-awq-g128-int4-asym-fp16-onnx-hybrid/tree/main

- https://huggingface.co/amd/Llama-3.1-8B-awq-g128-int4-asym-fp16-onnx-hybrid/tree/main

- https://huggingface.co/amd/Llama-3.2-1B-Instruct-awq-g128-int4-asym-fp16-onnx-hybrid

- https://huggingface.co/amd/Llama-3.2-3B-Instruct-awq-g128-int4-asym-fp16-onnx-hybrid

- https://huggingface.co/amd/Mistral-7B-Instruct-v0.1-hybrid

- https://huggingface.co/amd/Mistral-7B-Instruct-v0.2-hybrid

- https://huggingface.co/amd/Mistral-7B-v0.3-hybrid

- https://huggingface.co/amd/Llama-3.1-8B-Instruct-hybrid

- https://huggingface.co/amd/CodeLlama-7b-instruct-g128-hybrid

- https://huggingface.co/amd/DeepSeek-R1-Distill-Llama-8B-awq-asym-uint4-g128-lmhead-onnx-hybrid

- https://huggingface.co/amd/DeepSeek-R1-Distill-Qwen-1.5B-awq-asym-uint4-g128-lmhead-onnx-hybrid

- https://huggingface.co/amd/DeepSeek-R1-Distill-Qwen-7B-awq-asym-uint4-g128-lmhead-onnx-hybrid

- https://huggingface.co/amd/AMD-OLMo-1B-SFT-DPO-hybrid

- https://huggingface.co/amd/Qwen2-7B-awq-uint4-asym-g128-lmhead-fp16-onnx-hybrid

- https://huggingface.co/amd/Qwen2-1.5B-awq-uint4-asym-global-g128-lmhead-g32-fp16-onnx-hybrid

- https://huggingface.co/amd/gemma-2-2b-awq-uint4-asym-g128-lmhead-g32-fp16-onnx-hybrid
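
Any of the models listed above can be fetched with the `huggingface_hub` package. The following Python sketch is one possible way to do it: `repo_id_from_url` and `download_model` are hypothetical helper names, and `local_dir` is whatever folder you choose. The first helper turns a model URL from the list into the `owner/name` repository ID, dropping suffixes such as `/tree/main`; the second calls `snapshot_download` to pull the whole repository.

```python
from urllib.parse import urlparse


def repo_id_from_url(url: str) -> str:
    """Extract 'owner/name' from a Hugging Face model URL."""
    parts = [p for p in urlparse(url).path.split("/") if p]
    if len(parts) < 2:
        raise ValueError(f"not a model URL: {url}")
    return "/".join(parts[:2])  # ignore trailing segments such as tree/main


def download_model(url: str, local_dir: str) -> str:
    """Download every file of the model repository into local_dir."""
    # Imported lazily; requires `pip install huggingface_hub` and network access.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id_from_url(url), local_dir=local_dir)
```

The resulting folder can then be passed as the model directory to the benchmark tool or to an OGA-based application.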

Experiments

The HP OmniBook featuring the AMD Ryzen™ AI 7 350 has been publicly available since April 2025 and ships with an NPU-powered AI agent.

https://www.hpstore.cn/omnibook-7-aerongai-13-bg1078au-b91cbpa.html

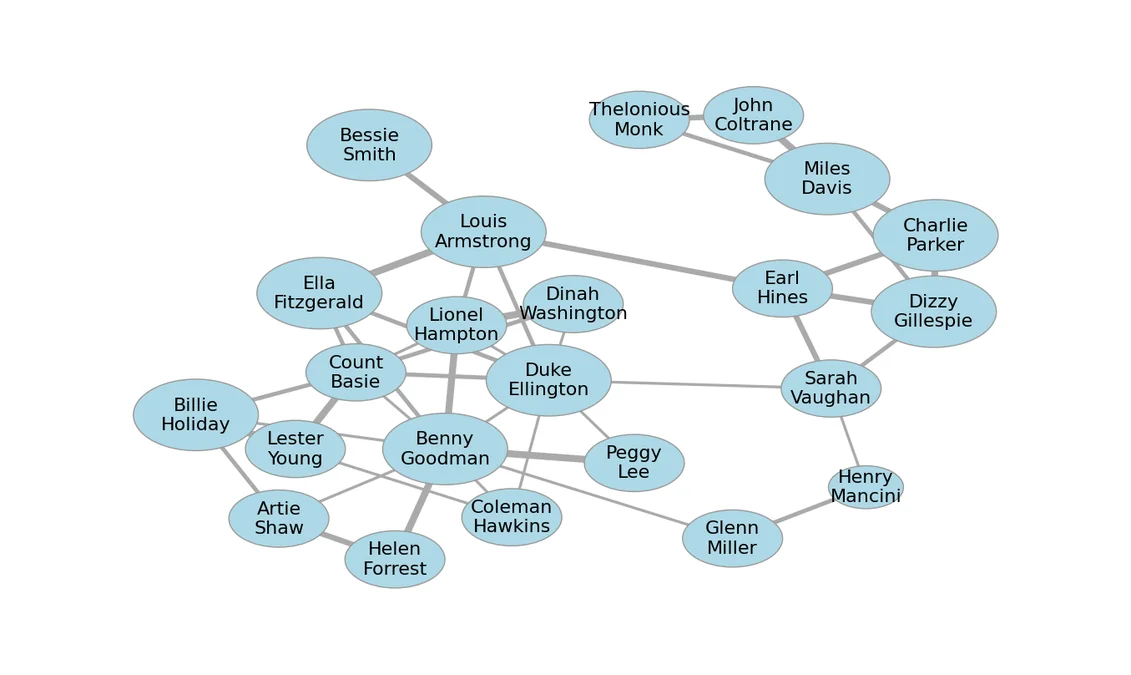

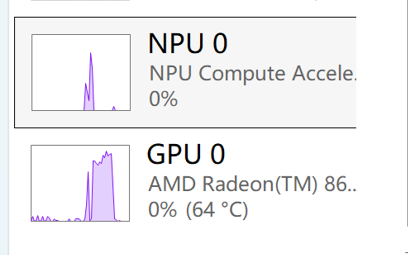

After logging in to Windows, users can open the pre-installed “HP Xiaohui” application from the Windows menu and ask the agent for help with a wide range of PC-related issues, including technical support, software help, and hardware guidance. Windows Task Manager shows the hybrid workload running on both the NPU and the iGPU.

Figure 2: NPU and iGPU Utilization from Windows Task Manager

With the AMD AI PC NPU/iGPU hybrid mode, end users benefit from both a shorter time to first token and faster token decoding. To run LLMs in the best performance mode, follow these steps: go to Windows → Settings → System → Power and set the power mode to Best Performance, then execute the following commands in the terminal:

    cd C:\Windows\System32\AMD
    xrt-smi configure --pmode performance

Summary

Hybrid execution mode minimizes time-to-first-token (TTFT) in the prefill phase and maximizes token generation speed (tokens per second, TPS) in the decode phase, so end users get a responsive experience in both phases.