AMD Instinct MI430X: Powering the Next Wave of AI and Scientific Discovery

Nov 19, 2025

AMD continues to lead the world in high-performance computing, powering the most advanced supercomputers across government and enterprise.

According to the Top500 list, Frontier at Oak Ridge National Laboratory became the world’s first exascale-class system, delivering 1.3 exaflops of performance and enabling breakthroughs in science and AI. Building on that, El Capitan at Lawrence Livermore National Laboratory is now the most powerful supercomputer in the world at 1.8 exaflops, advancing national security and large-scale simulation workloads. AMD also extends its leadership into enterprise with ENI’s HPC6, the largest commercial supercomputer globally, delivering 478 petaflops of performance for energy research and innovation.

Building on this legacy, the AMD Instinct™ MI430X GPU ushers in a new era of performance and efficiency for large-scale AI and high-performance computing (HPC). Built on the next-generation AMD CDNA™ architecture and supporting 432 GB of HBM4 memory and 19.6 TB/s of memory bandwidth, the GPU delivers extraordinary compute capabilities for HPC and AI, enabling researchers, engineers, and AI innovators to push the limits of what’s possible.

Driving Sovereign AI and Scientific Leadership

The AMD Instinct MI430X GPU will power some of the world’s most advanced AI and HPC systems, underscoring our leadership in enabling sovereign, open innovation.

- Discovery, at Oak Ridge National Laboratory, serves as one of the United States’ first AI Factory supercomputers. Using AMD Instinct MI430X GPUs and next-gen AMD EPYC “Venice” CPUs on the HPE Cray GX5000 supercomputing platform, Discovery will enable U.S. researchers to train, fine-tune, and deploy large-scale AI models while advancing scientific computing across energy research, materials science, and generative AI.

- Alice Recoque, a recently announced exascale-class system in Europe, integrates AMD Instinct MI430X GPUs and next-gen AMD EPYC “Venice” CPUs on Eviden’s newest BullSequana XH3500 platform to deliver exceptional performance for both double-precision HPC and AI workloads. The system’s architecture leverages the GPUs’ massive memory bandwidth and energy efficiency to accelerate scientific breakthroughs while meeting stringent efficiency goals.

These deployments reflect the role AMD plays in advancing sovereign AI infrastructure — empowering nations and institutions to build and control their own AI and HPC capabilities with open hardware and software foundations.

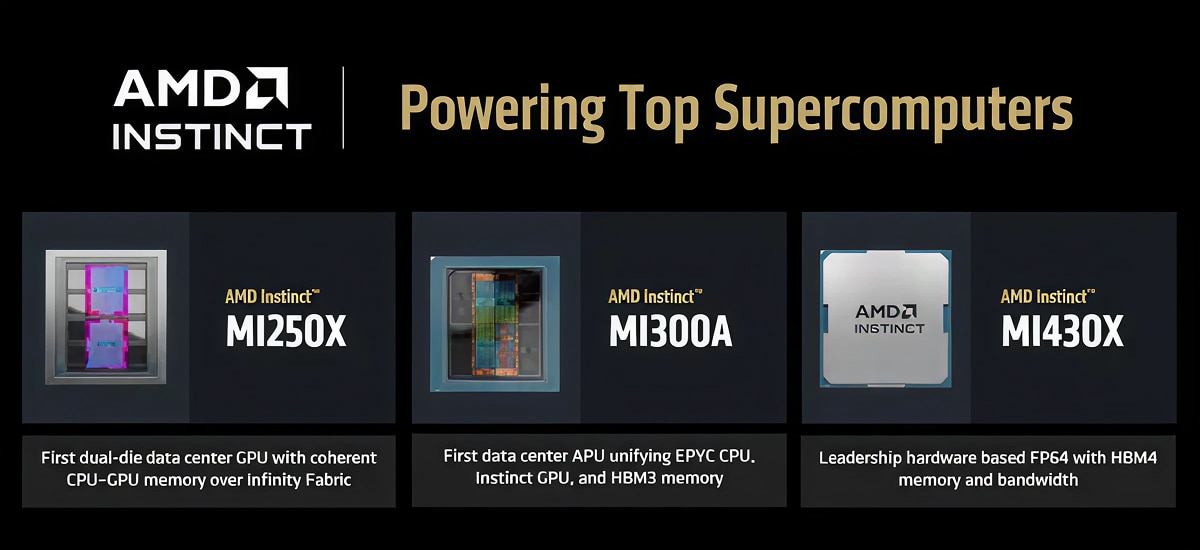

Three Generations of HPC Leadership

AMD leadership in HPC has been defined by three successive generations of innovation — each one redefining performance, programmability, and efficiency at scale.

The AMD Instinct MI250X, which powers Frontier, introduced a groundbreaking dual-GPU design connected to the CPU through a coherent Infinity Fabric, enabling tight integration between CPU and GPU compute and memory for exceptional performance in traditional HPC workloads.

Building on that foundation, the AMD Instinct MI300A, at the heart of El Capitan, became the industry’s first data center APU, combining CPU and GPU cores in a unified package. This architectural leap drove HPC performance and programmability to new levels, simplifying development while delivering unprecedented efficiency.

Now, the AMD Instinct MI430X, powering next-generation systems such as Discovery in the U.S. and Alice Recoque in Europe, continues that leadership with true hardware-based FP64 precision and advanced AI capabilities. It ensures that scientific computing and AI training can coexist on the same platform — without compromise in performance, accuracy, or scalability.

Convergence of HPC and AI at Scale

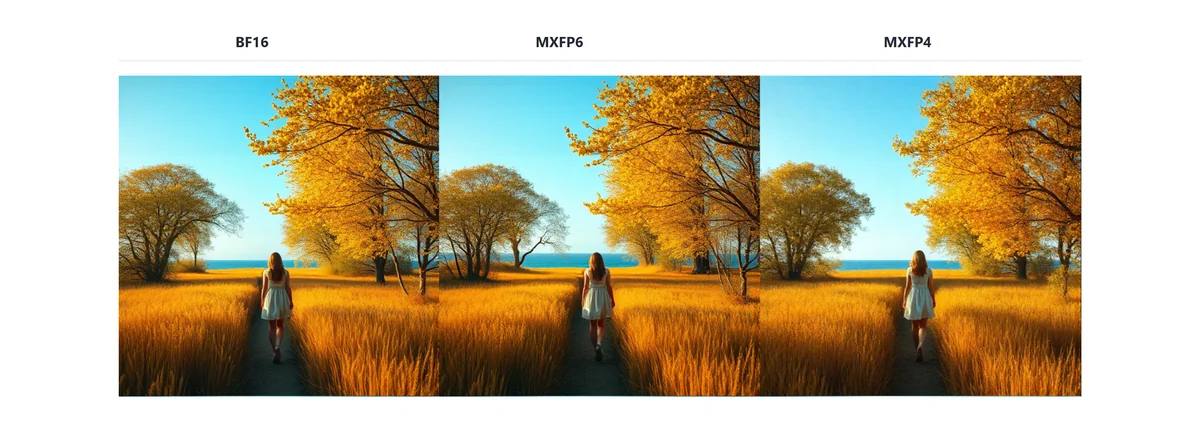

The AMD Instinct MI430X GPU is purpose-built for the convergence of AI and HPC workloads. Its extensive HBM4 memory and ultra-high bandwidth reduce bottlenecks common in training large language models or running complex simulations, while its FP4, FP8, and FP64 precision support ensures balanced performance for both AI and scientific applications.
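To put the memory and precision figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the 432 GB and 19.6 TB/s figures quoted in this article and assumes decimal units (1 GB = 10^9 bytes), densely packed values, and no overhead for activations, optimizer state, or metadata. The function names are illustrative, and the results are upper bounds rather than measured performance.

```python
# Rough sizing sketch based on the capacity and bandwidth quoted in the article.
# Assumptions: decimal units, densely packed values, no runtime overhead.

HBM_CAPACITY_BYTES = 432 * 10**9         # 432 GB of HBM4 (decimal GB assumed)
HBM_BANDWIDTH_BYTES_PER_S = 19.6e12      # 19.6 TB/s of memory bandwidth

# Storage width in bits for the precisions named in the article.
BITS_PER_VALUE = {"FP64": 64, "FP8": 8, "FP4": 4}


def max_values(capacity_bytes: int, bits_per_value: int) -> int:
    """Upper bound on how many values of a given width fit in memory."""
    return capacity_bytes * 8 // bits_per_value


def full_sweep_seconds(capacity_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Idealized time to read the entire memory once at peak bandwidth."""
    return capacity_bytes / bandwidth_bytes_per_s


if __name__ == "__main__":
    for name, bits in BITS_PER_VALUE.items():
        billions = max_values(HBM_CAPACITY_BYTES, bits) / 1e9
        print(f"{name}: up to {billions:.0f} billion values")
    sweep_ms = full_sweep_seconds(HBM_CAPACITY_BYTES, HBM_BANDWIDTH_BYTES_PER_S) * 1e3
    print(f"One full pass over memory: about {sweep_ms:.0f} ms at peak bandwidth")
```

Under these assumptions, a single GPU's memory holds at most 54 billion FP64 values, 432 billion FP8 values, or 864 billion FP4 values, and one full pass over memory takes roughly 22 ms at the quoted peak bandwidth. Real workloads will fit fewer values and sustain less than peak bandwidth.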

Combined with AMD ROCm™ software, the GPUs provide full-stack compatibility and scalability across data center and supercomputing environments. ROCm continues to integrate with leading frameworks such as PyTorch, TensorFlow, and JAX — ensuring optimized performance for both training and inference across thousands of GPUs.
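As a small illustration of the framework integration described above, the sketch below shows one way to tell whether a PyTorch build targets ROCm. It relies on the `torch.version.hip` and `torch.version.cuda` attributes, which PyTorch sets to a version string or `None` depending on the build. The helper name `describe_backend` and the stub version string are hypothetical, and a stand-in object is used so the example runs without PyTorch or a GPU installed.

```python
from types import SimpleNamespace


def describe_backend(torch_module) -> str:
    """Report which GPU backend a PyTorch build was compiled against.

    `torch_module` is expected to expose `version.hip` and `version.cuda`,
    as the real `torch` module does.
    """
    hip = getattr(torch_module.version, "hip", None)
    cuda = getattr(torch_module.version, "cuda", None)
    if hip:
        return f"ROCm/HIP build ({hip})"
    if cuda:
        return f"CUDA build ({cuda})"
    return "CPU-only build"


if __name__ == "__main__":
    # Stand-in for `import torch`; the version string is a placeholder,
    # not a real ROCm release number.
    fake_torch = SimpleNamespace(version=SimpleNamespace(hip="0.0.0-example", cuda=None))
    print(describe_backend(fake_torch))
```

With PyTorch installed, the same helper can be called as `describe_backend(torch)` after `import torch`. On ROCm builds, existing code that targets the `"cuda"` device string generally runs unchanged, because PyTorch exposes AMD GPUs through the same `torch.cuda` interface.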

Advancing the Frontier of Open, Efficient Computing

From Discovery in the U.S. to Alice Recoque in Europe, the AMD Instinct MI430X is accelerating a new generation of AI factories and scientific platforms built on open standards and energy-efficient performance.

As part of our mission to advance the frontier of high-performance and adaptive computing, the AMD Instinct MI430X reinforces our commitment to enabling global innovation — from powering the world’s most advanced supercomputers to driving the next breakthroughs in AI, science, and technology.