Open Standards for AI Scale: How AMD and OCP are Shaping the Next Era of AI Infrastructure

Oct 15, 2025

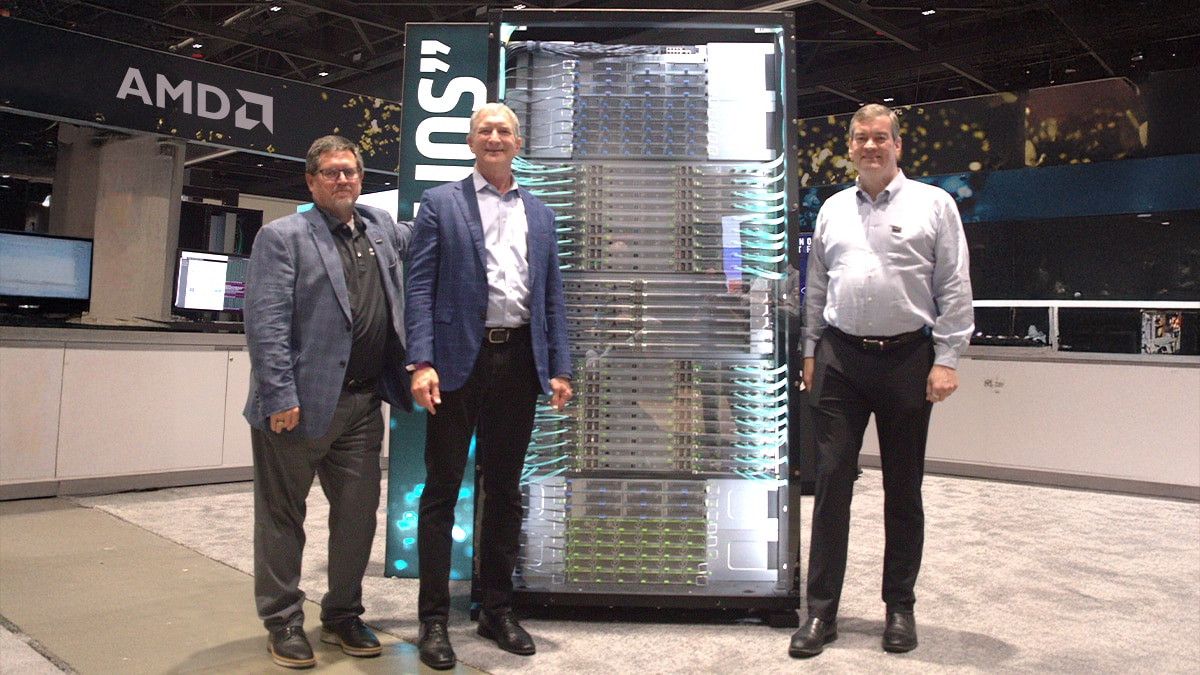

Thank you to Open Compute Project® (OCP) for the opportunity to deliver a keynote at the 2025 Global Summit on Monday. I was thrilled to see an audience of nearly 7,000 people who share a passion for an open compute ecosystem (more than 10,300 are attending OCP 2025). Here are a few points from my presentation.

AI is driving the most profound transformation in computing history. In just three years, we’ve seen AI models evolve from experimental prototypes into tools that deliver disruptive productivity gains and new workflows. The rapid deployment of AI workloads is creating insatiable demand for more compute, memory, and networking.

At AMD, we believe that the next era of AI infrastructure will be defined not just by faster silicon, but by open connectivity standards and software that allow innovation to scale.

Scaling with Open Interconnects

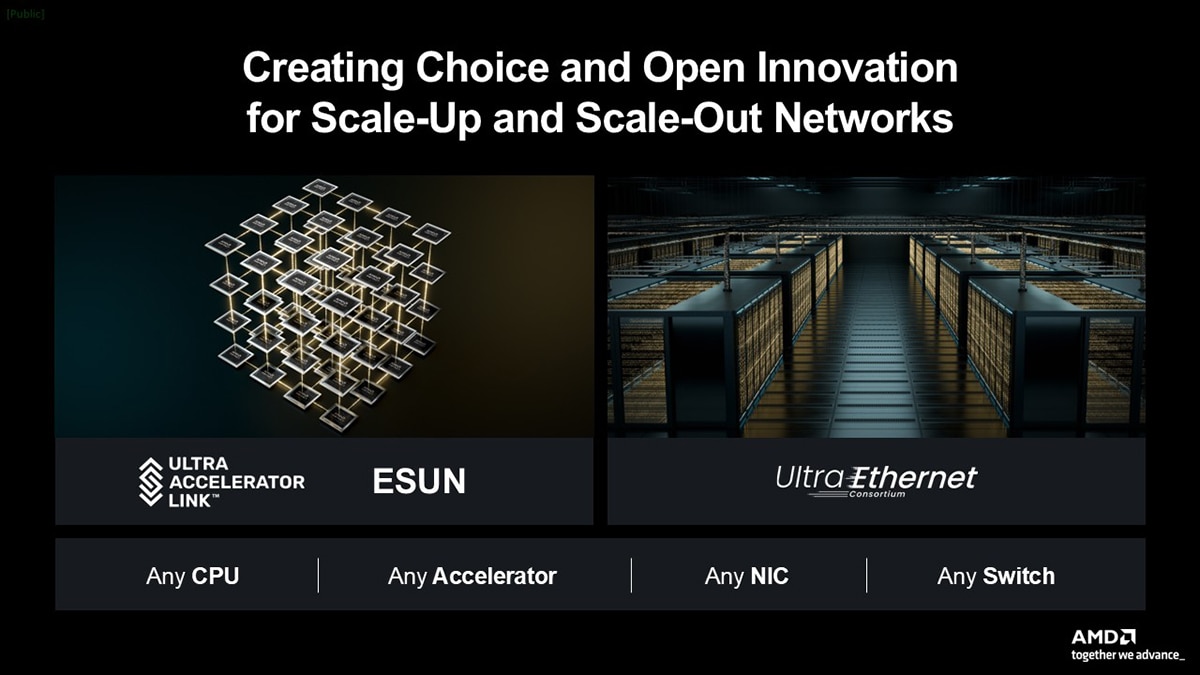

The rack is the new unit of compute in the build-out of large AI clusters. Interconnect is the key to enabling these racks to perform at a high level and operate as one cohesive machine. That’s why we’ve taken a lead role in forming open consortia that give customers choice in their implementation without vendor lock-in. Our efforts with UALink™, the Ultra Ethernet Consortium, and now the OCP ESUN initiative reflect one consistent strategy: to drive performance leadership through openness.

AMD has always championed open standards that foster innovation, interoperability, and customer choice. We led the creation of the UALink Consortium to establish an open, high-performance interconnect standard for AI scale-up systems. UALink delivers the protocol efficiency and ultra-low latency needed to unlock full accelerator performance and enable effective scaling as POD sizes grow.

At the same time, AMD is a strong proponent of advancing Ethernet technologies for AI infrastructure. Our leadership in the Ultra Ethernet Consortium and our recent announcement at the OCP Global Summit supporting the ESUN initiative underscore this commitment. ESUN enables vendor-unique scale-up protocols, such as UALink, to operate over standardized Ethernet layers. This aligns with AMD’s strategy of offering customers the flexibility to scale using UALink over Ethernet or through dedicated UALink switches.

We support UALink as the highest-performance, lowest-latency solution for AI scale-up connectivity. However, some customers value compatibility and interoperability with existing Ethernet-based infrastructure, and AMD is enabling that path as well.

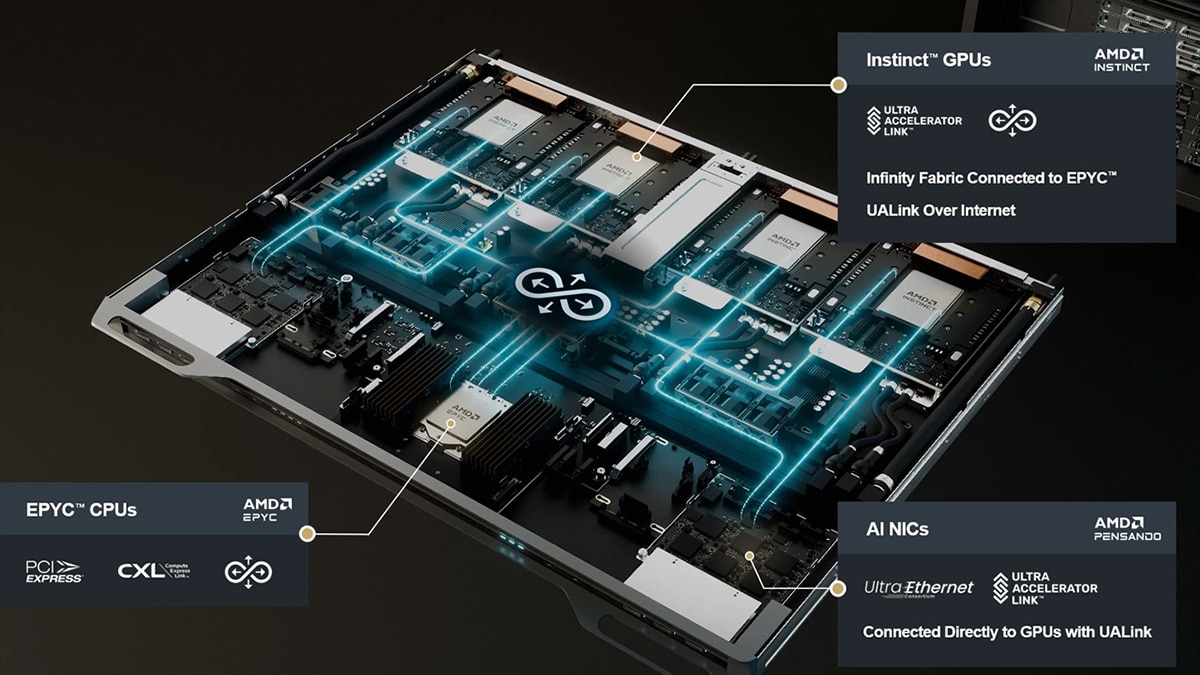

AMD Helios: Our Blueprint for Open AI Racks

AMD’s Helios rack architecture exemplifies this approach: it connects GPUs to NICs using UALink, and scales across racks using UALink-over-Ethernet, the kind of hybrid connectivity that ESUN will enable industry-wide over time. Our next-generation Instinct roadmap continues this direction, supporting both UALink-over-Ethernet and native UALink switch fabrics for flexible, open, and efficient AI system scaling.

The AMD Helios rack architecture brings these ideas together in practice.

Confidential Computing for AI Trust

AI innovation must be paired with security and trust. That’s why AMD continues to lead in Confidential Computing, delivering data and model protection even during active processing.

We’ve introduced end-to-end memory encryption and secure VM isolation across our EPYC™ CPU and Instinct™ GPU platforms. These capabilities are increasingly critical as organizations deploy sovereign AI infrastructure and run sensitive workloads across shared environments.

From collaborative research to national-scale deployments, Confidential Computing enables AI to scale securely across both private and public domains.

The Road Ahead: Open at Every Layer

I’ll conclude by highlighting what many of us already know: open ecosystems win. They’ve delivered faster progress, broader adoption, and greater trust across every major technological inflection.

The difference today is speed. AI is moving much faster than past transitions. That means open collaboration isn’t just a success factor. It’s the foundational requirement to meet industry demands.

At AMD, we’re all in. We’re committed to advancing open platforms from scale-up to scale-out, and from hardware to software. Because in the end, it’s collaboration, not lock-in, that will ensure a vibrant, resilient, and competitive AI ecosystem.

#OpenEcosystem #AIInfrastructure #ROCm #UALink #UltraEthernet #ConfidentialComputing #ESUN #AMDInstinct #OpenInnovation