AI PCs for On-The-Go Professionals

Explore laptops designed for modern AI-enabled productivity.

Bring AI acceleration to commercial PCs with AMD Ryzen™ AI PRO processors, enabling fast, privacy-focused, and responsive AI experiences at work.

Empower your teams with AI performance designed for real-world workloads, directly on the PC. AMD Ryzen™ AI PRO processors support the tools you already use through a broad ISV ecosystem, while maintaining the reliability required for professional environments.

Dedicated AI engine, powered by AMD XDNA, designed for the ultimate in AI processing efficiency.

Cores with powerful AI capabilities to bring exciting new AI PC experiences to life.

Dedicated AI accelerators built in to optimize AI workloads.

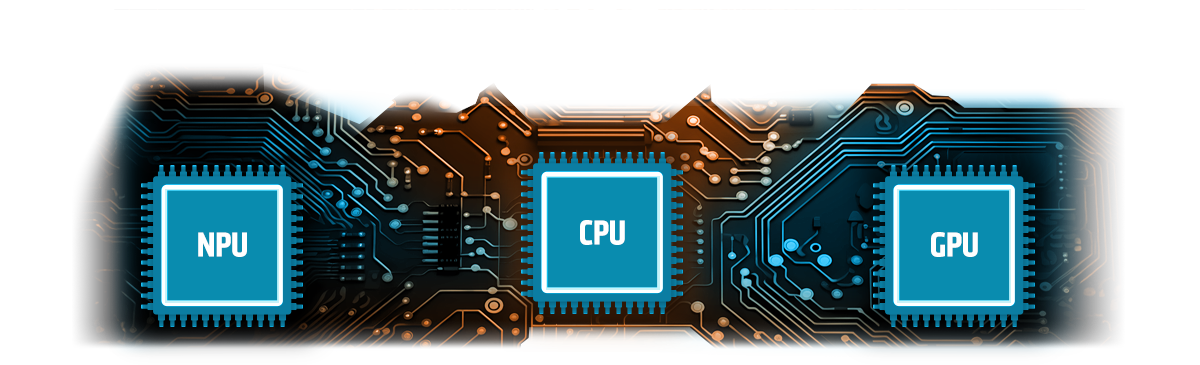

An AMD AI PC is a system that includes a dedicated neural processing unit (NPU) in addition to the CPU and GPU. Non-AI PCs can also run AI workloads, but they typically rely on the CPU, integrated graphics, or a discrete GPU to do so. An AMD AI PC is instead designed to execute AI tasks across all three processing engines based on the characteristics of the workload. Its on-die AI engine adds flexibility and potential efficiency by taking on suitable AI workloads, which lets those tasks run with lower power impact while leaving the CPU and GPU available for other system processes. The distinction is not whether a PC can run AI workloads, but whether it includes dedicated AI hardware designed to support them efficiently and concurrently.

AMD Ryzen AI refers to the combination of AI-capable hardware and supporting software integrated into AMD Ryzen processors. This includes a dedicated AI engine (NPU), AMD Radeon graphics, and Ryzen CPU cores working together to enable AI capabilities across a wide range of workloads. In practical terms, AMD Ryzen AI encompasses both the silicon features that accelerate AI processing and the software stack required to take advantage of them. AI workloads may run on the CPU, GPU, or NPU depending on performance, latency, and power requirements. AMD Ryzen processors with NPUs use the AMD XDNA™ and AMD XDNA™ 2 architectures, which are designed to support evolving AI workloads over time. Offloading AI tasks to the NPU enables multitasking with minimal performance impact, keeping the system responsive while AI processing runs in parallel.

AI workloads generally fall into two categories: training and inference. Training involves creating or refining an AI model using large datasets and typically requires substantial compute resources, often using clusters of GPUs or specialized accelerators. This work is usually performed in data centers or cloud environments. Inference is the process of applying a trained model to new data. Inference workloads are far more common in day-to-day use and can run across a wide range of hardware, including CPUs, GPUs, and NPUs. Many AI applications are designed to perform inference locally on PCs rather than relying on the cloud.
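To make the training/inference split concrete, here is a minimal sketch in plain Python. It is purely illustrative: the tiny linear model, the `train` and `infer` function names, and the sample data are assumptions made for this example and are not part of any AMD software. Training loops over the dataset many times to fit the model's parameters; inference is a single cheap evaluation of the already-fitted model on new input.

```python
# Illustrative only: a toy linear model y = w*x + b, not an AMD API.

def train(samples, epochs=2000, lr=0.05):
    """Training: repeatedly adjust parameters to reduce error on a dataset."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        # Gradients of mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in samples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in samples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(model, x):
    """Inference: apply the trained parameters to new data."""
    w, b = model
    return w * x + b


# Hypothetical dataset generated from y = 2x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
model = train(samples)          # compute-heavy step, done once
prediction = infer(model, 4.0)  # lightweight step, done for each new input
```

Even in this toy case, `train` performs thousands of passes over the data while `infer` is one multiply and one add, which mirrors why training tends to live in data centers and inference can run on a PC.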

AMD Ryzen AI-based systems are optimized to support local inference workloads by running them on the most appropriate processing engine. The NPU is particularly well suited for sustained, low-power AI inference, while the CPU and GPU handle tasks that benefit from general-purpose or highly parallel processing.
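The idea of matching a workload to the most appropriate engine can be sketched as a simple decision rule. This is a hypothetical illustration only: the `Workload` fields, the `pick_engine` function, and the rule ordering are assumptions made for this example, and they do not describe how AMD software or any operating system actually schedules work.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of an inference task (not an AMD type)."""
    name: str
    sustained: bool        # runs continuously, e.g. during a long video call
    power_sensitive: bool  # e.g. the laptop is on battery
    highly_parallel: bool  # large batched math that suits a GPU


def pick_engine(workload, available):
    """Return "NPU", "GPU", or "CPU" using an assumed, simplified rule set."""
    # Sustained, low-power inference is where the text says the NPU fits best.
    if workload.sustained and workload.power_sensitive and "NPU" in available:
        return "NPU"
    # Highly parallel work goes to the GPU when one is present.
    if workload.highly_parallel and "GPU" in available:
        return "GPU"
    # General-purpose fallback.
    return "CPU"


engines = {"CPU", "GPU", "NPU"}
background_blur = Workload("background blur", True, True, False)
image_batch = Workload("image batch", False, False, True)
spell_check = Workload("spell check", False, False, False)

choices = [pick_engine(w, engines) for w in (background_blur, image_batch, spell_check)]
```

On a system without an NPU, the same sustained workload falls through to the CPU or GPU in this sketch, which matches the point that non-AI PCs can still run AI workloads, just without dedicated hardware.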

“100+ AI-enabled experiences” refers to the growing ecosystem of applications and services that take advantage of AI acceleration on AMD Ryzen AI-based systems. This includes collaboration with independent software vendors (ISVs), operating system partners, and platform developers to enable AI features across productivity, security, creation, and technical workflows. These experiences span applications that use local inference, hybrid local-and-cloud processing, and OS-level AI integration. The breadth of this ecosystem reflects the AMD approach to AI enablement across multiple layers of the software stack rather than support for a single application or use case. As additional AI-enabled software is released, support for AMD Ryzen AI continues to expand through software updates and ecosystem collaboration, allowing systems to adapt as AI models and workloads evolve.

Running AI workloads locally on the PC can enable lower latency, improved responsiveness, and greater control over data. On-device AI allows inference to occur without reliance on network connectivity or cloud services, which can be important for privacy-sensitive or time-critical tasks. AMD Ryzen AI-based systems support on-device AI by distributing workloads across the CPU, GPU, and NPU. The NPU is designed to handle sustained AI inference efficiently, reducing power consumption while keeping other system resources available. This approach enables a broader range of AI use cases to run directly on the PC, complementing cloud-based services rather than replacing them.

AMD collaborates with leading ISVs to enable a growing ecosystem of AI-powered applications optimized for AMD Ryzen AI PRO processors, bringing AI experiences directly to the PC.

AMD Ryzen AI PRO processors are designed for security, manageability, and long-term stability.

Ryzen™ AI is defined as the combination of a dedicated AI engine, AMD Radeon™ graphics engine, and Ryzen processor cores that enable AI capabilities. OEM and ISV enablement is required, and certain AI features may not yet be optimized for Ryzen AI processors. Ryzen AI is compatible with: (a) AMD Ryzen 7040 and 8040 Series processors except Ryzen 5 7540U, Ryzen 5 8540U, Ryzen 3 7440U, and Ryzen 3 8440U processors; (b) AMD Ryzen AI 300 Series processors; and (c) all AMD Ryzen 8000G Series desktop processors except the Ryzen 5 8500G/GE and Ryzen 3 8300G/GE. Please check with your system manufacturer for feature availability prior to purchase. GD-220c.

©2024 Advanced Micro Devices, Inc. All rights reserved. AMD, the AMD Arrow logo, Radeon, Ryzen, XDNA, and combinations thereof are trademarks of Advanced Micro Devices, Inc. Microsoft and Windows are registered trademarks of Microsoft Corporation in the US and/or other countries. Other product names used herein are for identification purposes and may be trademarks of their respective owners.