Full-Stack, Enterprise-Ready AI

The AMD Enterprise AI Suite enables enterprises to go from bare-metal compute to production-grade AI in minutes. It connects key open-source AI frameworks and generative AI models with an enterprise-ready Kubernetes platform, shortening the path from AI experimentation to large-scale production on AMD compute platforms.

Accelerate Enterprise AI from Pilot to Production

Optimized Compute Utilization

With the AMD Enterprise AI Suite, your AI compute is utilized efficiently across user groups, projects and use cases.

Accelerated Time-to-Value

From bare metal or cloud to running production workloads in a matter of minutes.

Efficient AI Operations

Focus on the real value of AI by automating the infrastructure.

Unmatched TCO

Performant, cost-efficient hardware meets streamlined, optimized software.

Open-source Modular Architecture

A fully open-source platform helps you avoid vendor lock-in and benefit from the global AI community.

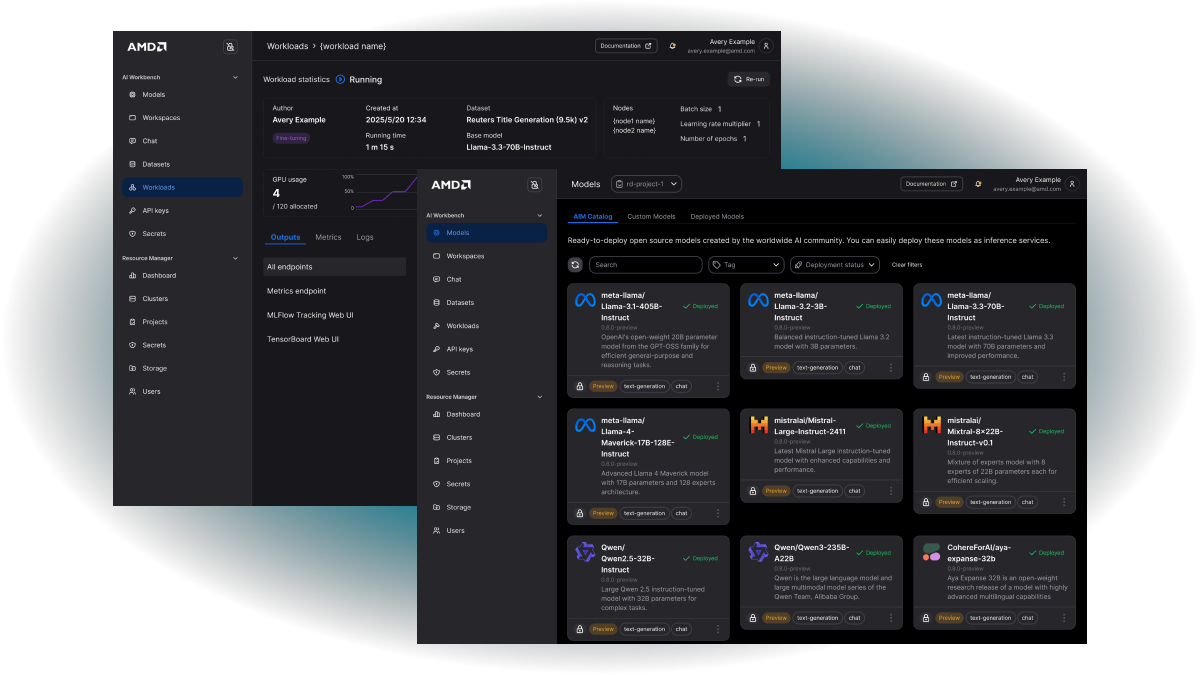

Deploy, Manage, and Accelerate AI Workloads at Scale

Built for enterprise scale on AMD Data Center Technologies, the suite provides intuitive interfaces that help organizations get started with AI development quickly and use compute resources efficiently across the organization.

AMD Inference Microservices

Prebuilt inference containers that bundle a model, an inference engine, and an optimized configuration for AMD hardware. They support OpenAI-compatible APIs and open-weight models, enabling rapid deployment without re-engineering. Hardware-aware tuning of precision, tensor parallelism, and throughput settings delivers strong performance on the target hardware.
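As a minimal sketch of what "OpenAI-compatible" means for a client, the Python below builds a standard chat completions request using only the standard library. The base URL, model name, and helper names (`build_chat_request`, `extract_reply`) are hypothetical placeholders, not documented AIM values; the request and response shapes follow the public OpenAI chat completions format.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(
    base_url: str,
    model: str,
    prompt: str,
    api_key: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request.

    base_url and model are hypothetical; substitute the values of your deployment.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    headers = {"Content-Type": "application/json"}
    if api_key is not None:
        # OpenAI-style endpoints conventionally accept a bearer token.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def extract_reply(response_body: bytes) -> str:
    """Return the assistant text from an OpenAI-style chat completion response."""
    body = json.loads(response_body)
    return body["choices"][0]["message"]["content"]


# Hypothetical usage against a running endpoint (not executed here):
# req = build_chat_request("http://localhost:8000/v1", "example-org/example-model", "Hello")
# with urllib.request.urlopen(req) as resp:
#     print(extract_reply(resp.read()))
```

Because the request and response follow the OpenAI format, existing OpenAI client code can usually be pointed at such an endpoint by changing only the base URL.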

Inference at Scale

Intelligent inference routing and scheduling, model caching, and real-time autoscaling based on actual utilization, combined with industry-standard APIs.
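The suite's own autoscaling logic is not specified here. As an illustration only, the sketch below applies the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current replicas * current utilization / target utilization), with a tolerance band and replica bounds. The function name, its defaults, and the use of integer utilization percentages are assumptions.

```python
def desired_replicas(
    current_replicas: int,
    current_util_pct: int,
    target_util_pct: int,
    min_replicas: int = 1,
    max_replicas: int = 16,
    tolerance_pct: int = 10,
) -> int:
    """Hypothetical utilization-based autoscaling rule (HPA-style proportional scaling)."""
    if target_util_pct <= 0:
        raise ValueError("target utilization must be positive")
    # Do not scale when utilization is within the tolerance band around the target,
    # i.e. |current/target - 1| <= tolerance, computed in integer arithmetic.
    if abs(current_util_pct - target_util_pct) * 100 <= tolerance_pct * target_util_pct:
        return current_replicas
    # Proportional rule: ceil(replicas * current / target) via negated floor division.
    proposed = -((-current_replicas * current_util_pct) // target_util_pct)
    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, proposed))
```

For example, four replicas running at 90% against a 60% target scale to six, while 63% stays within the 10% tolerance and leaves the replica count unchanged.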

Optimized AIM Containers & OS Runtimes

Model profiles optimized for AMD hardware options and use-case-specific KPIs.

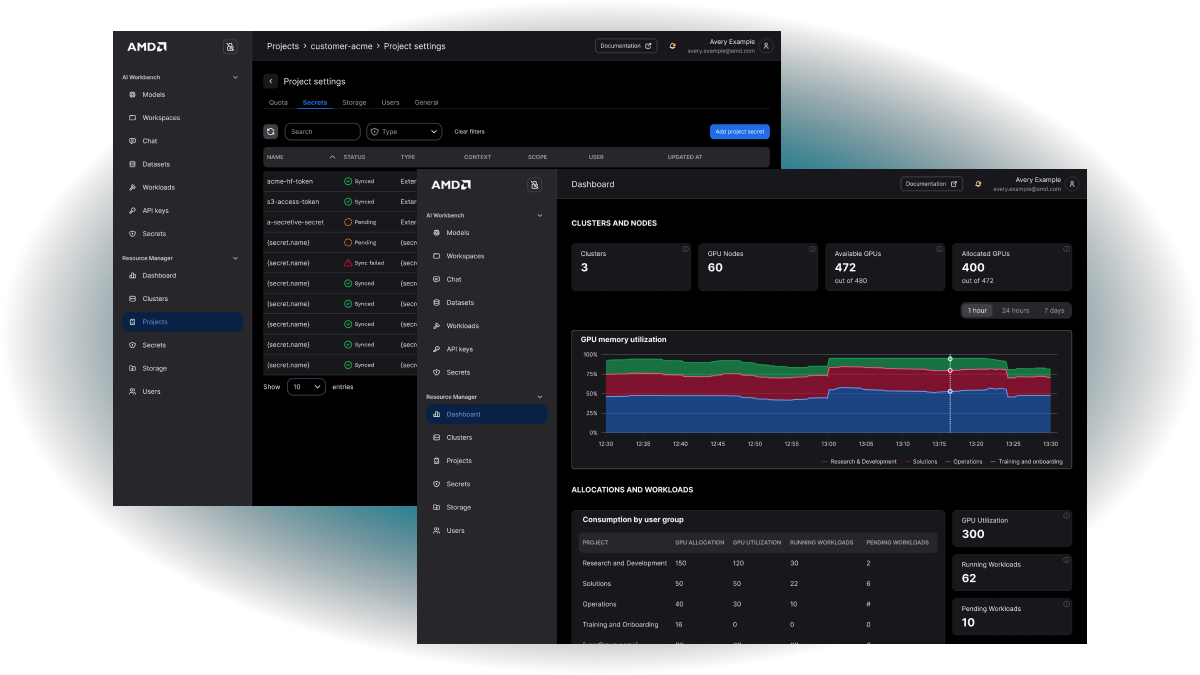

Enterprise-Ready Security & Governance

Observability and governance through real-time monitoring dashboards and lifecycle management of models and keys.

Deploy and Manage AIMs

An intuitive interface for deploying, interacting with, and monitoring AIM deployments.

Developer Workspaces

Virtualized development environments based on notebooks, popular IDEs or flexible SSH-level access.

Community Developer Tools

The most common AI development tools are available to developers, from experiment tracking and pipelines to benchmarking and profiling.

Intelligent Resource Scheduling

Flexibly allocate resources with intelligent workload scheduling based on predefined policies and priorities, reducing GPU idle time.

Job Queue Management

Ensure balanced compute distribution between teams and workloads through smart quota allocation and dynamic scaling.
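This page does not describe how the suite implements its scheduling policies and quotas. The following is a generic, hypothetical sketch of priority scheduling with per-team GPU quotas: jobs are admitted in priority order only if they fit both the free GPU pool and their team's quota. `Job`, `schedule`, and `team_quota` are illustrative names, not part of any AMD API.

```python
import heapq
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(order=True)
class Job:
    # Hypothetical job record. Lower priority value = scheduled first;
    # seq breaks ties in submission order. Other fields are not compared.
    priority: int
    seq: int
    name: str = field(compare=False)
    team: str = field(compare=False)
    gpus: int = field(compare=False)


def schedule(jobs: List[Job], free_gpus: int, team_quota: Dict[str, int]) -> List[str]:
    """Admit jobs by priority while respecting cluster capacity and per-team GPU quotas."""
    heap = list(jobs)
    heapq.heapify(heap)
    used: Dict[str, int] = {team: 0 for team in team_quota}
    admitted: List[str] = []
    while heap:
        job = heapq.heappop(heap)
        within_quota = used.get(job.team, 0) + job.gpus <= team_quota.get(job.team, 0)
        if job.gpus <= free_gpus and within_quota:
            free_gpus -= job.gpus
            used[job.team] = used.get(job.team, 0) + job.gpus
            admitted.append(job.name)
        # A job that does not fit is left for a later scheduling round;
        # lower-priority jobs that do fit can still use the idle GPUs.
    return admitted
```

Skipping a job that does not fit, rather than blocking the queue behind it, lets smaller lower-priority jobs backfill idle GPUs; a production scheduler would also need preemption and fairness over time.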

Metrics and Analytics

Track and monitor compute utilization across user groups and workloads, and optimize resource allocation based on real-world feedback.

Resources