Accelerating AI with Open Software: AMD ROCm™ 7 is Here

Jul 07, 2025

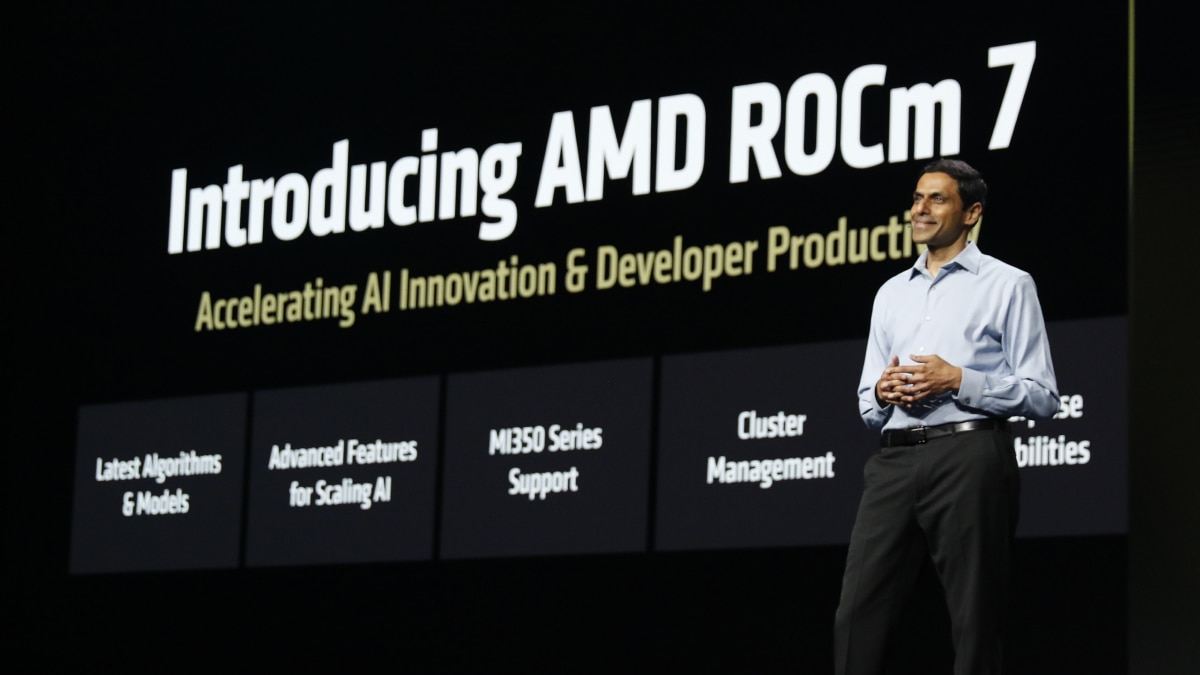

The enterprise AI landscape is evolving fast. Companies urgently need scalable, high-performance open software platforms to take full advantage of what AI has to offer. At Advancing AI 2025, AMD unveiled AMD ROCm™ 7, the latest evolution of our open-source software platform built to unleash the full potential of AMD Instinct™ GPUs.

Engineered for performance, compatibility, and flexibility, AMD ROCm 7 delivers major enhancements in inference and training, supports many of the latest AI models out of the box, and extends the AMD open ecosystem from cloud to edge.

Five Game-Changing Features of AMD ROCm 7

A Generational Leap in AI Performance

ROCm 7 delivers breakthrough performance gains over its predecessor:

- Training workloads see up to 3x average improvement over ROCm 6 benchmarks.¹

- Inference performance increases by an impressive 4.6x on average versus ROCm 6.²

These gains are powered by better GPU utilization, support for lower-precision data types, and optimized data movement. For enterprises, that translates directly into faster time-to-insight and higher infrastructure ROI.
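To make the lower-precision point concrete, here is a back-of-envelope sketch of how much memory a model's weights occupy at different numeric widths. It is a rough illustration under stated assumptions, not an AMD sizing tool: it counts weights only (no KV cache, activations, or runtime overhead) and uses decimal gigabytes. The 70B parameter count and the 192 GB per-GPU capacity are taken from the test configurations in the footnotes; the data types listed are generic examples, not a statement of which formats ROCm 7 supports on a given GPU.

```python
# Back-of-envelope estimate of the memory needed just to hold model weights.
# Assumption: weights only; KV cache, activations, and framework overhead are ignored.

# Storage size of one parameter for some common numeric formats.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16": 2.0,
    "fp16": 2.0,
    "fp8": 1.0,
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_gpus(num_params: float, dtype: str, gpu_mem_gb: float, num_gpus: int = 1) -> bool:
    """True if the weights alone fit in the combined memory of num_gpus GPUs."""
    return weight_memory_gb(num_params, dtype) <= gpu_mem_gb * num_gpus


if __name__ == "__main__":
    params = 70e9       # a 70B-parameter model, the size class used in the footnotes
    gpu_mem_gb = 192.0  # per-GPU memory of the MI300X systems in the footnotes
    for dtype in ("fp32", "bf16", "fp16", "fp8"):
        gb = weight_memory_gb(params, dtype)
        fits = fits_on_gpus(params, dtype, gpu_mem_gb)
        print(f"{dtype}: {gb:.0f} GB of weights, fits on one GPU: {fits}")
```

For a 70B-parameter model, the weights alone take 280 GB in FP32, 140 GB in FP16/BF16, and 70 GB in FP8. Halving the bytes per parameter halves the weight footprint, which is one reason lower-precision formats can let a model fit on fewer GPUs and reduce the amount of data moved per token.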

Support for the Latest Algorithms and Models

With AMD ROCm 7, developers can deploy the latest open-source algorithms and models, including Llama 4, Gemma 3, and DeepSeek, from day one. Our accelerated update cadence, with biweekly major releases and day-zero support for emerging models, helps enterprises stay ahead in a fast-moving AI landscape.

Enhanced Distributed Inference

AMD ROCm 7 introduces a robust approach to distributed inference that builds on the open-source ecosystem, including frameworks such as SGLang, vLLM, and llm-d. By embracing an open strategy, ROCm 7 enables very high throughput at rack scale: across batches, across nodes, and across models.

From Cloud to Client: Expanded Platform Support

AMD ROCm is now available on endpoint devices, supporting AMD Ryzen™ AI and select AMD Radeon™ Graphics systems. In the second half of 2025, AMD plans to let developers build with ROCm locally on their own Linux or Windows devices, using their own data, instead of relying on the cloud.

This edge-to-cloud continuum gives enterprises new flexibility in how they prototype, deploy, and secure AI workloads.

The All-New AMD Developer Cloud

AMD has launched the AMD Developer Cloud, a fully managed, zero-setup environment that provides instant access to AMD Instinct™ MI300X GPUs. Developers can sign in with a GitHub account or email, launch Jupyter notebooks in seconds, and start building. Developers can apply for 25 complimentary cloud hours today, with up to 50 more hours available through the ROCm Star Developer Certificate program.
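A natural first notebook cell on a GPU environment is a quick check that the GPUs are visible. The sketch below assumes a ROCm build of PyTorch is installed in the notebook image, which the text above does not state. ROCm builds of PyTorch expose AMD GPUs through the familiar `torch.cuda` API and set `torch.version.hip` (it is `None` on CUDA or CPU-only builds). The function name `describe_rocm_environment` is hypothetical.

```python
# Quick environment check for a notebook cell on a ROCm machine.
# Assumption: a ROCm build of PyTorch may be installed; if PyTorch is
# missing, the function simply reports that instead of failing.
import importlib.util


def describe_rocm_environment() -> dict:
    """Hypothetical helper: summarize PyTorch/ROCm GPU visibility."""
    info = {"torch_installed": importlib.util.find_spec("torch") is not None}
    if not info["torch_installed"]:
        return info

    import torch

    # ROCm builds of PyTorch set torch.version.hip; other builds leave it None.
    info["hip_version"] = getattr(torch.version, "hip", None)
    # On ROCm, AMD GPUs are reported through the torch.cuda API.
    info["gpu_available"] = torch.cuda.is_available()
    info["gpu_names"] = (
        [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]
        if info["gpu_available"]
        else []
    )
    return info


if __name__ == "__main__":
    print(describe_rocm_environment())
```

On a machine without PyTorch the function returns only `{'torch_installed': False}`, so the cell is safe to run anywhere; on a ROCm build with visible GPUs, `hip_version` is a version string and `gpu_names` lists the detected devices.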

Open. Scalable. Enterprise-Ready.

AMD continues to champion open software and open platforms. With AMD ROCm 7 and the AMD Developer Cloud, organizations gain an open-source AI stack that is frictionless, ready to use, and built for scale.

AMD ROCm 7 is accelerating AI innovation across every layer, from algorithms to infrastructure, and bringing real competition and openness back to the AI software stack.

To learn more about ROCm 7, visit the ROCm page, or watch the Advancing AI 2025 keynote.


Footnotes

- MI300-081 - Testing conducted by AMD Performance Labs as of May 15, 2025, to measure the training performance (TFLOPS) of ROCm 7.0 preview version software, Megatron-LM, on (8) AMD Instinct MI300X GPUs running Llama 2-70B (4K), Qwen1.5-14B, and Llama 3.1-8B models, and a custom Docker container vs. a similarly configured system with AMD ROCm 6.0 software.

Hardware Configuration

1P AMD EPYC™ 9454 CPU, 8x AMD Instinct MI300X (192GB, 750W) GPUs, American Megatrends International LLC BIOS version 1.8.

Software Configuration

Ubuntu 22.04 LTS with Linux kernel 5.15.0-70-generic

ROCm 7.0, Megatron-LM, PyTorch 2.7.0

vs.

ROCm 6.0 public release SW, Megatron-LM code branches hanl/disable_te_llama2 for Llama 2-7B, guihong_dev for Llama 2-70B, renwuli/disable_te_qwen1.5 for Qwen1.5-14B, PyTorch 2.2.

Server manufacturers may vary configurations, yielding different results. Performance may vary based on configuration, software, vLLM version, and the use of the latest drivers and optimizations.

- MI300-080 - Testing by AMD Performance Labs as of May 15, 2025, measuring the inference performance in tokens per second (TPS) of AMD ROCm 6.x software, vLLM 0.3.3 vs. AMD ROCm 7.0 preview version SW, vLLM 0.8.5 on a system with (8) AMD Instinct MI300X GPUs running Llama 3.1-70B (TP2), Qwen 72B (TP2), and DeepSeek-R1 (FP16) models with batch sizes of 1-256 and sequence lengths of 128-204. Stated performance uplift is expressed as the average TPS over the (3) LLMs tested.

Hardware Configuration

1P AMD EPYC™ 9534 CPU server with 8x AMD Instinct™ MI300X (192GB, 750W) GPUs, Supermicro AS-8125GS-TNMR2, NPS1 (1 NUMA per socket), 1.5 TiB (24 DIMMs, 4800 MT/s memory, 64 GiB/DIMM), 4x 3.49TB Micron 7450 storage, BIOS version: 1.8

Software Configuration(s)

Ubuntu 22.04 LTS with Linux kernel 5.15.0-119-generic

Qwen 72B and Llama 3.1-70B: ROCm 7.0 preview version SW, PyTorch 2.7.0

DeepSeek-R1: ROCm 7.0 preview version SW, SGLang 0.4.6, PyTorch 2.6.0

vs.

Qwen 72B and Llama 3.1-70B: ROCm 6.x GA SW, PyTorch 2.7.0 and 2.1.1, respectively

DeepSeek-R1: ROCm 6.x GA SW, SGLang 0.4.1, PyTorch 2.5.0

Server manufacturers may vary configurations, yielding different results. Performance may vary based on configuration, software, vLLM version, and the use of the latest drivers and optimizations.
