Hack the Edge: Developers unleash creativity at AMD x Liquid AI Hackathon

Nov 18, 2025

Over two days in downtown San Francisco, more than 70 developers, builders, and researchers came together for a fast-paced, edge AI focused innovation sprint powered by AMD and Liquid AI. Hosted at Liquid AI’s office in San Francisco, Hack the Edge challenged participants to explore new possibilities when small, fast, private models run directly on AMD NUCs and Ryzen™ AI desktops.

Across 48 hours of ideation, hacking, fireside chats, and live demos, teams delivered 15 agentic, real-world projects built entirely on Liquid Foundation Models (LFMs) running on ROCm-accelerated AMD hardware. What emerged was a clear signal:

Hybrid AI and on-device intelligence are not in the distant future; They’re here, and developers are ready to build.

Hybrid AI Meets hardware built for the edge

The challenge theme focused on a question that sits at the heart of modern AI development - When deployed locally, what useful, agentic applications can you build with Liquid models on AMD Ryzen AI devices?

Liquid AI’s LFM family - small language models engineered for fast, private, on-device inference, served as the backbone for every project. These models shine in workloads like:

- Structured data extraction

- Function calling

- Retrieval-augmented generation

- Translation

- Real-time multimodal reasoning

Teams used Liquid’s LEAP platform, customization tooling, and the latest LFM2, LFM2-VL, LFM Nanos, and LFM2-Audio models to build applications that typically require much larger models or cloud-scale infrastructure.

AMD showed the open ecosystem approach, deep developer-first tooling, and hybrid AI strategy spanning cloud to client. With AMD ROCm ™ software integrated into llama.cpp, teams ran Liquid models at impressive speed on AMD Ryzen AI -powered hardware, unlocking low-latency inference and full local privacy.

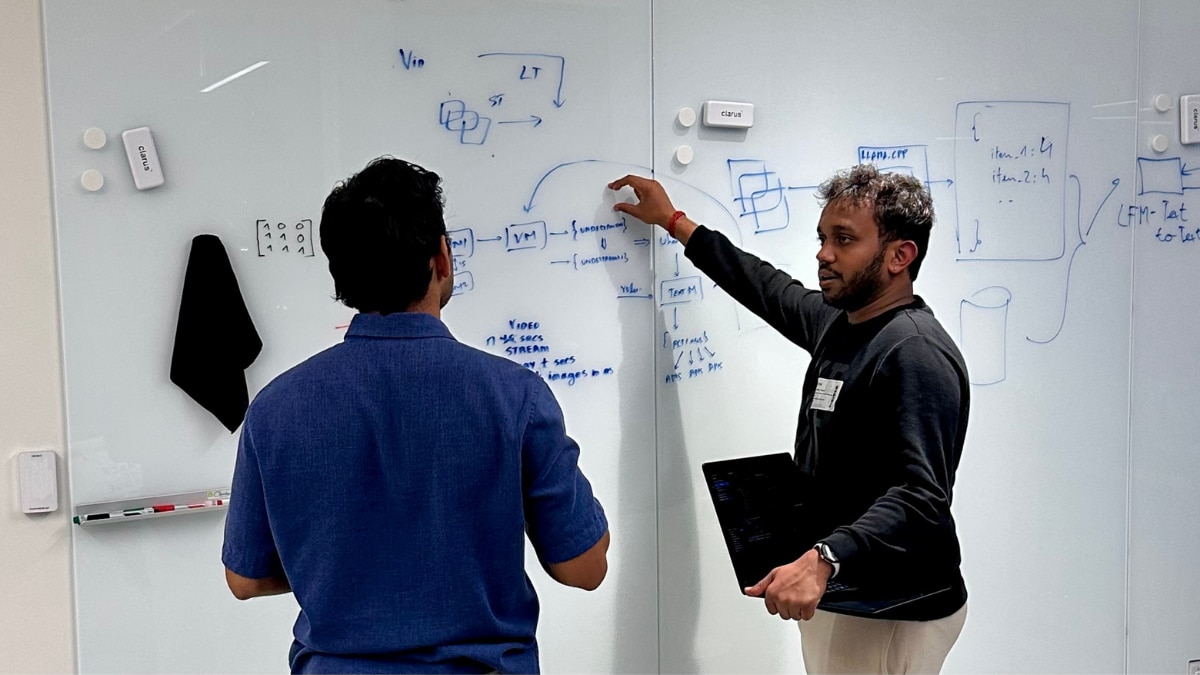

To support rapid experimentation and real-time edge deployment, developers hacked on a fleet of 25 AMD-powered PCs purpose-built for on-device AI. The setup included AMD NUCs running the Ryzen™ AI 9HX 370 with Radeon™ 890M graphics and 64 GB of RAM, alongside high-performance Framework desktops featuring the Ryzen™ AI 395+ processor and 128 GB of RAM. Together, this hardware, paired with optimized Liquid Foundation Models and ROCm acceleration through llama.cpp, created a powerful sandbox where teams could prototype, fine-tune, and deploy agentic applications entirely on-device.

ROCm-Powered Liquid AI: LLM Acceleration on AMD Ryzen AI platform

Liquid’s LFM models are now optimized with AMD ROCm software stack, enabling:

- Maximum performance using Radeon AI accelerators

- Significant speed-ups from ROCm 7.x integrations

- Smooth llama.cpp deployment with simplified workflows

- A fully open ecosystem with no vendor lock-in

- Optimized kernels tuned for customer needs and modern hardware

For developers, that meant one thing - smaller models that match frontier model level performance, running entirely on-device.

Challenge Reveal and First Builds

The hackathon opened with a welcome from AMD and Liquid AI and an introduction to the challenge: build a useful, agentic application using Liquid models running locally on AMD hardware. To spark ideas, organizers highlighted on-device use cases like meeting recorders, voice dictation, document Q&A, and privacy-first filters, along with edge “box” concepts such as security analysis, home-health assistants, and continuous bug-detection tools. Teams formed quickly, jumped into brainstorming, and by mid-morning the room had already shifted from ideas to active prototyping.

Fireside Chat #1: Pau Labarta Bajo (Liquid AI) × Ramine Raone (AMD)

The first fireside chat brought together Pau Labarta Bajo from Liquid AI and Ramine Raone from AMD for a deep dive into the technical future of on-device intelligence. Their conversation explored everything from Stanford Hazy Research’s hipkitten work to how well-optimized small models can rival much larger ones when deployed locally. They discussed the growing importance of “intelligence per watt” as a defining metric in the hybrid AI era, and why combining cloud and client compute is accelerating developer velocity. Both speakers emphasized how the AMD–Liquid partnership is enabling faster experimentation and more efficient real-world applications across the edge. Ramine closed with advice for builders navigating a rapidly evolving AI landscape, noting that increasingly capable models are not a threat but a multiplier, freeing developers to spend more time thinking rather than coding. His final recommendation landed strongly with the audience: becoming a full-stack, end-to-end engineer will matter more than ever.

Fireside Chat #2: Bryan Madden (AMD) × Ramin Hasani (Liquid AI)

Later in the afternoon, Global Head of Marketing at AMD, Bryan Madden, joined Liquid AI’s Co-Founder and CEO, Ramin Hasani, for a wide-ranging conversation on the evolution and direction of the AI ecosystem. Ramin traced Liquid AI’s origins back to its MIT research roots and the unconventional inspiration behind its architecture, modeled after the dynamics of “worm brains” that led to breakthroughs in efficient, adaptable neural systems. He spoke about Liquid’s ambition to become the software layer for physical AI and why hybrid AI is essential for deploying intelligence in the real world, where low latency and privacy matter. Both Bryan and Ramin emphasized the shared mission between AMD and Liquid: empowering developers to build fast, private, on-device intelligence without barriers. The discussion grounded the day’s hacking in a larger industry narrative, highlighting how developer creativity and open ecosystems are shaping the next phase of AI innovation.

A Showcase of Edge Intelligence: What the Teams Built

The final demos revealed just how far developers could push Liquid models on AMD hardware in only two days. Projects spanned audio, vision, multimodal agents, automation, and even scientific computing, each showing the power of fast, private, on-device AI.

- Liquid DJ turned an AMD NUC into an on-device Shazam for long DJ sets, using LFM2-Audio-1.5B to detect track transitions and automatically generate full YouTube set tracklists.

- Liquid Audio Book built a full edge audiobook pipeline LFM2-VL for text extraction, LFM2-Extract for refinement, and LFM2-Audio for TTS, instantly turning scanned notes into high-quality narration without cloud calls.

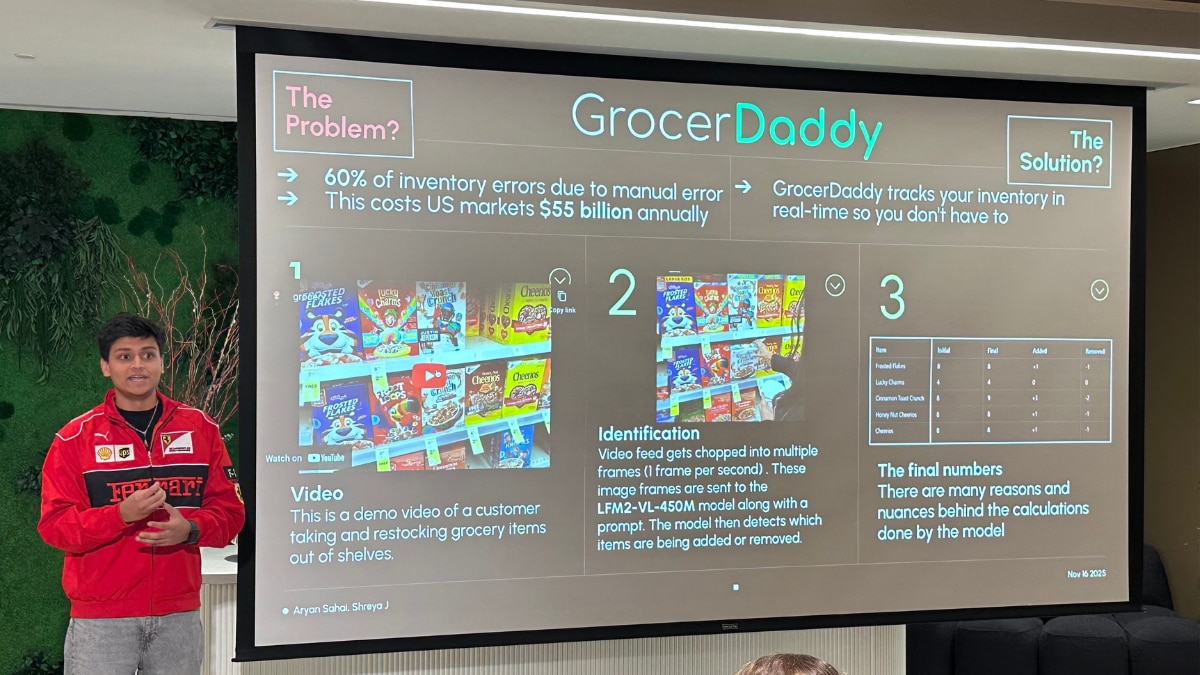

- GrocerDaddy used LFM2-VL-450M to analyze grocery aisle video and detect items being added or removed, highlighting how small multimodal models can automate real-world retail workflows.

- VoltX built an on-device EV battery health monitor using the LFM2-350M model to track SOC/SOH, degradation signals, and performance trends. With FastAPI, DuckDB, and a lightweight Flask dashboard, the system visualized real-time metrics locally, while a private AI assistant provided personalized battery insights.

- DocSpeak acted like a local NotebookLM: upload a PDF, and it extracts, summarizes, and generates an audio version using LFM2-Extract + LFM2-Audio, turning dense documents into short, private podcasts.

- Realtime Chatbot showcased multimodal orchestration with LFM2-VL models, enabling a voice-interactive agent that responds using both audio input and video frame context.

- Echo Finder paired a wearable camera with LFM2-2.6B, VLM 2B, and LFM2-Audio to help users locate lost household items, an especially compelling assistive case for elderly or visually impaired users.

- Team Monster delivered a lightweight video understanding pipeline that generates per-second embeddings with LFM2-VL, enabling semantic clip search and recommendation workflows on-device.

- LiquidSense explored smart-home intelligence, using on-device inference for IoT control, automatic scene responses, and privacy-preserving anomaly detection.

- PharmaNeuro Predictor pushed hybrid AI into drug discovery, combining molecular features with multimodal models to predict CNS efficacy, BBB penetration, and safety risks, hinting at faster, more accessible scientific workflows.

Across all 15 demos, teams proved that with Liquid’s SLMs, ROCm acceleration, and AMD’s edge hardware, developers can build meaningful, high-impact applications entirely on-device, fast, private, and ready for real-world use.

Judging & Winners

Projects were evaluated on four core pillars: how well they aligned with the challenge, the creativity and polish of the idea, the real-world usefulness of the solution, and the technical depth behind the implementation.

After an afternoon of rapid-fire demos, two teams earned the prize of Framework desktops powered by the Ryzen™ AI 395+ processor for their standout blend of innovation, execution quality, and clear edge-AI impact. Additional teams were recognized with awards from Liquid AI’s $10,000 prize pool, celebrating exceptional applications of Liquid Foundation Models running on AMD Ryzen AI platforms.

Momentum that extends beyond the weekend

What stood out most over the weekend was the pace and quality of innovation in the room. Developers moved quickly from ideas to polished prototypes, leveraging ROCm-optimized Liquid models to build fast, private, and highly capable edge applications. LFM2-VL and LEAP customization emerged as clear favorites, powering multimodal agents, vision-based tools, and tightly integrated workflows. Across every table, teams pushed SLMs into new territory, experimenting boldly, iterating rapidly, and showing what’s possible when optimized models meet capable hardware and an open developer ecosystem.

This hackathon captured the essence of AMD and Liquid AI’s shared mission:

Empower developers to build AI without barriers - open, fast, AI everywhere.

Developer Resources

As momentum continues, AMD offers a growing ecosystem designed to help developers keep building.

- Developer Resources Portal – Access SDKs, libraries, documentation, and tools to accelerate AI, HPC, and graphics development.

- AI@AMD X – Stay updated with the latest software releases, AI blogs, tutorials, and news.

- Developer Cloud – Start projects on AMD Instinct™ GPUs with $100 in complimentary credits for 30 days, offering an easy on-ramp for experimentation and benchmarking.

- Developer Central YouTube – Explore hands-on videos, demos, and deep-dive sessions from engineers and community experts.

- Developer Community Discord – Join global developer communities, share feedback, and exchange optimization tips directly with peers and AMD specialists.