Where Developers Build the Future: Inside AMD AI DevDay 2025

Oct 27, 2025

We are witnessing an unprecedented surge in AI workloads – yet GPU compute resources are often locked behind proprietary walls and steep learning curves.

That challenge set the stage for AMD’s first AI DevDay, where the global developer community came together to see what’s possible when cutting-edge hardware meets an open software ecosystem. Held at the historic San Francisco Mint, the event brought together engineers, researchers, and creators to explore real-world AI solutions. It showed how open standards, scalable infrastructure, and developer-driven innovation are redefining the future of AI.

Figure 1: The San Francisco Mint

A Day of Innovation and Collaboration

The one-day event featured keynotes, hands-on labs, and deep-dive sessions led by some of the brightest minds in AI. From distributed systems to multi-model agents, every session reinforced a single idea – the future of AI will be open, modular, and built by developers everywhere.

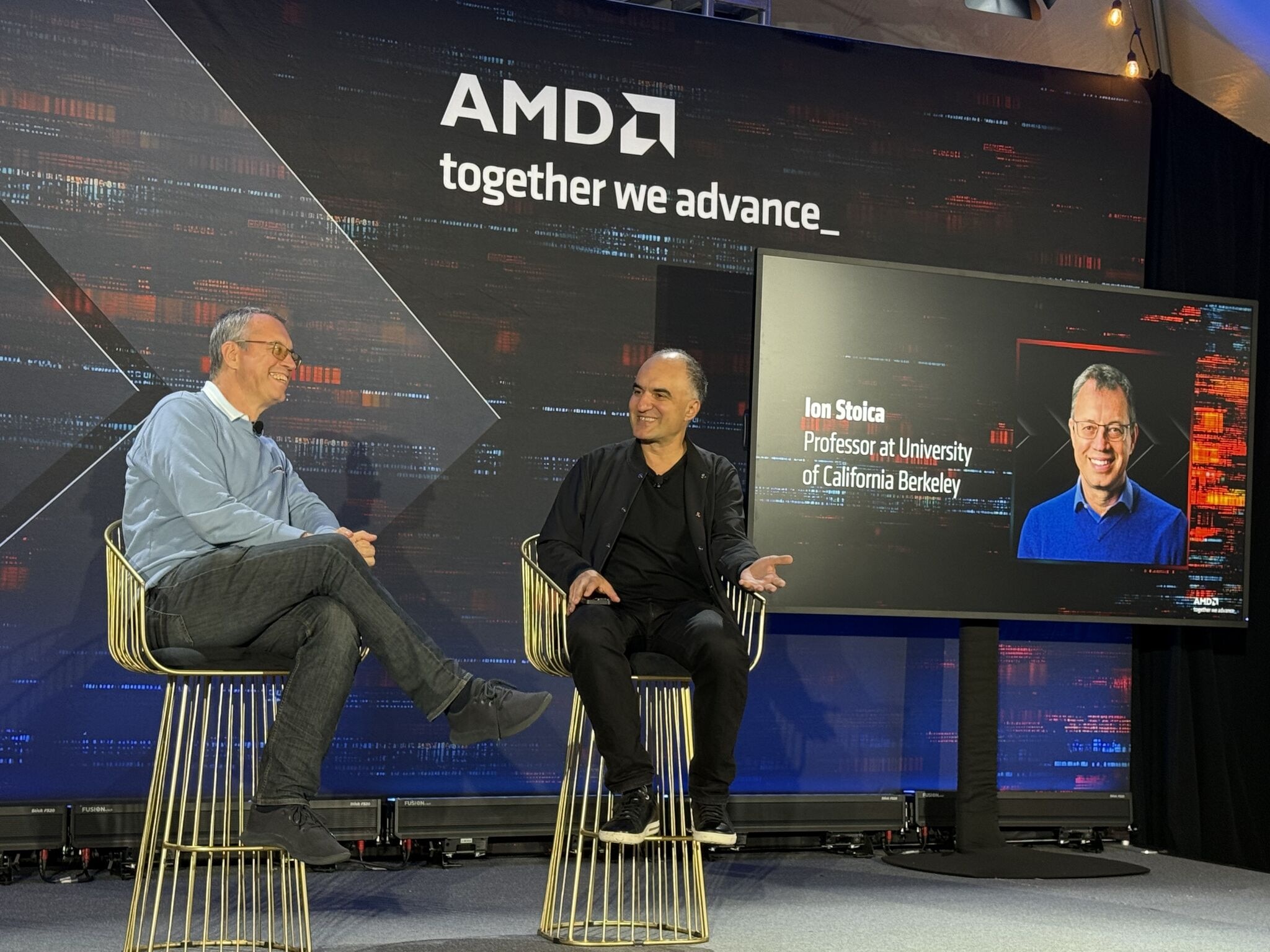

Distinguished speakers included Ion Stoica, Lin Qiao, Simran Arora, Jeff Boudier, Michael Chiang, Daniel Han, Driss Guessous, Dominik Kundel, Simon Mo, Mark Saroufim, Tris Warkentin, and Yineng Zhang — representing leading universities, open-source communities, and platform innovators shaping the AI ecosystem.

Attendees joined instructor-led, hands-on GPU workshops spanning beginner to advanced levels — from developing multi-model multi-agent systems to video generation with open-source tools, teaching models to think (GRPO with Unsloth on AMD Instinct™ MI300X GPUs), and developing optimized kernels. Each workshop provided a practical path to accelerate development and push the limits of AI performance on open platforms.

Figure 2: Beginner Workshop - Developing Multi-Model Multi-Agent Systems

The event floor buzzed with live demos – from Lemonade Arcade, which used locally running LLMs to generate Python games in minutes on Ryzen™ AI systems, to a hands-on showcase of how GRPO training with Unsloth turns a Llama model into a step-by-step reasoning engine. Each demo captured the creative, hands-on spirit of the developer community, making AI tangible and open to all.

Building the Future Together

Ion Stoica, Professor at UC Berkeley and co-founder of Databricks and Anyscale, opened the day with a powerful reminder: the hardest problems in AI aren’t about intelligence – they are about building systems that scale, adapt, and deliver value. His talk captured the spirit of AI DevDay: that the future of AI will be shaped not just by breakthroughs in models, but by the infrastructure and communities that make those breakthroughs possible.

Figure 3: Fireside chat with Ion Stoica, Professor at UC Berkeley

Stoica challenged developers to think beyond benchmarks – to build infrastructure that’s open, reliable, and designed for collaboration. He called on the community to focus on value before efficiency, simulate before shipping, and embrace modular, data-driven architectures. His message echoed throughout the day: real innovation happens when developers, tools, and hardware come together in an open ecosystem that scales.

Lin Qiao captured the forward-looking energy of AI DevDay. Her session spotlighted how agentic AI (systems made of smaller, specialized agents that collaborate to solve complex tasks) is transforming how developers build intelligent applications. For Qiao, this evolution reflects the very spirit of the event: giving every developer access to the performance, openness, and flexibility needed to bring next-generation AI to life.

Developer Experience: Built for Speed

Anush Elangovan emphasized that open software is what makes true performance possible. He shared how AMD ROCm™ Software continues to empower developers with a fully transparent, modular, and community-driven platform. Developers can see the code, shape it, and make it better. This collaborative approach has fueled rapid innovation across AI frameworks, improving performance and simplifying cross-platform development.

Elangovan also underscored the AMD focus on developer experience — from streamlined installation to unified tools and expanded learning resources. A growing developer hub, improved documentation, and new community channels (including a Discord and upcoming Discourse forum) are designed to help every developer build and scale with confidence.

RAY3 serving on AMD Instinct™ MI325X GPUs

Conversation on the Future of Building with AI

A highlight of the day was a fireside chat between Vamsi Boppana, Senior Vice President of AI at AMD, and Simran Arora, Academic Partner of Together AI and assistant professor at Caltech. Their conversation explored the intersection of AI research and real-world performance, and how the next breakthroughs will come from collaboration between developers and intelligent systems.

Arora shared insights from her research on LLMs generating efficient GPU kernels, challenging the long-held belief that performance optimization is reserved for teams of specialized engineers. She reflected on her experience using AMD’s open platforms for research, emphasizing how accessibility and transparency accelerate both discovery and deployment.

Pushing the Limits: AMD Developer Challenge

The event concluded with a celebration of developer ingenuity. Vamsi Boppana was joined by Mark Saroufim, co-creator of GPU MODE, to announce the results of the AMD Developer Challenge: Distributed Inference.

Teams of up to three participants were challenged to optimize multi-GPU communication kernels and boost LLM inference performance on AMD Instinct™ GPUs. The response was extraordinary – with over 600 developers, 60,000 code submissions, and a peak of 5,000 runs per day. Participants worked across six benchmark kernel shapes and eleven testing configurations, tackling complex operations such as All-to-All, GEMM + ReduceScatter, and AllGather + GEMM. Submissions ranged from hand-written HIP/Triton kernels to LLM-generated test code, showcasing the intersection of human ingenuity and AI-assisted development.
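For readers less familiar with the fused operations named above, here is a minimal single-process sketch of what AllGather + GEMM and GEMM + ReduceScatter compute. It is not challenge code: plain Python lists stand in for GPUs, the function names are hypothetical, and the sharding layouts (A split by rows for the all-gather case, the inner dimension split across ranks for the reduce-scatter case) are assumptions chosen for illustration.

```python
# Single-process simulation of two collective + GEMM patterns.
# Each "rank" is just an entry in a list; no GPU or communication
# library is involved. All names here are illustrative assumptions.

def matmul(a, b):
    """Plain GEMM on nested lists: (m x k) @ (k x n) -> (m x n)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def all_gather_gemm(a_shards, b):
    """Each rank holds a block of rows of A. The all-gather step
    concatenates the blocks into the full A, which is then multiplied by B."""
    full_a = [row for shard in a_shards for row in shard]  # all-gather
    return matmul(full_a, b)

def gemm_reduce_scatter(a_shards, b_shards):
    """Each rank holds a slice of the inner dimension: some columns of A
    and the matching rows of B. Local GEMMs give partial sums; the
    reduce-scatter step adds them and leaves rank r with the r-th block
    of output rows."""
    world = len(a_shards)
    partials = [matmul(a, b) for a, b in zip(a_shards, b_shards)]
    rows, cols = len(partials[0]), len(partials[0][0])
    reduced = [[sum(p[i][j] for p in partials) for j in range(cols)]
               for i in range(rows)]
    block = rows // world  # assumes rows divide evenly across ranks
    return [reduced[r * block:(r + 1) * block] for r in range(world)]
```

In both cases the result matches an ordinary matrix multiply on the unsharded inputs. On real hardware, the optimization opportunity lies in overlapping the communication step with the computation rather than running them one after the other.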

The winning teams were:

- Grand Prize $100,000: RadeonFlow

- 1st Place $25,000: Cabbage Dog

- 2nd Place $15,000: Epliz

- 3rd Place $10,000: Team Ganesha

These teams achieved up to 1.5x AMD’s internal performance baseline – an impressive testament to what’s possible through open collaboration.

Thank You to Our Sponsors

Special thanks to Dell Technologies, Supermicro, Crusoe, and Hewlett Packard Enterprise (HPE) for their partnership and support. Their collaboration underscores the shared commitment to open innovation and developer empowerment across the AI ecosystem.

The Future of AI is Open

In the end, AMD AI DevDay 2025 marked more than just a gathering — it was a powerful statement about the future of AI. By bringing together the global developer community, AMD showcased what’s possible when open hardware, open software, and open collaboration converge. The event reinforced a clear vision: the next era of AI innovation will be defined by accessibility, transparency, and the collective ingenuity of developers everywhere.

Unable to attend? Sign up to be notified when AI DevDay 2025 session recordings are available on demand.

Developer Resources:

- Developer Resources Portal – Access SDKs, libraries, documentation, and tools to accelerate AI, HPC, and graphics development.

- AI@AMD X – Stay updated with the latest software releases, AI blogs, tutorials, and news.

- Developer Cloud – Start projects on AMD Instinct™ GPUs with $100 in complimentary credits for 30 days, offering an easy on-ramp for experimentation and benchmarking.

- Developer Central YouTube – Explore hands-on videos, demos, and deep-dive sessions from engineers and community experts.

- Developer Community Discord – Join global developer communities, share feedback, and exchange optimization tips directly with peers and AMD specialists.