Speculative LLM Inference on the 5th Gen AMD EPYC Processors with Parallel Draft Models (PARD) & AMD Platform Aware Compute Engine (AMD PACE)

Jul 23, 2025

Introduction

Auto-regressive language models process tokens sequentially and often face limitations due to memory bandwidth constraints. To address this, speculative decoding predicts multiple future tokens and verifies them in parallel, which reduces inter-token latency. Parallel draft models (PARD) technology, developed by AMD, further enhances speculative decoding by increasing the acceptance rate of predicted tokens, enabling large language models (LLMs) to achieve human-perceptible generation speeds on 5th Gen AMD EPYC™ processors.

AMD PACE (AMD Platform Aware Compute Engine) is a high-performance inference server with speculative decoding, optimized for modern CPU-based servers. Combined with PARD, the AMD PACE + PARD solution achieves a throughput of approximately 380 tokens/second on the Llama 3.1 8B model when data parallelization is enabled. Even with a batch size of 1 per instance, this allows multiple users to experience interactive, human-readable response times. AMD PACE is also set to be open-sourced soon.

In this blog, we will explore how PARD and AMD PACE work together to optimize inference performance for Transformer models, particularly in data center environments. We will discuss the technical underpinnings of these technologies, their integration with the 5th Gen AMD EPYC processor architecture, and the results of performance evaluations based on benchmark tests.

Technical Overview

Auto-Regressive Decoding

In auto-regressive decoding, each layer performs scaled dot-product attention using several parameters: batch size (B), number of attention heads (H), head dimension (D), and sequence length (n). For each new token, batched matrix multiplications (Query-Key and Attention-Value) are updated incrementally. However, this process often has low arithmetic intensity and is typically bottlenecked by memory bandwidth.
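
To make the bandwidth bottleneck concrete, the following is a rough sketch of the arithmetic intensity (FLOPs per byte moved) of the two attention matrix multiplications for one decoding step. It assumes BF16 storage (2 bytes per element), counts only the key/value cache reads plus the query read and output write, and ignores softmax, projections, and caching effects. The function name and the `q_len` parameter (number of query positions processed at once) are illustrative and not part of AMD PACE.

```python
def attention_decode_intensity(B, H, D, n, q_len=1, bytes_per_elem=2):
    """Approximate FLOPs per byte for attention over a KV cache of length n."""
    # Query-Key and Attention-Value products, 2 FLOPs per multiply-add each.
    flops = 2 * (2 * B * H * q_len * n * D)
    # Memory traffic: K and V caches are each read once per step.
    kv_bytes = 2 * B * H * n * D * bytes_per_elem
    # Queries are read and attention outputs are written once per step.
    qo_bytes = 2 * B * H * q_len * D * bytes_per_elem
    return flops / (kv_bytes + qo_bytes)


# Illustrative shapes only; these are not taken from any benchmarked model.
single_token = attention_decode_intensity(B=1, H=32, D=128, n=2048, q_len=1)
eight_tokens = attention_decode_intensity(B=1, H=32, D=128, n=2048, q_len=8)
print(f"1 query position:  {single_token:.2f} FLOPs/byte")
print(f"8 query positions: {eight_tokens:.2f} FLOPs/byte")
```

Under these assumptions the ratio stays near one FLOP per byte for a single new token and grows roughly in proportion to the number of query positions handled per step, because the same cache reads are reused for more arithmetic.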

Speculative Decoding with PARD

Speculative decoding accelerates LLM inference, but it often requires multiple draft model passes per iteration, which introduces overhead. PARD introduces a Parallel Mask Predict mechanism that, after minimal fine-tuning, lets the draft model propose all of its speculative tokens in a single forward pass. This significantly boosts inference speeds for models like Llama 3 and Qwen. A single PARD-enhanced draft model can accelerate an entire family of LLMs, simplifying deployment.

Through parallelism, PARD can efficiently speculate and verify K tokens per iteration to improve arithmetic intensity. The overall speedup is a function of both the mean number of accepted speculative tokens (m') and the value of K.
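
The dependence on m' and K can be illustrated with a simplified cost model. This is a sketch under stated assumptions, not a formula published by AMD: each iteration is assumed to cost one draft pass (`draft_cost`) plus one target verification pass, both measured relative to a single auto-regressive target step, and to emit m' accepted tokens plus one token from the target. The parameter `verify_overhead_per_token` is a hypothetical knob for the extra verification cost of each speculative position.

```python
def estimated_speedup(mean_accepted, k, draft_cost, verify_overhead_per_token=0.0):
    """Estimated speedup over auto-regressive decoding under a toy cost model."""
    if k == 0:
        return 1.0  # no speculation: plain auto-regressive decoding
    if not 0 <= mean_accepted <= k:
        raise ValueError("mean_accepted must lie between 0 and k")
    # Tokens emitted per iteration: accepted drafts plus one target token.
    tokens_per_iter = mean_accepted + 1.0
    # Cost per iteration in units of one auto-regressive target step.
    verify_cost = 1.0 + verify_overhead_per_token * k
    return tokens_per_iter / (draft_cost + verify_cost)


# Illustrative values only; they are not measurements from this blog.
example = estimated_speedup(mean_accepted=4.0, k=8, draft_cost=0.15,
                            verify_overhead_per_token=0.02)
print(f"estimated speedup: {example:.2f}x")
```

In this model, raising K helps only while the gain in accepted tokens outweighs the added verification cost, which is why K needs tuning per model and platform.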

Efficient Inference on the 5th Gen EPYC Processor Architecture

The AMD EPYC 9005 Series uses a hybrid, multi-chip design and new ‘Zen 5’ and ‘Zen 5c’ cores to address challenges in data centers. This new architecture prioritizes leadership performance, density, and efficiency in virtualized and cloud environments while supporting new AI workloads.

Servers based on the EPYC 9005 Series leverage Vector Neural Network Instructions (VNNI) to accelerate neural network inference. With 128 physical (256 logical) cores per socket operating at a clock frequency of 2.6 GHz, a dual-socket server delivers a theoretical hardware performance of 90-100 tera operations per second (TOPS) using BFloat16 precision.
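
As a quick sanity check on these figures, the sketch below back-solves how many BF16 operations per core per clock cycle the quoted TOPS range implies. It uses only the numbers stated above (two sockets, 128 physical cores each, 2.6 GHz) and assumes physical cores at a fixed clock; the function name is illustrative, and the result is an implied figure rather than a documented hardware specification.

```python
def implied_ops_per_core_cycle(total_tops, sockets, cores_per_socket, clock_ghz):
    """Operations per core per cycle implied by an aggregate TOPS figure."""
    # Total core-cycles available per second across the whole server.
    core_cycles_per_sec = sockets * cores_per_socket * clock_ghz * 1e9
    return total_tops * 1e12 / core_cycles_per_sec


low = implied_ops_per_core_cycle(90, sockets=2, cores_per_socket=128, clock_ghz=2.6)
high = implied_ops_per_core_cycle(100, sockets=2, cores_per_socket=128, clock_ghz=2.6)
print(f"implied BF16 ops per core per cycle: {low:.0f} to {high:.0f}")
```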

Deep Dive: How AMD PACE and PARD Enhance Inference Performance

AMD PACE utilizes PARD to reduce inter-token latency and leverages ‘Zen 5’ processor cores in the EPYC processor for efficient multi-instance runs. Here’s how this collaboration enhances performance:

AMD PACE improves support for Transformer models on CPUs by efficiently integrating PARD, and it selects the number of speculative tokens (K) that minimizes inter-token latency.

A well-chosen K also improves the computational efficiency of the cores. Coupled with RAM capacities in the terabyte range, servers based on the EPYC 9005 Series can host multiple model instances, enabling concurrent real-time interactive sessions for numerous users. By carefully tuning K and potentially other parameters, we can effectively exploit the ‘Zen 5’ architecture for improved throughput.

| Feature | Specification |
| --- | --- |
| CPU | AMD EPYC 9005 Series processors, codename “Turin” |
| Architecture | Zen 5 |
| Cores | 128 cores per socket |
| RAM | 1.5 TB |
| Precision | BF16 |
| Sockets | 2 |
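
One way to picture multi-instance hosting on this configuration is to split the physical cores into equal, socket-local groups, one per model instance. The sketch below is purely illustrative: `plan_instances` is a hypothetical helper, the choice of 32 cores per instance is arbitrary, and the contiguous core numbering per socket is an assumption that should be checked against the actual system topology. It does not describe how AMD PACE schedules instances internally.

```python
def plan_instances(sockets, cores_per_socket, cores_per_instance):
    """Return (socket, first_core, last_core) for each socket-local instance."""
    if cores_per_socket % cores_per_instance != 0:
        raise ValueError("cores_per_instance must divide cores_per_socket")
    plans = []
    for socket in range(sockets):
        # Assumption: core IDs are contiguous within each socket.
        base = socket * cores_per_socket
        for start in range(0, cores_per_socket, cores_per_instance):
            first = base + start
            plans.append((socket, first, first + cores_per_instance - 1))
    return plans


# 2 sockets x 128 cores from the table; 32 cores per instance is illustrative.
plans = plan_instances(sockets=2, cores_per_socket=128, cores_per_instance=32)
for socket, first, last in plans:
    print(f"socket {socket}: cores {first}-{last}")
```

Keeping each instance within one socket avoids cross-socket memory traffic for that instance, which is the usual motivation for this kind of partitioning.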

Evaluation

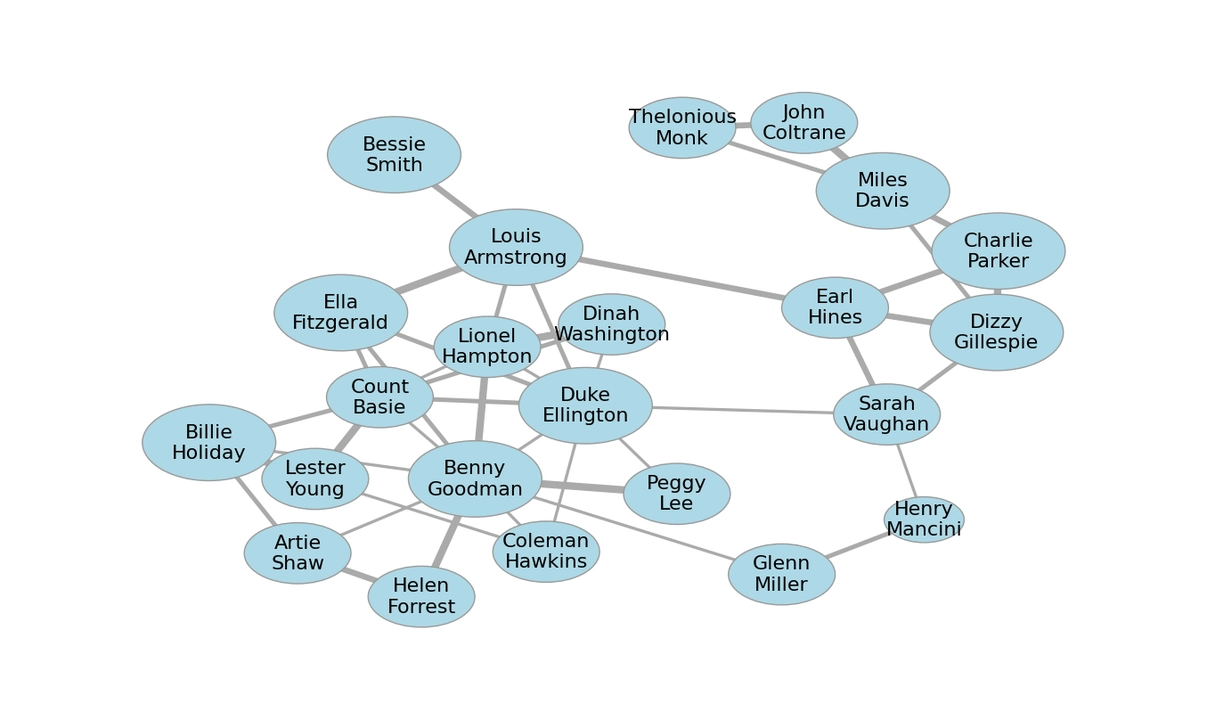

In this section, we conduct experiments with various LLMs to demonstrate the benefits of the PARD and AMD PACE solutions. We test a wide range of models from Llama3, Qwen, and DeepSeek families and benchmark their inference performance and accuracy under different configurations.

Performance Acceleration (7B-70B Models):

PARD utilizes the `meta-llama/Llama-3.2-1B-Instruct` model as a draft model, providing foundational support for all models within the Llama family. Additionally, PARD offers models for the Qwen family and the DeepSeek family, which comprises Qwen-distilled models.

The draft model includes a configurable parameter, `K`, which determines the number of parallel tokens to be generated:

- When `K` is set to 0, token generation via the draft model is bypassed, and the target model operates in an autoregressive manner.

- When `K` is set to 12, the draft model generates 12 tokens, which are then verified by the target model.
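
The role of `K` can be sketched with a toy greedy draft-and-verify loop. Everything here is a stand-in: `target_next` and `draft_propose` are mock functions rather than real models, the draft is deliberately wrong at some positions, and verification uses simple exact-match acceptance. A real PARD draft model would produce its `K` tokens in one forward pass and the target would score them in one pass; the Python loops below only mimic those outputs.

```python
def target_next(context):
    """Toy deterministic 'target model': next token from the context."""
    return (sum(context) * 31 + len(context)) % 50


def draft_propose(context, k):
    """Toy 'draft model': proposes k tokens, with injected mistakes."""
    proposal, ctx = [], list(context)
    for _ in range(k):
        tok = target_next(ctx)
        if len(ctx) % 4 == 0:
            tok = (tok + 1) % 50  # deliberately wrong guess
        proposal.append(tok)
        ctx.append(tok)
    return proposal


def generate(prompt, max_new_tokens, k):
    """Greedy speculative decoding; k = 0 falls back to auto-regressive."""
    out = list(prompt)
    target_passes = 0
    while len(out) - len(prompt) < max_new_tokens:
        proposal = draft_propose(out, k) if k > 0 else []
        target_passes += 1  # one verification pass per iteration
        ctx = list(out)
        for tok in proposal:
            if tok != target_next(ctx):
                break  # first mismatch: discard the rest of the proposal
            ctx.append(tok)
        # The target always contributes one token: the correction after a
        # mismatch, or a bonus token when every draft token was accepted.
        ctx.append(target_next(ctx))
        out = ctx
    return out[: len(prompt) + max_new_tokens], target_passes


baseline, baseline_passes = generate([1, 2, 3], max_new_tokens=20, k=0)
speculative, speculative_passes = generate([1, 2, 3], max_new_tokens=20, k=12)
print("identical output:", baseline == speculative)
print("target passes:", baseline_passes, "vs", speculative_passes)
```

With exact-match acceptance the speculative output is the same token sequence as plain greedy decoding; the difference is that fewer target passes are needed to produce it.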

We evaluated inference performance with Llama 3.1-8B, Llama 3.1-8B-Instruct, Qwen2.5-7B, DeepSeek-R1-Qwen, and Llama 3.1-70B as target models on a dual-socket server. The benchmarks used are GSM8K, HumanEval, and Math-500. We observed the following:

- The combination of Transformers and PARD yields performance gains of around 3x to 4x across a range of models.

- Library optimizations from AMD PACE enable an additional boost of up to 20%.

As an inference serving solution, AMD PACE also enables data parallelization, which it implements internally as multiple instances. This improves performance by up to 16x compared to vanilla auto-regressive decoding.

Figure 1: Comparison of throughputs on the Math-500 dataset using a batch size of 1

Figure 2: Comparison of throughputs on the GSM8K dataset using a batch size of 1

Figure 3: Comparison of throughputs on the HumanEval dataset using a batch size of 1

Draft Model Accuracy

The draft model accuracy across different datasets is as follows:

| Model | GSM8K | HumanEval | Math-500 |
| --- | --- | --- | --- |
| Llama3.1_8B | 0.91 | 0.85 | 0.84 |
| Qwen2.5 7B | 0.86 | 0.85 | 0.82 |
| Deepseek_Qwen2 | 0.78 | 0.73 | 0.79 |
| Llama3.1_8B_Instruct | 0.80 | 0.85 | 0.81 |

Conclusion

The integration of AMD PACE and PARD represents a significant advancement in inference performance for large language models (LLMs) on 5th Gen AMD EPYC processors. By jointly reducing inter-token latency and improving the overall efficiency of Transformer workloads, these technologies achieve higher throughput with similar accuracy across benchmark tests. With AMD PACE's open-source release on the horizon, we are excited for the wider community to enjoy the benefits and innovations these advancements provide for the ongoing development of AI.

Resources

Please refer to our documentation for more information on how to enhance your AI models with AMD PACE and PARD and look out for the upcoming open-source release. We look forward to hearing from you and working with you as we continue to revolutionize AI inference.

- PARD GitHub repository: https://github.com/AMD-AIG-AIMA/PARD

- AMD PACE GitHub repository: https://github.com/amd/AMD-PACE

- Whitepaper: https://www.amd.com/content/dam/amd/en/documents/epyc-business-docs/white-papers/5th-gen-amd-epyc-processor-architecture-white-paper.pdf

- Data sheet: https://www.amd.com/content/dam/amd/en/documents/epyc-business-docs/datasheets/amd-epyc-9005-series-processor-datasheet.pdf

Footnotes

A system configured with AMD EPYC™ 9755 processors shows that with AMD PACE and PARD, the Llama 3 series models achieve a throughput of up to 380 tokens/sec when data parallelization is enabled. Testing done by AMD on 06/20/2025; results may vary based on configuration, usage, software version, and optimizations.

SYSTEM CONFIGURATION

System Model: Supermicro

CPU: AMD EPYC 9755 128-Core Processor (2 sockets, 128 cores per socket, 2 threads per core)

NUMA Config: 1 NUMA node per socket

Memory: 1536 GB (24 DIMMs, 6400 MT/s, 64 GiB/DIMM)

Host OS: Ubuntu 24.04.2 LTS 6.8.0-58-generic

System BIOS Vendor: American Megatrends International, LLC
