Build without limits on a full-stack AI Cloud with global scale and peak performance.

Deploy AI workloads globally on AMD GPUs with high-performance InfiniBand and Ethernet networking, orchestrated through Kubernetes or Slurm for peak efficiency.

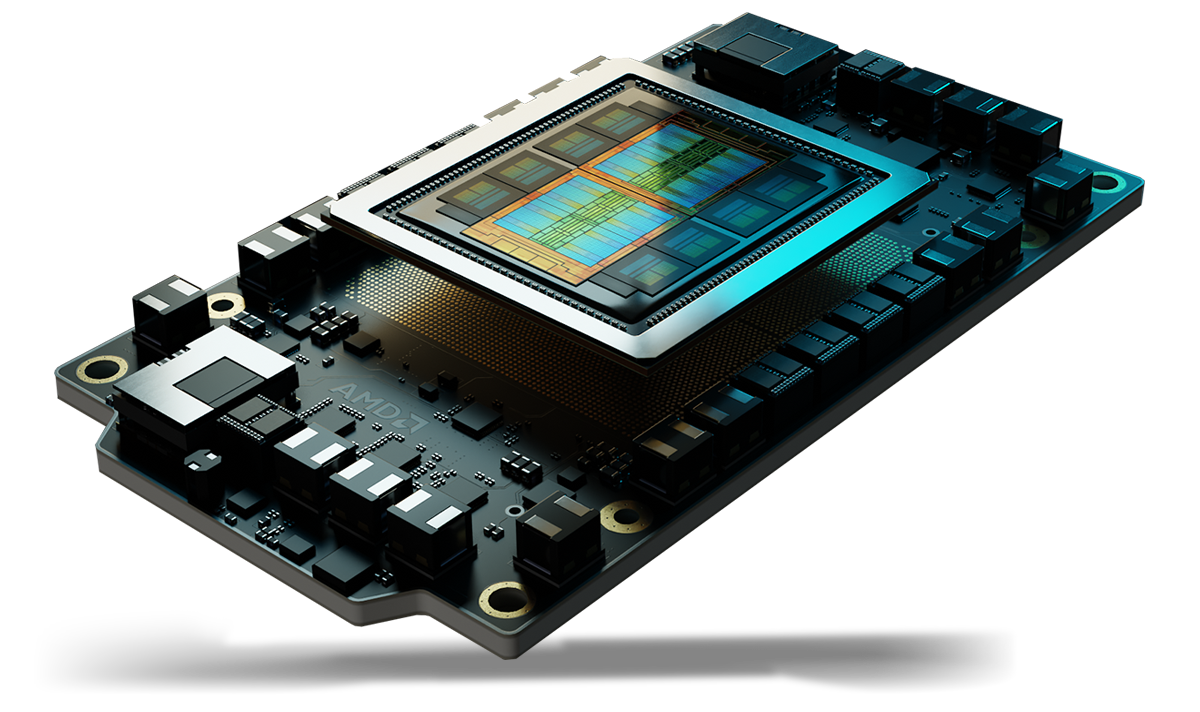

- Powered by AMD Instinct™: Purpose-built for large-model training, fine-tuning, and high-throughput inference, with up to 192 GB of HBM3 memory per AMD Instinct™ GPU.

- Scale Without Borders: Expand instantly to thousands of GPUs worldwide, supporting everything from rapid prototyping to enterprise-grade training and inference.

- Built for Trust: Run mission-critical AI workloads on secure, sovereign infrastructure designed to meet regulatory requirements.

One Platform. Built for Every AI Builder.

Train, fine-tune, and deploy agentic and inference workloads faster on a full-stack AI cloud with leading AMD GPUs, integrated tools, and expert support.

- GenAI Services: Accelerate innovation with services for agents, retrieval-augmented generation (RAG), guardrails, and fine-tuning. Build and scale next-generation AI applications with confidence and speed.

- Model Hosting & Inference: Deploy and scale models in seconds. Access a ready-to-use model catalog, run inference through APIs, and serve production workloads with low latency, sovereign control, and enterprise-grade reliability.

- AI Ops: Go beyond training with built-in AI lifecycle management. Customize and fine-tune models, monitor performance, and enforce governance to keep models reliable, compliant, and production ready.

- Infrastructure as a Service: High-performance infrastructure with AMD GPUs, delivered as a fully managed platform for peak efficiency and performance.

From Prototype to Enterprise Scale

- Start Fast. Scale on Your Terms. Immediate access with pay-as-you-go pricing through the AI Cloud console. Ideal for prototyping, model testing, and ML experiments that need to launch fast without a long-term commitment.

- Built for Scale. Optimized for Reliability. Pre-tested, large-scale GPU clusters optimized for enterprise workloads. Designed for scale-ups and global businesses that require reliability, sovereignty, and enterprise-grade support.

- Scale Globally, Stay Local. Run AI workloads across sovereign data centers globally. Access tens of thousands of GPUs with low latency, high performance, global reliability, and in-country data residency.

Evaluate AMD Instinct GPUs on Core42 Cloud

Gateway to the Future of AI

Performance You Can Trust

Frequently Asked Questions

What is the AMD Instinct GPU Evaluation Program?

The AMD Instinct GPU Evaluation Program provides remote testing environments, hosted by AMD Instinct partners, where you can explore the capabilities of AMD Instinct GPUs and AMD ROCm software.

How much capacity is available for testing in the AMD Instinct GPU Evaluation Program?

The evaluation duration and the amount of available capacity vary by partner.

Where can I learn more about AMD Instinct GPUs for research and academia?

Educators, researchers, and students can learn more through the AMD University Program.

When can I expect a response?

The AMD Instinct GPU Evaluation Program team will respond to your inquiry within two weeks.

Who is this program for?

The AMD Instinct GPU Evaluation Program is for start-ups and companies interested in testing AMD Instinct GPUs and AMD ROCm software for their AI workloads.

How do I get started once I have access to the AMD Instinct GPU Evaluation Program?

Use the ROCm AI Developer Hub to access tutorials, blogs, open-source projects, and other resources for AI development with the ROCm™ software platform. The hub guides AI developers end to end, from building AI applications to optimizing them on AMD GPUs.