AI at Scale Starts Here: The AMD Vision Comes Alive at Advancing AI 2025

Jun 27, 2025

At the Advancing AI 2025 event AMD unveiled new technology solutions as well as our unparalleled AI portfolio and strategy to help all businesses succeed in the AI era.

AI is everywhere and its evolution will require advanced compute technology from cloud to edge to endpoint. Enterprises looking to reap the benefits of AI will need to think about the rising costs to support new workloads as well as ensuring their data center solutions are equipped to meet their unique needs. To rise to the moment enterprises will need the best technology partners in their corner – and AMD is ready to meet enterprises wherever they are in their AI journey.

Compute diversity and the AI revolution

AI workloads are shifting beyond the data center and new use cases continue to emerge. The AI revolution requires heterogeneous compute and successful AI implementations inherently require diverse computing solutions – optimized combinations of CPUs, GPUs, networking, edge and client – all working in concert across data centers, cloud environments, and intelligent endpoints. AMD powers the full spectrum of AI – from silicon to software to solutions. Our strategy is simple but complete:

- Deliver an unmatched portfolio of high-performance, energy efficient compute engines for AI training and inference

- Enable an open, proven and developer-friendly software platform to ensure the leading AI frameworks, libraries and models are fully enabled for AMD hardware.

- Expand our work with the largest cloud, OEM, software and AI companies in the world.

- Co-innovate across our rapidly expanding ecosystem of hardware and software partners to enable data-center scale solutions that will deliver the compute power required to develop the next generation of frontier LLMs.

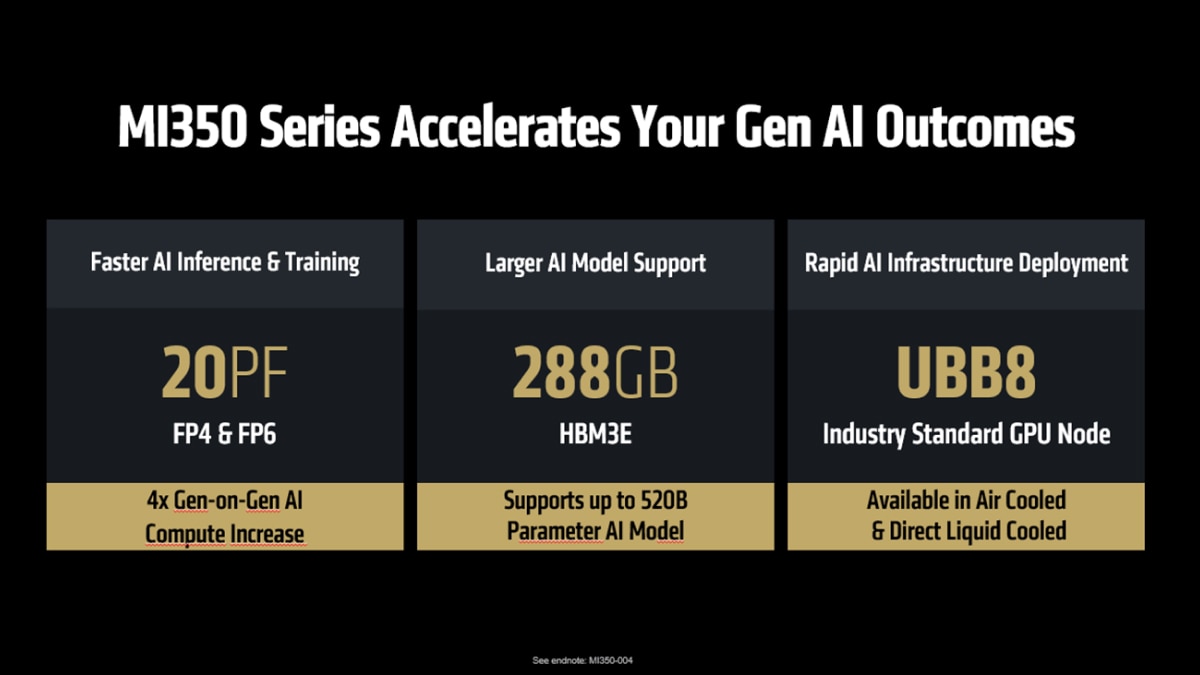

Introducing AMD Instinct™ MI350 GPUs: Continued Generative AI Leadership

As compute scaling in the data center moves from training to inference high performance compute becomes even more important. At Advancing AI 2025, AMD introduced the AMD Instinct™MI350 series.

Already shipping, the AMD Instinct™ MI350 series is the next step on delivering an annual GPU roadmap that powers AI at scale. The highlights:

- 4th Gen CDNA™ architecture – designed from the ground up to accelerate next-gen AI.

- 3nm process node – packs an incredible 185 BILLION transistors on a single device.

- Support for FP4 & FP6 - new AI datatypes that dramatically improve efficiency and throughput for LLMs.

- HMB3E memory - leadership capacity to handle trillion-parameter workloads.

What does this mean for customers? Gen AI outcomes will be accelerated – delivering up to a 4x generation-on-generation AI compute increase (FP4/FP8)1 and a 35x generational leap in inferencing (FP4/FP8)2. And all this increased performance comes with price-performance gains, generating up to 40% more tokens-per-dollar compared to competing solutions3.

Customers like Meta, Oracle, Cohere, Red Hat, and Humain are deploying AMD Instinct and seeing the benefits for their businesses and customers from partnering with AMD for their AI solutions.

That’s not all!

Advancing AI 2025 was more than just hardware announcements. From customer breakout sessions, trainings and speakers for developers, to software and solutions announcements, AMD showcased a vision for the future of AI. In future articles we’ll explore these announcements and stories.

AMD provides the most complete portfolio for AI solutions that includes the highest compute/memory density, open standards for high perf scale up/out, is x86 centric, and open SW for co-innovation. Enterprises around the world are already leveraging AMD solutions to become highly efficient, optimize performance, and generate the business results and competitive advantage they need. Is your data center ready for the AI revolution?

Watch the full replay of the Advancing AI 2025 keynote to hear all the news, announcements, and see the future of AI with AMD.

Related Blogs

Footnotes

- MI350-004: Based on calculations by AMD Performance Labs in May 2025, to determine the peak theoretical precision performance of eight (8) AMD Instinct™ MI355X and MI350X GPUs (Platform) and eight (8) AMD Instinct MI325X, MI300X, MI250X and MI100 GPUs (Platform) using the FP16, FP8, FP6 and FP4 datatypes with Matrix. Server manufacturers may vary configurations, yielding different results. Results may vary based on use of the latest drivers and optimizations.

- MI350-044: Based on AMD internal testing as of 6/9/2025. Using 8 GPU AMD Instinct™ MI355X Platform measuring text generated online serving inference throughput for Llama 3.1-405B chat model (FP4) compared 8 GPU AMD Instinct™ MI300X Platform performance with (FP8). Test was performed using input length of 32768 tokens and an output length of 1024 tokens with concurrency set to best available throughput to achieve 60ms on each platform, 1 for MI300X (35.3ms) and 64ms for MI355X platforms (50.6ms). Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations.

- Based on performance testing by AMD Labs as of 6/6/2025, measuring the text generated inference throughput on the LLaMA 3.1-405B model using the FP4 datatype with various combinations of input, output token length with AMD Instinct™ MI355X 8x GPU, and published results for the NVIDIA B200 HGX 8xGPU. Performance per dollar calculated with current pricing for NVIDIA B200 available from Coreweave website and expected Instinct MI355X based cloud instance pricing. Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. Current customer pricing as of June 10, 2025, and subject to change. MI350-049

- MI350-004: Based on calculations by AMD Performance Labs in May 2025, to determine the peak theoretical precision performance of eight (8) AMD Instinct™ MI355X and MI350X GPUs (Platform) and eight (8) AMD Instinct MI325X, MI300X, MI250X and MI100 GPUs (Platform) using the FP16, FP8, FP6 and FP4 datatypes with Matrix. Server manufacturers may vary configurations, yielding different results. Results may vary based on use of the latest drivers and optimizations.

- MI350-044: Based on AMD internal testing as of 6/9/2025. Using 8 GPU AMD Instinct™ MI355X Platform measuring text generated online serving inference throughput for Llama 3.1-405B chat model (FP4) compared 8 GPU AMD Instinct™ MI300X Platform performance with (FP8). Test was performed using input length of 32768 tokens and an output length of 1024 tokens with concurrency set to best available throughput to achieve 60ms on each platform, 1 for MI300X (35.3ms) and 64ms for MI355X platforms (50.6ms). Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations.

- Based on performance testing by AMD Labs as of 6/6/2025, measuring the text generated inference throughput on the LLaMA 3.1-405B model using the FP4 datatype with various combinations of input, output token length with AMD Instinct™ MI355X 8x GPU, and published results for the NVIDIA B200 HGX 8xGPU. Performance per dollar calculated with current pricing for NVIDIA B200 available from Coreweave website and expected Instinct MI355X based cloud instance pricing. Server manufacturers may vary configurations, yielding different results. Performance may vary based on use of latest drivers and optimizations. Current customer pricing as of June 10, 2025, and subject to change. MI350-049

/avx512-blog-primary-photo.png)