Unlocking Optimal LLM Performance on AMD EPYC™ CPUs with vLLM

Nov 05, 2025

AI is reshaping the IT industry and the world at large. While GPUs are well-suited for high-throughput workloads, CPUs remain indispensable—delivering cost-effective, power-efficient, and general-purpose AI deployments.

Enterprises with established infrastructure and skillsets continue to rely on CPUs for smaller-scale inference and hybrid workloads. In fact, CPUs excel in several key scenarios:

1. Resilience as Backup for GPUs

If a GPU fails, inference can automatically shift to CPUs—enabling continuity and avoiding production downtime.

2. Efficient Batch Processing During Off-Peak Hours

With CPUs already deployed across on-prem and cloud, idle resources can be repurposed for offline inference, maximizing utilization during low-demand periods.

3. Low-Barrier Entry for Enterprises

CPUs offer a cost-effective, easy-to-deploy option that leverages existing infrastructure and skills, making them an accessible starting point for AI adoption.

4. Upgrade Path for Performance Uplift

Many workloads—fraud detection, chatbots, recommendation systems, sentiment analysis—already run on CPUs. Migrating to newer generations can deliver significant performance gains without a steep learning curve.

5. Scalable Hybrid Workload Processing

CPUs can run general-purpose and AI inference workloads side by side, and can scale out by running multiple inference instances, delivering strong performance through tight hardware and software integration.

Accelerating vLLM Inference on AMD EPYC™ Processors

A recently published blog post, “Accelerating vLLM Inference,” highlighted performance results for vLLM inference using a specific benchmarking methodology. While that work offers one perspective, our approach takes a different angle, one that we believe better reflects the customer experience and the true performance potential of AMD EPYC processors running large language models (LLMs) efficiently.

Reproducing Published Results: Challenges and Learnings

To start, we attempted to reproduce the benchmark data presented in that blog, which compared the following systems across several chatbot use cases:

- Dual-socket Intel® Xeon® 6767P (64 cores)

- Dual-socket AMD EPYC™ 9755 (128 cores)

- Dual-socket AMD EPYC™ 9965 (192 cores)

- Dual-socket AMD EPYC™ 9555 (64 cores)

Unfortunately, because the blog provided limited configuration details (including the specific system setup, model parameters, software stack, and runtime tuning), we were unable to reproduce the published results.

Recognizing the importance of transparency and clarity, we reran the tests across many configurations and software stacks with vLLM, keeping for each platform the configuration that delivered the highest measured performance on both the AMD EPYC 9555 and the Intel Xeon 6767P. This approach provides a fair, validated, and customer-relevant view of vLLM inference performance.

Our tests were performed using:

- AMD EPYC 9555 (64 cores) with DDR5-6400 memory vs. Intel Xeon 6767P (64 cores) with MRDIMM-8800 memory

- Optimized PyTorch and vLLM builds: ZenDNN on AMD EPYC and Intel Extension for PyTorch (IPEX) on Intel Xeon

We focused on configurations that are representative of what customers actually deploy and that are equivalent across platforms: no proprietary tuning, no hidden parameters, and no closed-source dependencies.

Benchmark System Configuration & Results

To provide a clear and transparent baseline, we aligned our experiments with the same SLA and performance measures referenced in the original blog, while noting key differences in industry practices and in EPYC CPU-optimized configurations.

1. Chatbot Use Case Parameters

- Scenario 1: Input Tokens = 128; Output Tokens = 256

- Scenario 2: Input Tokens = 256; Output Tokens = 512

- Scenario 3: Input Tokens = 1024; Output Tokens = 1024

2. Service Level Agreement (SLA)

- Defined as a maximum Time Per Output Token (TPOT) of 100 ms.

3. Performance Metrics

- Output Token Throughput

- Concurrent User Prompts
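To make the SLA concrete, the sketch below computes TPOT for a single request as the time spent after the first token divided by the number of tokens generated after the first, which, to our understanding, is how vLLM's serving benchmark reports TPOT. The helper names `tpot_ms` and `within_sla` and their arguments are hypothetical, chosen for illustration only.

```shell
#!/bin/sh
# Per-request TPOT in milliseconds (hypothetical helper):
#   (end-to-end latency - time to first token) / (output tokens - 1)
# Arguments: e2e_latency_s ttft_s output_tokens
tpot_ms() {
  awk -v lat="$1" -v ttft="$2" -v n="$3" 'BEGIN {
    if (n < 2) { print "n/a"; exit }   # TPOT is undefined for a single token
    printf "%.1f\n", (lat - ttft) * 1000 / (n - 1)
  }'
}

# Succeeds (exit status 0) if a TPOT value in ms meets the SLA (default 100 ms).
within_sla() {
  awk -v t="$1" -v sla="${2:-100}" 'BEGIN { exit !(t <= sla) }'
}

tpot_ms 13.75 1.0 256
within_sla "$(tpot_ms 13.75 1.0 256)" && echo "meets the 100 ms TPOT SLA"
```

For example, a request that returns 256 output tokens with a 1.0 s time to first token and a 13.75 s end-to-end latency has a TPOT of 50.0 ms; at 26.5 s end-to-end latency, the same request sits exactly at the 100 ms limit.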

With this setup, our goal is to help customers evaluate competing claims and see how EPYC CPUs running vLLM deliver real-world inference performance.

To keep the testing focused on performance, we aligned system-level configurations across the AMD EPYC 9555 and Intel Xeon 6767P platforms, using 64-core processors on both as the baseline for comparison. The primary difference lies in memory: the Intel Xeon system was configured with MRDIMMs (8800 MT/s), while the EPYC system used standard DDR5 (6400 MT/s). Note that the MRDIMMs used on the Intel platform should not be confused with the JEDEC MRDIMM standard, and that they are more expensive than standard DDR5 DIMMs.
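Because LLM token generation on CPUs tends to be memory-bandwidth-bound, it can help to compare theoretical peak bandwidth for the two memory configurations. The sketch below uses the standard calculation of channels x data rate x 8 bytes per 64-bit transfer. The channel counts are an assumption taken from the DIMM populations in the footnotes (one DIMM per channel), `peak_bw_gbs` is a hypothetical helper, and sustained bandwidth in practice is lower than this peak.

```shell
#!/bin/sh
# Theoretical peak DRAM bandwidth in GB/s (hypothetical helper):
#   channels x data rate (MT/s) x 8 bytes per transfer (64-bit channel) / 1000
peak_bw_gbs() {
  awk -v ch="$1" -v mts="$2" 'BEGIN { printf "%.1f\n", ch * mts * 8 / 1000 }'
}

peak_bw_gbs 12 6400   # AMD EPYC 9555: 12 channels of DDR5-6400
peak_bw_gbs 8 8800    # Intel Xeon 6767P: 8 channels of MRDIMM at 8800 MT/s
```

This prints 614.4 and 563.2 (GB/s) respectively; at the 8000 MT/s MRDIMM speed listed in the footnotes, the Intel figure would be 512.0 GB/s.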

We ran experiments on both single-socket and dual-socket Intel Xeon 6767P and AMD EPYC 9555 systems, covering combinations of software stacks, models, and vLLM configurations, with the objective of achieving the maximum output token throughput and the highest number of concurrent prompts while meeting the blog's SLA of a 100 ms TPOT. With the information provided, we were unable to recreate the blog's benchmark data in a dual-socket environment.

To obtain the best possible performance on the Intel Xeon 6767P, we ran the experiments described above with Sub-NUMA Clustering both enabled (SNC=ON) and disabled (SNC=OFF). Although the blog's Intel Xeon 6767P configuration used SNC=ON, our experiments found that SNC=OFF generally delivered better performance on the Intel Xeon 6767P with vLLM tensor parallelism set to 4 (TP=4).

The following software stacks and parameters delivered the best performance while meeting the 100 ms TPOT SLA on each system:

- AMD EPYC 9555: Single-socket system with ZenDNN 5.1, vLLM 0.9.2 (V0 Engine), and PyTorch 2.6

- Intel Xeon 6767P: Single-socket system with IPEX 2.7 and vLLM 0.9.1 (V1 Engine) (the Intel blog configuration)

Note that vLLM 0.9.2 was chosen for AMD EPYC because of a known performance regression in the PyTorch 2.7.0 CPU build on x86 platforms (see https://github.com/vllm-project/vllm/blob/v0.9.2/requirements/cpu.txt and https://github.com/pytorch/pytorch/pull/151218).

On the Intel Xeon 6767P, higher performance was observed with vLLM 0.9.1 and PyTorch 2.7.0, which is also the configuration used in the blog.

Benchmark Performance Data with Intel Xeon 6767P with SNC=OFF

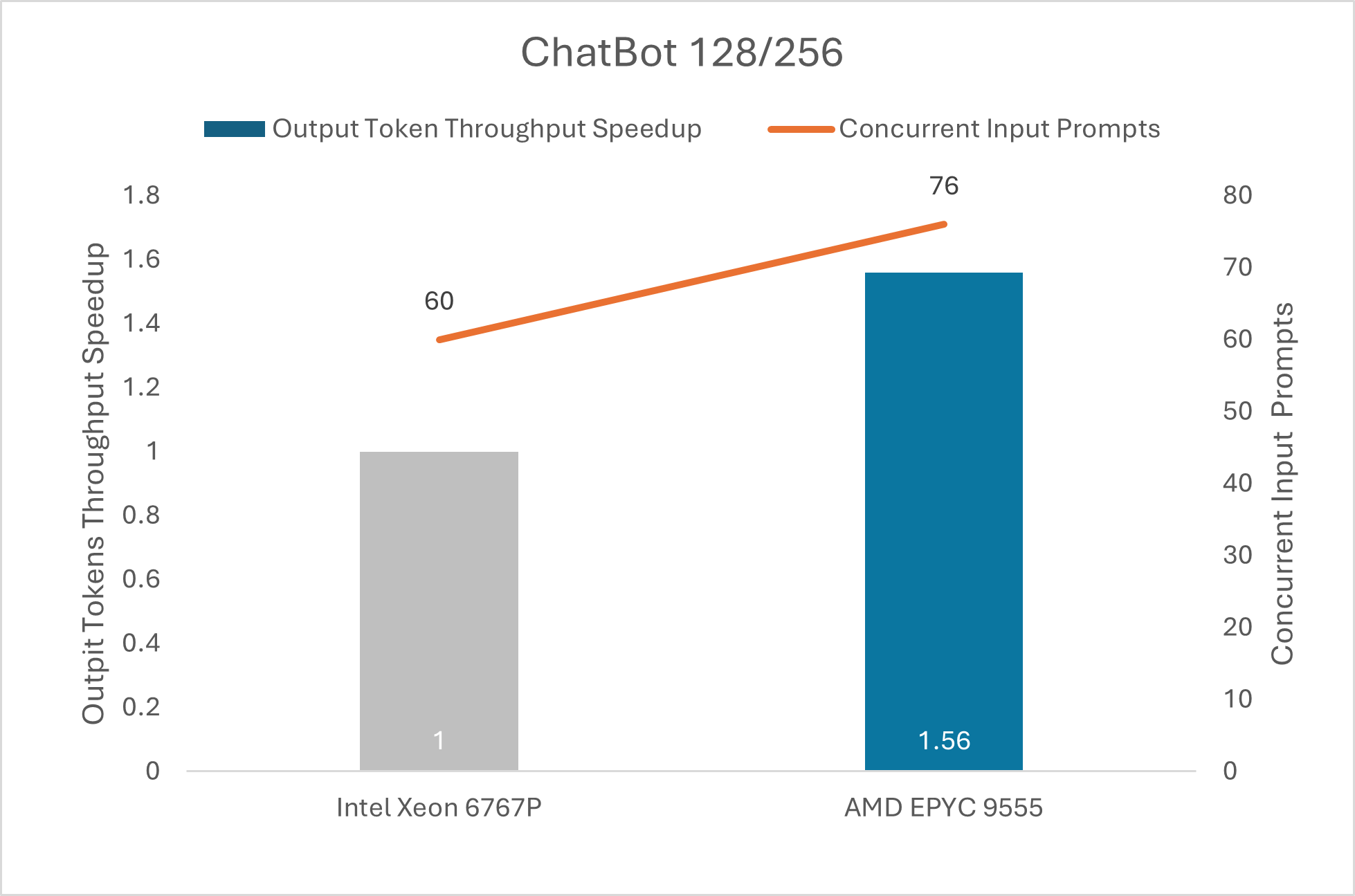

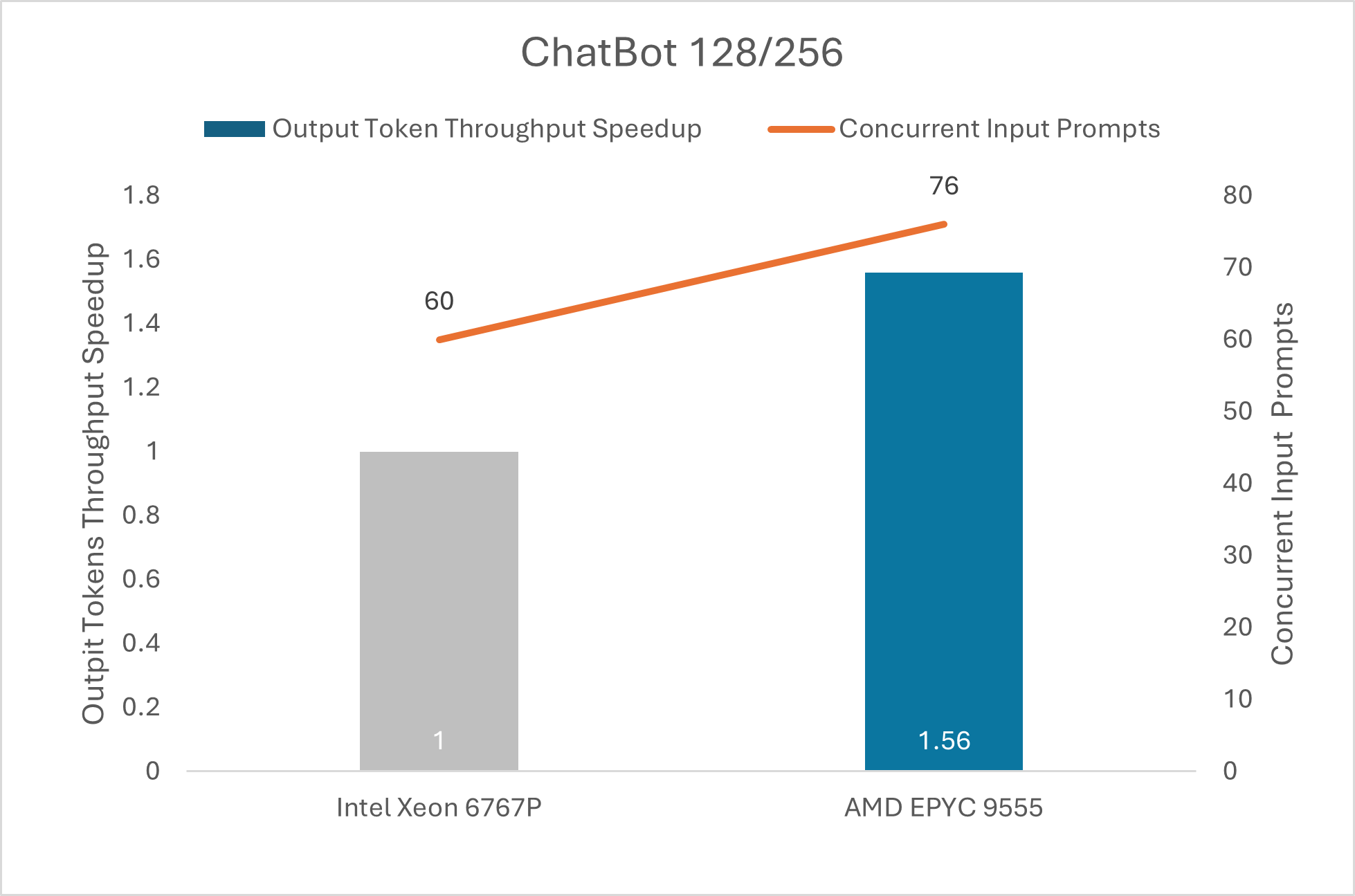

As shown in Fig: i, the single-socket AMD EPYC 9555 (64-core) system delivered a ~1.56x output-throughput uplift over the Intel Xeon 6767P with SNC=OFF running Llama 3.1-8B-Instruct with 128 input tokens and 256 output tokens, and sustained ~1.27x as many concurrent prompts. Both systems have the same core count, but the Xeon's higher-speed MRDIMMs are likely to increase system cost relative to DDR5-6400.

Fig: i

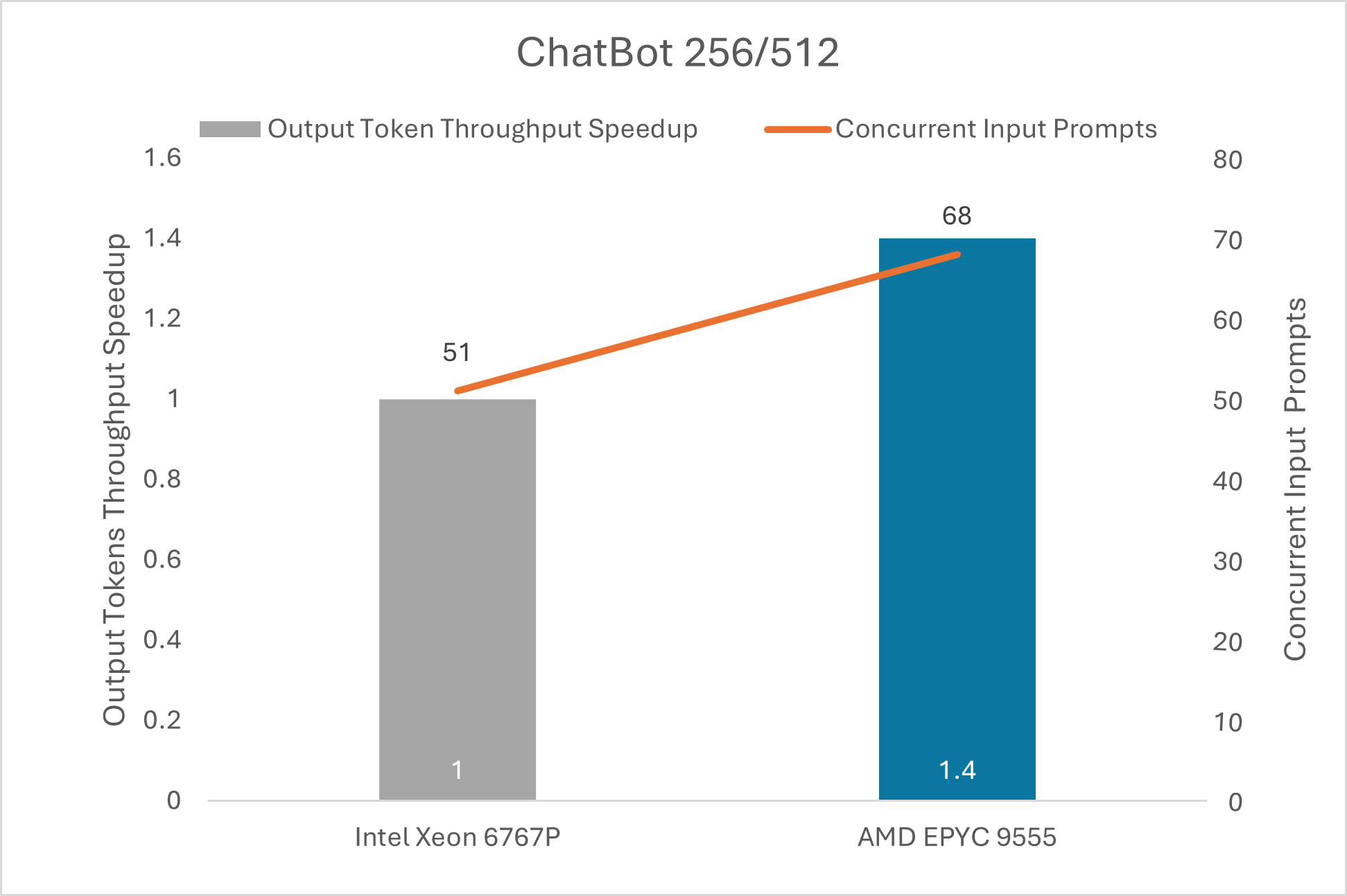

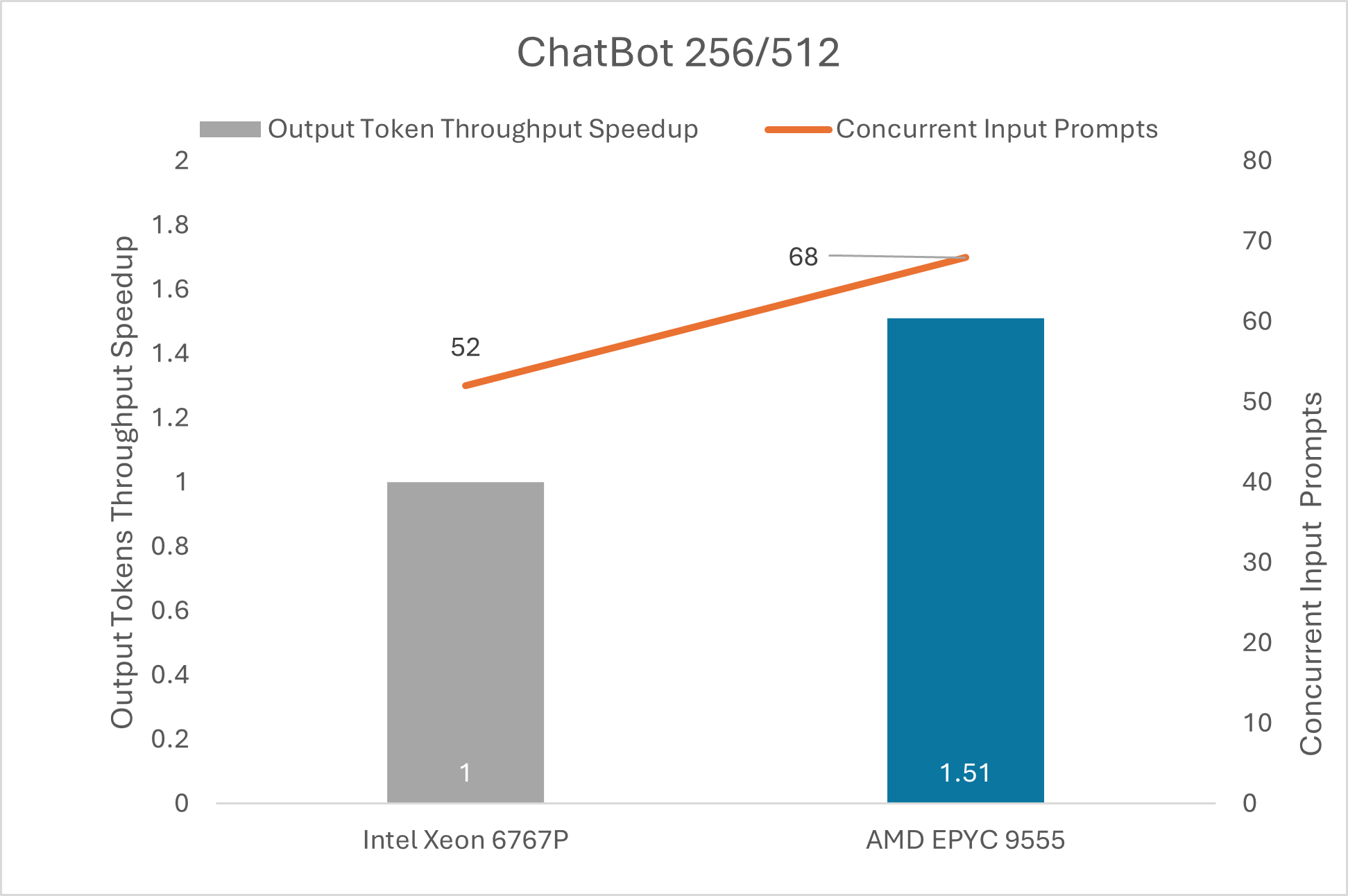

Similarly, as shown in Fig: ii, the single-socket AMD EPYC 9555 (64-core) system delivered a ~1.4x uplift over the Intel Xeon 6767P with SNC=OFF running Llama 3.1-8B-Instruct with 256 input tokens and 512 output tokens, and sustained ~1.33x as many concurrent prompts.

Fig: ii

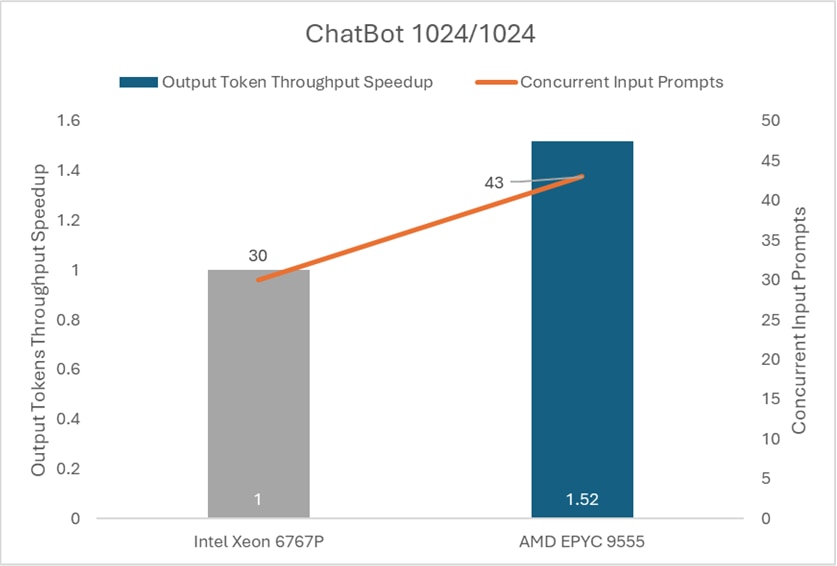

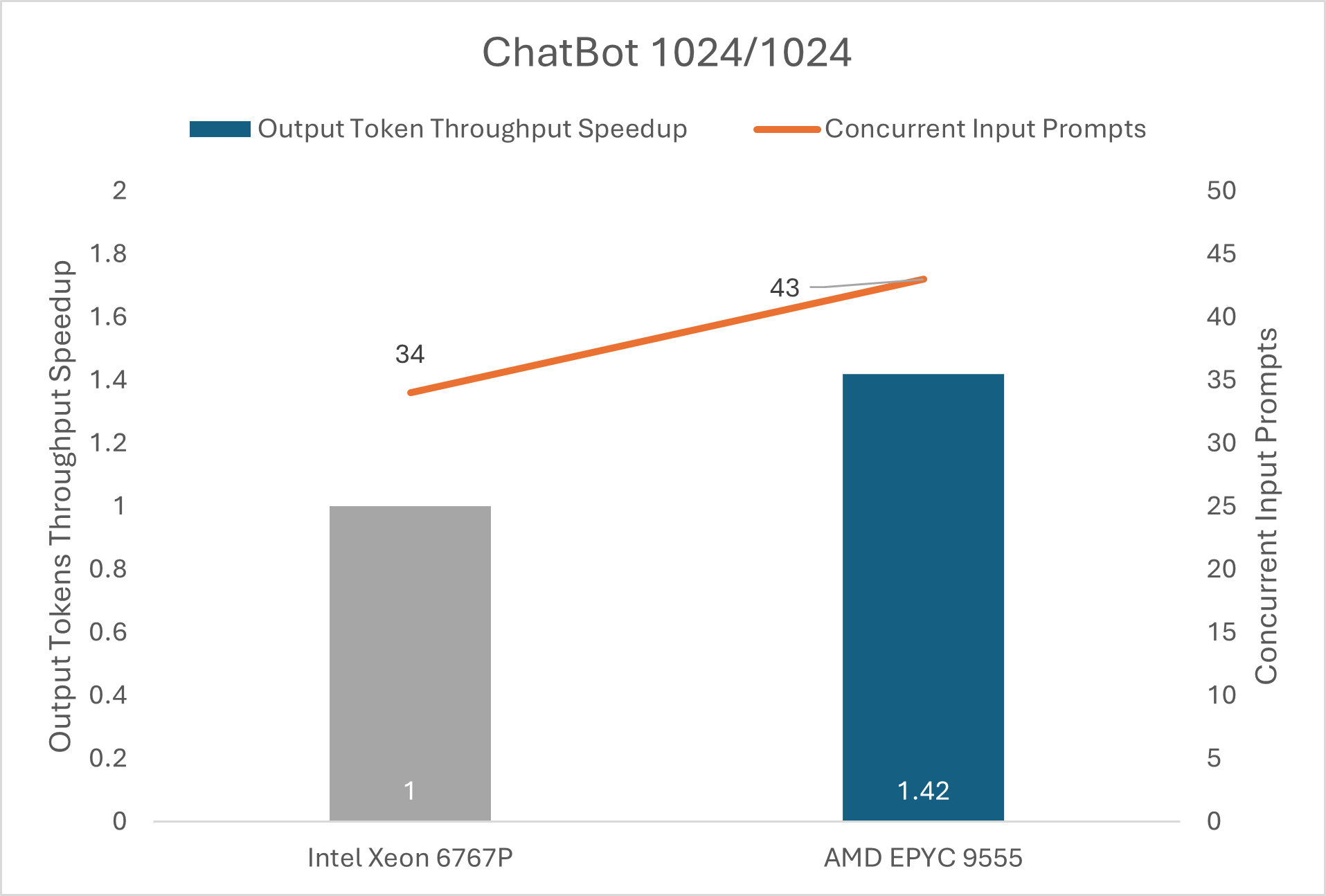

Finally, as shown in Fig: iii, the single-socket AMD EPYC 9555 (64-core) system delivered a ~1.51x uplift over the Intel Xeon 6767P with SNC=OFF running Llama 3.1-8B-Instruct with 1024 input tokens and 1024 output tokens, and sustained ~1.43x as many concurrent prompts.

Fig: iii
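The uplift figures above are ratios of the EPYC 9555 result to the Xeon 6767P result for each scenario. As a quick check, the sketch below recomputes them from the SNC=OFF values in footnote 9xx5-255; the `uplift` helper name and its column order are hypothetical.

```shell
#!/bin/sh
# Print EPYC 9555 / Xeon 6767P ratios for each scenario (hypothetical helper).
# Input columns, one scenario per line:
#   input_tokens output_tokens xeon_prompts xeon_tput epyc_prompts epyc_tput
uplift() {
  awk '{
    # Output-token throughput ratio, then concurrent-prompt ratio
    printf "%s/%s throughput=%.2fx prompts=%.2fx\n", $1, $2, $6 / $4, $5 / $3
  }'
}

# SNC=OFF results from footnote 9xx5-255
uplift <<'EOF'
128 256 60 435.98 76 680.58
256 512 51 419.63 68 586.18
1024 1024 30 249.53 43 378.03
EOF
```

This prints throughput ratios of 1.56x, 1.40x, and 1.51x and prompt ratios of 1.27x, 1.33x, and 1.43x, consistent with Fig: i through Fig: iii.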

Benchmark Performance Data with Intel Xeon 6767P with SNC=ON

Similarly, Fig: iv, Fig: v, and Fig: vi compare the AMD EPYC 9555 with the Intel Xeon 6767P configured with SNC=ON across the three chatbot use cases. As part of our due diligence across Intel Xeon 6767P configuration settings, we also tested SNC=ON, the configuration used in the blog.

Fig: iv

Fig: v

Fig: vi

Optimized, Transparent, and Scalable LLM Inference with AMD EPYC

Our evaluation demonstrates that, when tuned for their architectural strengths, AMD EPYC processors deliver clear, repeatable advantages for vLLM-based LLM inference. Across all tested chatbot scenarios, the EPYC 9555 system achieved ~1.4x to ~1.76x the output token throughput of the equivalent Intel Xeon configurations, depending on the scenario and configuration settings, and handled up to ~43% more concurrent prompts, all while using less expensive, industry-standard DDR5 memory.
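The summary ranges can be reproduced from the two footnote result tables, 9xx5-256 (SNC=ON) and 9xx5-255 (SNC=OFF). This sketch, built around a hypothetical `ratio_range` helper, takes the minimum and maximum EPYC-to-Xeon ratio over all six comparisons.

```shell
#!/bin/sh
# Min/max EPYC 9555 / Xeon 6767P ratios across scenarios (hypothetical helper).
# Input columns: input_tokens output_tokens xeon_prompts xeon_tput epyc_prompts epyc_tput
ratio_range() {
  awk '
    {
      t = $6 / $4   # output-token throughput ratio
      p = $5 / $3   # concurrent-prompt ratio
      if (NR == 1 || t < tmin) tmin = t
      if (NR == 1 || t > tmax) tmax = t
      if (NR == 1 || p < pmin) pmin = p
      if (NR == 1 || p > pmax) pmax = p
    }
    END { printf "throughput %.2fx-%.2fx, prompts %.2fx-%.2fx\n", tmin, tmax, pmin, pmax }
  '
}

# First three rows: SNC=ON (9xx5-256); last three rows: SNC=OFF (9xx5-255)
ratio_range <<'EOF'
128 256 60 385.62 76 680.58
256 512 52 387.9 68 586.18
1024 1024 34 266.91 43 378.03
128 256 60 435.98 76 680.58
256 512 51 419.63 68 586.18
1024 1024 30 249.53 43 378.03
EOF
```

It prints `throughput 1.40x-1.76x, prompts 1.26x-1.43x`.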

These results underscore EPYC processors’ ability to drive efficient, scalable, and cost-effective AI inference across diverse deployment models, whether augmenting GPUs for resilience, powering CPU-only inference clusters, or optimizing existing on-prem and cloud infrastructure.

With industry-leading core density, high memory bandwidth, and robust ecosystem support, AMD EPYC processors empower enterprises to extract maximum performance—unlocking real-world AI value.

Additional Relevant Configuration Information

Environment variables used for the single-socket Intel Xeon system with tensor parallelism set to 4 (TP=4):

export VLLM_CPU_KVCACHE_SPACE=90  # KV cache size in GiB

export VLLM_CPU_SGL_KERNEL=1  # For AMX optimizations

export VLLM_CPU_OMP_THREADS_BIND="0-15|16-31|32-47|48-63"  # 4 core groups, one per tensor-parallel rank
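The `VLLM_CPU_OMP_THREADS_BIND` value above splits the 64 cores into four contiguous groups, one per tensor-parallel rank, with `|` separating the groups. A small helper can generate the same kind of string for other core counts or TP sizes. This is a sketch assuming the cores to use are numbered contiguously (as on a single-socket system with SMT off, like the footnote systems); `omp_bind_groups` is a hypothetical name.

```shell
#!/bin/sh
# Build a VLLM_CPU_OMP_THREADS_BIND value: split a contiguous core range into
# equal groups, one per tensor-parallel rank (hypothetical helper).
# Arguments: first_core total_cores tp_size
omp_bind_groups() {
  local first=$1 cores=$2 tp=$3
  if [ "$tp" -le 0 ] || [ $((cores % tp)) -ne 0 ]; then
    echo "cores ($cores) must be divisible by tp ($tp)" >&2
    return 1
  fi
  local per=$((cores / tp)) out="" i=0 start
  while [ "$i" -lt "$tp" ]; do
    start=$((first + i * per))
    # Append "start-end", adding a "|" separator after the first group
    out="${out:+$out|}$start-$((start + per - 1))"
    i=$((i + 1))
  done
  echo "$out"
}

VLLM_CPU_OMP_THREADS_BIND=$(omp_bind_groups 0 64 4)
export VLLM_CPU_OMP_THREADS_BIND
```

For example, `omp_bind_groups 0 64 4` produces `0-15|16-31|32-47|48-63`, and `omp_bind_groups 0 64 2` produces `0-31|32-63`.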

Serve:

vllm serve meta-llama/Llama-3.1-8B-Instruct --disable-log-requests --enable-chunked-prefill --enable-prefix-caching --tensor-parallel-size 4 --distributed-executor-backend mp

Test:

vllm bench serve \
  --model meta-llama/Llama-3.1-8B-Instruct \
  --endpoint /v1/completions \
  --dataset-name random \
  --random-input-len "$INPUT_TOKENS" \
  --random-output-len "$OUTPUT_TOKENS" \
  --num-prompts "$PROMPTS"
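The three chatbot scenarios can be covered with a simple loop around the same benchmark command. This is a sketch: `run_bench` and the `DRY_RUN` and `PROMPTS` variables are hypothetical, the script only prints the commands unless `DRY_RUN=0` is set, and the default prompt count of 32 is a placeholder rather than a value from our tests.

```shell
#!/bin/sh
# Run (or, by default, print) one vllm bench serve invocation (hypothetical helper).
# Arguments: input_tokens output_tokens num_prompts
run_bench() {
  local input=$1 output=$2 prompts=$3
  set -- vllm bench serve \
    --model meta-llama/Llama-3.1-8B-Instruct \
    --endpoint /v1/completions \
    --dataset-name random \
    --random-input-len "$input" \
    --random-output-len "$output" \
    --num-prompts "$prompts"
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "$*"   # dry run: show the command only
  else
    "$@"        # execute the benchmark
  fi
}

# Scenario 1-3 input/output token lengths
for scenario in "128 256" "256 512" "1024 1024"; do
  set -- $scenario   # split "input output" into $1 and $2
  run_bench "$1" "$2" "${PROMPTS:-32}"
done
```

In practice, the prompt count would be raised for each scenario until the 100 ms TPOT SLA is no longer met.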

For AMD EPYC, the following environment variables were used to get the best ZenTorch performance:

export ZENDNN_TENSOR_POOL_LIMIT=1024

export ZENDNN_MATMUL_ALGO=FP32:4,BF16:0

export ZENDNN_PRIMITIVE_CACHE_CAPACITY=1024

export ZENDNN_WEIGHT_CACHING=1

On both platforms, LD_PRELOAD was set to include the tcmalloc and libiomp library paths.
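The exact library paths were not listed. The sketch below shows one way to prepend libraries to `LD_PRELOAD` while skipping files that are missing; the helper name `preload_libs` and both paths are hypothetical examples, so substitute the tcmalloc and libiomp5 locations on your system (for example, as reported by `ldconfig -p`).

```shell
#!/bin/sh
# Prepend each existing library to LD_PRELOAD; warn about and skip missing ones
# (hypothetical helper).
preload_libs() {
  local lib
  for lib in "$@"; do
    if [ -f "$lib" ]; then
      LD_PRELOAD="$lib${LD_PRELOAD:+:$LD_PRELOAD}"
    else
      echo "skipping missing library: $lib" >&2
    fi
  done
  export LD_PRELOAD
}

# Hypothetical example paths; adjust for your distribution or Python environment
preload_libs \
  /usr/lib/x86_64-linux-gnu/libtcmalloc_minimal.so.4 \
  "${CONDA_PREFIX:-/opt/conda}/lib/libiomp5.so"
```

Skipping missing files avoids the loader warnings that a stale path in `LD_PRELOAD` would otherwise produce for every process started from the shell.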

Footnotes

1. 9xx5-256: Llama3.1-8B throughput results based on AMD internal testing as of 10/16/2025. Configurations: llama3.1-8B, vLLM, python 3.10, TPOT max 100ms, BF16, input/output lengths: [128/256, 256/512, 1024/1024]. 1P AMD EPYC 9555 (64 Total Cores, reference system, 768GB 12x128GB DDR5-6400, BIOS RVOT1003C, Ubuntu® 22.04 | 6.8.0-84-generic, SMT=OFF, Determinism=power, Mitigations=off), vLLM 0.9.2, ZenDNN 5.1.0, NPS1; 1P Intel Xeon 6767P (64 Total Cores, production system, 512GB 8x64GB MRDIMM at 8000 MT/s, BIOS IHE110U-1.20 (AMX on), Ubuntu 24.04 | 6.8.0-84-generic, SMT=OFF, Performance Bias, Mitigations=off), vLLM 0.9.1, IPEX 2.7, NPS1, SNC ON. Results:

   | Input tokens | Output tokens | Xeon 6767P prompts | Xeon 6767P output throughput (tok/s) | EPYC 9555 prompts | EPYC 9555 output throughput (tok/s) |
   |---|---|---|---|---|---|
   | 128 | 256 | 60 | 385.62 | 76 | 680.58 |
   | 256 | 512 | 52 | 387.9 | 68 | 586.18 |
   | 1024 | 1024 | 34 | 266.91 | 43 | 378.03 |

   Results may vary due to factors including system configurations, software versions, and BIOS settings.

2. 9xx5-255: Llama3.1-8B throughput results based on AMD internal testing as of 10/16/2025. Configurations: llama3.1-8B, vLLM, python 3.10, TPOT max 100ms, BF16, input/output lengths: [128/256, 256/512, 1024/1024]. 1P AMD EPYC 9555 (64 Total Cores, reference system, 768GB 12x128GB DDR5-6400, BIOS RVOT1003C, Ubuntu® 22.04 | 6.8.0-84-generic, SMT=OFF, Determinism=power, Mitigations=off), vLLM 0.9.2, ZenDNN 5.1.0, NPS1; 1P Intel Xeon 6767P (64 Total Cores, production system, 512GB 8x64GB MRDIMM at 8000 MT/s, BIOS IHE110U-1.20 (AMX on), Ubuntu 24.04 | 6.8.0-84-generic, SMT=OFF, Performance Bias, Mitigations=off), vLLM 0.9.1, IPEX 2.7, NPS1, SNC OFF. Results:

   | Input tokens | Output tokens | Xeon 6767P prompts | Xeon 6767P output throughput (tok/s) | EPYC 9555 prompts | EPYC 9555 output throughput (tok/s) |
   |---|---|---|---|---|---|
   | 128 | 256 | 60 | 435.98 | 76 | 680.58 |
   | 256 | 512 | 51 | 419.63 | 68 | 586.18 |
   | 1024 | 1024 | 30 | 249.53 | 43 | 378.03 |

   Results may vary due to factors including system configurations, software versions, and BIOS settings.
