[How-To] Running Optimized Llama2 with Microsoft DirectML on AMD Radeon Graphics

Nov 15, 2023

Prepared by Hisham Chowdhury (AMD) and Sonbol Yazdanbakhsh (AMD).

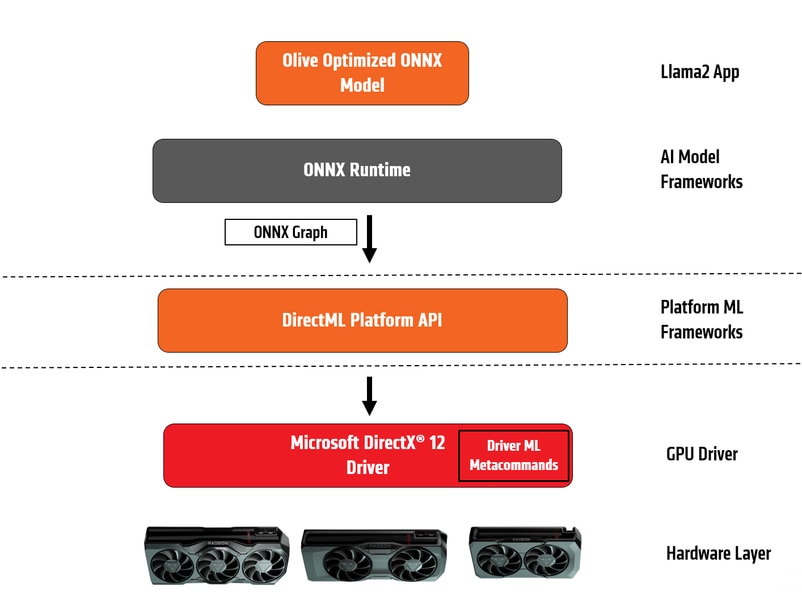

Microsoft and AMD continue to collaborate on enabling and accelerating AI workloads across AMD GPUs on Windows platforms. Following up on our earlier improvements to Stable Diffusion workloads, we are happy to share that Microsoft and AMD engineering teams worked closely to optimize Llama2 to run on AMD GPUs, accelerated via the Microsoft DirectML platform API and AMD driver ML metacommands. The driver-resident ML metacommands use AMD Matrix Processing Cores wavemma intrinsics to accelerate DirectML-based ML workloads, including Stable Diffusion and Llama2.

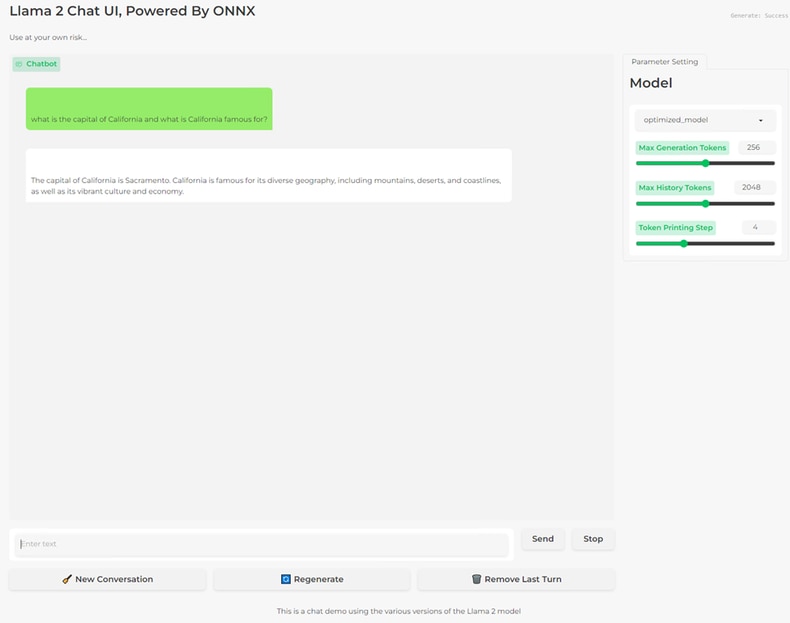

Fig 1: OnnxRuntime-DirectML on AMD GPUs

As we continue to further optimize Llama2, watch for future updates and improvements via Microsoft Olive and AMD graphics drivers.

Below are brief instructions on how to optimize the Llama2 model with Microsoft Olive, and how to run the model on any DirectML-capable AMD graphics card with ONNXRuntime, accelerated via the DirectML platform API.

If you have already optimized the ONNX model for execution and just want to run the inference, please advance to Step 3 below.

Step 1: Prerequisites

- Git installed (Git for Windows)

- Anaconda installed

- onnxruntime_directml==1.16.2 or newer

- A platform with an AMD graphics processing unit (GPU)

- Driver: AMD Software: Adrenalin Edition™ 23.11.1 or newer (https://www.amd.com/en/support)
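The software prerequisites above can be checked from Python before going further. The following is a minimal sketch, not part of the original instructions: the helper names `parse_version`, `meets_minimum`, and `has_directml` are made up for illustration, and it assumes the DirectML backend is reported by ONNX Runtime under the provider name `DmlExecutionProvider`.

```python
import re

# Minimum onnxruntime_directml version named in the prerequisites.
MIN_ORT_VERSION = (1, 16, 2)


def parse_version(text):
    """Turn '1.16.2' into (1, 16, 2); stops at the first non-numeric component."""
    parts = []
    for piece in text.split("."):
        match = re.match(r"\d+", piece)
        if not match:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(version_text, minimum=MIN_ORT_VERSION):
    """Return True if the version string is at least the required minimum."""
    return parse_version(version_text) >= minimum


def has_directml(providers):
    """Return True if the DirectML execution provider is in the given list."""
    return "DmlExecutionProvider" in providers


def main():
    # onnxruntime is imported lazily so the helpers above work without it.
    try:
        import onnxruntime as ort
    except ImportError:
        print("onnxruntime is not installed in this environment")
        return
    print("onnxruntime version:", ort.__version__)
    print("version OK:", meets_minimum(ort.__version__))
    print("DirectML available:", has_directml(ort.get_available_providers()))


if __name__ == "__main__":
    main()
```

Run it inside the conda environment where onnxruntime_directml is installed. It does not check the Adrenalin driver version, which still has to be verified separately.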

Step 2: Convert Llama2 to ONNX format and optimize the models

Download the Llama2 models from Meta’s release, then use Microsoft Olive to convert them to ONNX format and optimize the ONNX models for GPU hardware acceleration.

Using the instructions from Microsoft Olive, download the Llama2 model weights and generate optimized ONNX models for efficient execution on AMD GPUs.

Open an Anaconda terminal and enter the following commands:

- conda create --name=llama2_Optimize python=3.9

- conda activate llama2_Optimize

- git clone https://github.com/microsoft/Olive.git

- cd Olive

- pip install -r requirements.txt

- pip install -e .

- cd examples/directml/llama_v2

- pip install -r requirements.txt

Request access to the Llama 2 weights from Meta, convert them to ONNX, and optimize the ONNX models:

- python llama_v2.py --optimize

- Note: The first run of this script can take some time, since it needs to download the Llama 2 weights from Meta. When prompted, paste the URL that Meta sent to your e-mail address (the link is valid for 24 hours).

Step 3: Run the optimized Llama2 model on AMD GPUs

Once the optimized ONNX model has been generated in Step 2, or if you already have the models locally, follow the instructions below to run Llama2 on AMD Graphics.

Open an Anaconda terminal and enter the following commands:

- conda create --name=llama2 python=3.9

- conda activate llama2

- pip install gradio==3.42.0

- pip install markdown

- pip install mdtex2html

- pip install optimum

- pip install tabulate

- pip install pygments

- pip install onnxruntime_directml (make sure the installed version is 1.16.2 or newer)

- git clone https://github.com/microsoft/Olive.git

- cd Olive\examples\directml\llama_v2

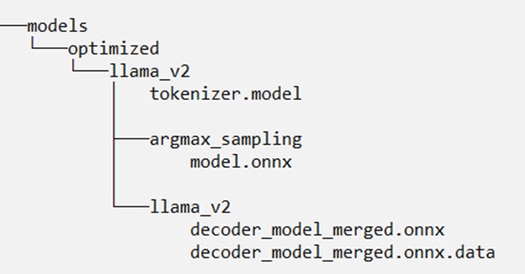

Copy the optimized models into the “Olive\examples\directml\llama_v2\models” folder. The optimized model folder structure should look like this:

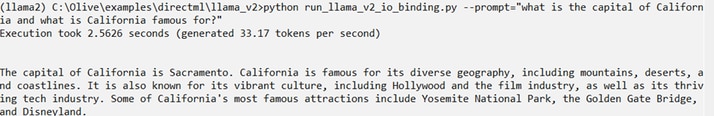
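A quick way to confirm that the copy worked is to list the ONNX files that ended up under the models folder. The sketch below is an illustration only: the exact folder layout is shown in the screenshot above and is not assumed here, so the hypothetical helper `find_onnx_models` simply searches the folder recursively for `.onnx` files.

```python
from pathlib import Path


def find_onnx_models(models_dir):
    """Return the relative paths of all .onnx files under models_dir, sorted."""
    root = Path(models_dir)
    if not root.is_dir():
        return []
    return sorted(p.relative_to(root).as_posix() for p in root.rglob("*.onnx"))


if __name__ == "__main__":
    # Assumes the script is run from Olive\examples\directml\llama_v2.
    found = find_onnx_models("models")
    if found:
        for name in found:
            print(name)
    else:
        print("No .onnx files found under the models folder")
```

An empty result usually means the models were copied to a different location or the optimization step did not finish.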

Run the model with the following prompt to see the end result:

- python run_llama_v2_io_binding.py --prompt="what is the capital of California and what is California famous for?"
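When running several prompts, the same command can be launched from Python. This is a minimal sketch under stated assumptions: the helpers `build_command` and `run_prompt` are hypothetical, only the `--prompt` option shown above is used, and the script is assumed to print its answer to standard output. Passing the arguments as a list avoids shell quoting problems when a prompt contains quotation marks.

```python
import subprocess
import sys


def build_command(prompt, script="run_llama_v2_io_binding.py"):
    """Build the argument list for one run of the example script."""
    # sys.executable is the Python of the active conda environment.
    return [sys.executable, script, f"--prompt={prompt}"]


def run_prompt(prompt, cwd=None):
    """Run the script once and return whatever it wrote to standard output."""
    result = subprocess.run(
        build_command(prompt),
        cwd=cwd,              # set to the llama_v2 folder if not already there
        capture_output=True,
        text=True,
        check=True,           # raise CalledProcessError on a non-zero exit code
    )
    return result.stdout

# Example, run from Olive\examples\directml\llama_v2:
# print(run_prompt("what is the capital of California?"))
```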

To use the Chat App, an interactive interface for running the llama_v2 model, follow these steps:

Open an Anaconda terminal and enter the following commands:

- conda create --name=llama2_chat python=3.9

- conda activate llama2_chat

- pip install gradio==3.42.0

- pip install markdown

- pip install mdtex2html

- pip install optimum

- pip install tabulate

- pip install pygments

- pip install onnxruntime_directml (make sure the installed version is 1.16.2 or newer)

- git clone https://github.com/microsoft/Olive.git

- cd Olive\examples\directml\llama_v2

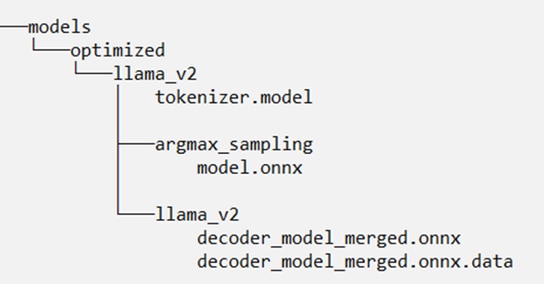

Copy the optimized models into the “Olive\examples\directml\llama_v2\models” folder. The optimized model folder structure should look like this:

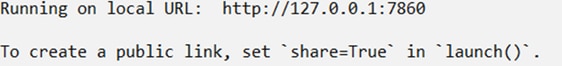

Launch the Chat App

- python chat_app/app.py

- Open the local URL shown in the terminal

This opens the chat page. Enter your prompt and start chatting.