From CES 2026 to Yottaflops: Why the AMD Keynote Highlights a Turning Point for AI Compute

Jan 06, 2026

At CES 2026, AMD Chair and CEO Lisa Su highlighted the scale that AI compute is approaching within the next five years:

Yottaflops.

Not as a distant theory—but as a real, necessary scale of compute for the AI era we are now entering.

AI Compute Enters the Yottascale Era

Just a few years ago, when ChatGPT first emerged, the total global AI compute capacity was on the order of one zettaflop. That same year—2022—the world’s first exascale supercomputer, Frontier at Oak Ridge National Laboratory, powered by AMD technologies, made its debut on the TOP500 list.

In the short span since then, AI has driven an unprecedented infrastructure buildout. Global AI compute capacity has grown roughly 100×—from about 1 zettaflop to nearly 100 zettaflops today—fueled by rapid adoption across consumers, enterprises, and scientific computing.

And this is just the beginning.

As AI systems become more deeply embedded in everyday life—with AI agents reasoning continuously, multimodal models processing text, images, video, and sensor data, and inference running at massive scale—AI demand is shifting from occasional use to always-on intelligence. That shift is driving a far greater need for compute infrastructure.

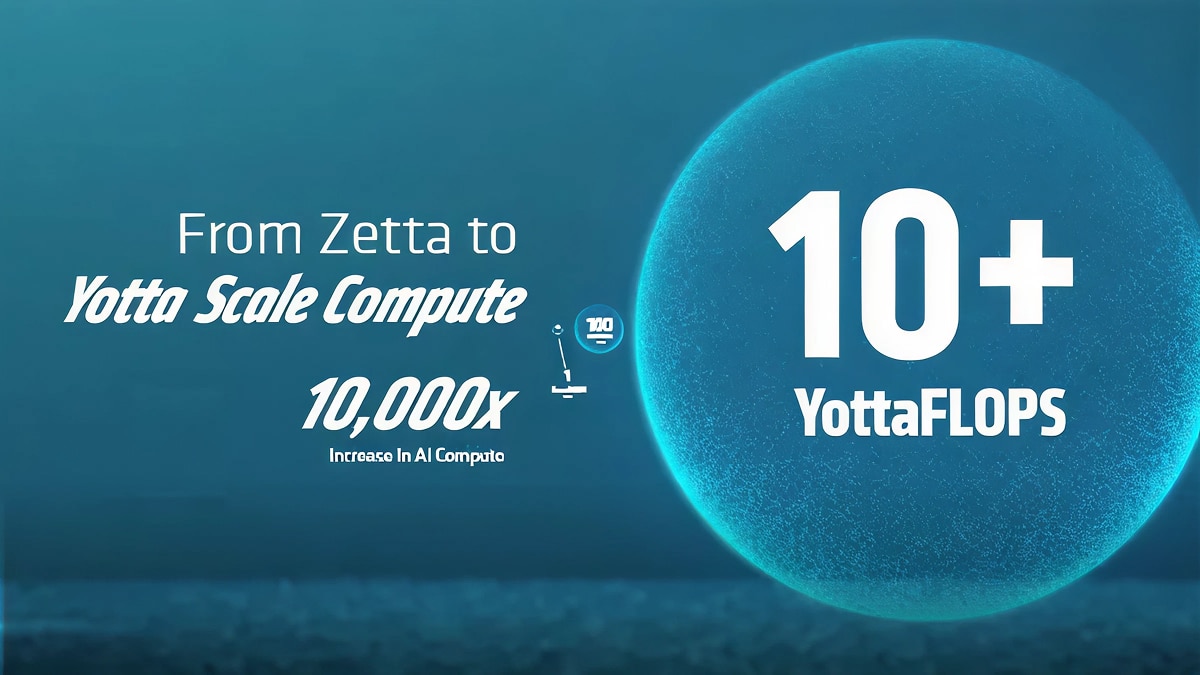

To truly bring AI everywhere, the industry is now on a path toward yottascale AI compute, with global capacity projected to reach well into the tens of yottaflops over the next five years.
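To get a feel for the pace this projection implies, here is a rough back-of-the-envelope sketch in Python. It uses only the round figures quoted above, and it assumes 10 yottaflops as a lower bound for "tens of yottaflops" and a constant yearly growth rate, neither of which the keynote figures specify. The function name `annual_growth_factor` is illustrative.

```python
# Implied compound growth, using only the round figures quoted in the text.
ZETTA = 10**21  # operations per second in one zettaflop
YOTTA = 10**24  # operations per second in one yottaflop


def annual_growth_factor(start_flops, end_flops, years):
    """Constant yearly multiplier that turns start_flops into end_flops over `years`."""
    return (end_flops / start_flops) ** (1 / years)


today = 100 * ZETTA   # "nearly 100 zettaflops today"
target = 10 * YOTTA   # assumption: lower bound for "tens of yottaflops"
factor = annual_growth_factor(today, target, years=5)

print(f"{target / today:.0f}x overall, about {factor:.2f}x per year")
# prints: 100x overall, about 2.51x per year
```

Under those assumptions, global AI compute would need to grow roughly two and a half times every year for five years in a row. A higher target within the "tens of yottaflops" range would push that yearly multiplier up further.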

Before we go deeper, here’s how big yottascale really is.

How Big Is Yottascale AI Compute? (Really Big.)

- 1 exaflop = a billion billion (10¹⁸) calculations every second

- 1 zettaflop = 1,000 exaflops

- 1 yottaflop = 1,000,000 exaflops (10²⁴ operations per second)

- 10 yottaflops = 10 million exaflops of AI compute

Put another way:

Reaching yottascale AI compute would require the equivalent of millions of today’s Frontier exaflop-class supercomputers operating together, a scale that would have been unimaginable just a few years ago.
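The unit relationships above can be checked with a few lines of Python. This is a minimal sketch: the `convert` helper and the `PREFIX_EXPONENT` table are illustrative names, and the comparison treats one "Frontier-class" system as exactly 1 exaflop. It also counts every operation as equal, although AI compute figures are typically quoted at lower numeric precision than the FP64 benchmark used for the TOP500 list, so the result is an order-of-magnitude picture rather than a like-for-like comparison.

```python
# SI prefix exponents for FLOPS (operations per second).
PREFIX_EXPONENT = {"exa": 18, "zetta": 21, "yotta": 24}


def convert(value, from_prefix, to_prefix):
    """Convert a FLOPS figure from one SI prefix to another."""
    shift = PREFIX_EXPONENT[from_prefix] - PREFIX_EXPONENT[to_prefix]
    # Multiply for a larger-to-smaller prefix, divide for the reverse.
    return value * 10**shift if shift >= 0 else value / 10**(-shift)


FRONTIER_CLASS_EXAFLOPS = 1  # assumption: one "Frontier-class" system is about 1 exaflop

print(convert(1, "zetta", "exa"))   # 1000
print(convert(1, "yotta", "exa"))   # 1000000
print(convert(10, "yotta", "exa"))  # 10000000

systems_needed = convert(10, "yotta", "exa") // FRONTIER_CLASS_EXAFLOPS
print(f"{systems_needed:,} exaflop-class systems for 10 yottaflops")
# prints: 10,000,000 exaflop-class systems for 10 yottaflops
```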

And now, it’s becoming a necessity.

Why AI Is Forcing the Leap to Yottaflops

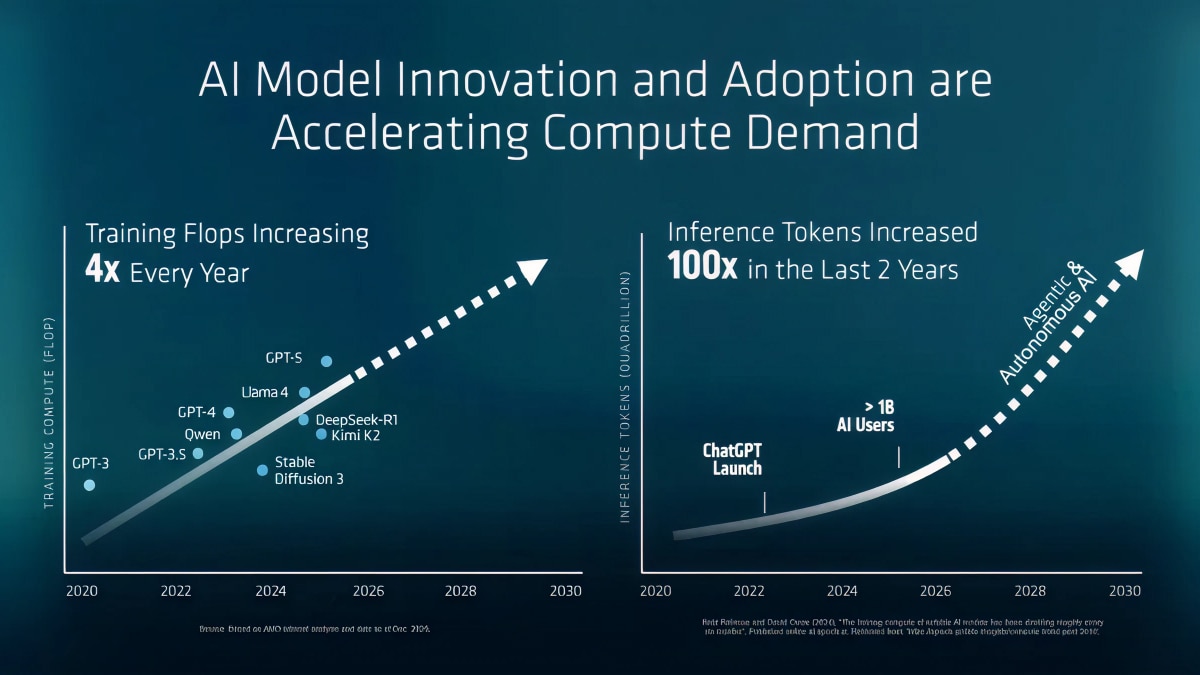

AI compute demand is accelerating—not simply because models are larger, but because AI is being asked to do fundamentally more work, more often, and in more places.

Over the past decade, AI progress has been fueled by a steady increase in compute, enabling models to become smarter, more capable, and more broadly useful. But today, the primary driver of AI compute growth is no longer training alone. Inference has reached a critical inflection point. As AI adoption has surged over the last few years, AI systems are now being used continuously—serving billions of users, powering agents, and operating across real-world applications at massive scale. The result is a dramatic increase in inference activity, with orders-of-magnitude growth in tokens and signals processed, reflecting a shift from occasional AI interactions to always-on intelligence embedded throughout the digital and physical world.

The center of gravity has shifted from one-time training runs to continuous reasoning, inference, and autonomous action:

- AI agents operate continuously, planning, reasoning, and acting on our behalf rather than responding to isolated prompts

- Inference at scale now rivals—and often exceeds—training as models are deployed across billions of users and devices

- Reasoning workloads dramatically increase compute per query, especially for multi-step, agentic tasks

- Algorithms are moving beyond text, driving massive multimodal demand across vision, speech, video, 3D, and sensor data—often processed simultaneously

- Trillions of tokens are processed every day, across an expanding set of applications

- AI is embedded everywhere, from digital services and enterprise workflows to edge and physical systems

The result is an insatiable and persistent demand for AI compute, driven not by a single breakthrough model, but by the broad adoption of AI across diverse applications, industries, and environments.

This is why zettascale was only the beginning—and why the AI era is now pulling computing toward yottascale.

What 10 Yottaflops Can Power

Reaching yottascale compute isn’t about chasing a bigger number. It’s about unlocking entirely new classes of innovation, including:

- Healthcare & life sciences

Drug discovery, protein modeling, precision medicine, and real-time diagnostics

- Scientific discovery & climate modeling

Higher-resolution simulations for weather, energy, materials, and physics

- Autonomous systems & robotics

Physical AI for factories, warehouses, transportation, and exploration

- Industrial & manufacturing AI

Digital twins, predictive maintenance, and AI-driven design optimization

- Personal and enterprise AI agents

Always-on copilots that reason, plan, and act on our behalf

Yottascale compute is what allows AI to move from assistive to transformational.

Why Unlocking Yottascale Requires AI Engines Everywhere

One critical insight from CES 2026 is that yottascale AI cannot live in one place. AMD has been saying this all along.

Unlocking AI’s full potential requires AI engines everywhere:

- In data centers, powering massive training and inference clusters

- At the edge, driving physical AI in the real world—robots, vehicles, sensors, and machines

- On PCs, enabling local, privacy-centric, responsive AI experiences

This distributed model is essential for performance, latency, efficiency, and scale.

The path to yottascale isn’t a single supercomputer. It’s a globally connected fabric of AI compute.

The AMD Unmatched Technology Portfolio for the Yottaflop Era

AMD enters this moment with a uniquely broad and deep technology portfolio—purpose-built for AI engines everywhere. Deep co-innovation with AI leaders helps ensure AMD solutions are optimized for real-world workloads—from frontier models to large-scale deployments.

Across cloud, edge, and PC, AMD delivers:

- AMD Instinct™ GPUs for large-scale AI training and inference

- AMD EPYC™ CPUs that feed, orchestrate, and scale accelerators

- AMD Pensando™ AI NICs and AMD Salina™ DPUs enabling high-performance networking and infrastructure offload for scalable AI clusters

- AMD Versal™, AMD Zynq™ and AMD Kria™ for embedded, edge, and physical AI platforms

- AMD Ryzen™ and Ryzen™ AI processors bringing AI directly to the PC

- AMD ROCm™ platform, an open software ecosystem that scales with the industry

No other company spans the full AI compute spectrum the way AMD does.

AMD “Helios”: A Critical Building Block for Yottascale Compute

As AI pushes toward yottascale, system architecture and software become just as important as silicon.

That’s why AMD “Helios” is a critical part of the AMD journey.

“Helios” is AMD’s open, rack-scale AI infrastructure platform, designed for the next generation of agentic and large-scale AI workloads. Key principles include:

- Open rack-scale design, built on OCP standards

- Dense, scalable compute optimized for massive AI clusters

- Advanced power and cooling efficiency, essential for yottascale growth

- End-to-end integration of CPUs, GPUs, networking, and software

“Helios” enables AI to scale by the rack and by the data center, not one server at a time—making yottascale compute practical, deployable, and sustainable.

From CES 2026 Forward: Looking Toward the Yottaflop Era

Zettaflops defined the early AI boom. Yottaflops will define what comes next.

AI is making computing more powerful, more accessible, and more impactful than ever before—and AMD is helping build the open foundation needed to support this unprecedented scale.

The yottaflop era isn’t a future milestone. After CES 2026, it’s clearly the path forward.
