RAG with Hybrid LLM on AMD Ryzen AI Processors

Aug 15, 2025

Introduction

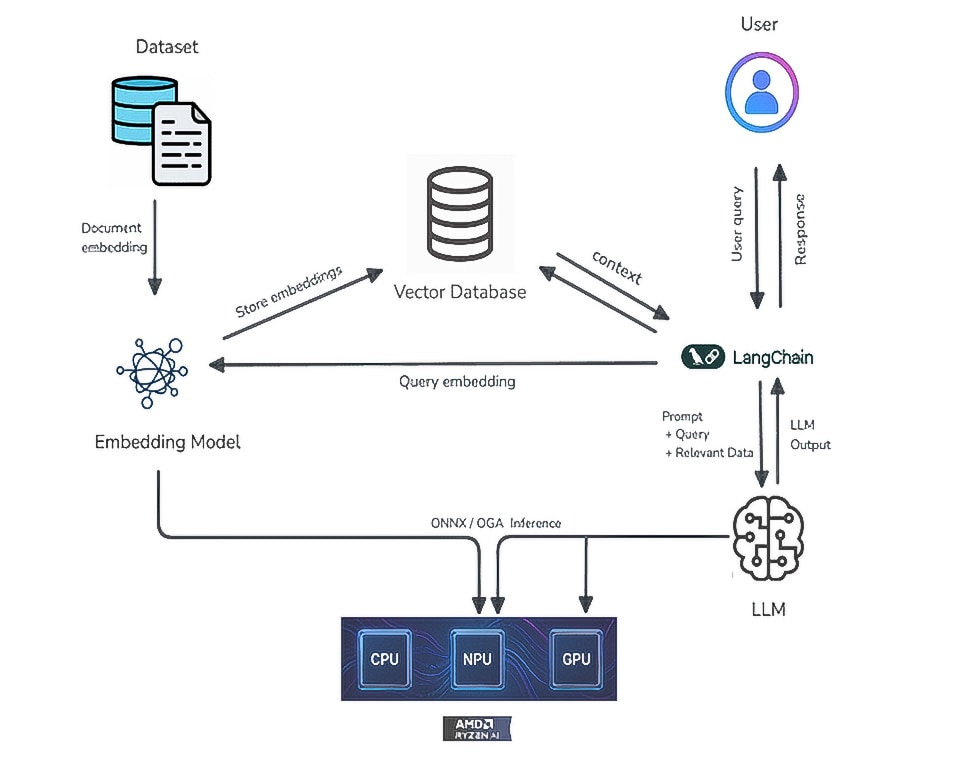

Retrieval-augmented generation (RAG) has become a popular approach for building LLM-based applications that require accurate, context-aware responses by grounding the model in relevant external data. While most RAG deployments rely on cloud-based inference, running RAG fully on-device can improve privacy, reduce latency, and ensure availability without an internet connection, provided the models are optimized for local execution.

This blog showcases a foundational RAG application running on a PC with an AMD Ryzen™ AI processor, using both the NPU and the GPU for high-compute, low-power on-device inference. The sample RAG framework is built with the popular LangChain library and integrated into the Ryzen AI software environment, using a pre-quantized, preprocessed LLM that runs on the ONNX Runtime GenAI (OGA) framework. The other core component of the RAG flow, the embedding model, is also compiled for and run on the NPU, enabling efficient, low-power embedding generation.

LLMs present unique inference challenges due to the different compute-bound and memory-bound characteristics of their prefill and decode phases. These challenges become more pronounced for on-device acceleration, where available compute and memory bandwidth are more constrained compared to cloud environments. A key aspect of this setup is the OGA hybrid model, which enables disaggregated inference by splitting execution between the NPU and GPU. During the prefill phase, high-compute workloads are offloaded to the NPU, while the GPU handles the decode phase, where high memory bandwidth is critical. This hybrid execution improves end-to-end performance and responsiveness while keeping inference fully on-device.

Figure 1: RAG on Ryzen AI software environment with Hybrid LLM
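To make the compute-bound versus memory-bound distinction between prefill and decode more concrete, here is a rough back-of-the-envelope sketch. It assumes a dense decoder that costs about 2 FLOPs per parameter per token and streams its weights from memory once per forward pass. The parameter count and bytes-per-weight values are illustrative assumptions, not measured figures for the model used in this blog, and KV-cache and activation traffic are ignored.

```python
def arithmetic_intensity(tokens_per_pass: int,
                         n_params: float = 3e9,         # assumed parameter count (illustrative)
                         bytes_per_param: float = 0.5   # assumed 4-bit weights (illustrative)
                         ) -> float:
    """Approximate FLOPs per byte of weight traffic for one forward pass."""
    flops = 2.0 * n_params * tokens_per_pass   # ~2 FLOPs per parameter per token
    bytes_moved = n_params * bytes_per_param   # weights are read once per pass
    return flops / bytes_moved


prefill = arithmetic_intensity(tokens_per_pass=1024)  # whole prompt processed in one pass
decode = arithmetic_intensity(tokens_per_pass=1)      # one new token per pass
print(f"prefill: {prefill:.0f} FLOPs/byte, decode: {decode:.0f} FLOPs/byte")
```

Under these assumptions, prefill does far more arithmetic per byte moved than decode does, which is the intuition behind sending prefill to the compute-oriented NPU and decode to the GPU.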

RAG Pipeline Overview

The example implements a basic RAG flow that retrieves relevant information from local documents using Facebook AI Similarity Search (FAISS) as the vector store. For brevity, details such as document loading, chunking, indexing, and retrieval logic used in this example are not discussed here, as they follow common patterns found in most RAG implementations. Instead, we focus on the key custom classes that integrate the local LLM and local embedding model into the LangChain framework.
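For readers new to these common patterns, the following is a minimal, dependency-free sketch of chunking, indexing, and retrieval. It is not the code from the repository: a bag-of-words counter stands in for the embedding model, a brute-force cosine search stands in for FAISS, and all function names here are hypothetical.

```python
import math
from collections import Counter
from typing import List, Tuple


def chunk_words(text: str, size: int = 8, overlap: int = 2) -> List[str]:
    """Split text into overlapping windows of `size` words."""
    words = text.split()
    step = size - overlap
    stop = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + size]) for i in range(0, stop, step)]


def toy_embed(text: str) -> Counter:
    """Stand-in for a real embedding model: lowercase bag-of-words counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Brute-force top-k search (a real pipeline would query the FAISS index)."""
    q = toy_embed(query)
    scored = [(cosine(q, toy_embed(c)), c) for c in chunks]
    return sorted(scored, key=lambda s: s[0], reverse=True)[:k]


text = ("the NPU runs the prefill phase of the model while the GPU runs the "
        "decode phase and FAISS stores the embedding vectors used for similarity search")
chunks = chunk_words(text, size=8, overlap=2)
query = "where are embedding vectors stored for similarity search"
top = retrieve(query, chunks, k=1)

# The retrieved chunk becomes the context that grounds the LLM prompt.
prompt = f"Context:\n{top[0][1]}\n\nQuestion: {query}"
print(prompt)
```

In the actual example, the retrieved chunks are passed to the local LLM through LangChain, and the embedding and search steps are handled by the custom embedding class and FAISS.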

Note: For the associated code and a detailed README with step-by-step instructions, please refer to the GitHub repository: https://github.com/amd/RyzenAI-SW/tree/main/example/llm/RAG-OGA

Custom LLM class

To integrate the hybrid LLM into the LangChain framework, we implement a custom LLM class that wraps the ONNX Runtime GenAI (OGA) model using the OGA Python API. The LLM used in this example is a precompiled, ready-to-run Llama-3.2-3B-Instruct model available from the AMD Hugging Face repository. This custom wrapper handles tokenization and model inference, and plugs into LangChain’s LLM interface.

    class custom_llm(LLM):
        ...

        def __init__(self, model_path: str, **kwargs: Any):
            ...
            # Load the precompiled hybrid model and its tokenizer from model_path
            self._model = og.Model(model_path)
            self._tokenizer = og.Tokenizer(self._model)
            ...

        def _prepare_generator(self, prompt: str) -> og.Generator:
            ...
            params = og.GeneratorParams(self._model)
            # Cap the total sequence length and configure sampling
            search_options = {
                "max_length": min(2048, len(input_tokens) + 1024),
                "temperature": 0.5,
                "top_k": 40,
                "top_p": 0.9
            }
            params.set_search_options(**search_options)
            generator = og.Generator(self._model, params)
            ...

        def _call(self, prompt: str, stop: Optional[List[str]] = None, **kwargs: Any) -> str:
            ...
            # Generate one token at a time until the generator reports completion
            while not generator.is_done():
                ...
                generator.generate_next_token()
                token = generator.get_next_tokens()[0]
                response_tokens.append(token)
            # Decode the collected tokens into the response string
            decoded_tokens = [self._tokenizer_stream.decode(t) for t in response_tokens]
            response = "".join(decoded_tokens)

For more information about ONNX Runtime GenAI API-based LLM deployment on an AI PC powered by a Ryzen AI processor, refer to: https://ryzenai.docs.amd.com/en/latest/hybrid_oga.html.
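The `max_length` expression in `_prepare_generator` is worth a closer look. The sketch below assumes that `max_length` bounds the total sequence (prompt tokens plus generated tokens), and shows how many tokens remain for the answer at different prompt lengths. The helper name and its parameters are hypothetical; only the constants 2048 and 1024 come from the code above.

```python
from typing import Tuple


def generation_budget(num_input_tokens: int,
                      total_cap: int = 2048,
                      max_new_tokens: int = 1024) -> Tuple[int, int]:
    """Return (max_length, tokens available for the answer) for a prompt length."""
    max_length = min(total_cap, num_input_tokens + max_new_tokens)
    tokens_for_answer = max(0, max_length - num_input_tokens)
    return max_length, tokens_for_answer


for n in (400, 1608, 2100):
    print(n, generation_budget(n))
```

Under this assumption, a 1608-token prompt leaves room for 440 generated tokens, which matches the Q1 row of the performance snapshot later in this post and suggests that answer may have run into the cap. A prompt longer than 2048 tokens would leave no room at all, so the amount of retrieved context should be sized with this budget in mind.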

Custom Embedding class

Similar to the custom LLM, a custom embedding class is implemented to wrap the ONNX model for the BGE large embedding model (bge-large-en-v1.5). This model generates a 1024-dimensional embedding vector and supports a maximum sequence length of 512 tokens. The ONNX Runtime session is configured to use the Vitis AI Execution Provider (EP) from the Ryzen AI software stack, which compiles and caches the model for the NPU. The compiled model is stored locally, enabling fast, low-power embedding generation on the NPU during subsequent runs.

    class custom_embeddings(Embeddings):
        def __init__(self, model_path: str, tokenizer_name: str):
            # Tokenizer setup (self.tokenizer) is not shown in this excerpt.
            # The Vitis AI EP compiles the model for the NPU and caches the result.
            self.session = ort.InferenceSession(
                model_path,
                providers=["VitisAIExecutionProvider"],
                provider_options=[{
                    "config_file": "vaiml_config.json",
                    "cache_dir": "./",
                    "cacheKey": "modelcachekey_bge"
                }]
            )

        def _embed(self, texts: List[str]) -> List[List[float]]:
            ...
            for text in texts:
                # Pad or truncate every input to the fixed 512-token sequence length
                inputs = self.tokenizer(
                    text,
                    max_length=512,
                    padding="max_length",
                    truncation=True,
                    return_tensors="np",
                    return_token_type_ids=False
                )
                input_ids = inputs["input_ids"]
                # Count non-padding tokens for reporting
                total_input_tokens += np.count_nonzero(input_ids)
                onnx_inputs = {
                    "input_ids": input_ids.astype(np.int64),
                    "attention_mask": inputs["attention_mask"].astype(np.int64)
                }
                outputs = self.session.run(None, onnx_inputs)
                ...

For more information about compilation of the embedding model, refer to the official documentation page: https://ryzenai.docs.amd.com/en/latest/modelrun.html. You can also find a standalone example of embedding model compilation here: https://github.com/amd/RyzenAI-SW/tree/main/example/gte-large-en-v1.5-bf16.
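The post-processing of the ONNX outputs is elided in the excerpt above. As a point of reference only, BGE models are commonly used with [CLS] pooling followed by L2 normalization, so that a dot product between two embeddings equals their cosine similarity. The sketch below illustrates that idea in plain Python on toy 2-dimensional vectors; it is an assumption about typical usage, not the repository's code, and the function names are hypothetical.

```python
import math
from typing import List


def cls_pool_and_normalize(last_hidden_state: List[List[float]]) -> List[float]:
    """Take the first ([CLS]) token vector and scale it to unit L2 norm."""
    cls_vec = last_hidden_state[0]
    norm = math.sqrt(sum(x * x for x in cls_vec))
    return [x / norm for x in cls_vec] if norm > 0 else list(cls_vec)


def dot(a: List[float], b: List[float]) -> float:
    """Dot product; equals cosine similarity for unit-length vectors."""
    return sum(x * y for x, y in zip(a, b))


# Toy per-token outputs (tokens x hidden size); a real model would give 512 x 1024.
doc_vec = cls_pool_and_normalize([[3.0, 4.0], [9.0, 9.0], [0.0, 1.0]])
query_vec = cls_pool_and_normalize([[6.0, 8.0], [1.0, 0.0]])
similarity = dot(doc_vec, query_vec)
print(doc_vec, similarity)
```

Normalizing at embedding time keeps the similarity search simple, because the vector store can then rank chunks with inner products alone.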

Sample Questions and Answers

Here are a few sample questions and their corresponding answers generated by the RAG example using local documents. The input document used for this demonstration is the Ryzen AI documentation PDF.

Question 1: what is NPU and tell me the three important features of NPU.

RAG-based contextual answer:

    python rag.py
    Enter your question: what is NPU and tell me the three important features of NPU.

LLM Performance on Ryzen AI Processor

The hybrid LLM implementation on Ryzen AI processors provides industry-leading performance for key metrics such as time-to-first-token (TTFT) and tokens-per-second (TPS). By distributing execution across the NPU and GPU, it delivers optimal performance in both the compute-bound prefill phase and the memory-bound decode phase. Below is a sample performance snapshot collected from this RAG example running on a PC with a Ryzen AI 9 HX 370 processor. Actual numbers may vary depending on the LLM used, the model version, and the specific system configuration.

| Question | Avg Input Tokens | Avg Output Tokens | Avg TTFT (sec) | Avg TPS |
|----------|------------------|-------------------|----------------|---------|
| Q1       | 1608             | 440               | 2.272704       | 30.07   |
| Q2       | 1172             | 232               | 1.86373        | 32.65   |
| Q3       | 1452             | 11                | 2.099082       | 24.53   |

For the latest performance data, refer to the GitHub example associated with this blog, which will reflect the most up-to-date results.
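For readers who want to collect similar metrics in their own applications, here is a minimal sketch of measuring TTFT and TPS around any token stream. It uses one common definition (TTFT is the time until the first token arrives, and TPS is the rate of the remaining tokens during decode), which may differ from how the numbers above were computed. The function name and the injectable `clock` parameter are hypothetical.

```python
import time
from typing import Callable, Dict, Iterable


def measure_generation(token_stream: Iterable[int],
                       clock: Callable[[], float] = time.perf_counter
                       ) -> Dict[str, float]:
    """Consume a token stream and report TTFT and decode tokens-per-second."""
    start = clock()
    first_token_time = None
    last_time = start
    count = 0
    for _ in token_stream:
        last_time = clock()              # timestamp when this token arrived
        if first_token_time is None:
            first_token_time = last_time
        count += 1
    if first_token_time is None:         # nothing was generated
        return {"tokens": 0, "ttft_s": float("nan"), "tps": 0.0}
    decode_time = last_time - first_token_time
    tps = (count - 1) / decode_time if decode_time > 0 else 0.0
    return {"tokens": count, "ttft_s": first_token_time - start, "tps": tps}


# Deterministic demo: a scripted clock stands in for real wall-clock time.
scripted_times = iter([0.0, 2.0, 2.5, 3.0, 3.5, 4.0])
stats = measure_generation(iter([11, 12, 13, 14, 15]),
                           clock=lambda: next(scripted_times))
print(stats)
```

In a real application, `token_stream` would be a generator that yields each token as the model produces it, and the default `time.perf_counter` clock would be used.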

Conclusion

In this blog, we demonstrated a sample RAG application on an AI PC powered by a Ryzen AI processor using LangChain, showing how to efficiently run both the LLM and the embedding model through the Ryzen AI software. By using hybrid execution (NPU for compute-intensive prefill, GPU for memory-intensive decode), we achieved faster end-to-end inference, lower latency, and reduced power consumption, all while keeping data fully on-device.

This approach shows how performance-optimized, locally executed RAG flows can deliver responsive, private, and energy-efficient AI experiences without relying on the cloud, providing a foundation for building more advanced RAG or agentic applications using LangChain or similar frameworks on Ryzen AI processor-based PCs.

Call to Action

Discover the full potential of RAG on AMD Ryzen AI processors by exploring the example and building your own applications. Access the full code and detailed instructions in our GitHub repository: https://github.com/amd/RyzenAI-SW/tree/main/example/llm/RAG-OGA