Open Source AI Week Recap: Powering Open-Source AI Together

Oct 24, 2025

Open Source AI Week in San Francisco brought together leading AI and ML conferences, hackathons, and networking events for several days of announcements, demos, and cross‑industry collaboration. Our presence spanned AMD AI DevDay (Oct 20), the Open Agent Summit panel sessions (Oct 21), and multiple keynotes and technical presentations at PyTorch Conference (Oct 22 and 23), showcasing how AMD ROCm™ software, AMD Instinct™ GPUs, and AMD Ryzen™ AI power open‑source AI from research through production.

AMD AI DevDay, October 20th

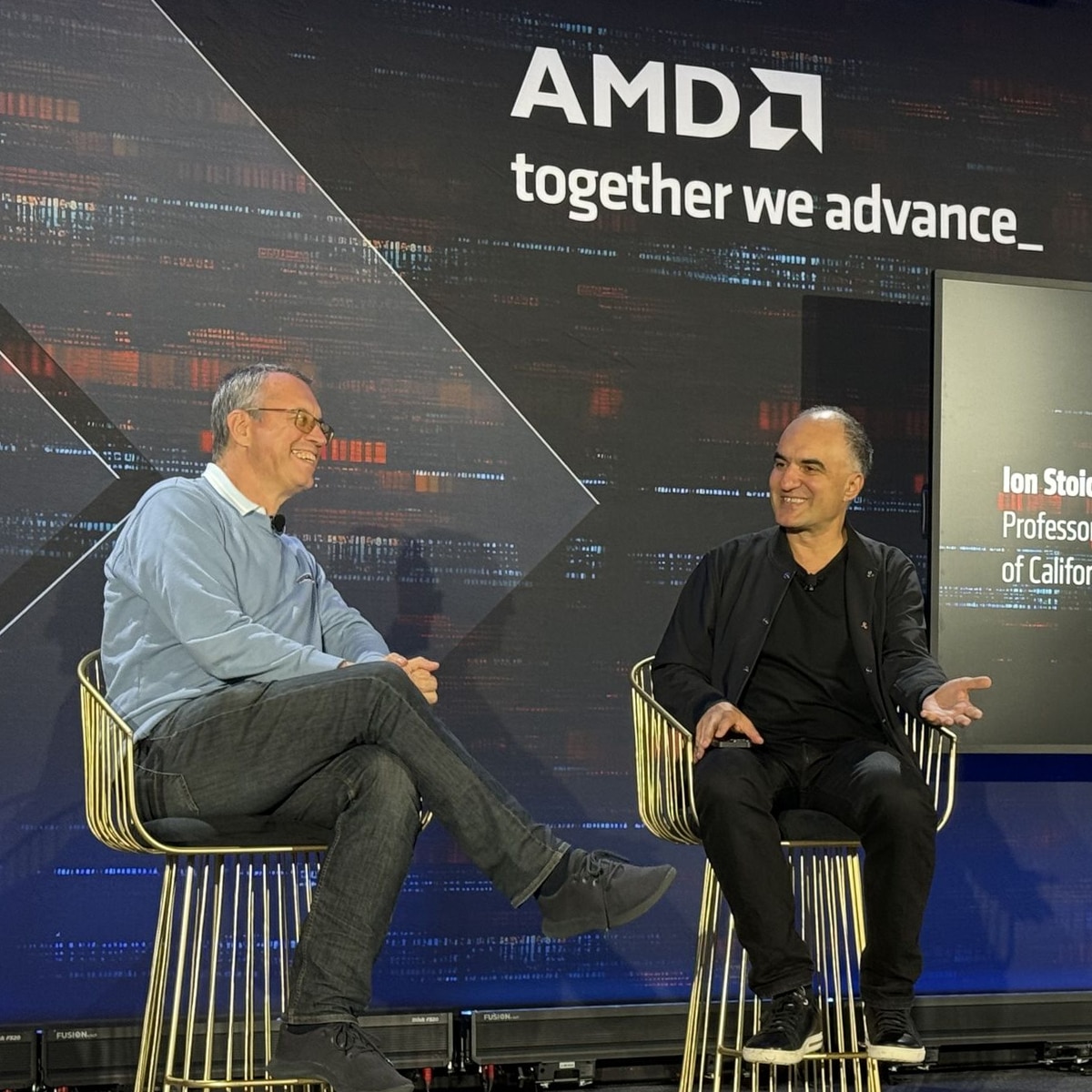

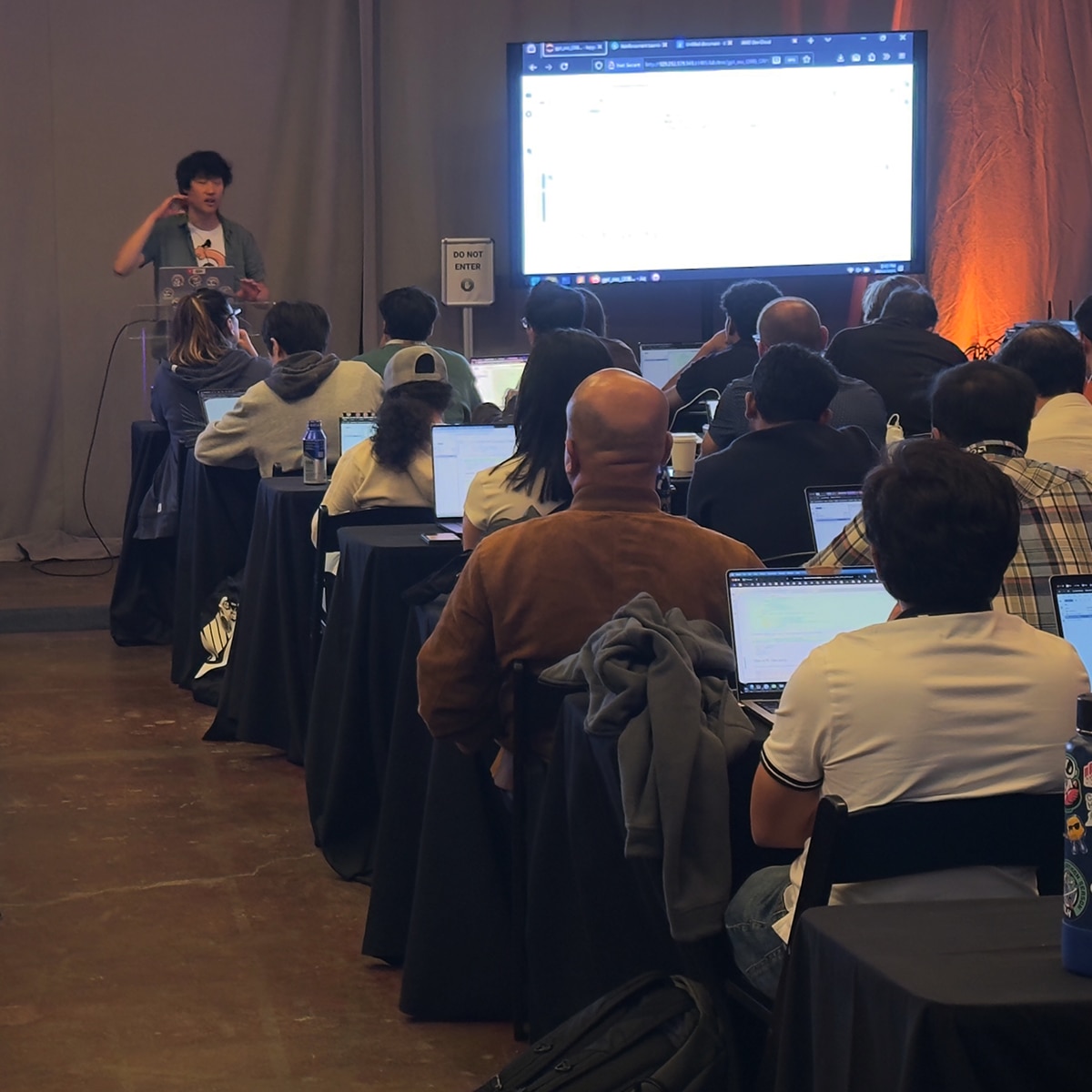

AMD AI DevDay opened with headliner Ion Stoica, an AI icon and professor at UC Berkeley, and featured speakers from Google, OpenAI, Unsloth, vLLM, Hugging Face, and many other open-source projects. The lineup underscored strong collaboration among universities, open-source communities, and AI innovators. Developers also learned about the latest AI technology running on AMD GPUs in four hands-on workshops. Near the close of the event, Vamsi Boppana, Senior Vice President of AI at AMD, invited Mark Saroufim, co-creator of GPU MODE, to discuss the results of the AMD developer contest (more details in the next section). It was a day filled with innovation and networking with the brightest minds in AI.

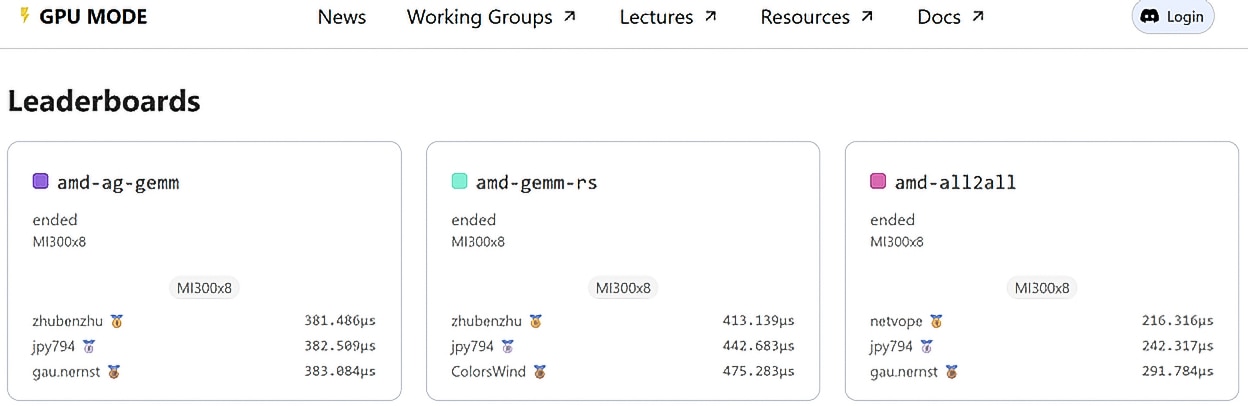

Distributed Inference Communication Kernel Contest

Teams were challenged to optimize multi-GPU communication kernels and improve LLM inference performance on AMD GPUs. The contest drew more than 600 developers and 60,000 kernel submissions, averaging up to 2,000 submissions per day. As Mark Saroufim put it, “the contest submissions generated more AMD kernel data than what exists in internet”. The leaderboard shows the top entries for the three kernels (AllGather, ReduceScatter, All2All), where contestants competed for the fastest implementation that also generalizes across multiple kernel shapes. Check out the Kernel Leaderboard.
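For readers less familiar with the three collectives named above, here is a minimal reference sketch of what each one computes, simulated on plain Python lists with one list per rank. It is only an illustration of the semantics, not the contest's kernel interface or any contestant's implementation; the function names and the list-per-rank layout are assumptions made for this example.

```python
"""Reference semantics for three collectives, simulated with one list per rank."""


def all_gather(shards):
    """Every rank ends up with the concatenation of all ranks' shards."""
    gathered = [x for shard in shards for x in shard]
    return [list(gathered) for _ in shards]


def reduce_scatter(inputs):
    """Sum the inputs elementwise across ranks, then hand rank r the r-th chunk."""
    world = len(inputs)
    length = len(inputs[0])
    assert length % world == 0, "input length must divide evenly across ranks"
    chunk = length // world
    summed = [sum(column) for column in zip(*inputs)]
    return [summed[r * chunk:(r + 1) * chunk] for r in range(world)]


def all_to_all(inputs):
    """inputs[src][dst] is the chunk rank src sends to rank dst.

    Rank dst receives one chunk from every rank, ordered by sender.
    """
    world = len(inputs)
    return [[inputs[src][dst] for src in range(world)] for dst in range(world)]


if __name__ == "__main__":
    print(all_gather([[1, 2], [3, 4]]))
    print(reduce_scatter([[1, 2, 3, 4], [10, 20, 30, 40]]))
    print(all_to_all([["a0", "a1"], ["b0", "b1"]]))
```

A real kernel moves this data between GPU memories rather than Python lists, which is where the optimization work in the contest comes in.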

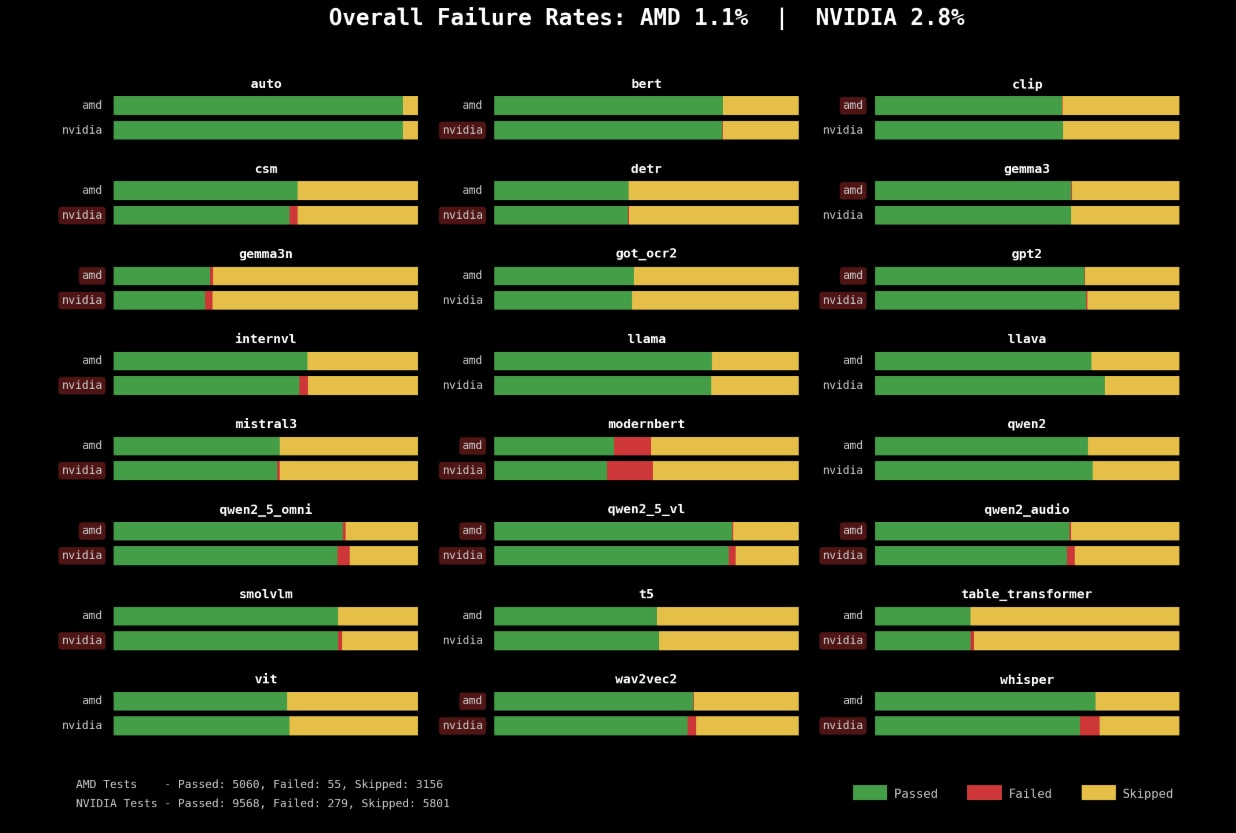

Hugging Face and PyTorch CI Dashboard

AMD also published a daily dashboard showing the robust CI testing run on popular projects such as Hugging Face and PyTorch. Currently, the pass rate on AMD is higher than that of any competitor, demonstrating first-class support. Check out the dashboard here.

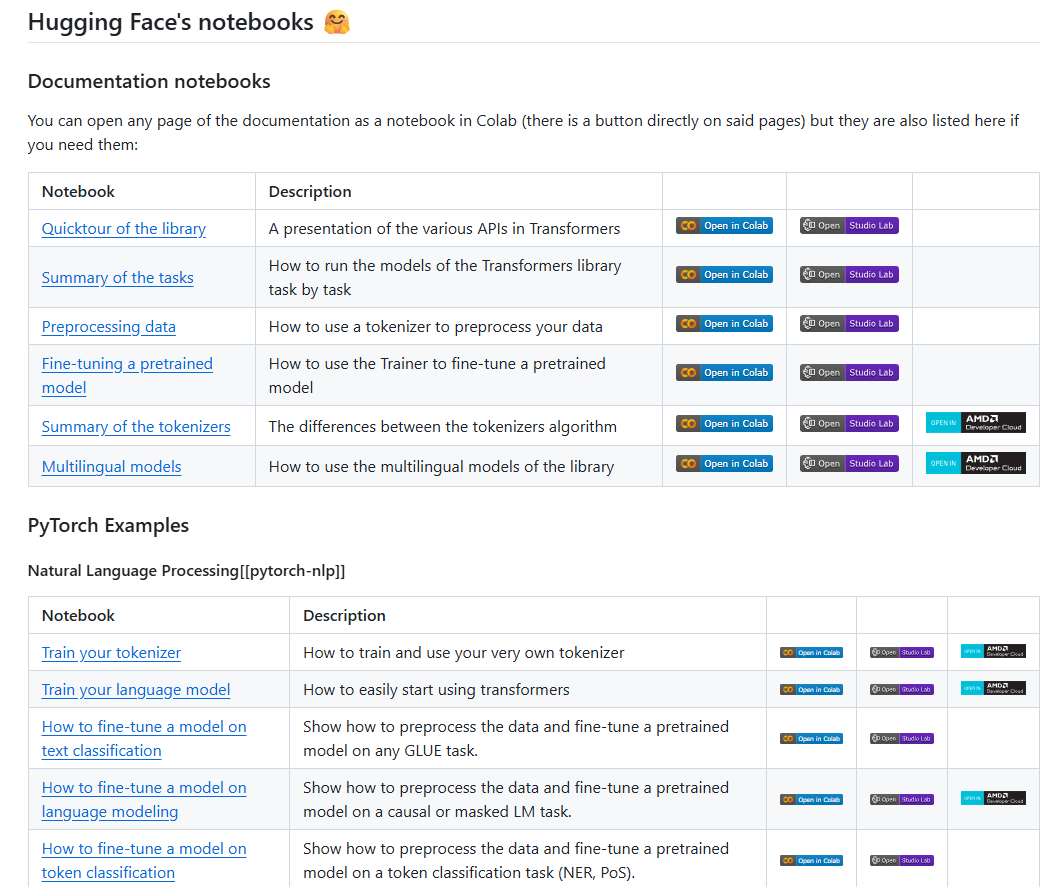

One-click Access to Popular Jupyter Notebooks on AMD DevCloud

We have made access to AMD GPUs as easy as one click. From popular Jupyter notebooks such as Hugging Face Transformers, you can simply click “AMD Developer Cloud” and the notebook launches without any user account creation. Check it out here.
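Once a notebook is running, a quick first cell can confirm which accelerator PyTorch sees. The sketch below is a minimal check, assuming PyTorch may or may not be installed in the environment; the helper name `describe_accelerator` is made up for this example. ROCm builds of PyTorch expose AMD GPUs through the familiar `torch.cuda` API and report a HIP version in `torch.version.hip`.

```python
def describe_accelerator():
    """Report what PyTorch can see, without failing if PyTorch is missing."""
    try:
        import torch
    except ImportError:
        return {
            "torch_installed": False,
            "gpu_available": False,
            "hip_version": None,
            "device_name": None,
        }

    gpu_available = torch.cuda.is_available()
    return {
        "torch_installed": True,
        "gpu_available": gpu_available,
        # Set on ROCm builds of PyTorch; None on other builds.
        "hip_version": getattr(torch.version, "hip", None),
        "device_name": torch.cuda.get_device_name(0) if gpu_available else None,
    }


if __name__ == "__main__":
    print(describe_accelerator())
```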

A clear theme of the day was “open and developer first.” AMD and our partners amplified community contributions as a path to wider hardware choice and competitive performance outside closed stacks.

PyTorch Conference, October 20th - 23rd

Agents in the Wild Panel, Open Agent Summit

As a featured panelist at the Open Agent Summit, Mahdi Ghodsi discussed the emerging world of agentic AI systems across three themes: agentic evaluation, tackling the challenge of measuring nondeterministic agent behavior beyond model outputs; governance and privacy, balancing data control with usability; and agent evolution, exploring how agents are transitioning from simple tools to true collaborators and how protocols like MCP or A2A might define their interactions. He also argued that open software should run on open hardware with no dependency on any specific vendor.


Training at Scale

AMD also published a new white paper, “Training at Scale,” which outlines best practices and system architectures for large-scale model training on AMD hardware. The paper synthesizes guidance on parallelism strategies, communication optimization, memory and resource management, and benchmark case studies that demonstrate scalable performance on AMD Instinct GPU clusters with AMD ROCm software.

Keynote, TorchComms Announcement, and Large-Scale Training

As a Diamond Sponsor at PyTorch Conference 2025, AMD showcased its continued commitment to the open-source AI ecosystem and developer community. Through keynote sessions, panels, technical talks, booth demos, and community activations, AMD demonstrated how AMD ROCm software, AMD Instinct GPUs, and AMD Ryzen AI processor-based AI PCs empower developers to accelerate AI innovation across cloud, edge, and client devices.

Anush Elangovan, Corporate VP of AI Software at AMD, shared how the ROCm open software ecosystem, tightly integrated with PyTorch, enables developers to accelerate AI workloads across diverse environments. Citing day-0 support for major frameworks and frontier models, improved performance in the latest ROCm releases, and expanded Windows support, Anush emphasized the mission of AMD to deliver a unified, open, and high-performance foundation for AI development, spanning data centers to personal devices.

Practical AI Deep Dive Sessions

A keynote panel discussion moderated by Mark Saroufim (GPU MODE) featured Dylan Patel (SemiAnalysis), Sharon Zhou (AMD), Peter Salanki (CoreWeave), and Nitin Perumbeti (Crusoe). Panelists debated trends in accelerator design, deployment, and economics, and emphasized how open hardware and open software are reshaping scalability and collaboration across the AI stack. In technical sessions, IBM and AMD engineers demonstrated enabling vLLM v1 on AMD GPUs with Triton and presented a Triton vLLM backend that achieves state-of-the-art performance on AMD hardware, showing concrete paths to portable, high-performance inference. A separate lightning talk on composable kernels covered improvements to GEMM and SDPA performance on ROCm software, the underlying low-level kernel and runtime work that feeds directly into the higher-level training and inference gains highlighted throughout the week.

Efficient MoE Pre-training at Scale on AMD GPUs with TorchTitan

In a joint AMD–Meta session, presenters Liz Li and Yanyuan Qin (AMD) and Matthias Reso (Meta) introduced efficient Mixture of Experts (MoE) pre-training on AMD GPUs using TorchTitan. The talk covered distributed training optimizations, performance scaling, and best practices for efficient large-model pre-training. The session showcased strong linear scaling and high efficiency across large AMD Instinct GPU clusters (a 1,204-GPU MI325X cluster training DeepSeek v3 and Llama 4 models), illustrating the collaboration between the AMD and Meta teams to validate ROCm performance at hyperscale.
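As background on what makes MoE models different to train, here is a minimal pure-Python sketch of top-k expert routing for a single token. It illustrates the generic technique only and is not TorchTitan's implementation or the router used by any particular model; the function names, the softmax gate, and the toy experts are all assumptions for this example.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_logits, k):
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(token, gate_logits, experts, k=2):
    """Run only the selected experts and combine their outputs by gate weight."""
    output = [0.0] * len(token)
    for index, weight in route_top_k(gate_logits, k):
        expert_out = experts[index](token)
        output = [o + weight * v for o, v in zip(output, expert_out)]
    return output


if __name__ == "__main__":
    # Three toy experts that just scale the token by 1, 3, and 5.
    experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 3.0, 5.0)]
    print(moe_forward([1.0, 2.0], [0.0, 0.0, -100.0], experts, k=2))
```

Because each token activates only k experts, and experts are typically spread across GPUs, tokens have to be exchanged between devices on every MoE layer, which is why communication efficiency matters so much for scaling this kind of model.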

The AMD booth became a central hub for developers to explore new tools, connect with AMD engineers, enter sweepstakes, and learn about the growing suite of developer resources across AMD platforms. Here are some highlights:

Exhibitor Demos

At the AMD booth, we highlighted five AI demos. Let's dive in.

1. Simplifying AI Development with AI Workbench and Resource Manager

The demo showcased how AI Workbench provides a low-code environment for model development, while Resource Manager offers streamlined compute orchestration with granular access control and real-time cluster management. Developers could sign up for early access directly at the booth.

2. Text-to-Video Generation on AMD Instinct™ MI300X GPU

Attendees witnessed cinematic video generation from text prompts using ComfyUI and the Wan 2.2 14B model with LoRA, powered by the AMD Instinct™ MI300X GPU, highlighting exceptional performance and scalability for generative AI.

3. Two-Agent Nutrition Advisor (vLLM + MCP)

This demo illustrated an open, multi-agent workflow using two models running on AMD GPUs:

- A vision orchestrator analyzing products via Qwen3-VL-30B for ingredient data retrieval.

- A nutrition consultant model (Qwen3-30B) analyzing health impact and suggesting alternatives.

Built with vLLM, the demo showed how AMD hardware supports complex reasoning and retrieval pipelines using open models.
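The handoff between the two agents can be sketched in a few lines. This is a hypothetical illustration of the pattern, not the demo's actual code: the dataclasses, the `advise` function, and the stub agents with their sample values are all invented for this example. In a real deployment each callable would wrap a request to a served model, for instance through the OpenAI-compatible HTTP server that vLLM provides.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ProductFacts:
    """What the vision agent extracts from a product photo (hypothetical schema)."""
    name: str
    ingredients: List[str]


@dataclass
class Advice:
    """What the nutrition agent returns (hypothetical schema)."""
    concerns: List[str]
    alternatives: List[str]


VisionAgent = Callable[[bytes], ProductFacts]
NutritionAgent = Callable[[ProductFacts], Advice]


def advise(image: bytes, vision: VisionAgent, nutrition: NutritionAgent) -> Advice:
    """Agent 1 turns the image into structured facts; agent 2 reasons over them."""
    facts = vision(image)
    return nutrition(facts)


# Stub agents with made-up values, standing in for model calls so the flow runs locally.
def stub_vision(image: bytes) -> ProductFacts:
    return ProductFacts(name="granola bar", ingredients=["oats", "palm oil", "sugar"])


def stub_nutrition(facts: ProductFacts) -> Advice:
    flagged = [i for i in facts.ingredients if i in {"palm oil", "sugar"}]
    alternatives = ["unsweetened oat bar"] if flagged else []
    return Advice(concerns=flagged, alternatives=alternatives)


if __name__ == "__main__":
    print(advise(b"<image bytes>", stub_vision, stub_nutrition))
```

Passing structured data between the agents, rather than free text, keeps each model's job narrow and makes either one easy to swap for a different open model.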

4. ComfyUI: Local Generative AI on Radeon GPUs

Developers generated images and 3D visuals locally using ComfyUI powered by PyTorch on Windows and the Radeon AI Pro R9700 GPU, highlighting the commitment of AMD to bringing high-performance generative workflows to desktop and workstation users.

5. Nexa AI: Frontier AI Models on Device

Partnering with Nexa AI, AMD featured NexaSDK, enabling one-line deployment of frontier models on NPU, GPU, or CPU, supporting multiple languages including Python, C++, and Swift. Nexa also previewed a personal on-device AI agent with local RAG capabilities for private, real-time assistance.

Developer Engagements & Giveaways

The AMD booth buzzed with engagement throughout the conference. Developers were invited to enter two giveaways for a chance to get their hands on AMD hardware.

- AMD x Framework Desktop Giveaway - 40 Ryzen™ AI Max+ 395 desktops (128 GB RAM) offered to developers who scanned and signed up.

- Radeon RX 9070 XT Sweepstakes - Two GPUs were raffled each day, with our VP, Sharon Zhou, personally presenting prizes on Day 2 to cheering crowds.

These activations encouraged direct interaction between developers and AMD engineers, building stronger community connections on the show floor.

AMD Reception After-Party at Press Club

To conclude the final day, AMD hosted an exclusive reception at the Press Club in San Francisco. The evening brought together developers, partners, and industry peers for relaxed networking over food and drinks. The event celebrated collaboration within the open-source community and reinforced our message of advancing AI together through shared innovation.

Advancing AI Together

Attendees at Open Source AI Week in San Francisco heard one big story: open source projects, vendors, and institutions are building the future of AI in the open, together.

Across AMD AI DevDay, the Open Agent Summit, and PyTorch Conference, keynotes and technical talks ranged from low-level kernels and communication libraries to monitoring dashboards, one-click notebooks, and hyperscale training runs.

Ongoing engineering and community contributions by AMD, from developer challenges and ROCm software enhancements to TorchTitan and TorchComms integrations, the Hugging Face dashboard, one-click Jupyter notebooks, and public PyTorch test coverage, showed how vendor engineering, community contributions, and cross-company collaboration combine to make PyTorch and other frameworks performant and portable across a diverse range of accelerators. Sessions with partners like Meta and IBM (vLLM + Triton) showcased concrete, portable paths to state-of-the-art training and inference, while work on Composable Kernel and CI/CD is lowering barriers to entry for developers as they move from prototype to production.

The net result is more choice, better interoperability, and faster time to scale. A healthier ecosystem gives developers more freedom to choose the right hardware for their needs without sacrificing speed, tooling, or reproducibility. Open Source AI Week reinforced the message that multi-vendor collaboration and community momentum are accelerating the entire stack and unlocking new opportunities for research and production AI. For readers who want deeper technical detail, see the AMD ROCm blog post on PyTorch and AMD GPUs, which explains our integration approach, day-0 framework support, and practical guidance for running PyTorch on ROCm-enabled Instinct GPUs. You can also review our public PyTorch test coverage and CI dashboards for up-to-date integration status and validation data.

With a growing ROCm™ ecosystem, deep PyTorch integration, and a thriving community, AMD is committed to empowering developers to build, fine-tune, and deploy the next generation of AI.

Additional Developer Resources

- Developer Resources Portal – Access SDKs, libraries, documentation, and tools to accelerate AI, HPC, and graphics development.

- AI@AMD X – Stay updated with the latest software releases, AI blogs, tutorials, and news.

- Developer Cloud – Start projects on AMD Instinct™ GPUs with $100 in complimentary credits for 30 days, offering an easy on-ramp for experimentation and benchmarking.

- Developer Central YouTube – Explore hands-on videos, demos, and deep-dive sessions from engineers and community experts.

- Developer Community Discord – Join global developer communities, share feedback, and exchange optimization tips directly with peers and AMD specialists.