Rack Scale AI and the Promise of Agentic AI

Jul 10, 2025

When generative AI tools first gained mainstream traction in late 2022, they captured the world's attention with their ability to produce text, code, and imagery on demand. But their real significance was in signaling the start of a much broader transformation—one that’s now taking shape through Agentic AI.

Agentic AI represents a new frontier: AI systems capable of autonomous, multi-step decision-making that spans tasks, tools, and even domains. Unlike traditional prompt-based systems, Agentic AI dynamically coordinates actions across multiple models and applications, acting on behalf of users and organizations to drive outcomes. It's the next evolution of enterprise productivity, unlocking previously untapped operational potential.

But this leap in complexity brings immense computing and infrastructure requirements.

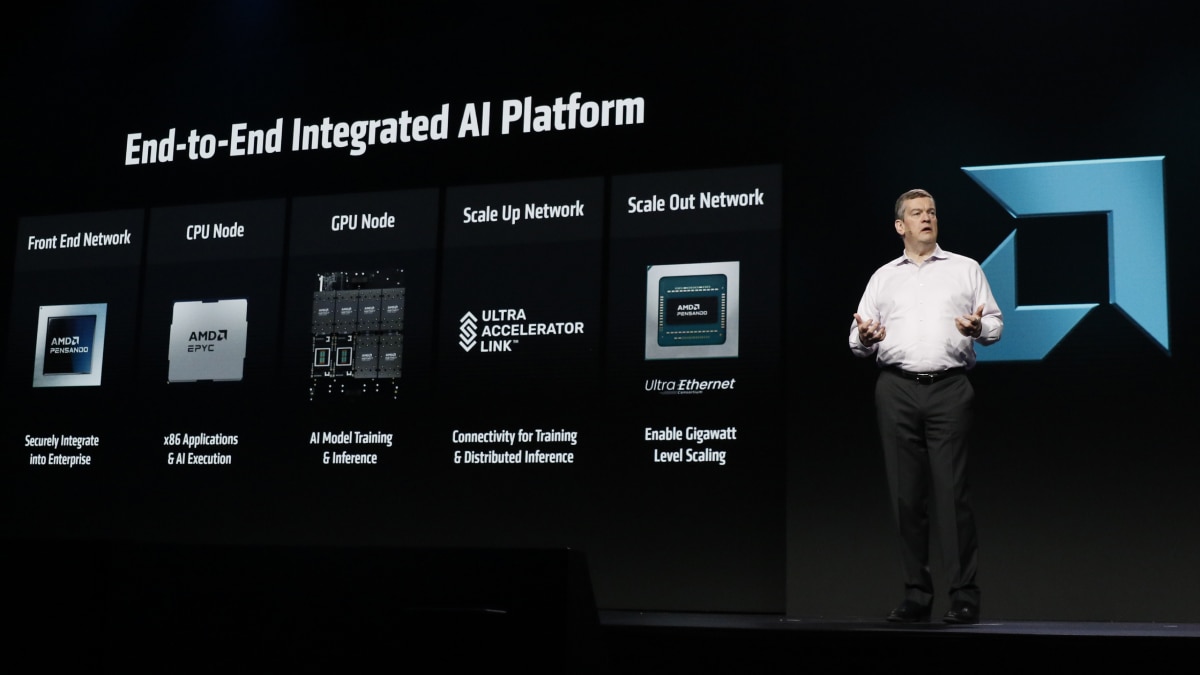

Agentic AI requires not just powerful GPUs but also high-performance CPUs and networking. To support this, AMD is designing rack-scale infrastructure that brings the best of the AMD AI portfolio together in a single, high-performance system. AMD is uniquely positioned to power every layer of this stack, from AMD Instinct™ GPUs and EPYC™ CPUs to Pensando™ DPUs and scale-out networking, all underpinned by open, flexible, and programmable infrastructure designed for modern AI.

Rack-Scale AI Infrastructure, Purpose-Built for the Enterprise

At Advancing AI 2025, AMD executives and partners discussed the value and necessity of open, rack-scale infrastructure for AI deployments. With its broad portfolio, AMD can build truly end-to-end rack-scale solutions. These solutions take advantage of leadership performance across three components: AMD EPYC CPUs to process data, manage GPUs, and run enterprise applications; AMD Instinct GPUs to power agentic model execution; and AMD Pensando networking solutions to access data quickly and reliably. Paired with scale-up and scale-out networking, these technologies let you grow from small enterprise deployments to gigawatt-scale data centers.

“At AMD, we know that agentic AI isn’t just a visionary concept, it is emerging here today,” said Forrest Norrod, Executive Vice President and General Manager of Data Center Solutions at AMD. “Our customers want it, the industry demands it, and we are enabling it with a leadership portfolio of products and our open rack infrastructure.”

Introducing “Helios”: the Future of AI Rack Solutions from AMD

At Advancing AI 2025, AMD CEO Dr. Lisa Su offered a sneak peek at the next stage of AI infrastructure development at AMD when she previewed AMD Instinct MI400 GPUs and “Helios”. “Helios” is built to deliver the compute density, memory bandwidth, performance, and scale-out bandwidth needed for the most demanding AI workloads, in a ready-to-deploy solution that accelerates time to market. It has been designed from the ground up as a unified system that brings the broad AMD enterprise AI portfolio together in a single platform.

“Helios” is planned for availability in 2026, and its design will incorporate the latest AMD technologies: AMD Instinct MI400 GPUs, 6th Gen AMD EPYC CPUs, and AMD Pensando “Vulcano” NICs, all integrated into an OCP-compliant rack with support for UALink and Ultra Ethernet. This infrastructure platform will be a launchpad for what’s next in enterprise AI.

To learn more about Agentic AI and the rack-scale infrastructure that powers it, watch the replay of Advancing AI 2025.