AMD Expands AI Momentum with First MLPerf Training Submission

Jun 04, 2025

As AI advances, training performance has become critical, especially as organizations shift from using pretrained models as-is to fine-tuning large-scale generative AI models for their own needs.

Training is a strategic focus for AMD and today marks a major milestone: our first-ever MLPerf Training submission. With AMD Instinct™ MI300 Series GPUs and the AMD ROCm™ software stack, we’re demonstrating the strength of our platform with competitive performance on Instinct MI300X GPUs, and in some cases leading performance on Instinct MI325X GPUs, in one of today’s most important workloads: fine-tuning the Llama 2-70B-LoRA model.

This submission underscores a key industry shift. Instead of building foundation models from scratch, enterprises are rapidly embracing techniques like LoRA to adapt models faster and more cost-effectively. AMD is helping lead that shift.

Backed by high memory bandwidth, versatile compute, and an open, optimized software stack, Instinct MI300 Series GPUs are built to meet the demands of modern AI infrastructure, enabling organizations to train and fine-tune massive models with efficiency and scale.

AMD Instinct MI300 Series: engineered for next-gen AI training.

Debut AMD MLPerf Training Results Deliver Competitive Performance in Fine-Tuning

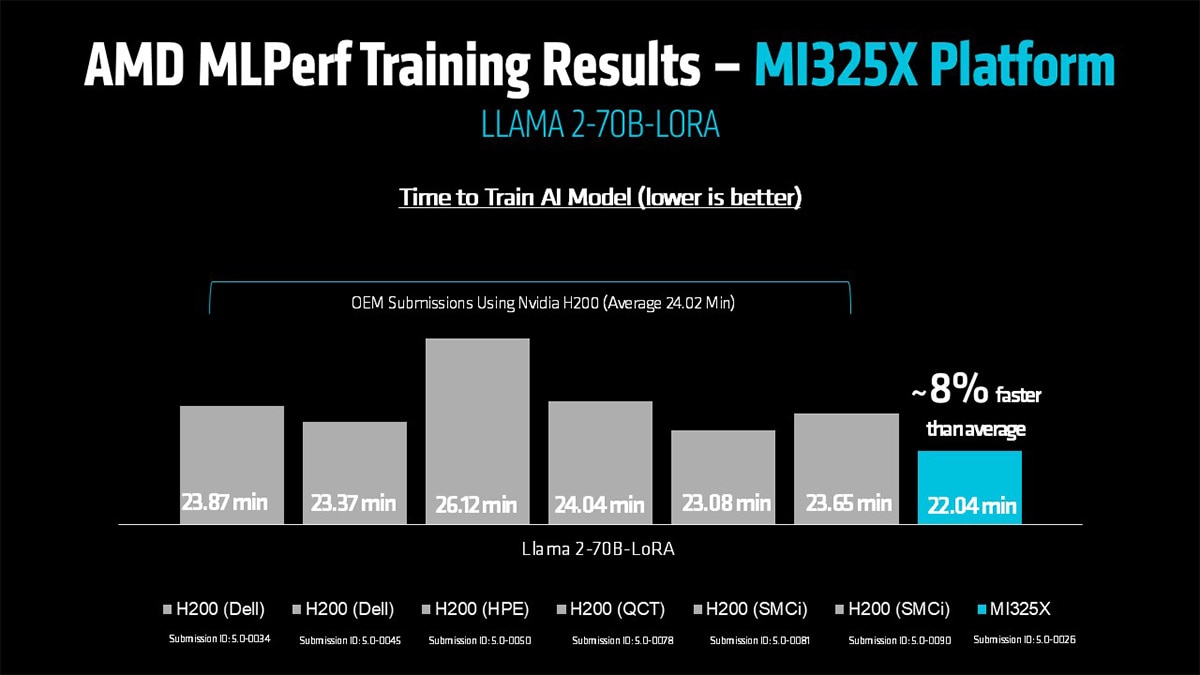

MLPerf Training v5.0, released on June 4th, marks AMD’s debut MLPerf Training submission. Highlights include leadership performance on Instinct MI325X GPUs and the first-ever multi-node training submission on Instinct hardware, demonstrating the strength of the AMD AI training portfolio.

In the AMD MLPerf Training v5.0 submission, the Instinct MI325X platform outperforms the NVIDIA H200 platform by up to 8% when fine-tuning Llama 2-70B-LoRA, a widely adopted workload for customizing large language models. This is a strong signal that AMD is competitive in widely adopted AI workloads going forward.

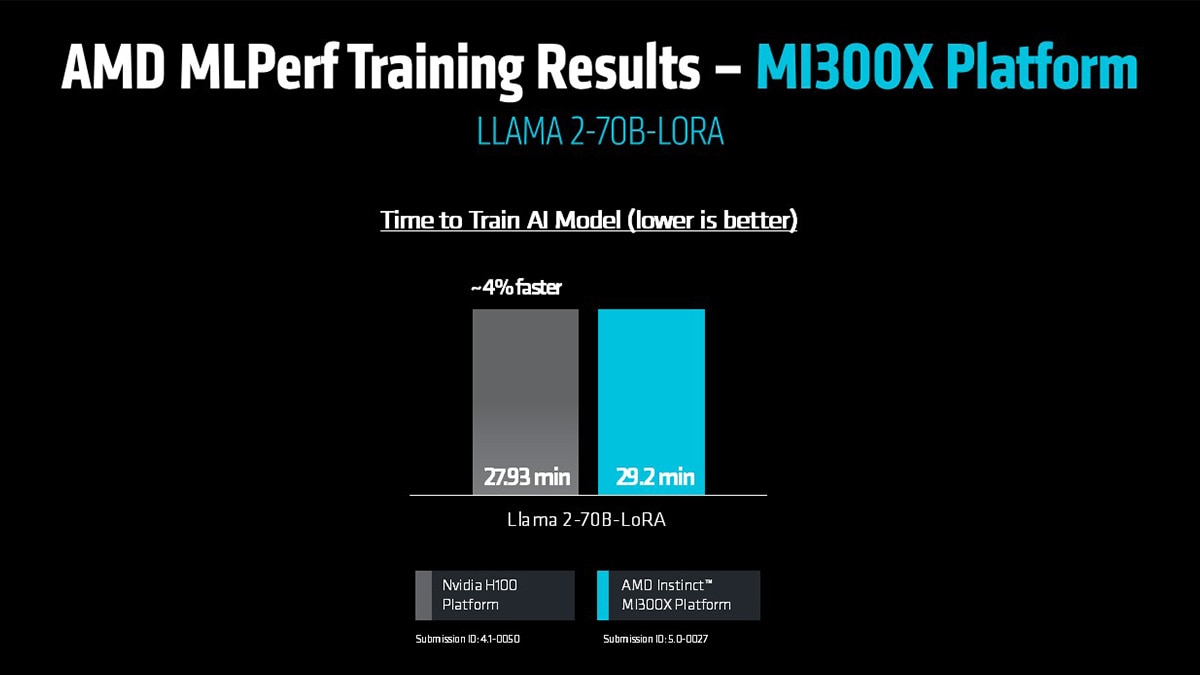

At the same time, the AMD Instinct MI300X platform delivers competitive performance compared to the NVIDIA H100 platform on this same workload, validating that both GPU platforms in the Instinct MI300 Series show strong potential to address a broad range of training needs, from enterprise applications to cloud-scale environments.

Industry-Wide Validation on Llama 2-70B-LoRA Training

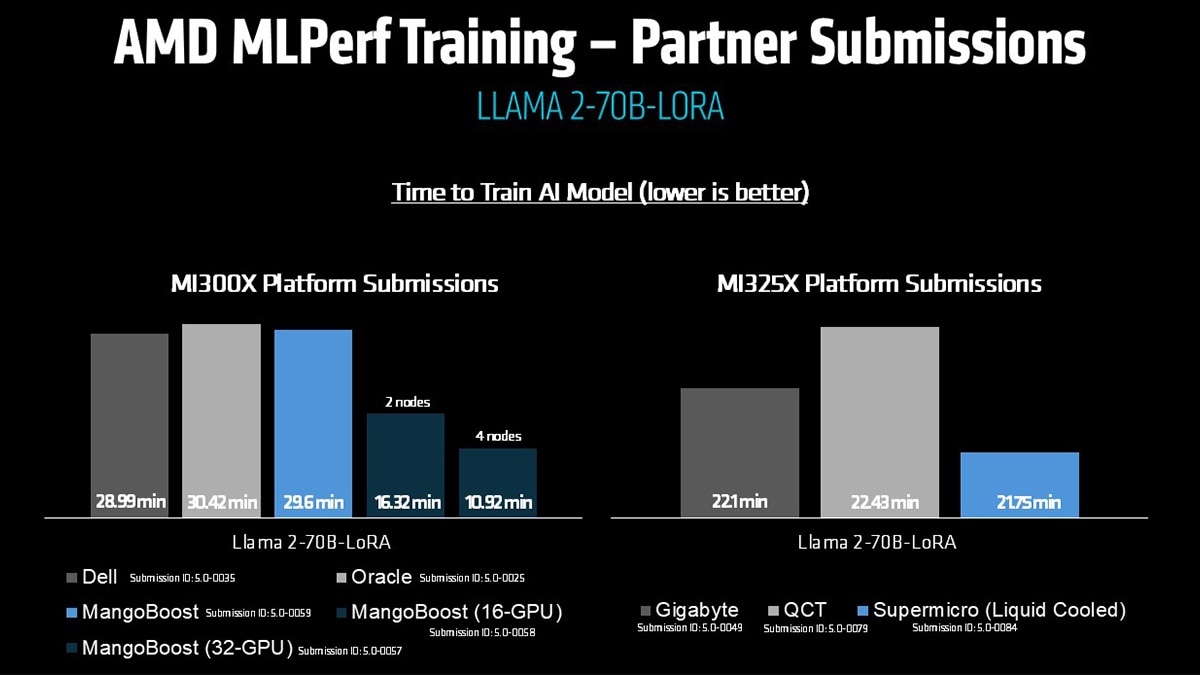

In addition to AMD’s own submission, six OEM and ecosystem partners submitted MLPerf Training results using AMD Instinct MI300 Series GPUs, demonstrating that AMD Instinct performance is not just a lab benchmark, but reproducible across a wide range of platforms and configurations. All submissions were based on the same real-world benchmark, fine-tuning the Llama 2-70B-LoRA model, one of the most important AI workloads for today’s generative AI developers.

These third-party results reinforce that Instinct MI300X and MI325X deliver consistently across diverse infrastructure environments, and in several cases, set new industry milestones.

Supermicro became the first company ever to submit training results to MLPerf using a liquid-cooled AMD Instinct solution. Using a liquid-cooled Instinct MI325X platform, Supermicro achieved a time-to-train score of 21.75 minutes, demonstrating not only top-tier performance but also the thermal efficiency and scaling potential of advanced cooling solutions in dense AI deployments.

MangoBoost raised the bar with a series of groundbreaking submissions:

On a single-node Instinct MI300X platform, MangoBoost completed training in just 29.6 minutes. More significantly, MangoBoost delivered the first-ever multi-node training submission powered by AMD Instinct GPUs: a 2-node (16-GPU MI300X) setup with a time-to-train score of 16.32 minutes, and a 4-node (32-GPU MI300X) configuration that completed training in just 10.92 minutes.

These multi-node results not only showcase the scalability of the MI300X platform but also mark a major milestone for AMD in distributed AI infrastructure. This is a new era of multi-GPU, multi-node performance powered by open software and industry-leading hardware.
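To put the MangoBoost numbers in perspective, the short sketch below computes speedup and scaling efficiency from the published time-to-train scores. It assumes MangoBoost’s own single-node (8-GPU) result of 29.6 minutes as the baseline and treats ideal scaling as time shrinking in proportion to node count; the helper names `speedup` and `scaling_efficiency` are hypothetical and used only for illustration. This is rough arithmetic, not an MLPerf metric: it ignores run-to-run variance and any differences in hyperparameters between the single-node and multi-node runs.

```python
# Rough scaling arithmetic on MangoBoost's published Llama 2-70B-LoRA
# time-to-train results (minutes). Assumption: the single-node (8-GPU)
# run is the baseline, and ideal scaling means time shrinks by the node count.

BASELINE_NODES = 1
BASELINE_MINUTES = 29.6  # single-node MI300X (8 GPUs)

RESULTS = {
    2: 16.32,  # 2 nodes, 16 MI300X GPUs
    4: 10.92,  # 4 nodes, 32 MI300X GPUs
}


def speedup(baseline_minutes: float, minutes: float) -> float:
    """How many times faster a run finished than the baseline run."""
    return baseline_minutes / minutes


def scaling_efficiency(baseline_minutes: float, minutes: float, nodes: int,
                       baseline_nodes: int = 1) -> float:
    """Observed speedup divided by the ideal (linear) speedup."""
    ideal = nodes / baseline_nodes
    return speedup(baseline_minutes, minutes) / ideal


if __name__ == "__main__":
    for nodes, minutes in sorted(RESULTS.items()):
        s = speedup(BASELINE_MINUTES, minutes)
        e = scaling_efficiency(BASELINE_MINUTES, minutes, nodes, BASELINE_NODES)
        print(f"{nodes} nodes: {minutes:.2f} min, speedup {s:.2f}x, efficiency {e:.0%}")
```

Under these assumptions, the 2-node run finishes about 1.81x faster than the single-node run (roughly 91% of linear) and the 4-node run about 2.71x faster (roughly 68% of linear). Some departure from linear scaling is expected in distributed training, since inter-node communication adds overhead and larger global batch sizes can require more steps to converge.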

Dell submitted on an Instinct MI300X 8-GPU platform, completing training in 28.99 minutes.

Oracle followed with a strong result of 30.42 minutes using the same MI300X 8-GPU configuration.

Gigabyte submitted on an MI325X 8-GPU platform, hitting 22.1 minutes.

QCT posted a similar result on an MI325X 8-GPU setup, at 22.43 minutes.

These partner submissions don’t just validate AMD’s internal results; they demonstrate that Instinct MI300 Series GPUs and platforms perform across air-cooled and liquid-cooled systems, single-node and multi-node clusters, and multiple OEM configurations. The power, flexibility, and openness of AMD Instinct GPUs are being adopted, and pushed to new heights, by innovators across the ecosystem.

Commenting on the debut MLPerf Training publication submitted by AMD, David Kanter, Head of MLPerf, said: “I'm thrilled to congratulate AMD on their first MLPerf Training results. Not only did they submit two different accelerators, but they also enabled their ecosystem of partners - an impressive accomplishment for a first submission. We are looking forward to many more in the future.”

ROCm Software: Open, Optimized, and Driving Real-World Results

The performance gains shown by AMD are powered by the rapid evolution of AMD ROCm™ V6.5 software, AMD’s open software stack. Over the past year, ROCm software has matured into a robust training platform. In the Llama 2-70B-LoRA fine-tuning task, ROCm was key to unlocking top-tier performance on Instinct MI325X and MI300X GPUs, with improvements such as Flash Attention, Transformer Engine support, and optimizer-level tuning. More in-depth information on these optimizations can be found in our technical blog. In addition, we have released the optimized Docker containers used in this work, along with easy-to-use reproduction instructions.

These results establish that an open software stack, backed by deep engineering optimizations, can rival and in some cases outperform proprietary ecosystems.

For customers scaling generative AI, ROCm software delivers the performance, flexibility, and developer support needed to move fast.

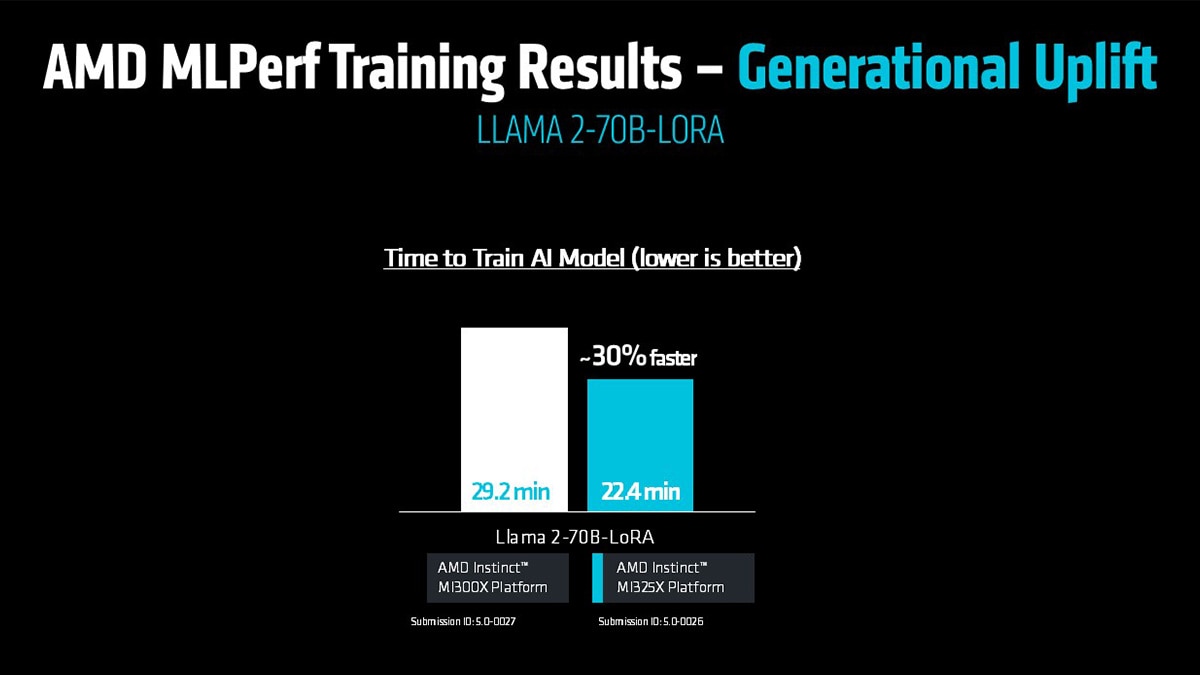

Outpacing the Competition in Generational Performance

In AI infrastructure, moving to a new generation of hardware isn't just about staying current; it’s essential for keeping pace with rapidly growing model sizes, faster training requirements, and evolving deployment needs. Organizations investing in AI expect meaningful performance gains with each new platform, because the difference between incremental improvement and true advancement can have a major impact on training costs, speed to market, and competitive advantage.

The AMD Instinct MI325X GPU sets a new standard for what a generational leap can deliver. In 8-GPU platform fine-tuning workloads such as Llama 2-70B-LoRA, the Instinct MI325X offers up to a 30% performance uplift compared to the Instinct MI300X, a significant generational advancement that accelerates large-scale AI development and shortens training timelines.

The Future is Open and Accelerated

The first AMD MLPerf Training submission marks a major milestone, but it’s only the beginning. With competitive and, in critical cases, leading performance on key AI workloads, AMD Instinct MI300 Series GPUs provide customers with new options for scaling AI innovation.

As models grow larger and AI use cases expand, AMD will continue to drive forward with open, high-performance solutions that empower customers to train, fine-tune, and deploy AI faster and more flexibly than ever before.

The momentum behind AMD Instinct is real, and it's just getting started.

Be sure to check out the upcoming blog from our partner MangoBoost, where they share insights from their own MLPerf Training submission powered by AMD Instinct™ MI300X GPUs. It’s a great opportunity to see how our ecosystem is driving real-world AI performance. (https://www.mangoboost.io/resources/blog/mangoboost-sets-a-new-standard-for-multi-nodes-llama2-70b-lora-on-amd-mi300x-gpu)
