Accelerating Generative AI: How AMD Instinct™ GPUs Delivered Breakthrough Efficiency and Scalability in MLPerf Inference v5.1

Sep 09, 2025

Why This Submission Matters

Generative AI is transforming industries, creating unprecedented demand for compute performance, cost efficiency, and scalable infrastructure. Organizations are deploying larger models, serving more users, and driving the need for faster inference at lower cost.

That’s why MLPerf Inference matters, it’s the industry’s gold standard for evaluating how GPUs handle real-world AI workloads.

In the latest AMD MLPerf Inference v5.1 submission, Instinct™ GPUs reached a major milestone: for the first time, AMD submitted results across three generations of hardware, MI300X, MI325X, and the all new MI355X GPUs.

This submission tells a story of innovation, efficiency, and ecosystem maturity, showing how AMD is helping customers scale generative AI deployments faster, more predictably, and more cost-effectively.

Accelerating the AMD Instinct Platform

Over the past 18 months, AMD has accelerated its AI roadmap to meet customer needs for generative AI:

- AMD Instinct MI300X GPU - Launched in 2023, the MI300X became the most widely deployed Instinct GPU, establishing deployment readiness for large-scale inference and powering today’s generative AI environments.

- AMD Instinct MI325X GPU - Launched in 2024 with expanded 256GB HBM3E memory and higher compute density, the MI325X GPU enabled customers to deploy larger LLMs and support more concurrent inference sessions.

- AMD Instinct MI355X GPU - Launched in 2025, the MI355X GPU features all new AMD CDNA™4 architecture, optimized for efficiency, scalability, and serving ultra-large models with up to 288GB HBM3E and supports up to a 520B parameter AI model on a single GPU1.

This rapid cadence reflects the hyper-focus AMD has on enabling practical AI at scale. MLPerf v5.1 demonstrates that the AMD Instinct portfolio is evolving beyond raw performance, it’s about efficiency, cost optimization, and flexibility for real-world deployments.

Breakthroughs from the AMD MLPerf Inference 5.1 Submission

Rather than chasing peak benchmark leadership performance, submissions made by AMD focused on efficiency, scalability, and customer value. Several breakthroughs stand out:

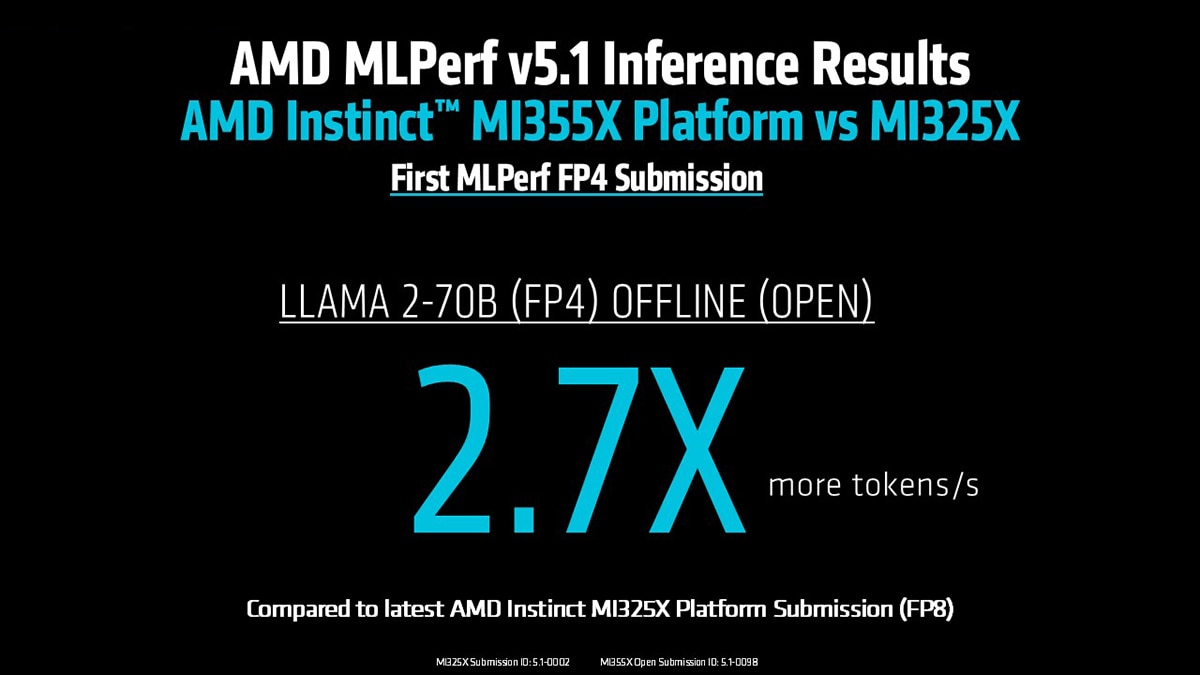

1. FP4 Precision: A Breakthrough in Inference Efficiency

A major highlight from the AMD MLPerf Inference v5.1 submission is the introduction of FP4 precision on the Instinct MI355X GPU, unlocking next-generation efficiency for generative AI inference.

It’s worth mentioning that these results were submitted in the open category but fully satisfied all the same rules and requirements as closed submissions, demonstrating production-ready performance and competitive efficiency compared to the competition.

On the Llama 2 70B Server benchmark, the AMD Instinct MI355X delivered a 2.7X increase in tokens per second compared to submitted Instinct MI325X GPU results in FP8, all without sacrificing accuracy.

For customers, FP4 delivers clear benefits:

- Serve larger models faster - Run ultra-large LLMs with fewer GPUs and higher throughput.

- Lower cost per query - Drive greater efficiency and reduce infrastructure costs.

- Maintain accuracy – AMD ROCm™ software optimizations ensure FP4 inference matches FP8 quality, enabling production-ready deployments.

- Confident scalability - FP4 positions infrastructure to handle the next wave of parameter-heavy models efficiently.

With AMD CDNA 4 architecture, 288GB of HBM3E memory, and ROCm 7 software optimizations, the Instinct MI355X GPU sets a new bar for efficient, competitive generative AI inference at scale.

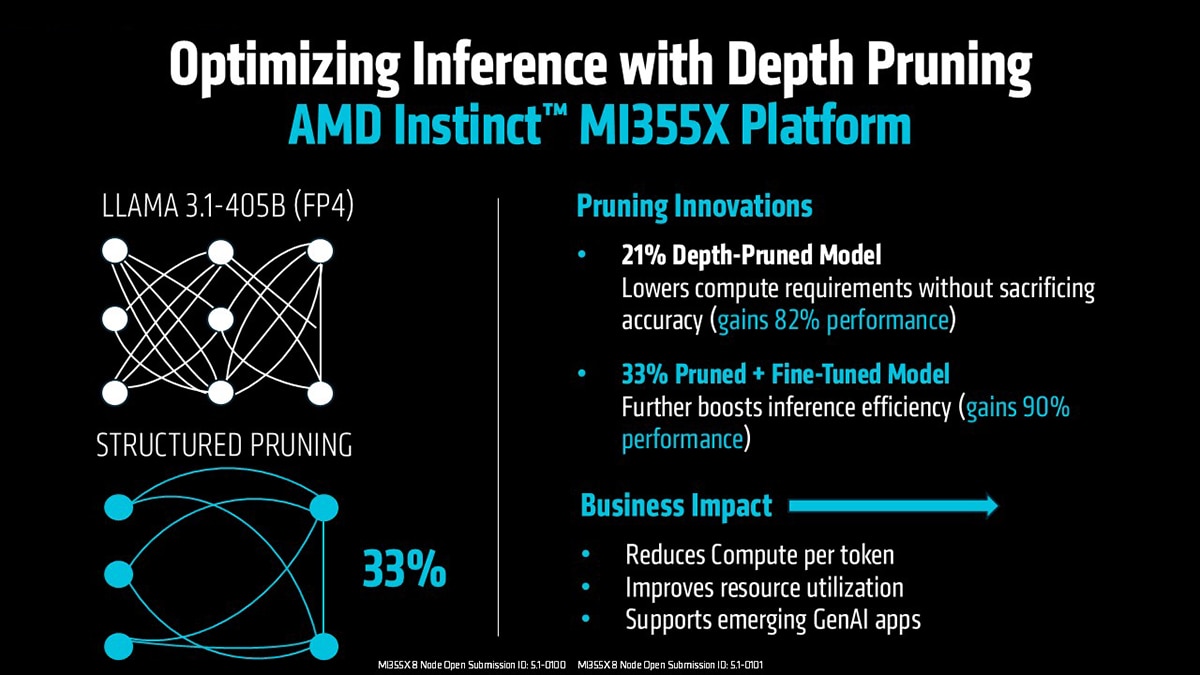

2. Structured Pruning Accelerates Ultra-Large Models

One of the most exciting highlights from the AMD MLPerf Inference v5.1 submission is the work on structured pruning, a highly innovative approach that combines hardware efficiency with intelligent software optimization to tackle one of the biggest customer challenges: how to serve massive models at scale without exploding infrastructure costs.

Using the AMD Instinct MI355X GPU and ROCm software pruning libraries, the team optimized Llama 3.1 405B, one of the largest models in the industry today, achieving remarkable results:

- A 21% depth-pruned model delivered an 82% increase in inference throughput, without sacrificing accuracy.

- A 33% pruned + fine-tuned model pushed efficiency even further, achieving up to a 90% performance uplift while still maintaining accuracy.

This innovation matters because it directly addresses real-world customer pain points:

- Reduce infrastructure cost - By pruning non-critical model layers, enterprises can serve the same workloads on fewer GPUs.

- Accelerate time-to-insight - Optimized models deliver faster responses for high-volume, latency-sensitive inference.

- Maintain accuracy, preserve quality - Even at 405B+ parameters, structured pruning maintains output quality, unlocking cost savings without compromise.

- Deploy larger models faster - Customers can now run ultra-large LLMs on infrastructure they already have, instead of waiting for massive multi-rack deployments.

Structured pruning represents a breakthrough moment in AI strategy for AMD, showing that innovation isn’t just about pushing peak performance numbers but about finding smarter ways to scale generative AI efficiently and affordably.

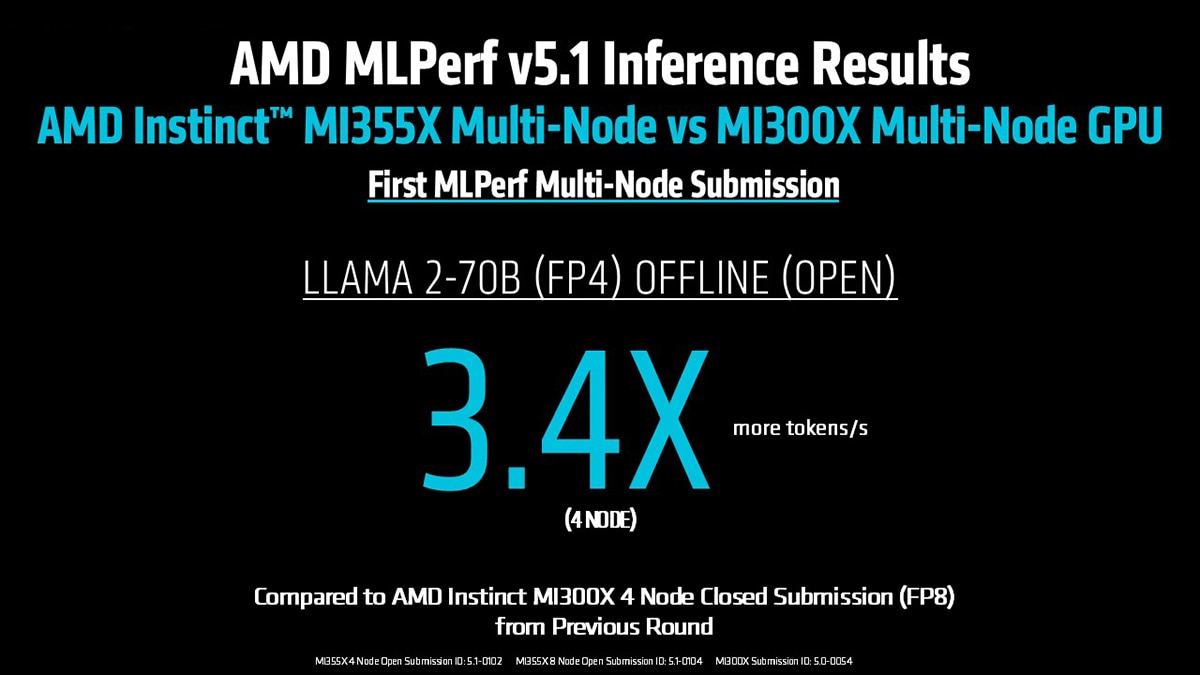

3. Scaling Smoothly from Single Node to 8 Nodes

Another highlight of the AMD MLPerf Inference v5.1 submission is the demonstration of seamless scaling from single-GPU inference to large multi-node clusters, unlocking a new level of flexibility, efficiency, and customer choice when deploying generative AI at scale.

Using the AMD Instinct MI355X GPU and ROCm software multi-node optimizations, the submission shows that scaling performance can be predictable, efficient, and cost-effective:

- A 4-node MI355X FP4 cluster delivered a 3.4X increase in tokens/sec on Llama 2 70B Offline compared to a 4-node MI300X FP8 configuration from the previous MLPerf round.

- The first-ever 8-node MI355X GPU submission showcased linear scaling and achieving high throughput. (To learn more, see the submission ID on the slide below.)

This matters because customers running ultra-large LLMs need confidence that scaling infrastructure won’t mean scaling inefficiency. The results demonstrate that AMD ROCm software and Instinct MI355X GPUs work hand-in-hand to solve this problem:

- Faster scaling, fewer limits - MI355X clusters scale smoothly up to 8 nodes without diminishing returns, enabling customers to serve more users or larger models.

- No wasted compute - ROCm software’s high-speed interconnect optimizations and communication libraries ensure GPUs operate at peak utilization across nodes.

- Lower total cost - Predictable scaling means organizations can increase deployments step-by-step instead of over-provisioning resources upfront.

- Future-ready infrastructure - These results prove Instinct GPUs can handle next-generation workloads requiring multi-node scaling from day one.

For organizations deploying generative AI at enterprise scale, this submission demonstrates that AMD Instinct MI355X platforms deliver scaling that works as expected, enabling customers to add capacity seamlessly while keeping efficiency and predictability at the center.

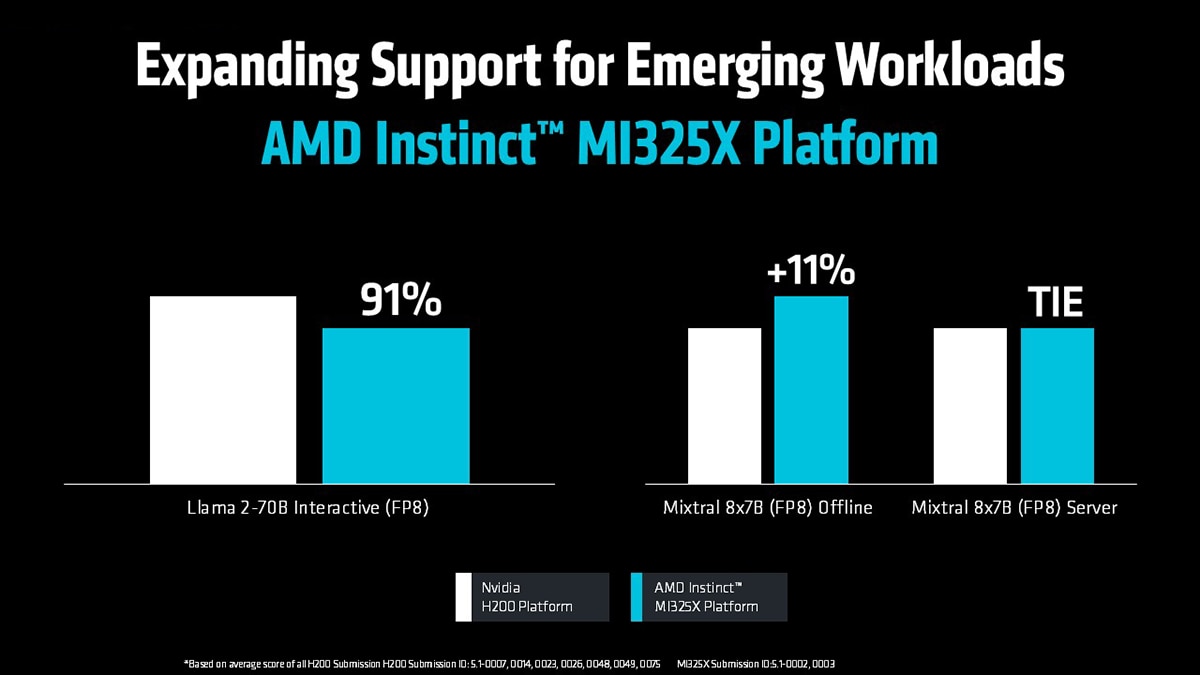

4. AMD Instinct™ MI325X Expands Workload Coverage and Flexibility

The AMD MLPerf Inference v5.1 submission also introduced first-time Instinct MI325X GPU results on several emerging generative AI workloads, giving customers new proof points for deploying large-scale inference efficiently.

These results compare single-node AMD Instinct MI325X platform performance against the average of all NVIDIA H200-SXM platform partner submissions in this MLPerf round, providing a balanced and fair competitive view.

Key highlights:

- Llama 2-70B Interactive (FP8) - MI325X achieves 88% of the averaged H200-SXM performance, demonstrating readiness for real-time, conversational generative AI use cases.

- Mixtral 8x7B (FP8):

o Offline inference - MI325X leads with a +11% throughput advantage.

o Server inference - Delivers performance parity with averaged H200-SXM submissions.

For customers, these results deliver clear benefits:

- Support for next-generation models – AMD Instinct MI325X GPUs demonstrate strong performance on emerging workloads like Llama 2-70B Interactive and Mixtral, showing readiness for future generative AI applications.

- Balanced performance and cost efficiency - High throughput combined with 256GB of HBM3E memory enables running large LLMs cost-effectively without over-provisioning infrastructure.

- Flexibility for diverse deployment models - With strong performance across offline, server, and interactive inference, AMD Instinct MI325X GPUs support a wide range of real-world use cases.

- Confidence through competitive positioning – AMD Instinct MI325X GPUs deliver performance on par with or better than the averaged H200-SXM results in several scenarios, providing customers with validated efficiency at scale.

These results highlight how the AMD Instinct MI325X GPU continues to expand workload coverage, giving organizations a proven, efficient platform for deploying today’s and tomorrow’s generative AI models.

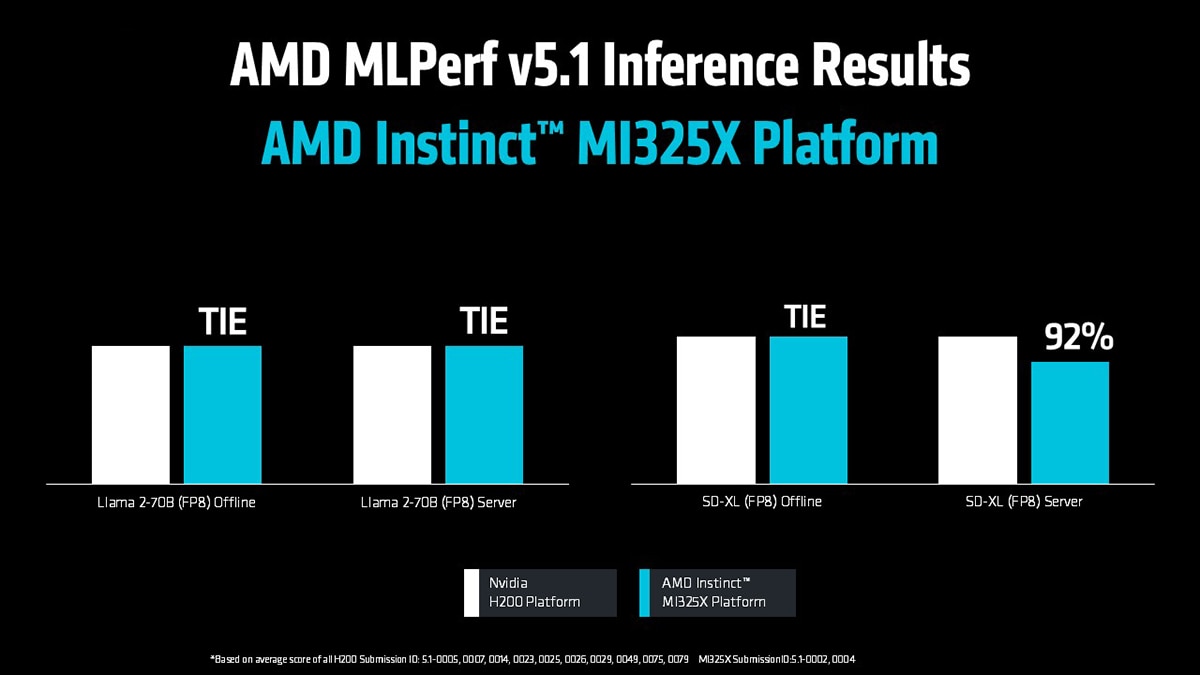

5. AMD Instinct MI325X GPUs Deliver Competitive Performance on Core AI Workloads

In the AMD MLPerf Inference v5.1 submission, Instinct MI325X GPUs also demonstrated competitive performance on previously submitted workloads, reinforcing its position as a balanced, cost-efficient platform for large-scale generative AI inference.

These results compare single-node Instinct MI325X platform performance against the average of all NVIDIA H200-SXM platform partner submissions in this round to provide a fair and consistent view.

Llama 2-70B-99.9 (FP8)

- Offline inference - Instinct MI325X GPUs deliver performance parity with averaged H200-SXM results.

- Server inference – Instinct MI325X GPUs also tie averaged H200-SXM performance in sustained multi-query environments.

SD-XL (FP8)

- Offline inference – AMD Instinct MI325X GPUs nearly matched the results of H200-SXM with 97% of its averaged results, providing strong performance for text-to-image generation workloads.

- Server inference – AMD Instinct MI325X GPUs achieve 88% of averaged H200-SXM performance, demonstrating efficiency in concurrent image-generation scenarios.

These competitive results are enabled by continuous ROCm software optimizations that improve inference performance generation over generation:

- Optimized inference kernels - Deliver higher throughput on FP8 workloads like Llama 2-70B-99.9 and SD-XL.

- Enhanced framework integrations - Tight tuning with PyTorch and TensorFlow ensures more efficient hardware utilization.

- Improved communication libraries - Enable MI325X to handle multi-query server scenarios with reduced latency.

For customers, these results highlight key benefits:

- Consistent performance across workloads – AMD Instinct MI325X GPUs handle LLMs, vision models, and multi-modal inference with predictable throughput.

- Efficiency at scale - AMD ROCm software drives optimizations that allow Instinct MI325X GPUs to achieve competitive results while leveraging its 256GB HBM3E memory for large-model deployments.

- Future-ready platform platform - Continuous ROCm improvements ensure MI325X keeps delivering high performance as frameworks, workloads, and model sizes evolve.

By combining hardware capability with AMD ROCm-driven software maturity, Instinct MI325X GPUs deliver validated, competitive performance across diverse AI workloads, empowering customers to deploy text, conversational, and image-generation models efficiently at scale.

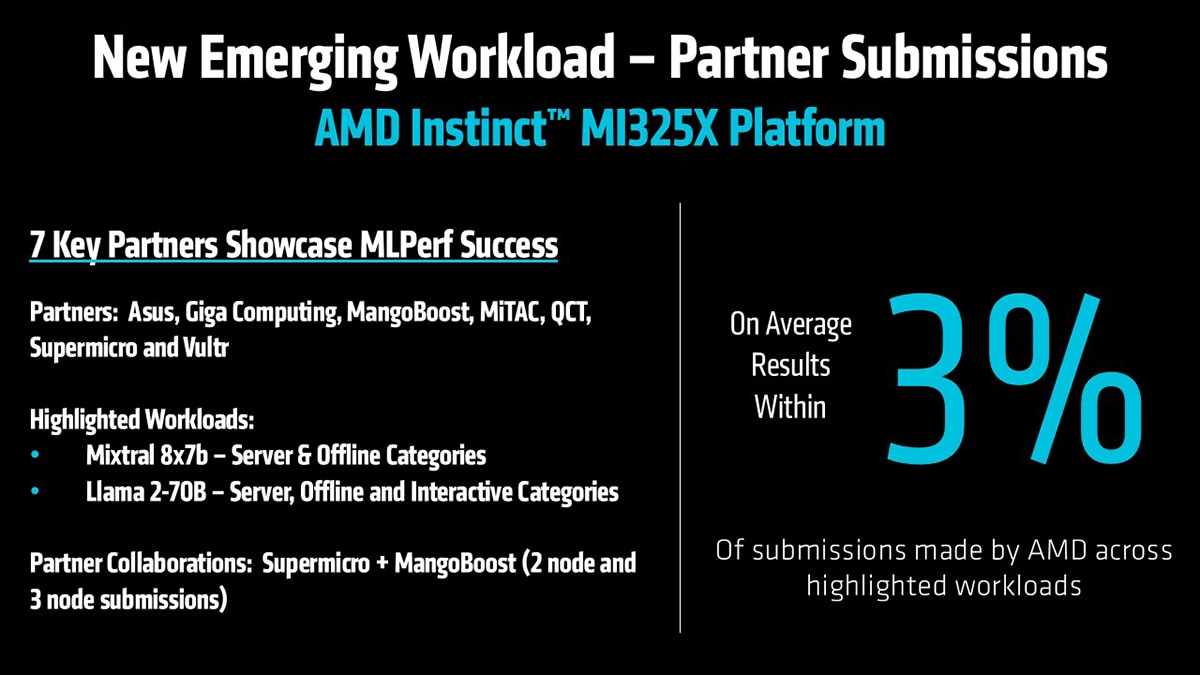

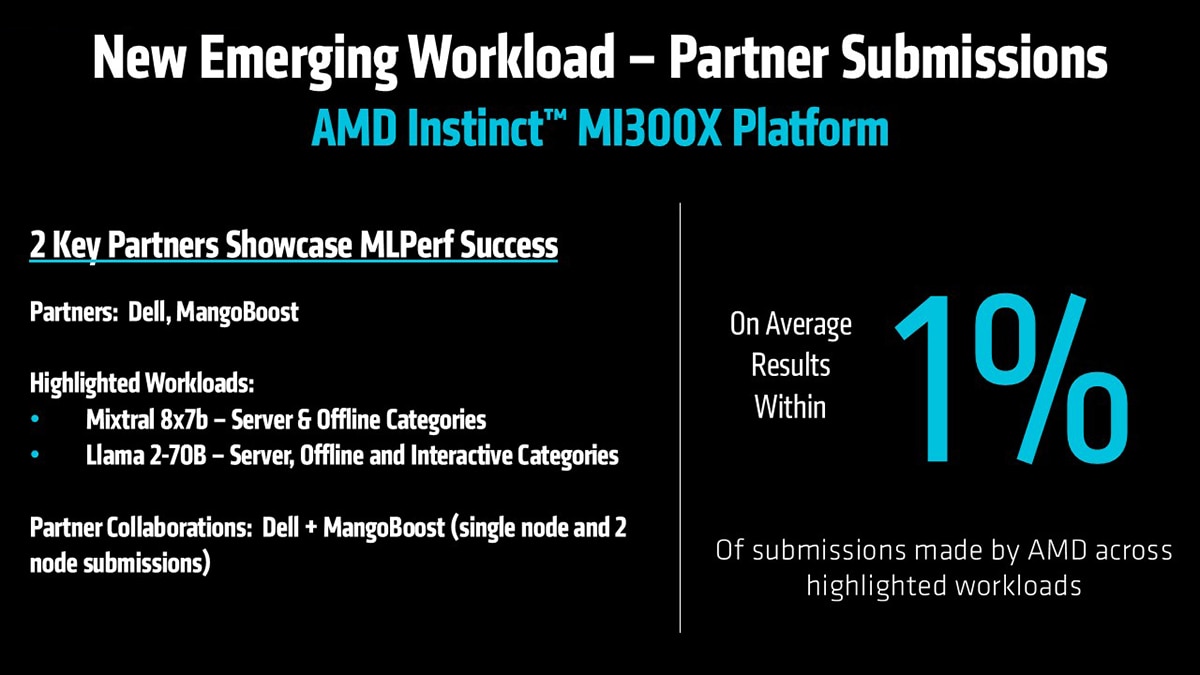

Partner Submissions Validate Ecosystem Consistency and Scalability

The MLPerf Inference v5.1 submission highlights the growing strength of the Instinct GPU ecosystem, with partners delivering performance that is nearly identical to AMD reference results across both Instinct MI325X and MI300X platforms.

- Instinct MI325X GPU partner submissions — from Asus, Giga Computing, MangoBoost, MiTAC, QCT, Supermicro, and Vultr - landed within 3% of the results submitted by AMD on MI325X across Llama 2-70B and Mixtral 8x7B workloads.

- Instinct MI300X GPU partner submissions, including Dell and MangoBoost, achieved within 1% of AMD reference performance, demonstrating deployment-ready consistency for widely adopted Instinct platforms.

The submission also showed collaborative scaling results:

- Supermicro + MangoBoost partnered on 2-node and 3-node MI325X submissions, demonstrating seamless horizontal scaling for high-volume inference.

- Dell + MangoBoost partnered on single-node and 2-node MI300X submissions, validating scalability and workload portability for production-ready environments.

For customers, these results highlight three key takeaways:

- Consistent performance - AMD Instinct GPUs deliver predictable, reproducible results across OEM, ODM, and cloud platforms.

- Deployment flexibility - Enterprises can choose from a broad partner ecosystem while maintaining validated efficiency.

- Scalable solutions - Collaborative submissions prove that AMD Instinct platforms support multi-node scaling in real-world generative AI deployments.

With results this consistent, AMD Instinct GPUs offer a trusted, production-ready ecosystem, giving customers confidence that deployments will perform as expected regardless of hardware configuration or partner platform.

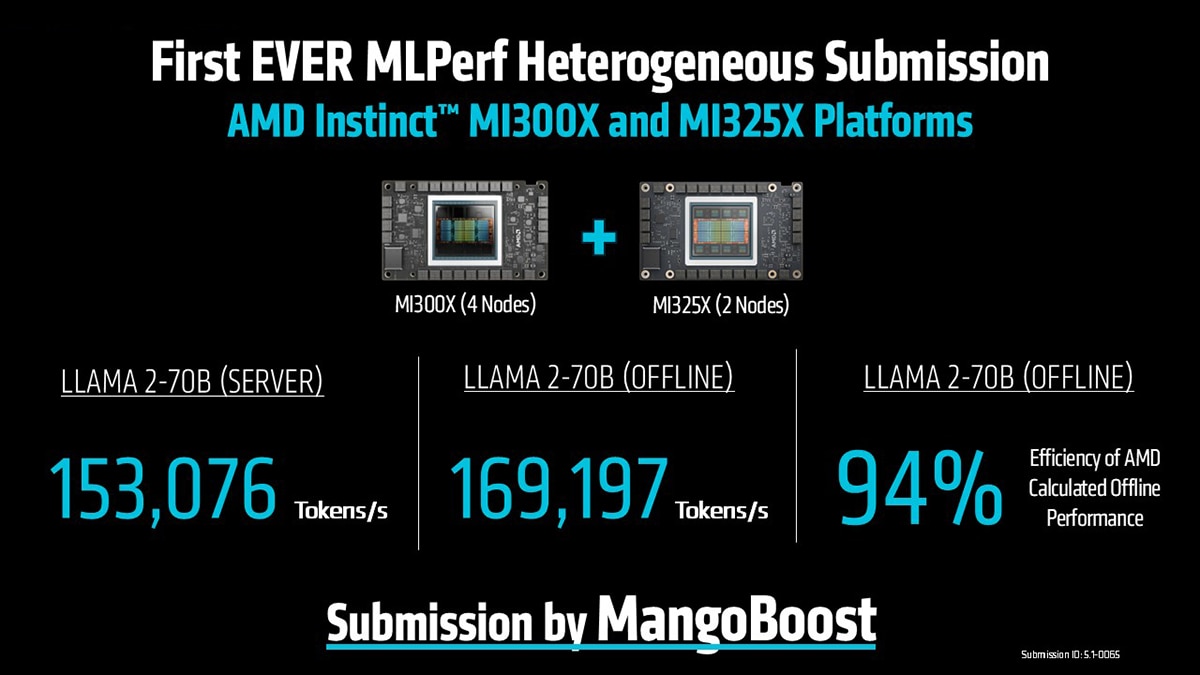

First-Ever Heterogeneous GPU Submission Demonstrates Flexibility and Efficiency

This latest MLPerf Inference v5.1 submission introduced a groundbreaking result: the first-ever heterogeneous GPU submission powered by AMD Instinct hardware. Partner MangoBoost combined 4 MI300X nodes with 2 MI325X nodes into a mixed-generation cluster, demonstrating that AMD Instinct platforms can scale seamlessly across multiple GPU generations while maintaining competitive performance and efficiency.

Key results on Llama 2 70B:

- Server inference - Achieved 153,076 tokens/sec.

- Offline inference - Reached 169,197 tokens/sec.

- Delivered 94% efficiency relative to AMD-calculated reference scaling, an outstanding result for a first heterogeneous submission.

This submission highlights a critical innovation: intelligent load balancing powered by AMD ROCm software. Mixed-generation clusters are inherently complex because GPUs with different compute capabilities must work together efficiently. ROCm software manages this by:

- Dynamically distributing workloads across MI300X and MI325X GPUs to maximize utilization.

- Coordinating inter-GPU communication to avoid bottlenecks and maintain predictable scaling.

- Ensuring reproducible results even when hardware configurations are non-uniform.

For customers, this has clear benefits:

- Protect existing investments - Combine MI300X systems already in production with MI325X upgrades for seamless scaling.

- Maximize hardware efficiency - Intelligent load balancing ensures every GPU is fully utilized, regardless of generation.

- Lower cost of scaling - Achieve higher throughput without replacing entire clusters.

- Accelerate deployments - Deploy mixed-generation environments confidently and predictably.

By enabling heterogeneous scaling with AMD ROCm software load balancing, the submission proves that AMD Instinct GPUs deliver flexibility, efficiency, and future-ready infrastructure for enterprise-scale generative AI.

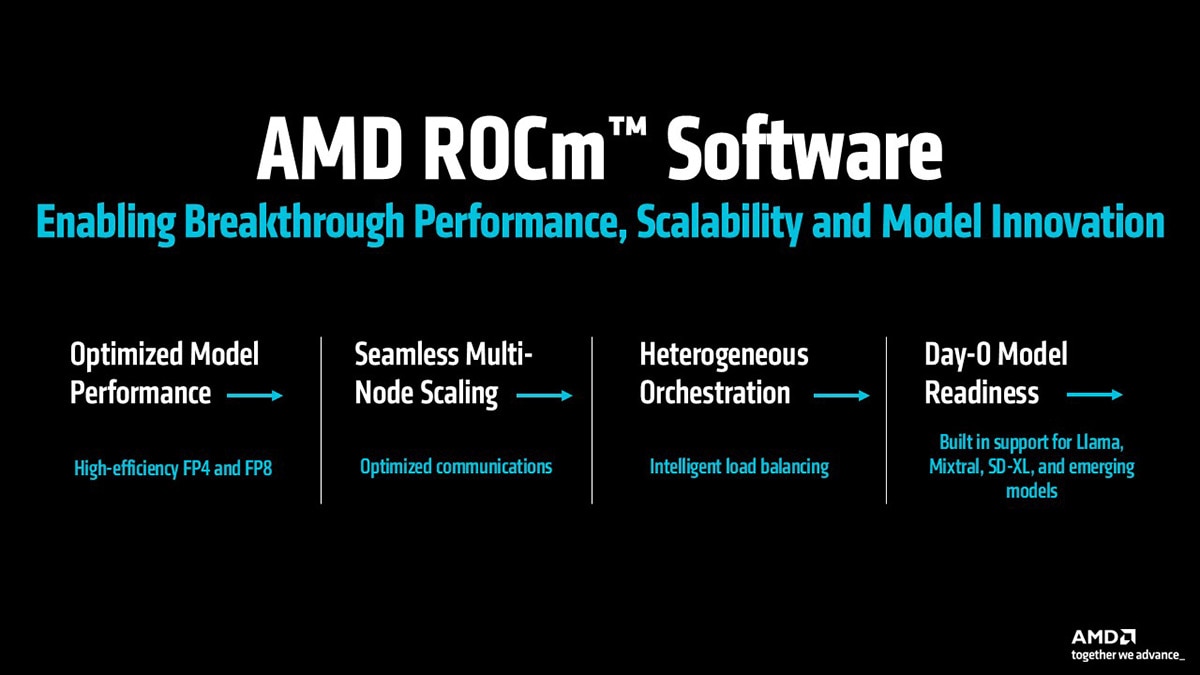

AMD ROCm™ Software: Powering MLPerf Results and Accelerating Innovation

Every success highlighted in the AMD MLPerf Inference v5.1 submission is built on AMD ROCm software, the open software platform for Instinct GPUs. AMD ROCm software delivers the optimized kernels, framework integrations, and intelligent orchestration that make these record-setting results possible, enabling customers to deploy, scale, and optimize generative AI workloads with confidence.

AMD ROCm software provides the optimized libraries, deep framework integrations, and intelligent orchestration that enable customers to deploy and scale generative AI efficiently:

- Built for scale - Supports everything from single-GPU inference to 8-node MI355X clusters and mixed MI300X + MI325X GPU environments with seamless orchestration.

- Developer-ready - Tight integration with PyTorch, TensorFlow, Triton, and JAX ensures hardware-aware optimizations work out of the box.

- Consistent results – AMD ROCm software enables partner systems to deliver performance within 1–3% of AMD reference results, ensuring predictable deployments across OEMs, ODMs, and the cloud.

AMD ROCm software is the foundation behind the submission, enabling the efficiency, scalability, and consistency that make these MLPerf results possible.

Final Takeaway

The AMD MLPerf Inference v5.1 submission marks a major step forward in enabling scalable, efficient, and flexible generative AI deployments. From unlocking new levels of efficiency with FP4 precision to accelerating innovation through structured pruning, the results demonstrate how Instinct GPUs are built to meet the evolving demands of today’s largest AI workloads.

The submission highlights seamless scaling, from single-GPU inference to 8-node clusters, and introduces industry-first heterogeneous scaling, where mixed AMD Instinct MI300X and MI325X environments deliver near-linear performance. Combined with competitive throughput on MI325X across emerging workloads and consistent results within 1–3% across partner submissions, these results prove the AMD Instinct GPU ecosystem is production-ready and deployment-flexible.

At the heart of these achievements is AMD ROCm software, the open software platform powering every breakthrough. With optimized kernels, intelligent orchestration, and deep framework integration, ROCm software enables customers to serve larger models faster, scale more predictably, and deploy generative AI confidently, whether on-premises, in the cloud, or across hybrid environments.

The results from MLPerf v5.1 reinforce a clear message: AMD Instinct GPUs, combined with ROCm software and a growing partner ecosystem, give customers the tools they need to deploy, optimize, and scale generative AI workloads efficiently today while preparing for the next generation of models and deployments.

Footnotes

- MI350-012 - Based on calculations by AMD as of April 17, 2025, using the published memory specifications of the AMD Instinct MI350X / MI355X GPUs (288GB) vs MI300X (192GB) vs MI325X (256GB). Calculations performed with FP16 precision datatype at (2) bytes per parameter, to determine the minimum number of GPUs (based on memory size) required to run the following LLMs: OPT (130B parameters), GPT-3 (175B parameters), BLOOM (176B parameters), Gopher (280B parameters), PaLM 1 (340B parameters), Generic LM (420B, 500B, 520B, 1.047T parameters), Megatron-LM (530B parameters), LLaMA ( 405B parameters) and Samba (1T parameters). Results based on GPU memory size versus memory required by the model at defined parameters, plus 10% overhead. Server manufacturers may vary configurations, yielding different results. Results may vary based on GPU memory configuration, LLM size, and potential variance in GPU memory access or the server operating environment. *All data based on FP16 datatype. For FP8 = X2. For FP4 = X4. MI350-012.

- https://mlcommons.org/benchmarks/inference-datacenter/

- Average score is not a primary metric of MLPerf inference. Result not verified by MLCommons association.

- The MLPerf name and logo are registered and unregistered trademarks of MLCommons Association in the United States and other countries. All rights reserved. Unauthorized use is strictly prohibited. See www.mlcommons.org for more information.

Editor’s Note: The initial version of this blog referenced an average of NVIDIA H200 submissions that included both H200-SXM and H200-NVL results. We have since amended the analysis to exclude H200-NVL results.

- MI350-012 - Based on calculations by AMD as of April 17, 2025, using the published memory specifications of the AMD Instinct MI350X / MI355X GPUs (288GB) vs MI300X (192GB) vs MI325X (256GB). Calculations performed with FP16 precision datatype at (2) bytes per parameter, to determine the minimum number of GPUs (based on memory size) required to run the following LLMs: OPT (130B parameters), GPT-3 (175B parameters), BLOOM (176B parameters), Gopher (280B parameters), PaLM 1 (340B parameters), Generic LM (420B, 500B, 520B, 1.047T parameters), Megatron-LM (530B parameters), LLaMA ( 405B parameters) and Samba (1T parameters). Results based on GPU memory size versus memory required by the model at defined parameters, plus 10% overhead. Server manufacturers may vary configurations, yielding different results. Results may vary based on GPU memory configuration, LLM size, and potential variance in GPU memory access or the server operating environment. *All data based on FP16 datatype. For FP8 = X2. For FP4 = X4. MI350-012.

- https://mlcommons.org/benchmarks/inference-datacenter/

- Average score is not a primary metric of MLPerf inference. Result not verified by MLCommons association.

- The MLPerf name and logo are registered and unregistered trademarks of MLCommons Association in the United States and other countries. All rights reserved. Unauthorized use is strictly prohibited. See www.mlcommons.org for more information.

Editor’s Note: The initial version of this blog referenced an average of NVIDIA H200 submissions that included both H200-SXM and H200-NVL results. We have since amended the analysis to exclude H200-NVL results.