Accelerating Innovation Through Shared AI Compute

Nov 10, 2025

As AI moves from laboratories to the core of products, services and processes, the ability to run and manage AI workloads is essential. But existing infrastructure and financial models lack the agility to keep up with the dynamic, real-time nature of modern AI solutions.

For enterprises, compute has already become a critical strategic resource. Unlike data, however, compute cannot simply be duplicated. Capacity must be shared, scheduled, and robust, particularly when multiple teams or companies need to run large AI workloads on the same infrastructure.

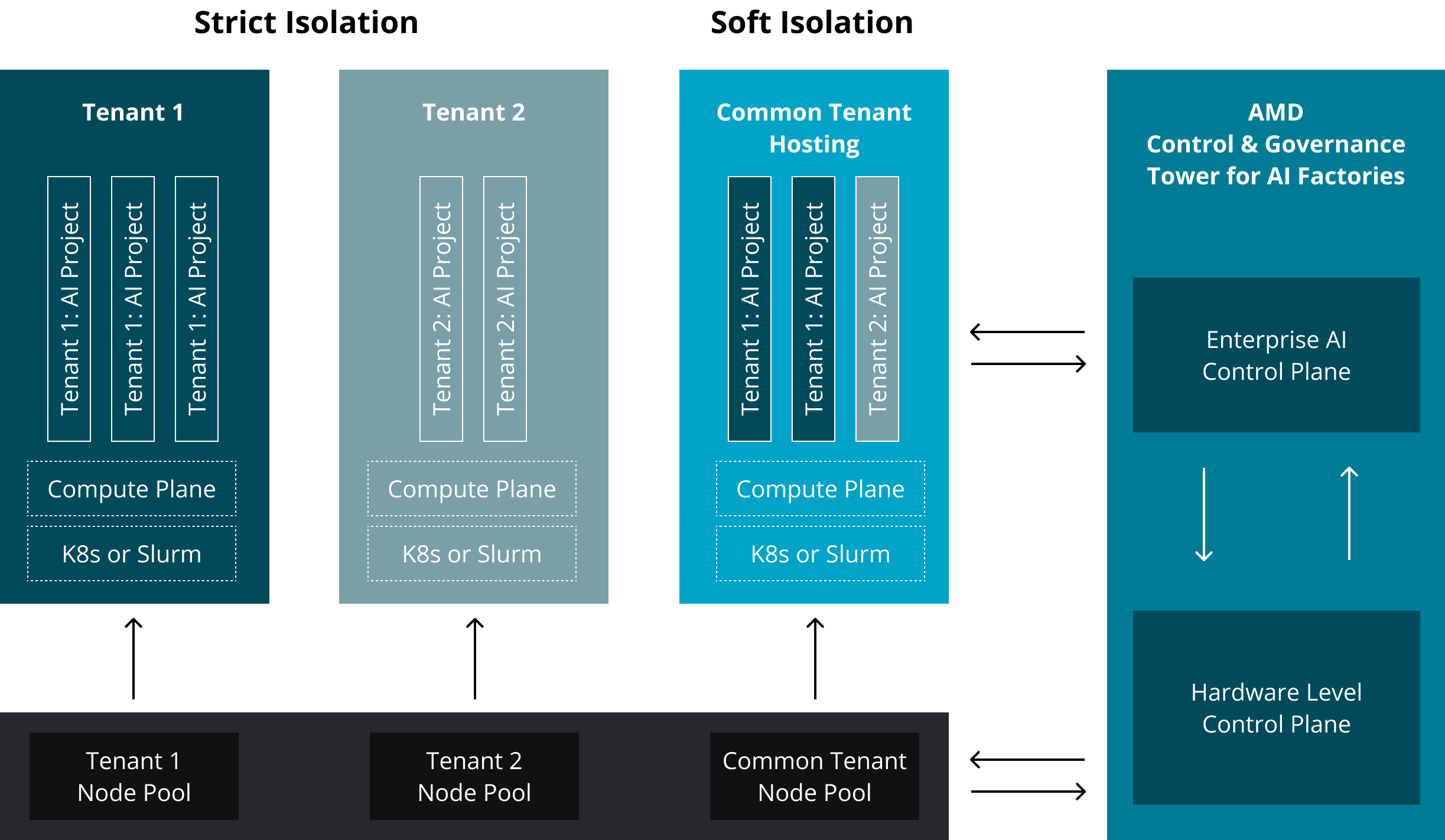

Solving Multitenancy for AI

Multitenancy solves this issue, allowing multiple users, teams or organizations to share the same high-performance infrastructure, including compute, storage, and networking, while keeping their data and workloads isolated. This is how cloud computing works at scale.

Sharing introduces a challenge of its own, however. Resource contention from high-demand tenants creates critical but different problems: it causes unacceptably high latency for real-time inference, while degrading throughput for training and fine-tuning jobs and delaying their completion. For all these workloads, every GPU cycle matters, every model checkpoint is large, and every second of downtime wastes valuable resources.
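One common way to contain this kind of contention is to give each tenant a guaranteed GPU quota and lend out only the capacity that is currently idle. The sketch below illustrates that idea under stated assumptions: the tenant names, quotas, and the `allocate` function are hypothetical, and this is not a description of how AMD Resource Manager or any specific scheduler works.

```python
from typing import Dict


def allocate(capacity: int, quotas: Dict[str, int], requests: Dict[str, int]) -> Dict[str, int]:
    """Grant GPUs to tenants: guaranteed quota first, then share idle GPUs.

    capacity: total GPUs in the shared cluster (hypothetical figure).
    quotas:   guaranteed GPUs per tenant; must not exceed capacity in total.
    requests: GPUs each tenant is asking for right now.
    """
    if sum(quotas.values()) > capacity:
        raise ValueError("guaranteed quotas exceed cluster capacity")

    # Step 1: every tenant gets what it asks for, up to its guaranteed quota.
    grant = {t: min(requests.get(t, 0), q) for t, q in quotas.items()}
    spare = capacity - sum(grant.values())

    # Step 2: hand out idle GPUs one at a time, round-robin, to tenants
    # that still want more than they have been granted.
    while spare > 0:
        waiting = [t for t in sorted(grant) if grant[t] < requests.get(t, 0)]
        if not waiting:
            break
        for t in waiting:
            if spare == 0:
                break
            grant[t] += 1
            spare -= 1
    return grant


# Hypothetical tenants: one latency-sensitive inference service, two training teams.
quotas = {"inference": 4, "training_a": 6, "training_b": 6}
requests = {"inference": 4, "training_a": 12, "training_b": 2}
print(allocate(16, quotas, requests))
```

In this example the inference tenant keeps its full guaranteed share regardless of how much the training tenants request, while `training_a` borrows the GPUs that `training_b` leaves idle. A production scheduler would also need preemption, so that borrowed GPUs can be reclaimed when their owner needs them.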

Most enterprises end up over-provisioning isolated GPU clusters, wasting capacity and locking innovation inside silos. The result is idle compute, slower innovation cycles, and higher costs for every organization competing in AI.
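The arithmetic behind that waste can be sketched in a few lines. The example below compares clusters sized for each tenant's own peak with one shared cluster sized for the combined peak. The demand traces are invented purely for illustration and do not come from any measured deployment; the function names are likewise hypothetical.

```python
from typing import Dict, List


def isolated_capacity(demand: Dict[str, List[int]]) -> int:
    """GPUs needed when every tenant sizes its own cluster for its own peak."""
    return sum(max(trace) for trace in demand.values())


def pooled_capacity(demand: Dict[str, List[int]]) -> int:
    """GPUs needed when tenants share one cluster sized for the combined peak."""
    return max(sum(step) for step in zip(*demand.values()))


def utilization(demand: Dict[str, List[int]], capacity: int) -> float:
    """Fraction of provisioned GPU-hours that is actually used."""
    steps = len(next(iter(demand.values())))
    used = sum(sum(trace) for trace in demand.values())
    return used / (capacity * steps)


# Hypothetical GPU demand per time step; each tenant peaks at a different time.
demand = {
    "team_a": [8, 2, 2, 1],
    "team_b": [1, 8, 2, 2],
    "team_c": [2, 1, 8, 2],
}

iso = isolated_capacity(demand)
pool = pooled_capacity(demand)
print(f"isolated: {iso} GPUs, utilization {utilization(demand, iso):.0%}")
print(f"pooled:   {pool} GPUs, utilization {utilization(demand, pool):.0%}")
```

With these made-up traces, the isolated setup needs 24 GPUs and the pooled setup 12, because the tenants' peaks do not coincide. The benefit shrinks as peaks overlap, which is why scheduling and isolation matter as much as raw capacity.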

A New Blueprint for Shared AI Compute

To solve the issue of AI multitenancy, AMD Silo AI, in collaboration with TensorWave and the Combient Group, has developed a new model for shared AI compute infrastructure, designed to make large-scale AI development faster, more cost-efficient, and more collaborative.

Built on AMD Instinct™ accelerators in the TensorWave cloud and powered by AMD Resource Manager and AMD AI Workbench, the environment allows multiple organizations to reliably share high-performance GPU infrastructure. This lowers the barriers to advanced computing capabilities and fosters a thriving innovation ecosystem.

“By advancing a more open, collaborative model for AI development, this initiative is making compute more accessible, elastic, and efficient. It empowers enterprises to innovate together in the next era of AI, driven by democratized access, scalable infrastructure, and accelerated time-to-value,” said Darrick Horton, Co-founder and CEO of TensorWave.

Multitenancy Compute Infrastructure for AI

This shared, multi-tenant design enables clusters of companies to co-innovate — reducing idle compute, accelerating model training, and dramatically lowering costs compared to isolated deployments. It constitutes a new blueprint for sovereign and efficient AI infrastructure for both enterprises and cloud service providers.

Built on open standards and powered by open-source technologies, the platform removes vendor lock-in and enables seamless portability of AI models and workflows across heterogeneous environments. By tapping into a rapidly evolving open-source ecosystem, users gain access to advanced tools that simplify AI infrastructure management and maximize performance efficiency.

AMD Silo AI Multitenancy Compute Infrastructure for AI.

Powered by, and optimized for, AMD Instinct GPUs, the infrastructure provides the foundation for robust, high-performance multitenancy, both on-premises and in the cloud:

AMD Instinct™ MI300X / MI325X GPUs, built for large-scale AI with hardware-level partitioning and high-bandwidth interconnects.

AMD ROCm™ 7 software stack, enabling GPU partitioning, containerized workloads, and integration with Kubernetes, OpenShift, and Slurm.

AMD Infinity Fabric™ interconnects and advanced memory partitioning for workload isolation and throughput.

A validated partner ecosystem, supporting orchestration and scheduling platforms for multi-tenant GPU clusters.

AMD AI Workbench and AMD Resource Manager, open-source enterprise AI tooling for deploying AI at scale through modular components.

Enhanced by Enterprise-Ready AI Tooling from AMD

The Combient network of Nordic enterprises across manufacturing, energy, and finance collaborates on emerging technologies. Through this initiative, member companies will gain access to a shared, AMD-powered AI infrastructure, enabling them to co-develop and deploy advanced AI solutions by:

Scaling AI workloads efficiently.

Quickly developing and deploying AI models.

Reducing environmental and financial waste from under-used compute.

The Combient companies can further enhance their AI deployments with the enterprise-ready AI software stack from AMD Silo AI, comprising AMD AI Workbench and AMD Resource Manager, which is engineered to run AI workloads at scale. These enterprise AI tools provide access to key open-source AI frameworks and generative AI models connected to an enterprise-ready Kubernetes platform, shortening the time from AI experimentation to large-scale production.

“This model changes how our network of companies can access and scale AI,” said Jonas Wettergren, VP & Head of People & Org at Combient. “Instead of each enterprise building its own infrastructure from scratch, they can now tap into a shared AI environment, cutting time-to-compute, lowering cost, and speeding up real-world deployment across 36 organizations, regardless of platform.”

This multitenant infrastructure has already delivered notable performance gains: one of the participating companies achieved substantially shorter AI model training times using AMD Instinct™ MI300X GPUs with ROCm™ optimizations, demonstrating how shared high-performance compute can accelerate innovation.

The Future of Shared AI Compute

As AI adoption accelerates, shared compute will define the next phase of enterprise AI infrastructure, one where collaboration, sovereignty, and efficiency coexist.

“Our collaboration with TensorWave and Combient shows that AI compute doesn’t have to be siloed,” said Peter Sarlin, CEO & Co-founder of AMD Silo AI. “Through shared, robust multi-tenant clusters powered by AMD technology, companies can scale innovation faster while maintaining the performance and isolation modern AI workloads require.”

AMD Silo AI remains committed to pushing the frontier of AI on AMD compute platforms, and this initiative marks a significant step forward. It builds a more secure, cooperative, and scalable AI fabric for Europe and beyond, helping enterprises and startups alike accelerate development without compromising on performance, control, or reliability.

TensorWave

TensorWave is the AI and HPC cloud purpose-built for performance. Powered exclusively by AMD Instinct™ Series GPUs, TensorWave delivers high-bandwidth, memory-optimized infrastructure that scales with the most demanding training and inference workloads. Backed by more than $166 million in funding from investors including Magnetar, AMD Ventures, and Nexus Venture Partners, TensorWave operates one of the world's largest all-AMD GPU clouds and is expanding rapidly to meet global demand. For more information, please visit https://tensorwave.com.

Combient

Combient leads a network of the largest Nordic companies from different industries towards the common goal of accelerating digital transformation. Combient comprises 36 companies with a total turnover of EUR 280 billion and more than 1,400,000 employees. Learn more about us at combient.com.