[How-To] Running Optimized Automatic1111 Stable Diffusion WebUI on AMD GPUs

Aug 18, 2023

[UPDATE]: The Automatic1111-directML branch now supports Microsoft Olive under the Automatic1111 WebUI interface, which allows for generating optimized models and running them all under the Automatic1111 WebUI, without needing a separate branch to optimize for AMD platforms. The updated blog on running Stable Diffusion Automatic1111 with Olive optimizations is available here: UPDATED HOW-TO GUIDE

Prepared by Hisham Chowdhury (AMD), Lucas Neves (AMD), and Justin Stoecker (Microsoft)

Did you know you can enable Stable Diffusion with Microsoft Olive under Automatic1111 (Xformer) to get a significant speedup via Microsoft DirectML on Windows? Microsoft and AMD have been working together to optimize the Olive path on AMD hardware. The path is accelerated via the Microsoft DirectML platform API and the AMD User Mode Driver’s ML (Machine Learning) layer for DirectML, giving users access to the AI (Artificial Intelligence) capabilities of AMD GPUs.

- Installed Git (Git for Windows)

- Installed Anaconda/Miniconda (Miniconda for Windows)

- Ensure the Anaconda/Miniconda directory is added to PATH

- A platform with an AMD Graphics Processing Unit (GPU)

- Driver: AMD Software: Adrenalin Edition™ 23.7.2 or newer (https://www.amd.com/en/support)
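Before continuing, it can help to confirm that the command-line prerequisites above are actually reachable from the terminal. The following is a minimal sketch, not part of the original guide: `find_tools` is a hypothetical helper, and the executable names `git` and `conda` are assumptions about how the tools appear on PATH.

```python
import shutil

# Tools the guide lists as prerequisites; the executable names are assumptions
# about how they appear on PATH (for example git.exe and conda.exe/conda.bat).
REQUIRED_TOOLS = ("git", "conda")


def find_tools(names=REQUIRED_TOOLS, which=shutil.which):
    """Map each tool name to its resolved path, or None if it is not on PATH."""
    return {name: which(name) for name in names}


if __name__ == "__main__":
    for name, path in find_tools().items():
        status = path if path else "NOT FOUND on PATH"
        print(f"{name}: {status}")
```

If either tool is reported as not found, revisit the PATH step in the list above. This check does not cover the GPU or driver version.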

Microsoft Olive is a Python tool that can be used to convert, optimize, quantize, and auto-tune models for optimal inference performance with ONNX Runtime execution providers like DirectML. Olive greatly simplifies model processing by providing a single toolchain to compose optimization techniques, which is especially important with more complex models like Stable Diffusion that are sensitive to the ordering of optimization techniques. The DirectML sample for Stable Diffusion applies the following techniques:

- Model conversion: translates the base models from PyTorch to ONNX.

- Transformer graph optimization: fuses subgraphs into multi-head attention operators and eliminates inefficiencies from conversion.

- Quantization: converts most layers from FP32 to FP16 to reduce the model's GPU memory footprint and improve performance.

Combined, the above optimizations enable DirectML to leverage AMD GPUs for greatly improved performance when performing inference with transformer models like Stable Diffusion.
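To see why the FP32 to FP16 conversion reduces the GPU memory footprint, consider the storage cost per weight: 4 bytes in FP32 versus 2 bytes in FP16. The sketch below is illustrative arithmetic only. The weight count is a hypothetical round number, not a figure from this guide, and because only most layers are converted, the real saving will be somewhat less than the ideal 2x.

```python
# Bytes used to store one weight in each floating-point format.
BYTES_PER_WEIGHT = {"fp32": 4, "fp16": 2}


def weights_size_gib(num_weights, dtype):
    """Approximate storage for num_weights values of the given dtype, in GiB."""
    return num_weights * BYTES_PER_WEIGHT[dtype] / 1024**3


# Hypothetical weight count for illustration only; not a figure from this guide.
example_weights = 1_000_000_000

fp32 = weights_size_gib(example_weights, "fp32")
fp16 = weights_size_gib(example_weights, "fp16")
print(f"FP32: {fp32:.2f} GiB, FP16: {fp16:.2f} GiB, ratio: {fp32 / fp16:.1f}x")
```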

(Following the instructions from Olive, we can generate an optimized Stable Diffusion model using Olive.)

- Open Anaconda/Miniconda Terminal

- Create a new environment by entering the following commands into the terminal one at a time, pressing Enter after each. Note that Python 3.9 is required.

- conda create --name olive python=3.9

- conda activate olive

- pip install olive-ai[directml]==0.2.1

- git clone https://github.com/microsoft/olive --branch v0.2.1

- cd olive\examples\directml\stable_diffusion

- pip install -r requirements.txt

- pip install pydantic==1.10.12

- Generate an ONNX model and optimize it for run-time. This may take a long time.

- python stable_diffusion.py --optimize

The optimized model will be stored in the following directory; keep it open for later: olive\examples\directml\stable_diffusion\models\optimized\runwayml. The model folder will be called “stable-diffusion-v1-5”. Use the following command to see what other models are supported: python stable_diffusion.py --help

- To test the optimized model, run the following command:

- python stable_diffusion.py --interactive --num_images 2
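Since optimization can take a long time, a quick check that the output folder exists and is not empty can save a failed copy step later. The following is a minimal sketch under stated assumptions: the path parts are taken from the directory quoted above, `optimized_model_dir` and `is_ready` are hypothetical helpers, and a non-empty folder is treated as "ready" without validating its contents.

```python
from pathlib import Path

# Relative location quoted in the guide, split into parts so it joins
# correctly on any OS.
OPTIMIZED_PARTS = ("examples", "directml", "stable_diffusion",
                   "models", "optimized", "runwayml", "stable-diffusion-v1-5")


def optimized_model_dir(olive_root):
    """Return the expected optimized-model folder under an Olive checkout."""
    return Path(olive_root).joinpath(*OPTIMIZED_PARTS)


def is_ready(model_dir):
    """True if the folder exists and contains at least one entry."""
    model_dir = Path(model_dir)
    return model_dir.is_dir() and any(model_dir.iterdir())


if __name__ == "__main__":
    # "olive" is the folder created by `git clone`; adjust if cloned elsewhere.
    target = optimized_model_dir("olive")
    print(target, "->", "ready" if is_ready(target) else "missing or empty")
```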

Following the instructions here, install Automatic1111 Stable Diffusion WebUI without the optimized model. It will use the default, unoptimized PyTorch path. Enter the following commands sequentially into a new terminal window.

- Open Anaconda/Miniconda Terminal.

- Enter the following commands in the terminal, followed by the enter key, to install Automatic1111 WebUI

- conda create --name Automatic1111 python=3.10.6

- conda activate Automatic1111

- git clone https://github.com/lshqqytiger/stable-diffusion-webui-directml

- cd stable-diffusion-webui-directml

- git submodule update --init --recursive

- webui-user.bat

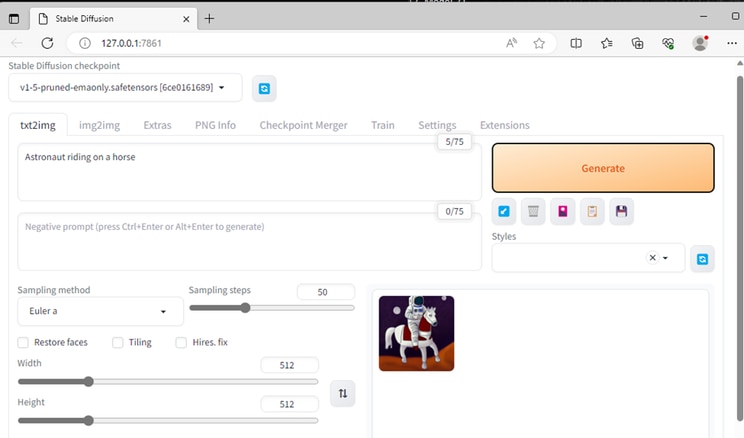

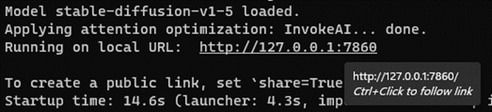

- CTRL+CLICK on the URL following "Running on local URL:" to run the WebUI

- Copy the generated optimized model (the “stable-diffusion-v1-5” folder) from the optimized model folder into the directory stable-diffusion-webui-directml\models\ONNX. Some users may need to create the ONNX folder.

- Launch a new Anaconda/Miniconda terminal window

- Navigate to the directory containing webui.bat and enter the following command to run the WebUI with the ONNX path and DirectML. This uses the optimized model we created in section 3.

- webui.bat --onnx --backend directml

- CTRL+CLICK on the URL following "Running on local URL:" to run the WebUI

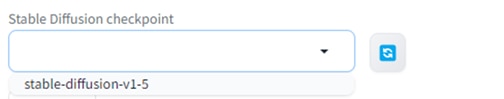

- Pick “stable-diffusion-v1-5” from the dropdown

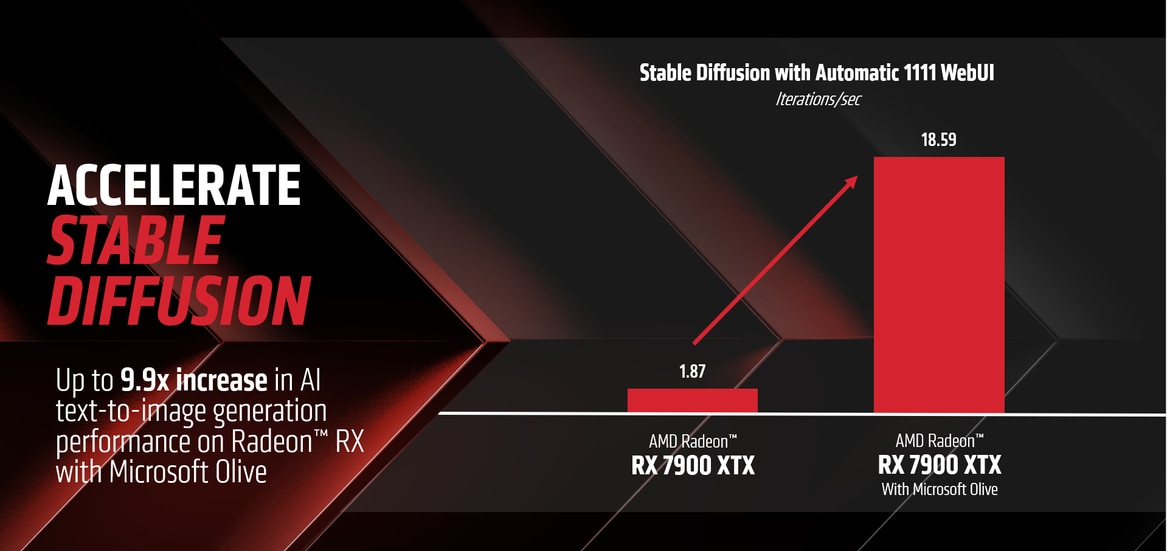
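The copy step in the list above (placing the “stable-diffusion-v1-5” folder under models\ONNX and creating that folder when it is missing) can also be scripted. This is a minimal sketch, not the guide's own tooling: `install_onnx_model` is a hypothetical helper, and both paths passed to it are whatever locations apply on your machine.

```python
import shutil
from pathlib import Path


def install_onnx_model(optimized_model, webui_root):
    """Copy an optimized model folder into <webui_root>/models/ONNX."""
    source = Path(optimized_model)
    onnx_dir = Path(webui_root) / "models" / "ONNX"
    # The guide notes the ONNX folder may not exist yet, so create it if needed.
    onnx_dir.mkdir(parents=True, exist_ok=True)
    destination = onnx_dir / source.name
    # dirs_exist_ok (Python 3.8+) lets a re-run overwrite an earlier copy.
    shutil.copytree(source, destination, dirs_exist_ok=True)
    return destination
```

Calling it with the optimized model folder and the stable-diffusion-webui-directml checkout as arguments returns the destination path, which should then match the entry shown in the WebUI dropdown.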

Running on the default PyTorch path, the AMD Radeon RX 7900 XTX delivers 1.87 iterations/second.

Running on the optimized model with Microsoft Olive, the AMD Radeon RX 7900 XTX delivers 18.59 iterations/second.
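The two throughput figures above can be turned into a speedup ratio and a rough per-image time. The sketch below uses only the reported iterations/second; the 20-step setting is a hypothetical sampler configuration chosen for illustration, not one stated in this guide.

```python
# Iterations per second reported above for the AMD Radeon RX 7900 XTX.
PYTORCH_ITS = 1.87
OLIVE_ITS = 18.59


def speedup(optimized, baseline):
    """Throughput ratio of the optimized path over the baseline path."""
    return optimized / baseline


def seconds_for_steps(steps, its_per_second):
    """Time to run a given number of sampling steps at a fixed rate."""
    return steps / its_per_second


print(f"Speedup: {speedup(OLIVE_ITS, PYTORCH_ITS):.1f}x")
# 20 steps is a hypothetical sampler setting, not one stated in the guide.
print(f"20 steps: {seconds_for_steps(20, PYTORCH_ITS):.1f} s vs "
      f"{seconds_for_steps(20, OLIVE_ITS):.1f} s")
```

With the reported numbers, the Olive-optimized path works out to roughly 9.9 times the throughput of the default PyTorch path.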