How To Run OpenAI’s GPT-OSS 20B and 120B Models on AMD Ryzen™ AI Processors and Radeon™ Graphics Cards

Aug 05, 2025

Today, OpenAI published their first state-of-the-art, open-weight language models. The release consists of a 116.8 billion parameter model (referred to as 120B) with 5.1 billion active parameters and a 20.9 billion parameter model (referred to as 20B) with 3.6 billion active parameters. The OpenAI GPT-OSS 20B and 120B models deliver advanced reasoning capability for local AI inferencing, and AMD products such as Ryzen™ AI processors and Radeon™ graphics cards are ready with day-0 support. You can try the models today on compatible hardware through our enablement partner, LM Studio.

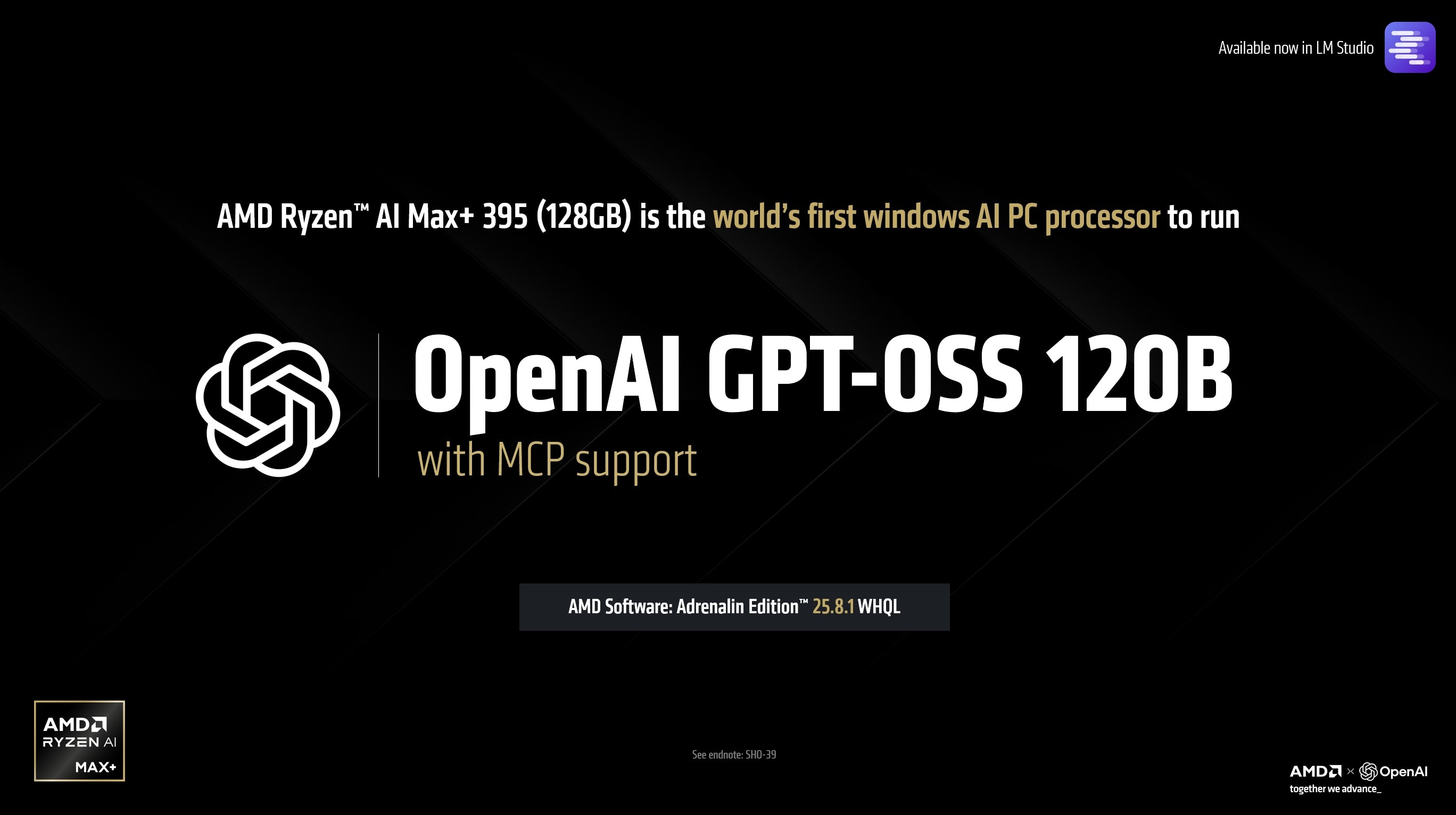

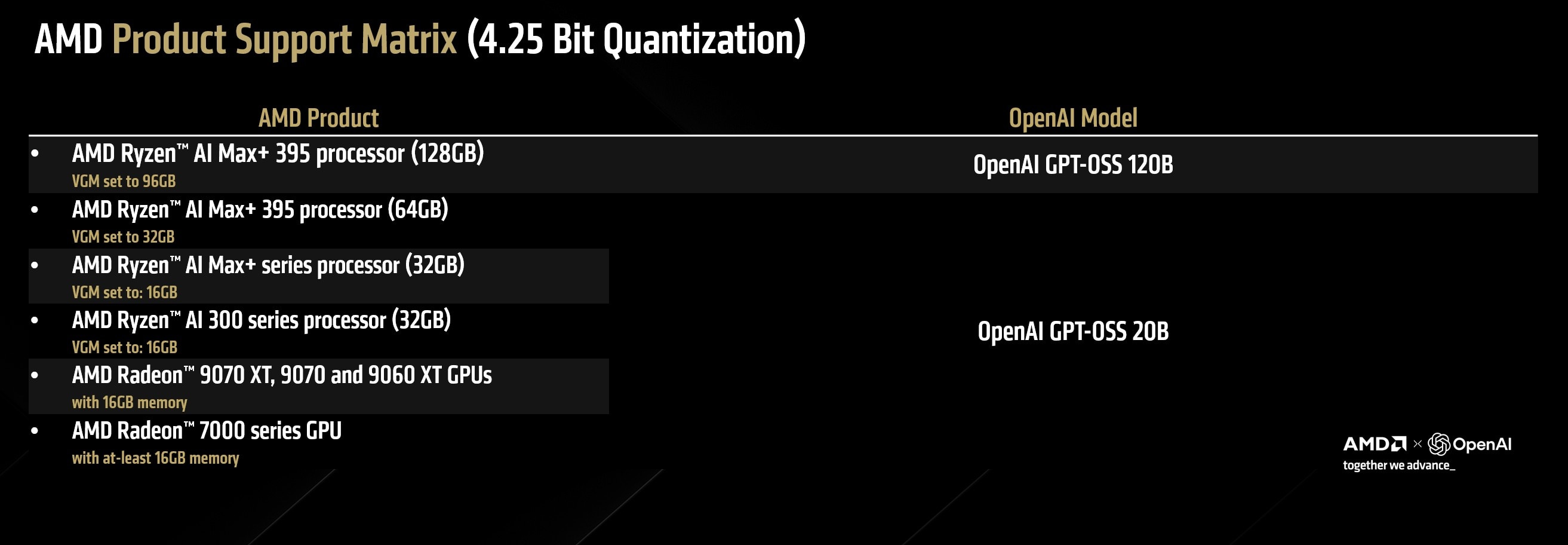

We are also announcing that the AMD Ryzen™ AI Max+ 395 is the world’s first consumer AI PC processor to run OpenAI’s GPT-OSS 120B parameter model. This furthers AMD's leadership as the only vendor providing full-stack, cloud-to-client solutions for bleeding-edge AI workloads. What was previously possible only on datacenter-grade hardware is now available in a thin and light device.

Last week, AMD upgraded the capability of the AMD Ryzen™ AI Max+ 395 processor with 128GB memory to run models of up to 128 billion parameters in Windows through llama.cpp. We also published an FAQ blog covering model sizes, parameters, quantization, AMD Variable Graphics Memory, MCP and more.

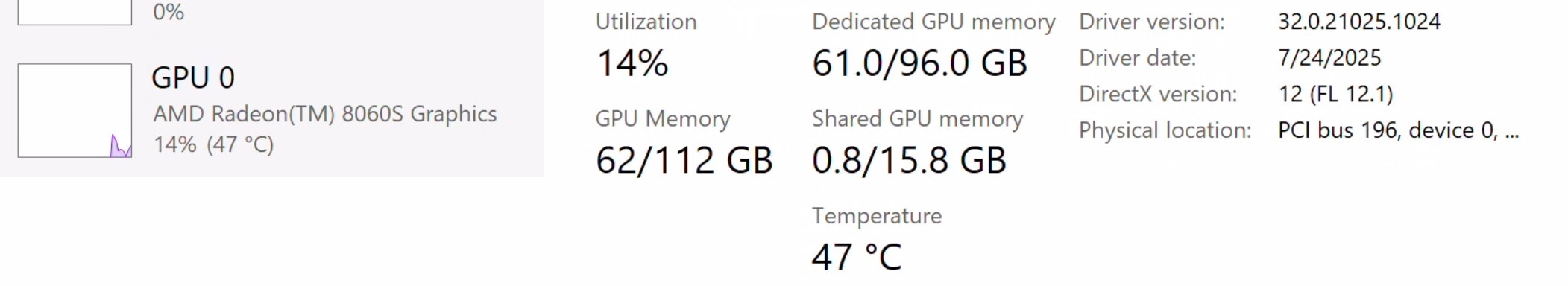

The GGML-converted MXFP4 weights require roughly 61GB of VRAM and fit comfortably into the 96GB of dedicated graphics memory available on the AMD Ryzen™ AI Max+ 395 processor. Note that AMD Software: Adrenalin™ Edition 25.8.1 WHQL or a later driver version is required to unlock this capability.
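The 61GB figure can be sanity-checked with a little arithmetic. The sketch below is only an estimate: it assumes every weight is stored in MXFP4 (4-bit values with one shared 8-bit scale per block of 32), whereas real model files typically keep some tensors at higher precision, and it treats the article's GB figures as decimal gigabytes. The function names are illustrative.

```python
# Back-of-envelope check of the weight footprint quoted above.
# Assumption: all weights are MXFP4 (4-bit elements plus one shared 8-bit
# scale per block of 32). Real files keep some tensors at higher precision,
# so this is an estimate, not a file-size prediction.

TOTAL_PARAMS = 116.8e9      # GPT-OSS 120B total parameters (from the article)
QUOTED_WEIGHTS_GB = 61      # approximate VRAM needed for weights (from the article)
DEDICATED_VRAM_GB = 96      # dedicated graphics memory (from the article)

BLOCK_SIZE = 32             # MXFP4 elements sharing one scale
BITS_PER_ELEMENT = 4
BITS_PER_SCALE = 8


def mxfp4_bits_per_weight() -> float:
    """Average storage cost of one weight, including its share of the scale."""
    return BITS_PER_ELEMENT + BITS_PER_SCALE / BLOCK_SIZE


def estimated_weights_gb(params: float) -> float:
    """Estimated weight size in decimal gigabytes."""
    return params * mxfp4_bits_per_weight() / 8 / 1e9


estimate = estimated_weights_gb(TOTAL_PARAMS)
headroom = DEDICATED_VRAM_GB - QUOTED_WEIGHTS_GB

print(f"bits per weight:   {mxfp4_bits_per_weight():.2f}")
print(f"estimated weights: {estimate:.1f} GB")
print(f"headroom in VRAM:  {headroom} GB")
```

Under these assumptions the estimate lands at about 62GB, in the same range as the quoted figure, and the remaining 35GB or so of dedicated memory is what is left for the context (KV cache) and runtime buffers.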

With speeds of up to 30 tokens per second, AMD customers not only have access to a datacenter-class, state-of-the-art model, but also get very usable performance thanks to the memory bandwidth of the Ryzen™ AI Max+ platform and the mixture-of-experts architecture of OpenAI GPT-OSS 120B. Because of its large memory, the Ryzen™ AI Max+ 395 (128GB) also supports Model Context Protocol (MCP) implementations with this model. Users with AMD Ryzen™ AI 300 series processors can also take full advantage of the smaller 20B model from OpenAI.
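To see why the mixture-of-experts design matters for token generation, here is a rough sketch of a bandwidth-only ceiling on decode speed. It rests on several assumptions that are not stated in this article: a 256-bit memory bus, weights costing 4.25 bits each (MXFP4 with per-block scales), and each active weight being read exactly once per generated token. Only the parameter counts and the 8000 MT/s memory rate come from the text and endnotes.

```python
# Rough ceiling on decode speed if generation were limited only by how fast
# the weights used for one token can be read from memory.
# Assumptions (not from the article): a 256-bit memory bus, 4.25 bits per
# weight, and every active weight read exactly once per token.

MEMORY_RATE_MT_S = 8000     # from the endnote: 8000 MT/s memory
BUS_WIDTH_BITS = 256        # assumption
BITS_PER_WEIGHT = 4.25      # assumption: MXFP4 with per-block scales

TOTAL_PARAMS = 116.8e9      # from the article
ACTIVE_PARAMS = 5.1e9       # from the article


def peak_bandwidth_bytes_per_s() -> float:
    """Theoretical peak memory bandwidth in bytes per second."""
    return MEMORY_RATE_MT_S * 1e6 * BUS_WIDTH_BITS / 8


def tokens_per_s_ceiling(params_read_per_token: float) -> float:
    """Upper bound on tokens/s when only weight reads are counted."""
    bytes_per_token = params_read_per_token * BITS_PER_WEIGHT / 8
    return peak_bandwidth_bytes_per_s() / bytes_per_token


moe_ceiling = tokens_per_s_ceiling(ACTIVE_PARAMS)    # only active experts read
dense_ceiling = tokens_per_s_ceiling(TOTAL_PARAMS)   # hypothetical dense model

print(f"MoE ceiling:   {moe_ceiling:.1f} tokens/s")
print(f"dense ceiling: {dense_ceiling:.1f} tokens/s")
```

Under these assumptions the ceiling is roughly 94 tokens per second when only the 5.1 billion active parameters are read, against roughly 4 tokens per second if all 116.8 billion had to be read for every token. The reported 30 tokens per second sits below the ceiling, which is expected because a real run also reads the KV cache, keeps some tensors at higher precision, and never reaches theoretical peak bandwidth.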

For lightning-fast performance with the OpenAI GPT-OSS 20B model, users can use the AMD Radeon™ RX 9070 XT 16GB graphics card in a desktop system. This setup offers not only lightning-fast tokens per second but also a strong time-to-first-token (TTFT) advantage. Users running Model Context Protocol (MCP) implementations with the 20B model will therefore see very responsive TTFT in situations that are typically compute bound.
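Time to first token and steady-state tokens per second are separate measurements, and it can help to compute them separately when comparing systems. The helper below is a minimal sketch: `stream_stats` and `StreamStats` are hypothetical names, and the timestamps in the example are made up for illustration rather than measured on any hardware.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class StreamStats:
    ttft_s: float                # time from request to first generated token
    decode_tokens_per_s: float   # generation rate after the first token


def stream_stats(request_sent: float, token_times: list[float]) -> StreamStats:
    """Split a streamed response into TTFT and decode rate.

    request_sent and token_times are timestamps in seconds on the same clock,
    for example values captured with time.perf_counter().
    """
    if not token_times:
        raise ValueError("no tokens received")
    ttft = token_times[0] - request_sent
    tokens_after_first = len(token_times) - 1
    decode_window = token_times[-1] - token_times[0]
    rate = tokens_after_first / decode_window if decode_window > 0 else 0.0
    return StreamStats(ttft_s=ttft, decode_tokens_per_s=rate)


# Made-up timestamps: first token after 0.25 s, then one token every 0.25 s.
stats = stream_stats(0.0, [0.25, 0.5, 0.75, 1.0, 1.25])
print(f"TTFT: {stats.ttft_s:.2f} s, decode: {stats.decode_tokens_per_s:.1f} tokens/s")
```

Keeping the two numbers apart matters for MCP-style workloads, where tool definitions and results tend to make prompts long: the prompt has to be processed before the first token appears, so TTFT can dominate perceived responsiveness even when the decode rate is high.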

Experience OpenAI's GPT-OSS 120B and 20B models on AMD Ryzen™ AI processors and Radeon™ graphics cards

- Download and install AMD Software: Adrenalin™ Edition 25.8.1 WHQL drivers or higher. Please note that performance and support may be degraded or absent in older drivers.

- If you are on an AMD Ryzen™ AI powered machine, right click on Desktop > AMD Software: Adrenalin™ Edition > Performance Tab > Tuning Tab > Variable Graphics Memory, and set VGM according to the specification table given below. If you are on an AMD Radeon™ graphics card, you can skip this step.

- Download and install LM Studio.

- Skip onboarding.

- Go to the discover tab (magnifying glass).

- Search for “gpt-oss”. You will see two staff picks: GPT-OSS 120B and GPT-OSS 20B. Download the model you want to use (and that is supported by your hardware).

- Go to the chat tab.

- Click on the drop-down menu at the top and select the OpenAI model. Make sure to click “Manually load parameters”.

- Move the “GPU Offload” slider all the way to the MAX. Click on "advanced settings". Enable "Flash Attention". Set the context length. AMD Ryzen™ AI Max+ users with 128GB systems can move the context slider all the way to the max.

- Check "remember settings" and click load. If you are using the 120B model, loading may take a while and the loading bar may appear to be stuck (the read speeds of most SSDs fall off after a burst and this is a large model to move to memory!).

- Start prompting!
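Once a model is loaded, it can also be prompted from a script instead of the chat tab. The sketch below assumes that LM Studio's local server has been started and is listening on its OpenAI-compatible endpoint at `http://localhost:1234/v1`, and that the model identifier is `openai/gpt-oss-20b`. Both values are assumptions rather than something stated in the steps above, so check them against what your LM Studio installation shows. `build_chat_request` and `ask` are illustrative helper names.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1234/v1"   # assumption: local server address
MODEL_ID = "openai/gpt-oss-20b"         # assumption: copy the identifier shown in LM Studio


def build_chat_request(prompt: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for the local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, timeout_s: float = 600.0) -> str:
    """Send the prompt and return the text of the first choice.

    Requires the local server to be running with a model loaded.
    """
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout_s) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(ask("How long would it take for a ball dropped from 10 meter height to hit the ground?"))` sends the same specimen prompt used in the endnotes. The generous timeout is deliberate, since the first request to a large model can take a while.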

Endnotes:

SHO-39 - Testing as of August 2025 by AMD. All tests conducted on LM Studio 0.3.21b4. Llama.cpp runtime 1.44. Tokens/s: Sustained performance average of multiple runs with specimen prompt "How long would it take for a ball dropped from 10 meter height to hit the ground?". Model tested: OpenAI GPT-OSS 120B. AMD Ryzen™ AI Max+ 395 on an ASUS ROG Flow Z13 with 128GB 8000 MT/s memory, Windows 11 Pro 24H2 and Adrenalin 25.8.1 WHQL. Performance may vary. SHO-39

RX-1230 - Testing as of August 2025 by AMD. All tests conducted on LM Studio 0.3.21b4. Llama.cpp runtime 1.44. Tokens/s: Sustained performance average of multiple runs with specimen prompt "How long would it take for a ball dropped from 10 meter height to hit the ground?". Model tested: OpenAI GPT-OSS 20B. AMD Radeon™ RX 9070 XT 16GB with Intel Core 13900K and 32GB 6000 MT/s memory, Windows 11 Pro 24H2 and Adrenalin 25.8.1 WHQL. Performance may vary. RX-1230

GD-97 - Links to third party sites are provided for convenience and unless explicitly stated, AMD is not responsible for the contents of such linked sites and no endorsement is implied. GD-97

GD-164 - Day-0 driver compatibility and feature availability depend on system manufacturer and/or packaged driver version. For the most up-to-date drivers, visit AMD.com. GD-164.