Commercial-grade AI music generation on AMD Ryzen™ AI processors and Radeon™ graphics with ACE Step 1.5

Feb 04, 2026

Local music generation is entering a new phase with ACE-Step v1.5: a fast, controllable, open-source music foundation model that runs efficiently on AMD Ryzen™ AI processors and AMD Radeon™ graphics through ComfyUI and AMD ROCm™ software. With the right pipeline, you can generate full-length tracks directly on your own system, iterate quickly, and keep your assets on-device from prompt to final audio.

A local-first workflow offers clear advantages for content creators, musicians, and developers by turning AI into a flexible part of the creative process rather than a gated service. Without per-track fees or upload limits, creators can experiment freely: sketching ideas, testing arrangements, and exploring new sounds without friction. Generating on-device also means immediate iteration, making it easier to refine or discard ideas in real time without relying on an internet connection.

Unlimited offline generations, powered by AMD ROCm™ 7.2

1. Make sure you are on the latest AMD Software™: Adrenalin Edition drivers.

2. Download and install the ComfyUI Desktop app.

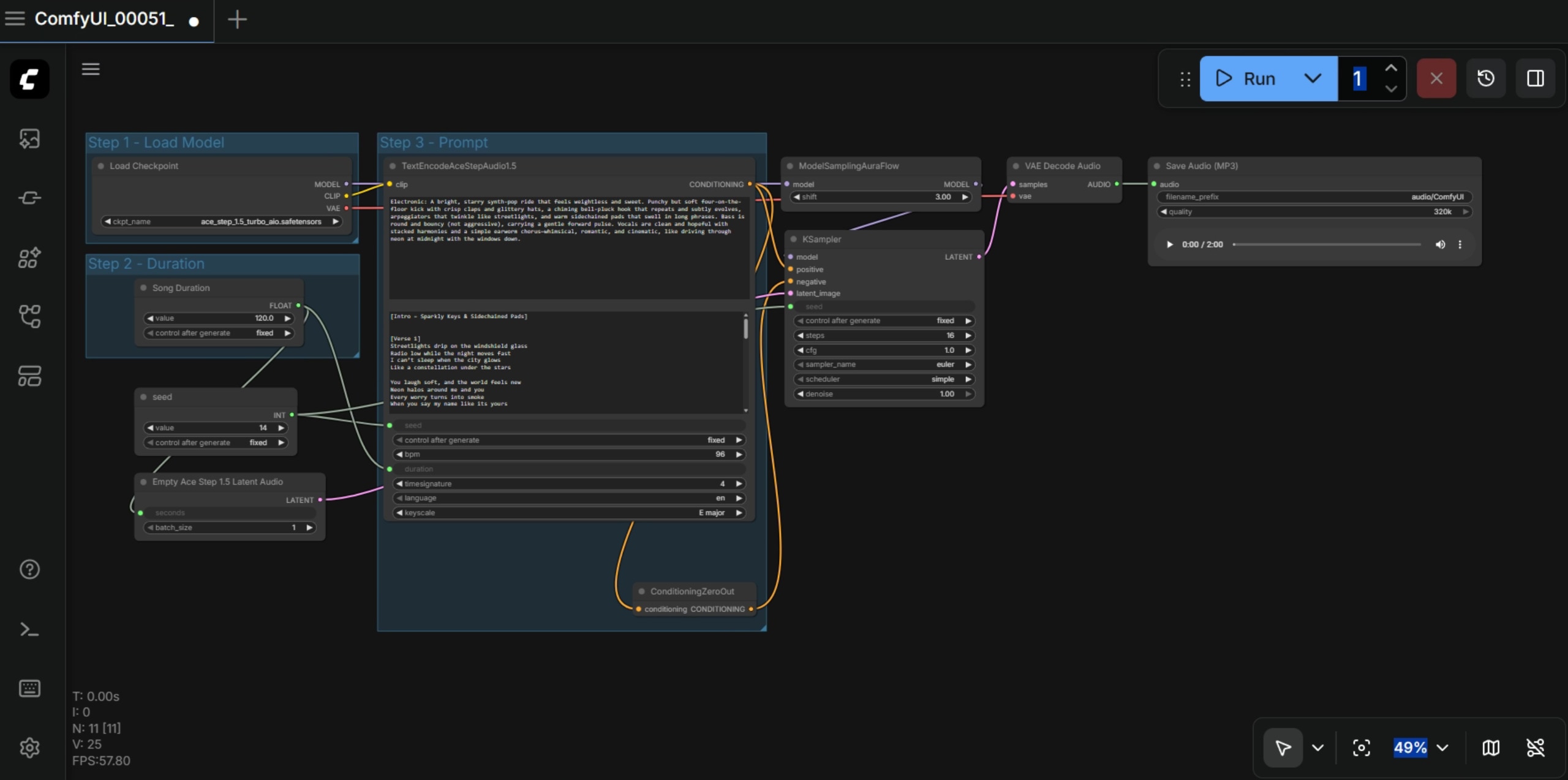

3. In Templates, click Audio, then click ACE Step 1.5 Turbo AIO.

ACE-Step v1.5 runs through ComfyUI, the node-based environment widely used to assemble, test, and reuse generative workflows. AMD ROCm software support is integrated into ComfyUI Desktop on Windows and is also compatible with the portable and git variants, so AMD customers have day-zero support for ACE-Step v1.5.
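For developers who want to script generations rather than click through the UI, the sketch below shows one way to queue a workflow through ComfyUI's local HTTP server by posting it to the `/prompt` route. It is a minimal sketch under stated assumptions, not an official AMD or ACE-Step procedure: the server address and port, the helper names, and the workflow file name in the usage note are all illustrative, and the workflow is assumed to have been exported from ComfyUI in API format.

```python
import json
import urllib.request

# Assumption: ComfyUI is listening locally at this address. 8188 is the usual
# default for portable/git installs; ComfyUI Desktop may use a different port.
COMFYUI_URL = "http://127.0.0.1:8188"


def build_prompt_request(workflow: dict, base_url: str = COMFYUI_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request that queues a workflow."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def queue_workflow(workflow_path: str, base_url: str = COMFYUI_URL) -> dict:
    """Load an API-format workflow JSON file and submit it to ComfyUI."""
    with open(workflow_path, encoding="utf-8") as f:
        workflow = json.load(f)
    request = build_prompt_request(workflow, base_url)
    with urllib.request.urlopen(request) as response:
        # The server replies with JSON describing the queued job.
        return json.loads(response.read())
```

With ComfyUI running, calling `queue_workflow("ace_step_1_5_api.json")` (a hypothetical file name) would submit the exported workflow. Keeping the request builder separate from the network call makes the payload easy to inspect before anything is sent.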

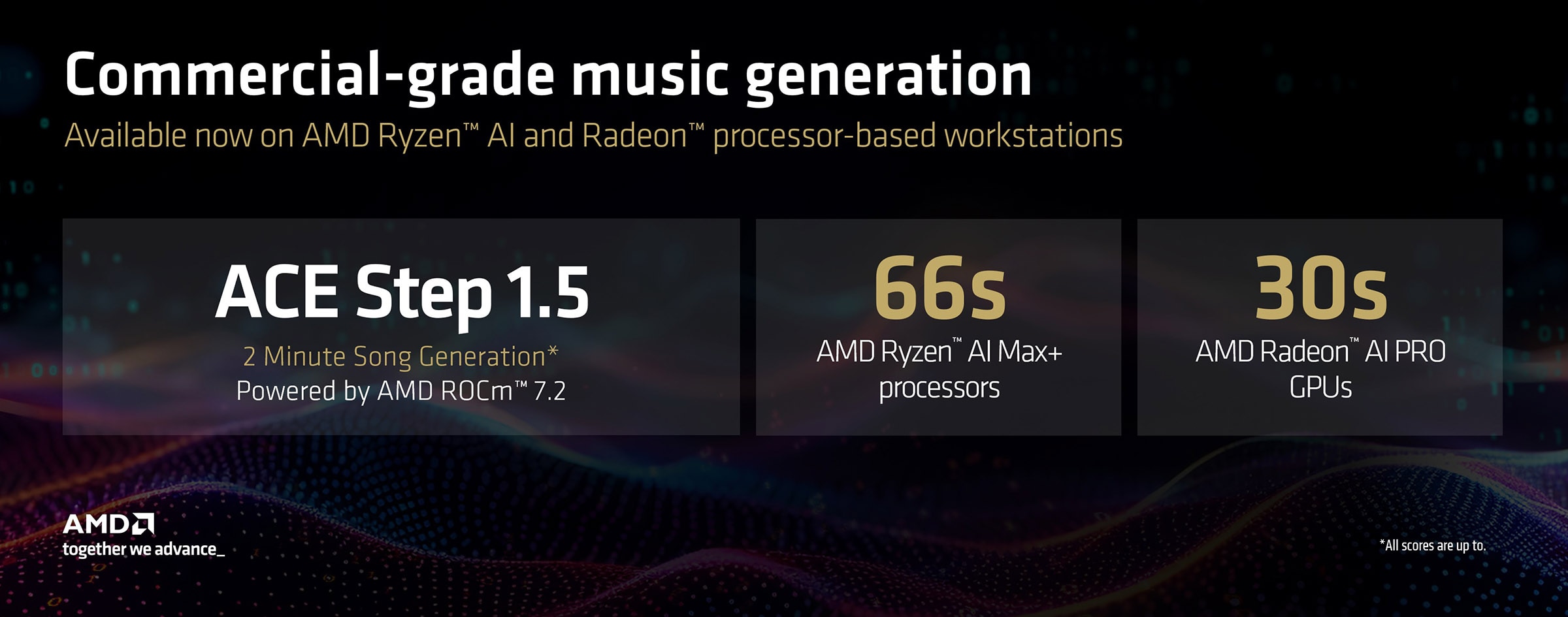

Users can expect the following baseline speeds when running ACE Step 1.5:

Those numbers translate to generation that is roughly 4x faster than real-time playback on the AMD Radeon™ AI PRO R9700 GPU and about 1.8x faster than real-time playback on the AMD Ryzen™ AI Max+ platform, making it realistic to iterate on multiple versions quickly and keep creative momentum high.
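To make the multipliers concrete, the short sketch below converts a real-time speedup into an estimated wait time per track. The 4x and 1.8x factors come from the text above; the three-minute track length and the function name are illustrative assumptions, and real generation times will vary with settings and hardware.

```python
def estimated_generation_seconds(track_seconds: float, realtime_speedup: float) -> float:
    """Estimate wall-clock generation time from a real-time speedup factor."""
    if realtime_speedup <= 0:
        raise ValueError("realtime_speedup must be positive")
    # A speedup of N means N seconds of audio are produced per second of compute.
    return track_seconds / realtime_speedup


TRACK_SECONDS = 180.0  # assumed track length (3 minutes), for illustration only

# Approximate speedups quoted in the article.
SPEEDUPS = {
    "AMD Radeon AI PRO R9700": 4.0,
    "AMD Ryzen AI Max+": 1.8,
}

for platform, speedup in SPEEDUPS.items():
    seconds = estimated_generation_seconds(TRACK_SECONDS, speedup)
    print(f"{platform}: about {seconds:.0f} s to generate {TRACK_SECONDS:.0f} s of audio")
```

With these inputs, a three-minute track works out to about 45 seconds at 4x and about 100 seconds at 1.8x.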

Commercially licensed, state-of-the-art AI music generation in ComfyUI

ACE-Step v1.5 is built for both speed and control. The project describes a hybrid approach where a language model can act as a planning layer, producing structured song guidance (for example, metadata, lyrics, and captions) that conditions the audio generator. The model is also designed to run locally with low VRAM requirements and supports lightweight personalization via LoRA, allowing you to adapt style with a small set of example songs.
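As a rough illustration of what "structured song guidance" could look like in code, the sketch below models a plan with the three kinds of output mentioned above (metadata, lyrics, and a caption) and serializes it to JSON. This is not the project's actual schema: the class name, field names, and metadata keys are all hypothetical and chosen only to show the idea of a planning step handing structured data to an audio generator.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class SongPlan:
    """Hypothetical container for guidance a planning model might emit."""
    caption: str                                   # short description of style and mood
    lyrics: str                                    # lyric text, possibly with section tags
    metadata: dict = field(default_factory=dict)   # e.g. tempo, key, duration (illustrative)


def plan_to_json(plan: SongPlan) -> str:
    """Serialize a plan so a downstream generation step could consume it."""
    return json.dumps(asdict(plan), ensure_ascii=False, indent=2)


example_plan = SongPlan(
    caption="warm lo-fi piano with soft drums",
    lyrics="[verse]\nCity lights are fading slow",
    metadata={"bpm": 80, "duration_seconds": 120},  # illustrative values only
)

print(plan_to_json(example_plan))
```

Keeping the plan as plain structured data makes it easy to inspect or hand-edit before generation, which fits the control-oriented workflow described above.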

Just as important for practical adoption, ACE-Step v1.5 is released under the permissive open-source MIT license and is free for commercial use under that license. The project also states that it was trained entirely on royalty-free, non-copyrighted material. This makes it suitable not only for experimentation, but also for professional pipelines where licensing clarity matters.

ACE-Step v1.5 brings high-quality music generation to local AMD platforms, with the project describing state-of-the-art performance across key measures such as musical coherence and lyric alignment. It is also highly multilingual: the project reports strong prompt adherence across 50+ languages, which makes it a practical fit for globally distributed creators and for multi-language vocal and lyric workflows.