Advancing AI Research in Academia: Hybrid Models, Smarter Systems

Feb 11, 2026

As artificial intelligence matures, researchers are asking different questions. Higher accuracy alone is not enough. Scalability, efficiency, and sustainability now drive progress. These challenges are especially important in academia, where innovation must occur within tight computing limits.

AMD has made significant contributions to research that tackles these challenges. Its work connects model design, system efficiency, and real-world applications. This blog post discusses emerging themes from this research, including hybrid architectures, hardware-aware optimization, and AI-assisted research workflows that are changing how AI research is done.

Research Impact at ICLR 2026

These efforts are reflected in the strong academic presence of AMD at ICLR 2026, with six papers accepted across areas such as efficient inference, diffusion acceleration, multimodal reasoning, hybrid language models, and AI-driven research systems. Together, they demonstrate a commitment to scalable, practical, and open AI research.

Enhancing Learning and Reasoning Through Synthetic Data

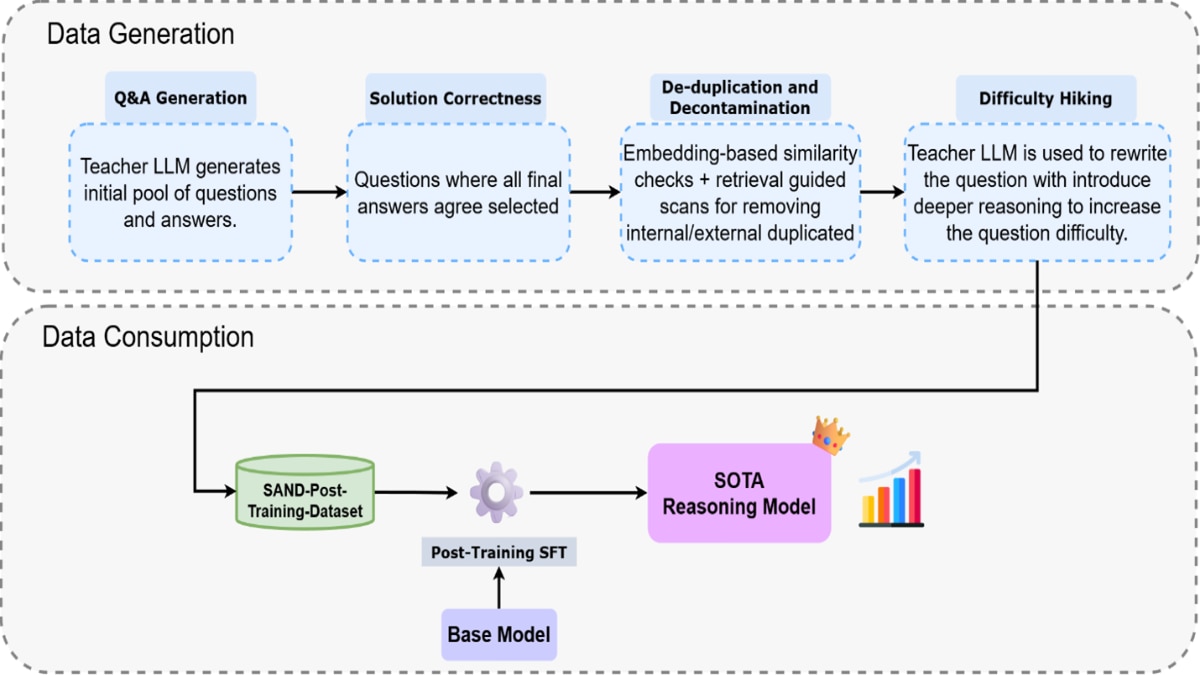

One key contribution of this research is improving how models learn and reason, particularly in complex fields like mathematics. SAND‑Math introduces a pipeline that uses large language models to create difficult math problems and their solutions. The pipeline generates problems from scratch and includes a “Difficulty Hiking” step that systematically raises the complexity of problems before they are added to the dataset. Fine-tuning reasoning models on this synthetic data yields significant performance improvements on benchmarks like AIME25 compared to other synthetic datasets.

Key ideas include:

- Automated generation of novel, challenging math problems that go beyond simple remixing of existing datasets.

- A “Difficulty Hiking” process that increases problem complexity, leading to measurable performance gains when models are fine-tuned on the enhanced dataset.
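
The generate-then-hike flow described above can be sketched in a few lines of Python. This is a minimal illustration, not the SAND‑Math implementation: the `Problem` class, the `build_dataset` function, the numeric difficulty score, the threshold, and the toy `generate` and `hike` callables are all hypothetical stand-ins for LLM calls and for whatever difficulty criterion the real pipeline uses.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Problem:
    text: str
    solution: str
    difficulty: float  # hypothetical score in [0, 1]; higher means harder


def build_dataset(
    generate: Callable[[], Problem],
    hike: Callable[[Problem], Problem],
    n_problems: int,
    min_difficulty: float = 0.7,  # assumed acceptance threshold
    max_hikes: int = 3,           # assumed cap on hiking rounds
) -> List[Problem]:
    """Generate problems from scratch, raise their difficulty, then keep the hard ones."""
    dataset: List[Problem] = []
    for _ in range(n_problems):
        problem = generate()
        hikes = 0
        # "Difficulty Hiking": rewrite the problem until it is hard enough
        # or the round budget is spent.
        while problem.difficulty < min_difficulty and hikes < max_hikes:
            problem = hike(problem)
            hikes += 1
        if problem.difficulty >= min_difficulty:
            dataset.append(problem)
    return dataset


# Toy stand-ins for the LLM-backed steps (assumptions for illustration only).
def toy_generate() -> Problem:
    return Problem("Compute 2 + 3.", "5", difficulty=0.2)


def toy_hike(p: Problem) -> Problem:
    harder_text = p.text + " (harder variant)"
    return Problem(harder_text, "<re-solved>", min(1.0, p.difficulty + 0.3))


dataset = build_dataset(toy_generate, toy_hike, n_problems=4)
print(len(dataset), dataset[0].text)
```

In a real pipeline, `generate` and `hike` would each wrap a model call, and the hiked problem would need its solution regenerated and checked before being accepted.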

This work shows how synthetic data pipelines can bridge important gaps in model training, especially in areas where curated data is limited.

SAND‑Math: Generating challenging mathematical datasets

AI-Generated GPU Kernel Code and Evaluation Benchmarks

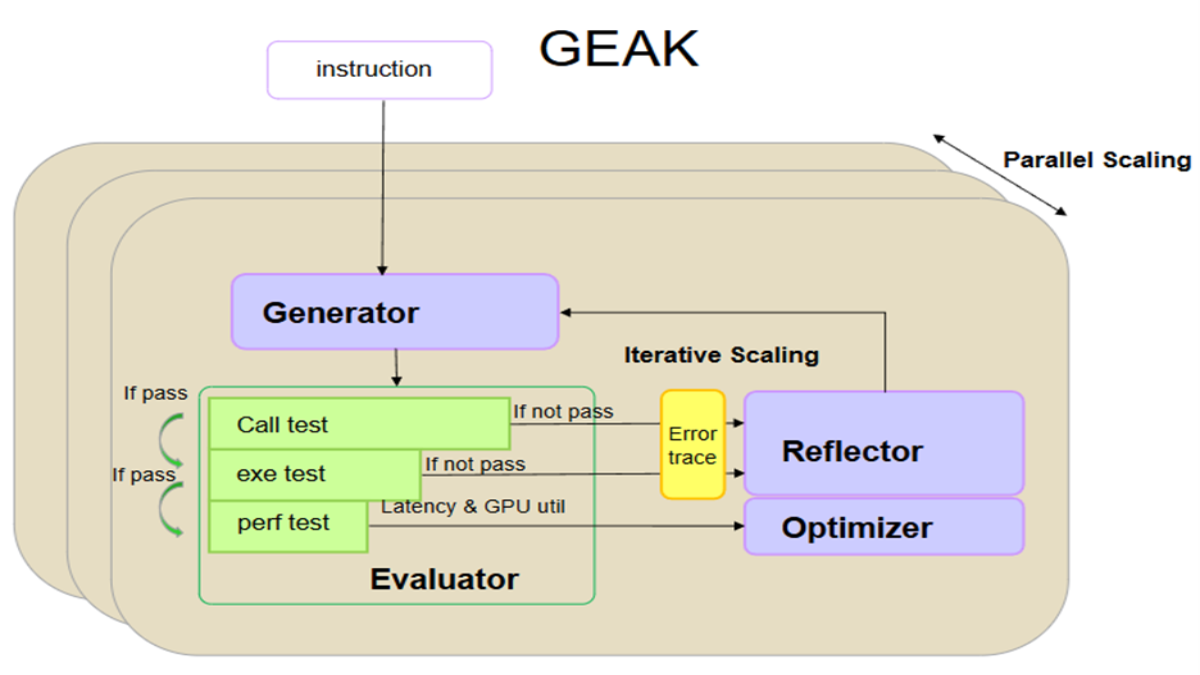

As workloads grow more demanding, hand-tuning hardware-optimized code, especially GPU kernels, becomes a bottleneck. The GEAK paper addresses this issue by using LLMs to automatically generate and refine GPU kernels written in Triton, a Python-based language for high-performance GPU programming. Instead of relying on manually optimized code, GEAK combines an agentic reasoning loop with benchmark suites to improve kernel code automatically over several iterations.

Highlights include:

- A framework for AI‑generated GPU kernels, tailored to modern GPU architectures.

- Benchmarks and evaluation suites that show execution speedups of up to ~2.6x and strong correctness results compared to baseline generation pipelines.
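
To make the iterative loop concrete, here is a minimal Python sketch of a generate, evaluate, refine cycle. It is not GEAK's implementation: `refine_kernel`, `EvalResult`, and the toy generator and evaluator are hypothetical stand-ins for an LLM call and a benchmark harness, and no real Triton code is compiled or run.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class EvalResult:
    correct: bool      # did the kernel pass the correctness tests?
    runtime_ms: float  # measured runtime (only meaningful if correct)
    feedback: str      # text passed back to the generator on the next round


def refine_kernel(
    generate: Callable[[str, str], str],
    evaluate: Callable[[str], EvalResult],
    task: str,
    max_iters: int = 5,
) -> Tuple[Optional[str], float]:
    """Keep the fastest correct candidate seen across max_iters refinement rounds."""
    best_code: Optional[str] = None
    best_runtime = float("inf")
    feedback = ""
    for _ in range(max_iters):
        code = generate(task, feedback)  # in practice: an LLM call
        result = evaluate(code)          # in practice: compile, test, benchmark
        if result.correct and result.runtime_ms < best_runtime:
            best_code, best_runtime = code, result.runtime_ms
        feedback = result.feedback       # errors or timings guide the next attempt
    return best_code, best_runtime


# Toy stand-ins (assumptions): each call emits a new "version" of the kernel.
def make_toy_generator() -> Callable[[str, str], str]:
    state = {"version": 0}

    def generate(task: str, feedback: str) -> str:
        state["version"] += 1
        return f"# {task}\nkernel_v{state['version']}"

    return generate


def toy_evaluate(code: str) -> EvalResult:
    version = int(code.rsplit("_v", 1)[1])
    if version == 1:  # pretend the first attempt is wrong
        return EvalResult(False, float("inf"), "output mismatch on test 0")
    runtime = 10.0 / version  # pretend later versions get faster
    return EvalResult(True, runtime, f"correct, {runtime:.2f} ms")


best_code, best_runtime = refine_kernel(
    make_toy_generator(), toy_evaluate, "vector add", max_iters=4
)
print(best_code, best_runtime)
```

The design choice worth noting is that correctness gates performance: a candidate is only considered for "best" after it passes the tests, so a fast but wrong kernel never wins.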

This work demonstrates the promise of agentic AI for systems programming, reducing developer burden while pushing closer to expert‑level performance across diverse hardware.

GEAK: AI-generated GPU kernel optimization

Making Hybrid Language Models More Efficient and Accessible

Large language models have impressive capabilities, but their memory and computation demands are a barrier to deployment and experimentation.

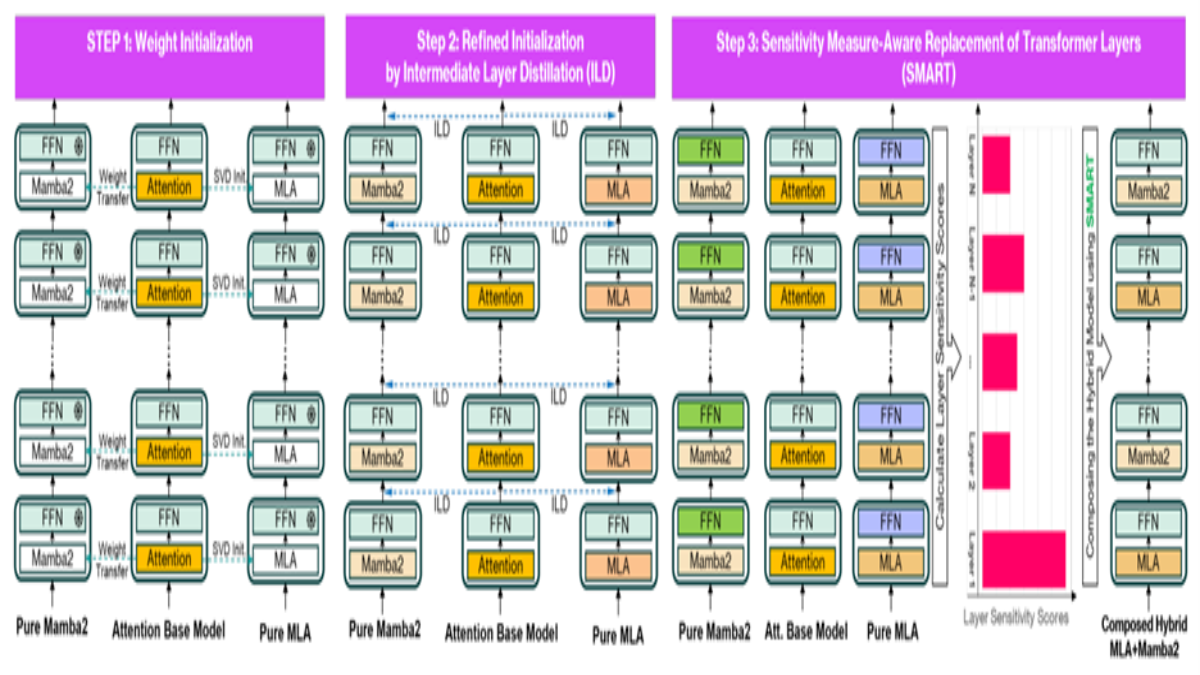

Zebra‑Llama presents a hybrid modeling approach that combines:

- State Space Models (SSMs)

- Multi‑Head Latent Attention (MLA)

The goal is to create efficient hybrid models directly from pre-trained Transformers, using refined initialization and post-training processes without starting from scratch.

Key results:

- Hybrid models achieve Transformer‑level accuracy with much less memory and training data than conventional approaches.

- Significant reductions in key/value cache size (down to a few percent of the original) enable greater throughput and lower inference cost.
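
A back-of-the-envelope calculation shows why this kind of hybrid design shrinks the key/value cache so sharply. The numbers below are illustrative assumptions, not the Zebra‑Llama configuration: a hypothetical 32-layer Transformer baseline, and a hypothetical hybrid that keeps attention in 8 layers and stores one compressed latent vector per token in each. State space layers keep a fixed-size state that does not grow with sequence length, so they are left out of the sequence-dependent cache here.

```python
def kv_cache_bytes(
    n_attn_layers: int,
    elems_per_token_per_layer: int,
    seq_len: int,
    batch: int = 1,
    bytes_per_elem: int = 2,  # 16-bit values assumed
) -> int:
    """Cache size that grows with sequence length, summed over attention layers."""
    return n_attn_layers * elems_per_token_per_layer * seq_len * batch * bytes_per_elem


SEQ_LEN = 8192  # assumed context length

# Hypothetical baseline: 32 attention layers, 32 KV heads, head_dim 128.
# Each token stores one key and one value vector per head, hence the factor 2.
baseline = kv_cache_bytes(32, 2 * 32 * 128, SEQ_LEN)

# Hypothetical hybrid: only 8 layers use attention, and each stores a single
# 512-element compressed latent per token instead of full keys and values.
hybrid = kv_cache_bytes(8, 512, SEQ_LEN)

ratio = hybrid / baseline
print(f"baseline: {baseline / 2**30:.2f} GiB")
print(f"hybrid:   {hybrid / 2**20:.2f} MiB")
print(f"ratio:    {ratio:.2%}")
```

Under these assumed numbers the baseline cache is 4 GiB and the hybrid cache is 64 MiB, a ratio of 1/64. Two effects multiply: fewer layers hold a per-token cache, and each remaining layer stores far fewer elements per token.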

Zebra‑Llama illustrates that algorithmic creativity, in how model components are combined, can unlock real efficiency gains while preserving performance.

Zebra‑Llama: Efficient hybrid LLMs

AI Agents as Research Collaborators

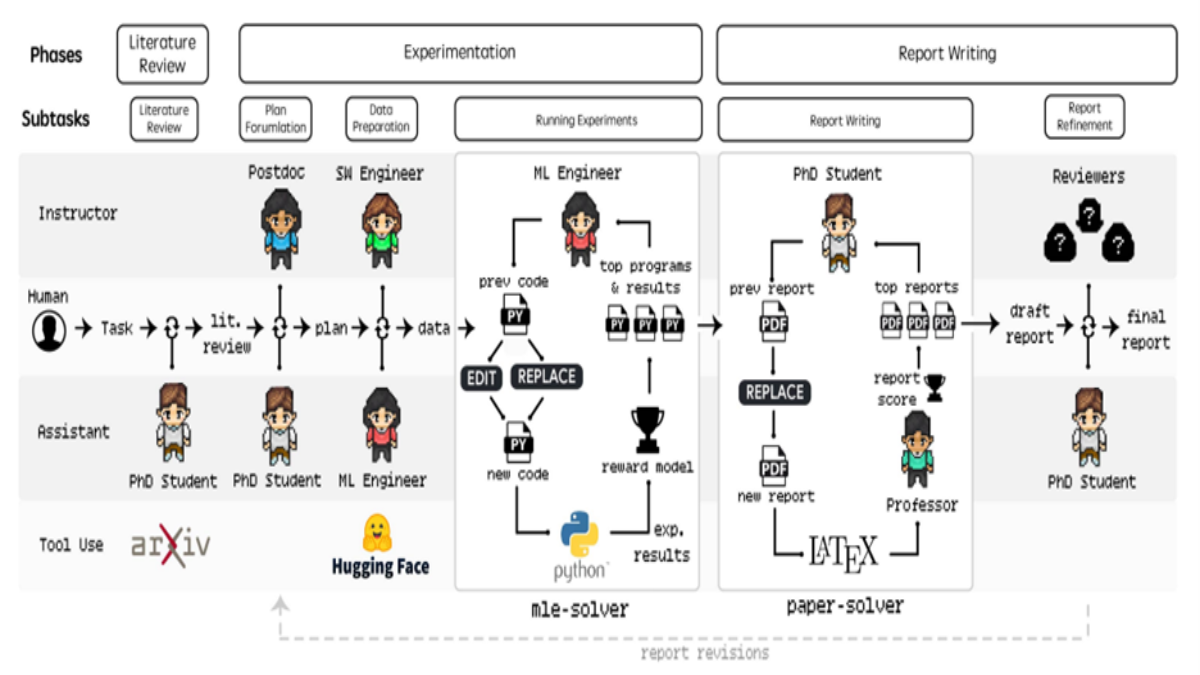

Some research looks at AI not just as a prediction tool, but as a partner in the research process itself. Agent Laboratory explores a framework where specialized LLM agents, like “researcher,” “reviewer,” and “advisor” agents, collaborate on research workflows:

- Aggregating and summarizing literature

- Generating and running code

- Documenting results and drafting scientific text

By coordinating these agents and involving humans for oversight, the system can significantly reduce the time and effort needed to produce research outcomes, from concept to runnable code to written reports. This work suggests:

- Faster research cycles where AI tools handle routine tasks.

- Human‑AI hybrid workflows that empower researchers rather than replace them.
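
The staged workflow with human oversight can be sketched as a simple pipeline in which each stage's output feeds the next and a person approves each step. This is a minimal illustration under assumptions, not the Agent Laboratory API: `run_workflow`, the stage names, and the lambda "agents" are hypothetical, and real agents would wrap LLM calls and code execution.

```python
from typing import Callable, Dict, List, Tuple

Agent = Callable[[str], str]            # takes the previous stage's output
Approver = Callable[[str, str], bool]   # human checkpoint: (stage name, output)


def run_workflow(
    topic: str,
    stages: List[Tuple[str, Agent]],
    approve: Approver,
) -> Dict[str, str]:
    """Run stages in order; stop at the first output a human does not approve."""
    artifacts: Dict[str, str] = {}
    context = topic
    for name, agent in stages:
        output = agent(context)
        if not approve(name, output):
            break  # rejected: return only what was approved so far
        artifacts[name] = output
        context = output  # the next agent builds on this result
    return artifacts


# Toy agents: plain functions standing in for LLM-backed roles.
stages: List[Tuple[str, Agent]] = [
    ("literature", lambda topic: f"summary of papers on {topic}"),
    ("experiments", lambda summary: f"code and results based on: {summary}"),
    ("report", lambda results: f"draft report from: {results}"),
]

approved_all = run_workflow(
    "sparse attention", stages, approve=lambda name, out: True
)
stopped_early = run_workflow(
    "sparse attention", stages, approve=lambda name, out: name != "experiments"
)
print(list(approved_all), list(stopped_early))
```

Here the approval callback is the oversight point. In practice it could prompt a researcher for feedback and trigger a retry rather than simply halting the run.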

Agent Laboratory: AI-assisted research workflows

A Unifying Theme: Efficiency Meets Innovation

Across hybrid model architecture, hardware-aware systems, and AI-assisted workflows, several key themes emerge from these academic contributions:

- Efficiency is essential: Reducing memory, compute, and energy costs enables broader adoption.

- Reuse and adaptation: Leveraging existing models or hardware platforms is more sustainable than retraining from scratch.

- Systems and algorithms evolve together: Optimization works best when models consider deployment environments.

- AI enhances research: Beyond modeling, AI can accelerate the scientific process, making research faster and more reproducible.

These efforts collectively demonstrate that advancing AI isn’t just about building bigger models; it’s about building smarter systems and workflows.

AMD at ICLR 2026

AMD research was well represented at ICLR 2026, with accepted papers including:

- PARD: Accelerating LLM Inference with Low‑Cost PARallel Draft Model Adaptation. Zihao An, Huajun Bai, Ziqiong Liu, Dong Li, Emad Barsoum

- Diffsparse: Accelerating Diffusion Transformers With Learned Token Sparsity. Haowei Zhu, Ji Liu, Ziqiong Liu, Dong Li, Jun-Hai Yong, Bin Wang, Emad Barsoum

- Training-Free Loosely Speculative Decoding: Accepting Semantically Correct Drafts Beyond Exact Match. Jinze Li, Yixing Xu, Guanchen Li, Shuo Yang, Jinfeng Xu, Xuanwu Yin, Dong Li, Edith C. H. Ngai, Emad Barsoum

- Latent Visual Reasoning. Bangzheng Li, Ximeng Sun, Jiang Liu, Ze Wang, Jialian Wu, Xiaodong Yu, Hao Chen, Emad Barsoum, Muhao Chen, Zicheng Liu

- XModBench: Benchmarking Cross-Modal Capabilities and Consistency in Omni-Language Models. Xingrui Wang, Jiang Liu, Chao Huang, Xiaodong Yu, Ze Wang, Ximeng Sun, Jialian Wu, Alan Yuille, Emad Barsoum, Zicheng Liu

- ImageDoctor: Diagnosing Text-to-Image Generation via Grounded Image Reasoning. Yuxiang Guo, Jiang Liu, Ze Wang, Hao Chen, Ximeng Sun, Yang Zhao, Jialian Wu, Xiaodong Yu, Zicheng Liu, Emad Barsoum

Looking Ahead

As AI continues to scale, research that balances performance, efficiency, and practicality will become more important. Through ongoing academic contributions, AMD is helping to define this balance. We are advancing hybrid model architectures and hardware-aware optimizations, and exploring AI-assisted research tools that reduce friction in the research process.

These efforts highlight AMD's commitment to open, collaborative research that connects algorithms, systems, and real-world deployment. By sharing insights, tools, and methods with the wider community, AMD is helping ensure that progress in AI is driven not just by scale but also by thoughtful design and accessibility. As researchers build on these ideas by experimenting with hybrid models, agent-based workflows, and system-aware optimization, collaboration between academia and industry will continue to play a key role in shaping AI that is more capable, efficient, and impactful.

Explore More AMD Research

For additional AMD research blogs, technical deep dives, and open academic contributions, visit the ROCm blogs.