Turn Your Next Refresh Into an IT Transformation

Choose AMD EPYC CPU-powered servers to reinvent your data center and your business.

As enterprises refresh and build out new data centers, they’re following the example set by the world’s largest cloud providers and deploying AMD EPYC Server CPUs.

For five generations, AMD EPYC™ Server CPUs have set the pace for data center, hyperscale, and supercomputing breakthroughs while Intel® Xeon® has struggled to compete.

Now it’s the enterprise’s turn to experience the high performance and efficiency of AMD EPYC Server CPUs.

With the launch of the AMD “Zen” architecture in 2017, AMD began a new chapter in x86 computing that redefined performance for data center CPUs. From 2017 to 2025, AMD EPYC Server CPUs increased performance 11.3X, leaving the competition behind.1

Results compare SPECrate®2017_int_base, best ToS scores at end of launch year for 1st Generation through 5th Generation AMD EPYC CPUs vs. 2nd Gen Intel Xeon through Intel 6 CPUs. Gains are relative to respective first-generation models. See note 1 for details.

The market has noticed AMD leadership. First, high-performance computing (HPC) made the switch, then hyperscale cloud providers. Now enterprise data centers are moving to AMD, driving our market share to new heights.

According to Mercury Research, AMD’s revenue share of the data center market segment surpassed 40% in Q2 2025. See note 2 for details.

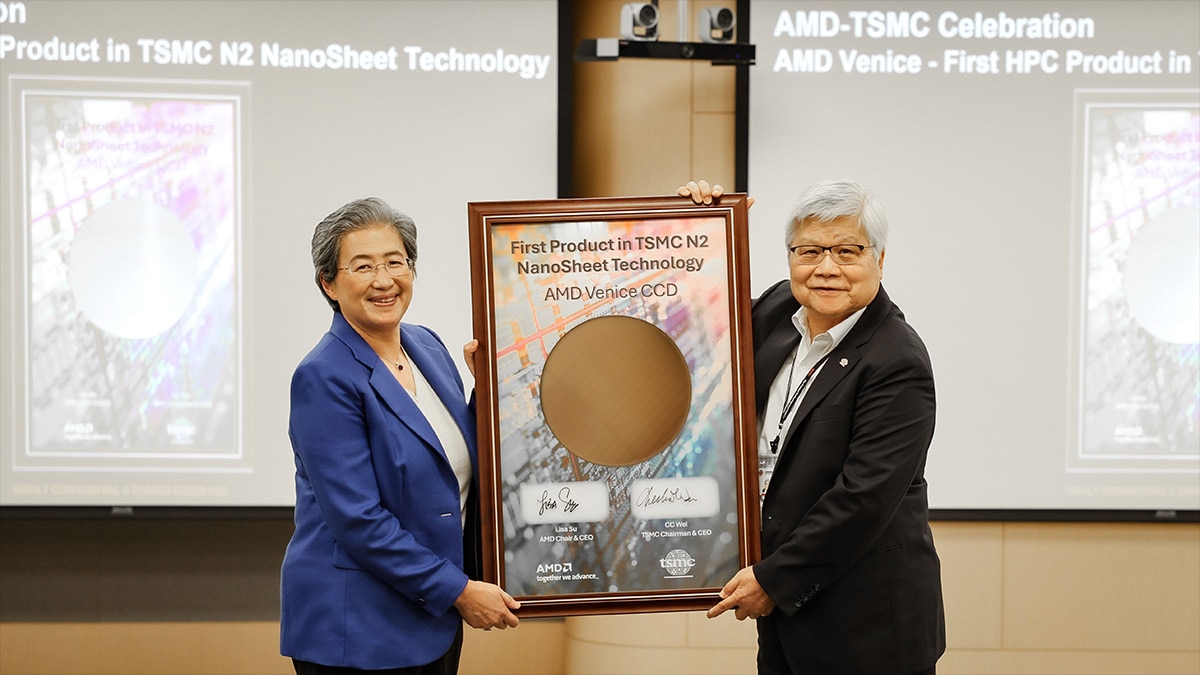

AMD is first to 2nm. 6th Generation AMD EPYC Server CPUs are the first to tape out on TSMC’s 2nm facility in Arizona. The result: performance and efficiency Intel Xeon can’t touch.

In integer performance, 2022-era AMD EPYC 9004 CPUs outperform Intel Xeon 6 at every core count. Our current 5th Generation AMD EPYC Server CPUs take performance, core counts, and frequency far beyond anything Intel can produce.4

Based on SPECrate®2017_int_base scores from spec.org as of June 6, 2025. Each core count features the highest-performing SKU in the processor series. See note 4 for details.

The key benefits of x86 are its universal instruction set and general-purpose flexibility. That’s why every AMD EPYC Server CPU generation shares the same instructions and feature sets.

Intel split Intel Xeon 6 CPUs into separate architectures, with different features and different instruction sets, then saddled them both with performance-eating “engines” that require custom tuning for modest gains in micro-benchmarks.

| | AMD EPYC™ 9005 CPUs: Out of the Box x86 Performance, Seamless Integration | Intel® Xeon® 6 CPUs |
| --- | --- | --- |
| Architecture Strategy | Single ISA; common Zen 5 cores on all EPYC 9005 platforms; cross-compatible CPUs | Split architecture; must choose performance cores or efficiency cores; can’t mix architectures at the server level |
| Portfolio | 8 cores to 192 cores | 8 cores to 144 cores* |
| Feature Availability | Unified feature set for leadership performance and efficiency | More complexity: broad model choices require compromise on features, performance, memory, efficiency, cost, and more |
| AVX-512 | Yes, on all models | Only on Performance core models |
| Simultaneous Multithreading (SMT) | Yes, on all models | Only on Performance core models |
| Deployment Strategy | Unified feature set simplifies deployment | Mixed features require tuning, tweaking, and optimizing to run on a mix of “engines” and aligning deployment to unique per-system capabilities |

*Intel Xeon product information as of Q4 2025: https://www.intel.com/content/www/us/en/ark/products/series/240357/intel-xeon-6-processors.html
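For teams that want to confirm feature uniformity before deployment, the comparison above can be turned into a simple fleet check. The following is a minimal sketch, assuming Linux hosts that report CPU features in the `flags` line of `/proc/cpuinfo` (where `avx512f` is the AVX-512 Foundation flag). The function names and the host inventory are hypothetical and used only for illustration; they are not part of any AMD or Intel tool.

```python
from pathlib import Path


def parse_cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the feature flags from the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


def has_avx512(flags: set[str]) -> bool:
    """True if the AVX-512 Foundation flag is present."""
    return "avx512f" in flags


def fleet_is_uniform(hosts: dict[str, set[str]], required: set[str]) -> bool:
    """True if every host in the (hypothetical) inventory has all required flags."""
    return all(required <= flags for flags in hosts.values())


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        local_flags = parse_cpu_flags(cpuinfo.read_text())
        print("AVX-512 available on this host:", has_avx512(local_flags))
```

If every server in a pool exposes the same flags, a single build of an application can be scheduled on any of them; if `fleet_is_uniform` returns `False`, workloads that depend on the missing feature need to be pinned to specific hosts.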

Third-party tests, AMD studies, and data from AMD customers demonstrate that faster, more efficient AMD EPYC Server CPU cores outperform proprietary Intel “acceleration” and split ISA in many applications. For micro-benchmarks and workloads in which the proprietary Intel accelerators do begin to show benefit, they’re no match for dedicated, workload-specific silicon accelerators, and they add time- and resource-intensive complications.

Standard 4th Generation AMD EPYC 9654 Server CPUs outperform 5th Gen Intel Xeon Platinum 8592+ on Llama 2 and Llama 3 inference workloads, even with Intel® AMX active.6

A MaxLinear Panther III accelerator paired with a 4th Generation AMD EPYC 9654 server outpaces two Intel Xeon Platinum 8472C servers with Intel® QAT crypto and compression accelerators by up to 13X on L9 DEFLATE benchmarks.7

By switching its cloud to single-socket servers powered by 64-core 4th Gen AMD EPYC 9554P Server CPUs, Swisscom uses 55% fewer watts per vCPU than it would with an Intel Xeon-powered server.8

AMD EPYC Server CPUs come in multiple configurations for AI workloads, from 192-core, high-density processors with the capacity to devour AI inference workloads to high-frequency CPUs that help GPU arrays run faster.

Server consolidation is a major benefit of a data center refresh, especially when you’re making room for dedicated AI accelerators. Choose AMD EPYC Server CPUs and you’ll need fewer servers and less electricity than you would with servers equipped with the latest Intel Xeon processors.
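The consolidation arithmetic can be sketched in a few lines. The example below is a rough estimate under stated assumptions: aggregate throughput is held constant, each server contributes a single benchmark-style score, and power draw per server is a fixed figure. All numbers are hypothetical placeholders chosen for easy arithmetic, not measured results for any AMD or Intel product.

```python
import math


def servers_needed(required_score: float, score_per_server: float) -> int:
    """Smallest whole number of servers that meets the required aggregate score."""
    if score_per_server <= 0:
        raise ValueError("score_per_server must be positive")
    return math.ceil(required_score / score_per_server)


def fleet_power_watts(server_count: int, watts_per_server: float) -> float:
    """Total power draw for a fleet, assuming identical servers."""
    return server_count * watts_per_server


# Hypothetical existing fleet: 100 servers, each scoring 400 and drawing 500 W.
old_count, old_score, old_watts = 100, 400.0, 500.0
# Hypothetical replacement server: scores 1600 and draws 800 W.
new_score, new_watts = 1600.0, 800.0

required = old_count * old_score                  # 40,000 aggregate score
new_count = servers_needed(required, new_score)   # 25 servers
old_power = fleet_power_watts(old_count, old_watts)   # 50,000 W
new_power = fleet_power_watts(new_count, new_watts)   # 20,000 W

print(f"Servers: {old_count} -> {new_count}")
print(f"Power:   {old_power:.0f} W -> {new_power:.0f} W")
```

Substituting published benchmark scores and measured wall power for your own configurations gives a first-pass view of how much rack space and electricity a refresh could free up for accelerators.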

You have little to sacrifice and everything to gain. Whether you’re building an on-site data center, looking for outstanding performance in the cloud, or architecting a new hybrid model, AMD EPYC Server CPUs are the logical choice.

There are more than 350 AMD EPYC Server CPU-powered platforms in market.

AMD EPYC Server CPUs power over 1,350 public cloud instances around the world.