Overview

AMD CDNA™ architecture is the dedicated compute architecture underlying AMD Instinct™ GPUs and APUs. It combines advanced packaging that unifies AMD chiplet technologies and High Bandwidth Memory (HBM), a high-throughput AMD Infinity Architecture fabric, and advanced Matrix Core Technology that supports a comprehensive set of AI and HPC data formats. Together, these are designed to reduce data movement overhead and enhance power efficiency.

Comparison between generations:

| | CDNA | CDNA 2 | CDNA 3 | CDNA 4 |
|---|---|---|---|---|
| Process Technology | 7nm FinFET | 6nm FinFET | 5nm + 6nm FinFET | 3nm + 6nm FinFET |
| Transistors | 25.6 Billion | Up to 58 Billion | Up to 146 Billion | Up to 185 Billion |
| CUs / Matrix Cores | 120 / 440 | Up to 220 / 880 | Up to 304 / 1216 | 256 / 1024 |
| Memory Type | 32 GB HBM2 | Up to 128 GB HBM2E | Up to 256 GB HBM3 / HBM3E | 288 GB HBM3E |
| Memory Bandwidth (Peak) | 1.2 TB/s | Up to 3.2 TB/s | Up to 6 TB/s | 8 TB/s |
| AMD Infinity Cache™ | N/A | N/A | 256 MB | 256 MB |
| GPU Coherency | N/A | Cache | Cache and HBM | Cache and HBM |
| Data Type Support | INT4, INT8, BF16, FP16, FP32, FP64 | INT4, INT8, BF16, FP16, FP32, FP64 | Matrix: INT8, FP8, BF16, FP16, TF32, FP32, FP64; Vector: FP16, FP32, FP64; Sparsity: INT8, FP8, BF16, FP16 | Matrix: MXFP4, MXFP6, INT8, MXFP8, OCP FP8, BF16, FP16, TF32*, FP32, FP64; Vector: FP16, FP32, FP64; Sparsity: OCP-FP8, INT8, FP16, BF16 |
| Products | AMD Instinct™ MI100 Series | AMD Instinct™ MI200 Series | AMD Instinct™ MI300 Series | AMD Instinct™ MI350 Series |

*TF32 is supported by software emulation.
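One way to read the capacity and bandwidth rows together is to ask how long a single pass over the entire HBM would take at peak bandwidth. The sketch below is only back-of-the-envelope arithmetic on the table's figures. It assumes decimal units (1 TB = 1000 GB), treats the "Up to" entries as exact, and pairs each generation's largest capacity with its highest bandwidth. The function name `full_sweep_ms` is made up for this illustration.

```python
# Peak figures copied from the comparison table (decimal units: 1 TB = 1000 GB).
# Assumption: "Up to" entries are treated as exact and paired with each other.
GENERATIONS = {
    "CDNA":   {"capacity_gb": 32,  "bandwidth_tb_s": 1.2},
    "CDNA 2": {"capacity_gb": 128, "bandwidth_tb_s": 3.2},
    "CDNA 3": {"capacity_gb": 256, "bandwidth_tb_s": 6.0},
    "CDNA 4": {"capacity_gb": 288, "bandwidth_tb_s": 8.0},
}


def full_sweep_ms(capacity_gb, bandwidth_tb_s):
    """Milliseconds needed to read the whole HBM once at peak bandwidth."""
    seconds = capacity_gb / (bandwidth_tb_s * 1000.0)  # GB / (GB/s)
    return seconds * 1000.0


for name, spec in GENERATIONS.items():
    ms = full_sweep_ms(spec["capacity_gb"], spec["bandwidth_tb_s"])
    print(f"{name}: {ms:.1f} ms per full-memory sweep")
```

Real workloads rarely sustain peak bandwidth, so these numbers are lower bounds rather than predictions.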

Benefits

Matrix Core Technologies

AMD CDNA 4 offers enhanced Matrix Core Technologies that double the computational throughput for low-precision matrix data types compared to the previous-generation architecture. It also improves instruction-level parallelism, expands the shared LDS (Local Data Share) with double the bandwidth, and supports a broad range of precisions that now includes MXFP4 and MXFP6, along with sparse matrix (sparsity) support for OCP-FP8, INT8, FP16, and BF16.
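To make the MXFP4 format more concrete, here is a minimal software sketch of block-scaled quantization as described in the OCP Microscaling (MX) formats specification: 32 elements share one power-of-two scale, and each element is stored as a 4-bit E2M1 value. This illustrates the data format only and is not a description of how the Matrix Cores implement it. The function names are hypothetical, the shared exponent is not clamped to the 8-bit scale range, and ties are rounded toward the smaller magnitude for simplicity.

```python
import math

# Magnitudes representable by FP4 E2M1 (1 sign, 2 exponent, 1 mantissa bit).
FP4_E2M1_VALUES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
E2M1_EMAX = 2     # largest E2M1 exponent: 6.0 = 1.5 * 2**2
BLOCK_SIZE = 32   # elements that share one scale in the MX formats


def quantize_mxfp4_block(block):
    """Return (shared_exponent, fp4_values) for one block of 32 floats."""
    assert len(block) == BLOCK_SIZE
    amax = max(abs(x) for x in block)
    if amax == 0.0:
        return 0, [0.0] * BLOCK_SIZE
    # Power-of-two scale chosen so the largest element maps near the top
    # of the E2M1 range.
    shared_exp = math.floor(math.log2(amax)) - E2M1_EMAX
    scale = 2.0 ** shared_exp
    quantized = []
    for x in block:
        mag = min(abs(x) / scale, FP4_E2M1_VALUES[-1])  # saturate at 6.0
        # Nearest representable magnitude; ties pick the smaller value
        # (a simplification, not round-to-nearest-even).
        nearest = min(FP4_E2M1_VALUES, key=lambda v: abs(v - mag))
        quantized.append(math.copysign(nearest, x))
    return shared_exp, quantized


def dequantize_mxfp4_block(shared_exp, quantized):
    """Reconstruct approximate floats from the shared exponent and FP4 values."""
    scale = 2.0 ** shared_exp
    return [q * scale for q in quantized]
```

Because the scale is shared, one large outlier in a block pushes the small elements toward zero. That is the accuracy cost traded for the 4-bit storage.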

Enhanced AI Acceleration

AMD CDNA 4 adds AI acceleration features for LLMs: improved GEMM performance with reduced latency, better power efficiency through lower-precision formats, and more flexibility for mixed-precision AI projects that must balance model accuracy, speed, and power efficiency.
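The precision trade-off can be made tangible by estimating how much memory a model's weights occupy in each format. The sketch below is a rough estimate under stated assumptions: the 400-billion-parameter count is hypothetical, the MX formats are charged one 8-bit shared scale per 32 elements (per the OCP Microscaling specification, not this page), and activations, KV cache, and runtime overhead are ignored. The 288 GB capacity is the CDNA 4 figure from the comparison table.

```python
# Effective storage bits per weight. MX formats add one 8-bit shared scale
# per 32-element block (assumption taken from the OCP MX specification).
BITS_PER_ELEMENT = {
    "FP16/BF16": 16.0,
    "MXFP8": 8.0 + 8.0 / 32,
    "MXFP6": 6.0 + 8.0 / 32,
    "MXFP4": 4.0 + 8.0 / 32,
}

HBM_CAPACITY_GB = 288   # CDNA 4 capacity from the comparison table
NUM_PARAMS = 400e9      # hypothetical model size, for illustration only


def weight_footprint_gb(num_params, bits_per_element):
    """Weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_element / 8 / 1e9


for fmt, bits in BITS_PER_ELEMENT.items():
    gb = weight_footprint_gb(NUM_PARAMS, bits)
    verdict = "fits" if gb <= HBM_CAPACITY_GB else "does not fit"
    print(f"{fmt}: {gb:.1f} GB of weights, {verdict} in {HBM_CAPACITY_GB} GB")
```

Whether a lower-precision format is acceptable still depends on the accuracy the model retains after quantization, which this arithmetic does not capture.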

HBM Memory, Cache & Coherency

AMD Instinct MI350 Series GPUs offer an industry-leading 288 GB of HBM3E memory capacity to support larger models, with up to 8 TB/s of peak bandwidth, as well as shared memory and AMD Infinity Cache™ (a shared last-level cache), which eliminates data copies and improves latency.

Unified Fabric

Next-gen AMD Infinity Architecture, along with AMD Infinity Fabric™ technology, enables coherent, high-throughput unification of AMD GPU chiplet technology with stacked HBM3E memory in single devices and across multi-device platforms. It also offers enhanced I/O with PCIe® 5 compatibility.

Introducing AMD CDNA™ 4

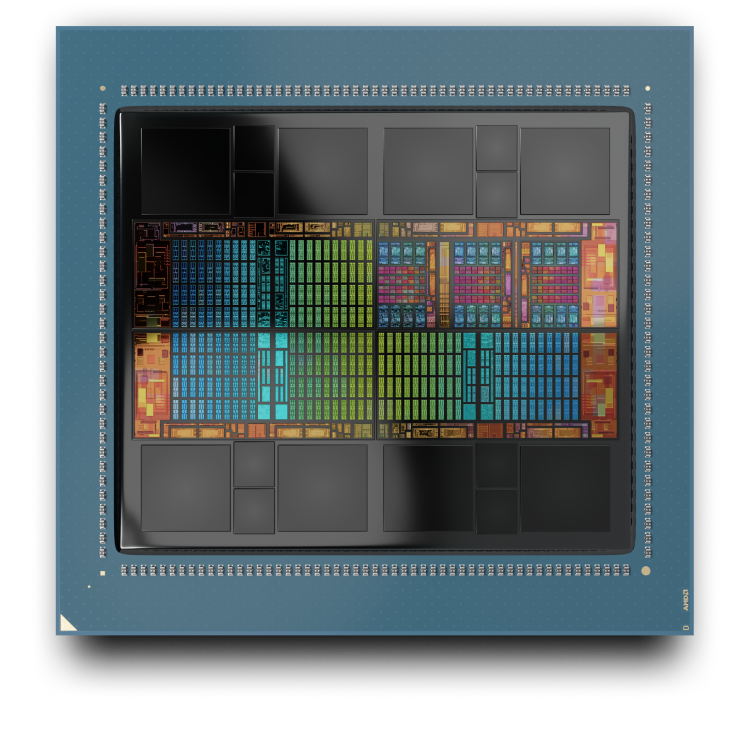

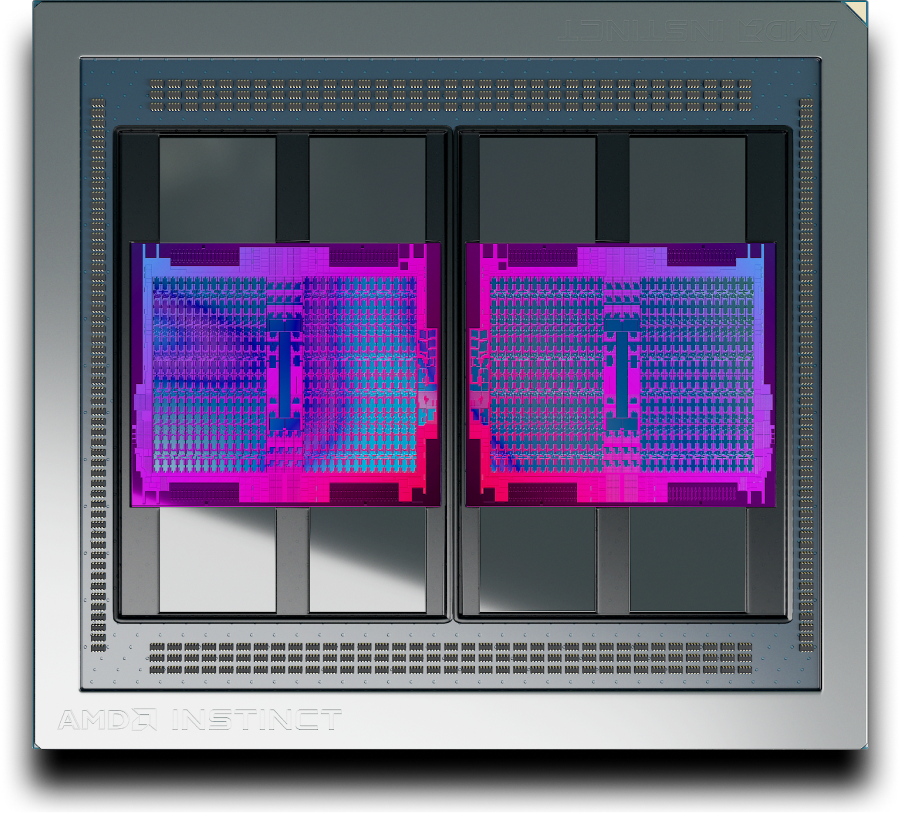

AMD CDNA™ 4 is the dedicated compute architecture underlying AMD Instinct™ MI350 Series GPUs. It features advanced packaging with chiplet technologies—designed to reduce data movement overhead and enhance power efficiency.

AMD Instinct MI350 Series GPUs

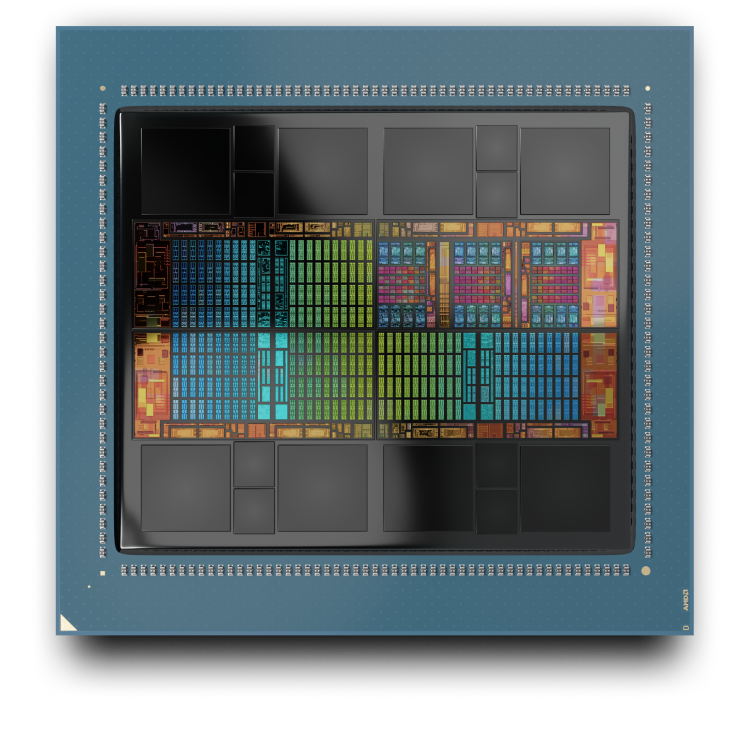

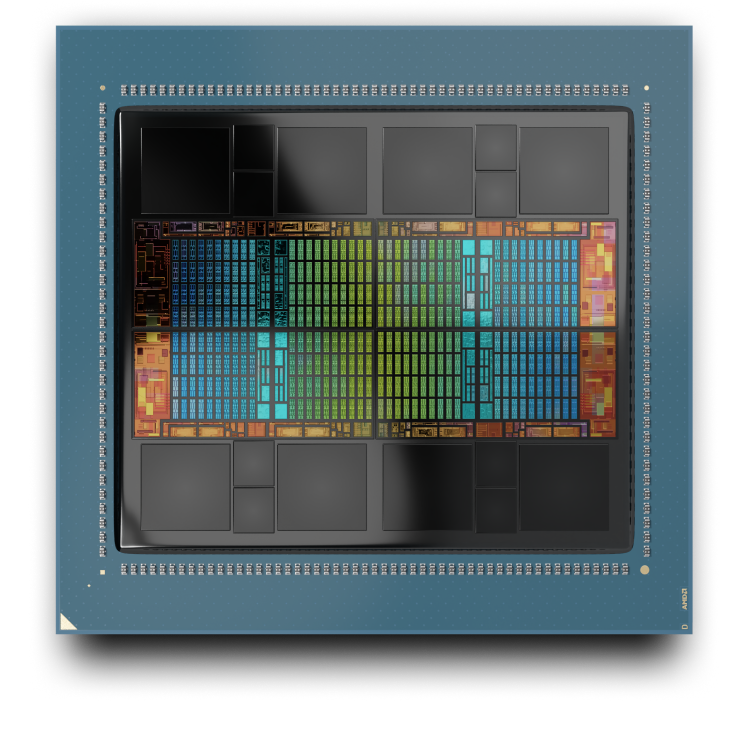

AMD CDNA 3

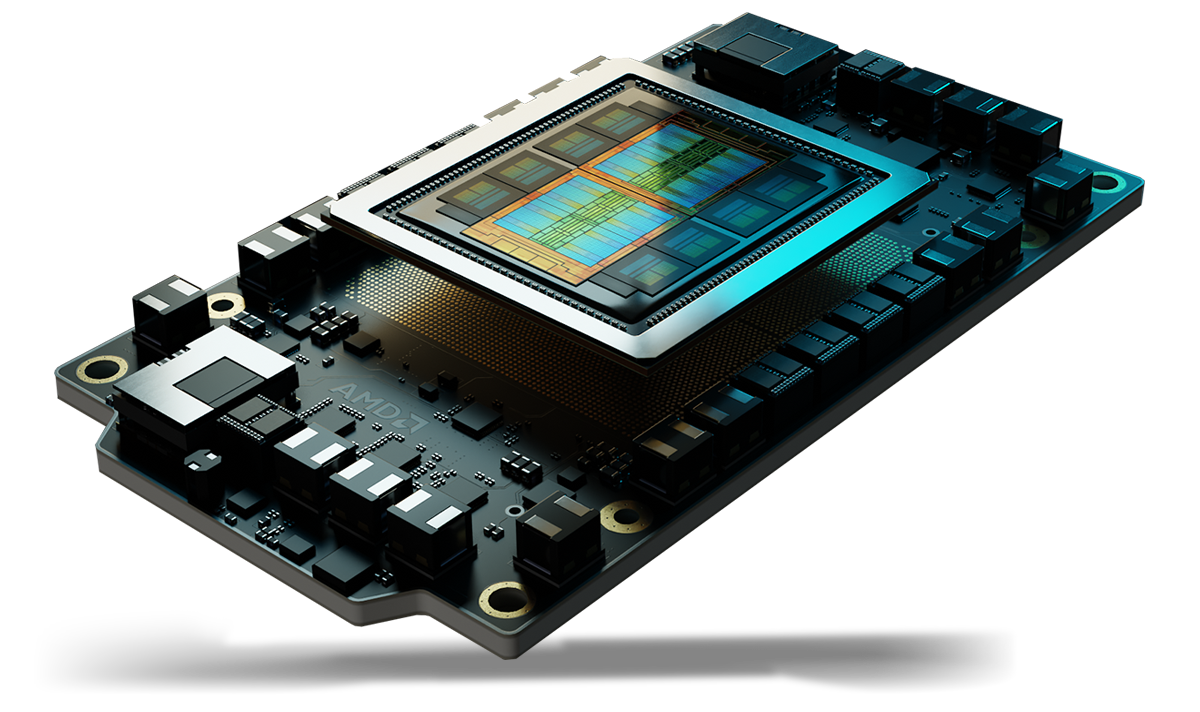

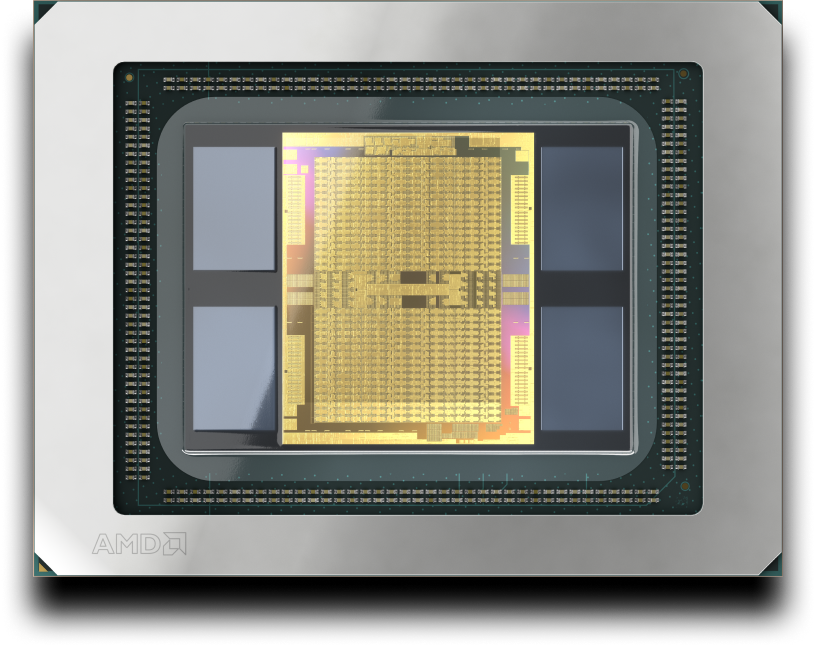

AMD CDNA 3 architecture is the dedicated compute architecture underlying AMD Instinct™ MI300 Series GPUs. It features advanced packaging with chiplet technologies—designed to reduce data movement overhead and enhance power efficiency.

AMD Instinct MI300A APU

AMD Instinct MI325X GPU

AMD CDNA 2

AMD CDNA 2 architecture is designed to accelerate even the most taxing scientific computing workloads and machine learning applications. It underlies AMD Instinct MI200 Series GPUs.

AMD CDNA

AMD CDNA architecture is a dedicated architecture for GPU-based compute that was designed to usher in the era of Exascale-class computing. It underlies AMD Instinct MI100 Series GPUs.

AMD Instinct Accelerators

Discover how AMD Instinct GPUs are setting new standards for Generative AI, training, and HPC.

AMD ROCm™ Software

AMD CDNA architecture is supported by AMD ROCm™ software, an open software stack that includes a broad set of programming models, tools, compilers, libraries, and runtimes for AI and HPC solution development targeting AMD Instinct GPUs.