AMD at NeurIPS 2025: The Evolution of Efficient AI Workflows

Dec 12, 2025

NeurIPS 2025 was a significant moment for AMD. Our presence was not just about showcasing research; it also helped shape how the community thinks about the future of AI infrastructure, model design, and agentic workflows. Across papers, talks, and community events, one theme stood out clearly: efficiency and modularity are no longer optional; they are the future of AI.

Zebra-Llama: A New Paradigm for Hybrid Model Composition

This year, we presented our new research paper, “Zebra-Llama: Towards Extremely Efficient Hybrid Models”. The project explores a question increasingly relevant to both researchers and AI practitioners: How far can we push efficiency without giving up performance and without retraining massive models from scratch?

Zebra-Llama introduces a family of hybrid LLMs (1B, 3B, 8B) that integrate Multi-Head Latent Attention (MLA) with Mamba. These models are directly composed from existing pre-trained transformers. This method avoids the enormous resource footprint of end-to-end training while maintaining model quality.

Three ideas caught the community’s attention:

- Direct Model Composition: Using existing checkpoints as modular building blocks

- Tailored Initialization: Ensuring stability when merging architectural components

- Distillation Strategy: Preserving performance without ballooning compute cost

This approach leads to:

- Substantially lower memory usage

- Reduced inference cost

- Comparable or improved performance on key tasks
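To make the distillation idea from the list of three ideas concrete, here is a minimal sketch of a generic temperature-scaled distillation loss. It is an illustration under our own assumptions, not the Zebra-Llama training recipe: the function names `softmax` and `distillation_loss`, the default temperature, and the toy logits are all hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions.

    Generic knowledge-distillation objective (an assumption for this sketch,
    not the paper's exact loss). The T^2 factor keeps gradient magnitudes
    comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    eps = 1e-12  # guards against log(0) if a probability underflows
    kl = sum(
        pi * (math.log(max(pi, eps)) - math.log(max(qi, eps)))
        for pi, qi in zip(p, q)
    )
    return kl * temperature ** 2


# Toy logits: the student roughly agrees with the teacher on the top token.
teacher = [4.0, 1.0, 0.5]
student = [3.0, 1.5, 0.2]
print(distillation_loss(teacher, student))
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, which is what lets a smaller or restructured student inherit behavior from an existing checkpoint.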

Researchers saw this as an encouraging direction for hybrid model architectures, especially for edge inference, sparse compute environments, and open-hardware deployments.

And notably, many attendees were excited to learn that AMD ROCm™ software is fully PyTorch-native, making experimentation with models like Zebra-Llama seamless on AMD GPUs.
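As a small illustration of what that looks like in practice, the sketch below checks which backend an installed PyTorch build targets. ROCm builds of PyTorch reuse the `torch.cuda` device API and set `torch.version.hip`, so existing device-selection code usually runs unchanged. The helper name `describe_backend` and its return strings are our own, chosen for illustration.

```python
import importlib.util


def describe_backend():
    """Report which GPU backend the installed PyTorch build targets, if any."""
    if importlib.util.find_spec("torch") is None:
        return "torch-not-installed"
    import torch

    if not torch.cuda.is_available():
        return "cpu"
    # ROCm builds set torch.version.hip; CUDA builds leave it as None.
    return "rocm" if getattr(torch.version, "hip", None) else "cuda"


print(describe_backend())
```

On a ROCm system the usual `torch.device("cuda")` string still selects the AMD GPU, so model code written for other accelerators typically needs no device-name changes.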

Composable Intelligence: Architecting the Next Generation of AI Systems

Under the PyTorch Foundation, Mahdi Ghodsi (SMTS Product Application, AMD) delivered a talk on Composable Intelligence and Modular Agentic Design, reflecting a broader trend away from monolithic models toward orchestrated multi-model systems.

He presented how using smaller, specialist models can yield systems that are:

- More reliable in decision boundaries

- Easier to audit, with clear internal traces

- More interpretable for safety and governance

- More portable, especially across heterogeneous compute
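The properties listed above can be illustrated with a toy orchestrator that routes each task to a named specialist and records an audit trace. This is a sketch under our own assumptions, not code from the talk: the `Orchestrator` class and the stand-in specialists `add_numbers` and `first_sentence` are hypothetical, and plain functions take the place of real models.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Orchestrator:
    """Routes each task to a named specialist and records an audit trace."""

    specialists: Dict[str, Callable[[str], str]]
    trace: List[dict] = field(default_factory=list)

    def route(self, task_type: str, payload: str) -> str:
        handler = self.specialists.get(task_type)
        if handler is None:
            # Unknown tasks are logged and rejected rather than guessed at.
            self.trace.append({"task": task_type, "status": "rejected"})
            raise KeyError(f"no specialist registered for {task_type!r}")
        result = handler(payload)
        self.trace.append(
            {"task": task_type, "input": payload, "output": result, "status": "ok"}
        )
        return result


def add_numbers(text: str) -> str:
    """Stand-in 'math specialist': sums whitespace-separated integers."""
    return str(sum(int(token) for token in text.split()))


def first_sentence(text: str) -> str:
    """Stand-in 'summarization specialist': returns the first sentence."""
    return text.split(".")[0].strip() + "."


orchestrator = Orchestrator({"sum": add_numbers, "summarize": first_sentence})
print(orchestrator.route("sum", "1 2 3"))
print(orchestrator.trace)
```

Because every decision passes through `route`, the trace shows which specialist handled which input, and a specialist can be swapped out without touching the others.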

This modular approach contrasts with monolithic black-box LLMs. It encourages systems thinking, where autonomy emerges from coordination, not just scale. Mahdi also pointed out that modular agentic ecosystems cannot succeed in closed settings. They rely on:

- Open standards

- Open protocols

- Open hardware

This message resonated with researchers working on agent frameworks, distributed inference, and emerging agent standards. Many also appreciated the reminder that PyTorch’s native ROCm integration simplifies deploying modular agentic stacks on AMD accelerators.

Ecosystem Collaboration: Research That Moves Open AI Forward

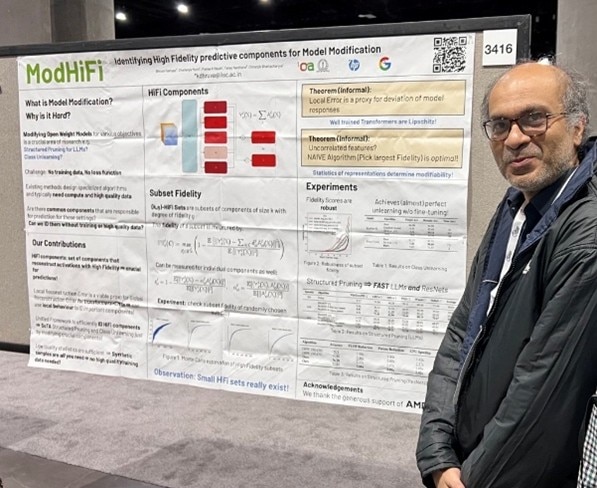

In addition to AMD’s own research, a collaborative poster supported by AMD drew strong engagement from attendees.

We also presented a poster on Tyr-the-Pruner, a structured pruning method that aims to maintain accuracy while substantially reducing model size and computational footprint. More details about the pruning technique can be found here.
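For readers new to structured pruning, the sketch below shows the general idea with a simple magnitude-based baseline: whole rows of a weight matrix (output neurons) are removed according to their L2 norm. This is not the Tyr-the-Pruner algorithm, only a generic illustration, and the function name `prune_rows`, the `keep_ratio` parameter, and the toy matrix are our own assumptions.

```python
import math


def prune_rows(weight, keep_ratio):
    """Keep the rows with the largest L2 norm.

    Returns (pruned_matrix, kept_indices). Removing whole rows is what makes
    the pruning 'structured': the result is a smaller dense matrix rather
    than a sparse one with scattered zeros.
    """
    n_keep = max(1, int(len(weight) * keep_ratio))
    norms = [math.sqrt(sum(w * w for w in row)) for row in weight]
    ranked = sorted(range(len(weight)), key=lambda i: norms[i], reverse=True)
    kept = sorted(ranked[:n_keep])  # preserve the original row order
    return [weight[i] for i in kept], kept


# Toy 4x2 weight matrix; keep half of the rows.
weight = [[0.1, 0.0], [3.0, 4.0], [0.0, 0.2], [1.0, 1.0]]
pruned, kept = prune_rows(weight, keep_ratio=0.5)
print(kept, pruned)
```

Practical methods choose what to remove far more carefully than a norm ranking, but the output has the same shape: a smaller dense model that runs on standard kernels without sparse-compute support.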

With the growing emphasis on efficient mid-sized models, attendees valued seeing pruning research presented in a practical, deployment-ready context. It sparked meaningful discussions on connecting cutting-edge model compression with real-world inference on accessible hardware.

Scaling Community Energy: The AMD x vLLM NeurIPS Meetup

Our joint meetup with vLLM turned into one of the most energetic community gatherings of the entire conference.

We brought together:

- vLLM core contributors

- Researchers in high-performance inference

- Engineers and developers building real-world LLM systems

- Graduate students and open-source practitioners

Over 250 attendees came through for an evening of networking, technical deep-dives, arcade games, food, and drinks. Conversations ranged from multi-GPU scaling strategies to kernel-level optimizations to the future of model serving on open hardware.

The meetup showcased something we value deeply: a thriving ecosystem grows strongest when researchers and engineers collaborate in open spaces.

Looking Ahead

NeurIPS 2025 underscored a shift we’ve believed in for years:

- AI needs to be modular, not monolithic.

- Efficiency is not an optimization - it’s a requirement.

- Open ecosystems will define the next decade of progress.

From Zebra-Llama to agent orchestration frameworks to community events with vLLM, AMD is committed to building an open, efficient, and collaborative AI future.

And we’re just getting started.

Resources

- Zebra-Llama Paper

- ModHiFi Paper

- SAND-Math Paper @NeurIPS Math-AI workshop

- Blog post building a 32B Reasoning Model

- Developer Resources Portal – Access SDKs, libraries, documentation, and tools to accelerate AI, HPC, and graphics development.

- Developer Community Discord – Join global developer communities, share feedback, and exchange optimization tips directly with peers and AMD specialists.

- AI@AMD X – Stay updated with the latest software releases, AI blogs, tutorials, and news.

- Developer Central YouTube – Explore hands-on videos, demos, and deep-dive sessions from engineers and community experts.
