Day 0 Support for Qwen 3.5 on AMD Instinct GPUs

Feb 16, 2026

AMD is excited to announce Day 0 support for Alibaba’s latest generation of Large Language Models, Qwen 3.5, on AMD Instinct™ MI300X, MI325X, and MI35X GPU accelerators, in close collaboration with the Alibaba Qwen team. With the optimized ROCm™ software stack and the SGLang and vLLM inference serving frameworks, developers can deploy these state-of-the-art models immediately.

What’s New in Qwen 3.5?

1. Hybrid Attention Architecture

Unlike Qwen 3’s reliance on Sliding Window Attention, Qwen 3.5 utilizes a Hybrid Attention strategy:

- Full Attention: Standard multi-head attention layers are used at set intervals (defaulting to every 4th layer) to maintain high associative recall.

- Linear Attention (Gated Delta Networks): Qwen 3.5 implements Gated Delta Networks (Qwen3_5GatedDeltaNet) which offer linear complexity relative to sequence length. This allows the model to handle massive contexts with significantly reduced computational overhead.
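The interleaving described above can be sketched as a simple layer schedule. This is an illustrative sketch only: the function name, the parameter name `full_attention_interval`, and the layer labels are assumptions made for this example, and the exact position of the full-attention layers within each group is assumed to be the last one.

```python
def hybrid_layer_schedule(num_layers: int, full_attention_interval: int = 4) -> list[str]:
    """Label each decoder layer as full or linear attention.

    Assumption: every `full_attention_interval`-th layer (counting from 1)
    uses full attention; all other layers use linear attention.
    """
    if num_layers < 0 or full_attention_interval < 1:
        raise ValueError("num_layers must be >= 0 and the interval must be >= 1")
    return [
        "full_attention" if (index + 1) % full_attention_interval == 0 else "linear_attention"
        for index in range(num_layers)
    ]


if __name__ == "__main__":
    # With the default interval, one layer in four is a full-attention layer.
    for index, kind in enumerate(hybrid_layer_schedule(8)):
        print(index, kind)
```

With the default interval, three out of every four layers take the linear-attention path, which is where the long-context savings come from.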

2. Native Multimodal Capabilities

Qwen 3.5 is "multimodal by design," featuring a DeepStack Vision Transformer:

- 3D Convolutions: It treats time as a third dimension of video input, using Conv3d for patch embeddings to capture temporal dynamics natively.

- DeepStack Mechanism: It merges features from multiple layers of the visual encoder rather than just the last layer, capturing both fine-grained and high-level visual details.

3. Advanced MoE with Shared Experts

The Qwen 3.5 MoE model evolves the standard sparse architecture by introducing a Shared Expert mechanism:

- Shared Expert: A dedicated dense MLP processes every token to capture universal features, improving training stability and overall model performance.

- Routed Experts: Tokens are simultaneously routed to a subset of specialized experts (e.g., top-8 active out of 64) via a Top-K Router.
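The combination of one always-on shared expert with Top-K routed experts can be sketched with a toy example. This is a simplified illustration, not the Qwen 3.5 implementation: tokens are single floats, experts are plain Python callables, and the names `top_k_route` and `moe_forward` are hypothetical. Production implementations may differ, for example in how router weights are normalized or whether the shared expert output is gated.

```python
import math
from typing import Callable, Sequence

Expert = Callable[[float], float]


def top_k_route(logits: Sequence[float], k: int) -> dict[int, float]:
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    if not 1 <= k <= len(logits):
        raise ValueError("k must be between 1 and the number of experts")
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    peak = max(logits[i] for i in chosen)  # subtract the max for numerical stability
    scores = {i: math.exp(logits[i] - peak) for i in chosen}
    total = sum(scores.values())
    return {i: score / total for i, score in scores.items()}


def moe_forward(x: float, logits: Sequence[float], routed_experts: Sequence[Expert],
                shared_expert: Expert, k: int) -> float:
    """Shared expert sees every token; routed experts see it only when selected."""
    weights = top_k_route(logits, k)
    routed = sum(weight * routed_experts[i](x) for i, weight in weights.items())
    return shared_expert(x) + routed


if __name__ == "__main__":
    experts = [lambda x, scale=scale: scale * x for scale in (1.0, 2.0, 3.0, 4.0)]
    router_logits = [0.0, 2.0, 2.0, -1.0]  # experts 1 and 2 win with equal weight
    print(moe_forward(2.0, router_logits, experts, lambda x: x + 1.0, k=2))  # 8.0
```

Only the selected experts run for a given token, so the compute per token scales with k rather than with the total number of experts.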

The Strategic Value of Qwen 3.5 on AMD Instinct

Empowering Next-Generation AI Agents

This Day 0 enablement is designed for AI developers, system architects, and DevOps professionals building the next wave of AI agents and enterprise platforms. While previous models required trade-offs between parameter depth and reasoning speed, the Qwen 3.5 family on AMD Instinct GPUs allows teams to deploy massive 256K context windows and complex multimodal workflows with unprecedented efficiency.

Breaking the Long-Context Bottleneck

Traditional Transformer attention has quadratic complexity: as sequence length increases, the cost of computing attention grows with the square of the number of tokens. Qwen 3.5 addresses this with its Hybrid Attention architecture:

- Linear Scaling: By using Gated Delta Networks in most layers, the cost of those layers grows only linearly with sequence length, so the model can sustain performance across contexts that are impractical for models built solely on full attention.

- Inference Speed: In contexts exceeding 32K tokens, Qwen 3.5 delivers significantly higher throughput compared to its predecessors due to the reduced computational overhead.
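To illustrate why a recurrent linear-attention layer scales linearly, the sketch below implements a simplified form of the gated delta rule in pure Python. It assumes the update S ← α(S − β(S k)kᵀ) + β v kᵀ with output S q, as commonly stated for Gated Delta Networks, and omits normalization, gating projections, and multi-head structure. It is not the Triton kernel used in SGLang or vLLM, and the function names are hypothetical.

```python
Vector = list[float]
Matrix = list[list[float]]


def gated_delta_step(state: Matrix, q: Vector, k: Vector, v: Vector,
                     alpha: float, beta: float) -> tuple[Matrix, Vector]:
    """Apply one token: decay the memory, correct it toward v, then read with q.

    `state` has shape (len(v), len(k)) and never grows with sequence length.
    """
    d_v, d_k = len(v), len(k)
    # What the current memory would return for this key.
    recalled = [sum(state[i][j] * k[j] for j in range(d_k)) for i in range(d_v)]
    new_state = [
        [alpha * (state[i][j] - beta * recalled[i] * k[j]) + beta * v[i] * k[j]
         for j in range(d_k)]
        for i in range(d_v)
    ]
    output = [sum(new_state[i][j] * q[j] for j in range(d_k)) for i in range(d_v)]
    return new_state, output


def run_sequence(tokens: list[tuple[Vector, Vector, Vector, float, float]],
                 d_k: int, d_v: int) -> list[Vector]:
    """One pass over the tokens; per-token work depends only on d_k and d_v."""
    state = [[0.0] * d_k for _ in range(d_v)]
    outputs = []
    for q, k, v, alpha, beta in tokens:
        state, out = gated_delta_step(state, q, k, v, alpha, beta)
        outputs.append(out)
    return outputs


if __name__ == "__main__":
    key = [1.0, 0.0]
    tokens = [
        (key, key, [2.0, 3.0], 1.0, 1.0),  # write value [2, 3] under `key`
        (key, key, [5.0, 7.0], 1.0, 1.0),  # overwrite it with [5, 7]
    ]
    print(run_sequence(tokens, d_k=2, d_v=2))  # [[2.0, 3.0], [5.0, 7.0]]
```

Because the state has a fixed size, processing n tokens costs n identical steps, in contrast to full attention, where each new token attends to all previous ones.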

Enterprise Impact: Efficiency Without Compromise

For enterprises, this integration provides a path to high-performance AI without vendor lock-in:

- Massive Cost Savings: The Ultra-Sparse MoE design activates only a fraction of its total parameters during inference. This allows it to outperform larger dense models while using significantly less compute.

- Native Multimodality: With DeepStack and 3D Convolutions, the model can natively operate as a "Visual Agent"—identifying objects in complex environments for industrial or support use cases.

- Maximized ROI: By leveraging the massive HBM capacity of AMD Instinct GPUs, developers can serve full-scale models and massive contexts on a single GPU or single node, reducing the hardware footprint needed for production-grade agents.
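A back-of-the-envelope calculation makes the sparsity and memory claims concrete. The sketch below assumes that the "397B" and "A17B" in the model name denote total and active parameters, that weights are stored in 16-bit precision (2 bytes per parameter), and that the per-GPU HBM capacities are those on AMD's public spec sheets. It ignores KV cache, activations, and runtime overhead, so treat the result as a lower bound on memory use.

```python
TOTAL_PARAMS_B = 397.0   # assumed from the model name "397B"
ACTIVE_PARAMS_B = 17.0   # assumed from the model name "A17B"
BYTES_PER_PARAM = 2      # assumption: 16-bit weights

# Assumption: HBM capacity per GPU in GB, taken from public spec sheets.
HBM_GB = {"MI300X": 192, "MI325X": 256, "MI355X": 288}


def weight_memory_gb(total_params_billion: float, bytes_per_param: float) -> float:
    """Weights only: 1e9 parameters times bytes per parameter, expressed in GB."""
    return total_params_billion * bytes_per_param


def per_gpu_weight_gb(total_params_billion: float, bytes_per_param: float,
                      tensor_parallel_size: int) -> float:
    """Weights per GPU when sharded evenly across the tensor-parallel group."""
    return weight_memory_gb(total_params_billion, bytes_per_param) / tensor_parallel_size


def active_fraction(active_params_billion: float, total_params_billion: float) -> float:
    """Share of parameters used per token in a sparse MoE model."""
    return active_params_billion / total_params_billion


if __name__ == "__main__":
    shard = per_gpu_weight_gb(TOTAL_PARAMS_B, BYTES_PER_PARAM, tensor_parallel_size=8)
    print(f"weights: {weight_memory_gb(TOTAL_PARAMS_B, BYTES_PER_PARAM):.0f} GB total, "
          f"{shard:.2f} GB per GPU at TP=8")
    print(f"active per token: {100 * active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B):.1f}%")
    for gpu, capacity in HBM_GB.items():
        print(f"{gpu}: {capacity - shard:.2f} GB left per GPU for KV cache and activations")
```

Under these assumptions, an 8-GPU node holds the weights with room to spare on each device, which is what leaves headroom for long-context KV cache.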

Optimized for AMD ROCm, SGLang and vLLM

To ensure high performance on day zero, AMD has worked to provide optimized kernel support for the unique components of Qwen 3.5.

- Linear Attention via Triton: The Gated Delta Networks in Qwen 3.5 are supported in vLLM via Triton-based kernels (fused_recurrent_gated_delta_rule). Since SGLang and vLLM both support Triton on ROCm, these kernels work out of the box on AMD GPUs.

- Shared Expert MoE: The Shared Expert path leverages highly optimized hipBLASLt GEMM kernels, while the routed experts continue to use optimized AITER FusedMoE implementations.

- Vision Kernels: Multimodal Rotary Positional Embeddings (mRoPE) and Conv3d operations are fully supported via standard MIOpen and PyTorch kernels on AMD GPUs.

Developer Quickstart: Deploying Qwen 3.5 on AMD Instinct GPUs

Prerequisites:

Before you start, ensure you have access to AMD Instinct GPUs with the ROCm drivers installed.

How to Run Qwen 3.5 with SGLang on AMD Instinct GPUs

Follow these steps to launch Qwen 3.5 using the latest ROCm-optimized SGLang docker container.

Step 1: Launch Docker Container

docker pull rocm/sgl-dev:v0.5.8.post1-rocm720-mi30x-20260215

docker run -it \
  --device /dev/dri --device /dev/kfd \
  --network host --ipc host \
  --group-add video \
  --security-opt seccomp=unconfined \
  -v $(pwd):/workspace \
  rocm/sgl-dev:v0.5.8.post1-rocm720-mi30x-20260215 /bin/bash

Step 2: Start the SGLang Server

Launch the Qwen 3.5 model (dense or MoE). SGLang will automatically detect the hybrid attention layers and use the optimized Gated Delta Net kernels.

python3 -m sglang.launch_server \
  --port 8000 \
  --model-path Qwen/Qwen3.5-397B-A17B \
  --tp-size 8 \
  --attention-backend triton \
  --reasoning-parser qwen3 \
  --tool-call-parser qwen3_coder
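Loading a model of this size can take several minutes, so it helps to wait for the server before sending requests. The sketch below polls an HTTP endpoint until it answers. It assumes the server exposes the OpenAI-style `/v1/models` route on port 8000; the helper names `http_probe` and `wait_until_ready` and the retry settings are hypothetical choices for this example.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_probe(url: str, timeout_s: float = 5.0) -> bool:
    """Return True if a GET request to `url` answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        return False


def wait_until_ready(probe: Callable[[], bool], attempts: int = 60,
                     interval_s: float = 10.0,
                     sleep: Callable[[float], None] = time.sleep) -> bool:
    """Call `probe` up to `attempts` times, sleeping between failed tries."""
    for attempt in range(attempts):
        if probe():
            return True
        if attempt < attempts - 1:
            sleep(interval_s)
    return False


# Example usage (assumes the server started above is listening on localhost:8000):
#   ready = wait_until_ready(lambda: http_probe("http://localhost:8000/v1/models"))
```

Passing the probe and sleep function as arguments keeps the retry loop testable without a running server.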

Step 3: Run the Examples

You can now interact with the model through its OpenAI-compatible API. The Hugging Face model card provides examples to run:

https://huggingface.co/Qwen/Qwen3.5-397B-A17B#text-only-input

https://huggingface.co/Qwen/Qwen3.5-397B-A17B#image-input

https://huggingface.co/Qwen/Qwen3.5-397B-A17B#video-input

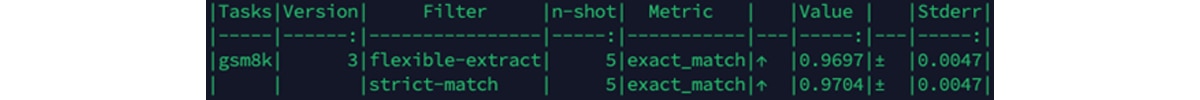
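For a quick text-only check that needs no extra packages, the following sketch builds a chat completion request with the Python standard library. It assumes the server from Step 2 is listening on localhost:8000 and serves the standard `/v1/chat/completions` route; the helper names, the `max_tokens` default, and the timeout are illustrative choices rather than recommended settings.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"   # assumption: server from Step 2, default host
MODEL = "Qwen/Qwen3.5-397B-A17B"


def build_chat_request(prompt: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible chat completions route."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout_s: float = 600.0) -> str:
    """Send the request and return the assistant message text."""
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Example usage (requires the running server):
#   print(send(build_chat_request("Summarize hybrid attention in one sentence.")))
```

With a reasoning parser enabled, the server may return the model's reasoning in a separate field from the final answer, so a small `max_tokens` budget can leave the answer text empty.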

Step 4: Accuracy Evaluation (Optional)

pip install "lm-eval[api]"

lm_eval --model local-completions \
  --model_args '{"base_url": "http://localhost:8000/v1/completions", "model": "Qwen/Qwen3.5-397B-A17B", "num_concurrent": 256, "max_retries": 10, "max_gen_toks": 2048}' \
  --tasks gsm8k \
  --batch_size auto \
  --num_fewshot 5 \
  --trust_remote_code

When the run finishes, lm_eval prints the GSM8K score.

How to Run Qwen 3.5 with vLLM on AMD Instinct GPUs

Step 1: Launch Docker Container

docker pull rocm/vllm-dev:nightly_main_20260211

docker run -it \
  --device /dev/dri --device /dev/kfd \
  --network host --ipc host \
  --group-add video \
  --security-opt seccomp=unconfined \
  -v $(pwd):/workspace \
  rocm/vllm-dev:nightly_main_20260211 /bin/bash

Install Transformers from source inside the container:

pip install git+https://github.com/huggingface/transformers.git

Step 2: Start the vLLM Server

VLLM_ROCM_USE_AITER=1 \
vllm serve Qwen/Qwen3.5-397B-A17B \
  --port 8000 \
  --tensor-parallel-size 8 \
  --reasoning-parser qwen3 \
  --enable-auto-tool-choice \
  --tool-call-parser qwen3_coder

Step 3: Run the Examples

Same as Step 3 in the SGLang section.

Step 4: Accuracy Evaluation (Optional)

Same as Step 4 in the SGLang section.

The steps above show how to serve Qwen 3.5 with both SGLang and vLLM. All of the AMD support code has been upstreamed, so the next upstream SGLang and vLLM Docker image releases will run Qwen 3.5 out of the box on AMD MI300X/MI325X/MI35X GPUs.

Conclusion

With the release of Qwen 3.5, Alibaba continues to push the boundaries of open-weight models. By providing Day 0 support on AMD Instinct™ GPUs with both SGLang and vLLM, we ensure that developers have the compute power and optimized software stack needed to run these massive, high-context models at production scale.

Additional Resources

- Join the AMD AI Developer Program to access AMD developer cloud credits, expert support, exclusive training, and community.

- Visit the ROCm AI Developer Hub for additional tutorials, open-source projects, blogs, and other resources for AI development on AMD GPUs.

- Explore AMD ROCm Software.

- Learn more about AMD Instinct GPUs.

- Download the model and code:

  - Hugging Face: https://huggingface.co/Qwen/Qwen3.5-397B-A17B

  - ModelScope: https://www.modelscope.ai/models/Qwen/Qwen3.5-397B-A17B