Introducing the AMD Pensando™ Pollara 400 AI NIC-Ready Server Platforms

Dec 10, 2025

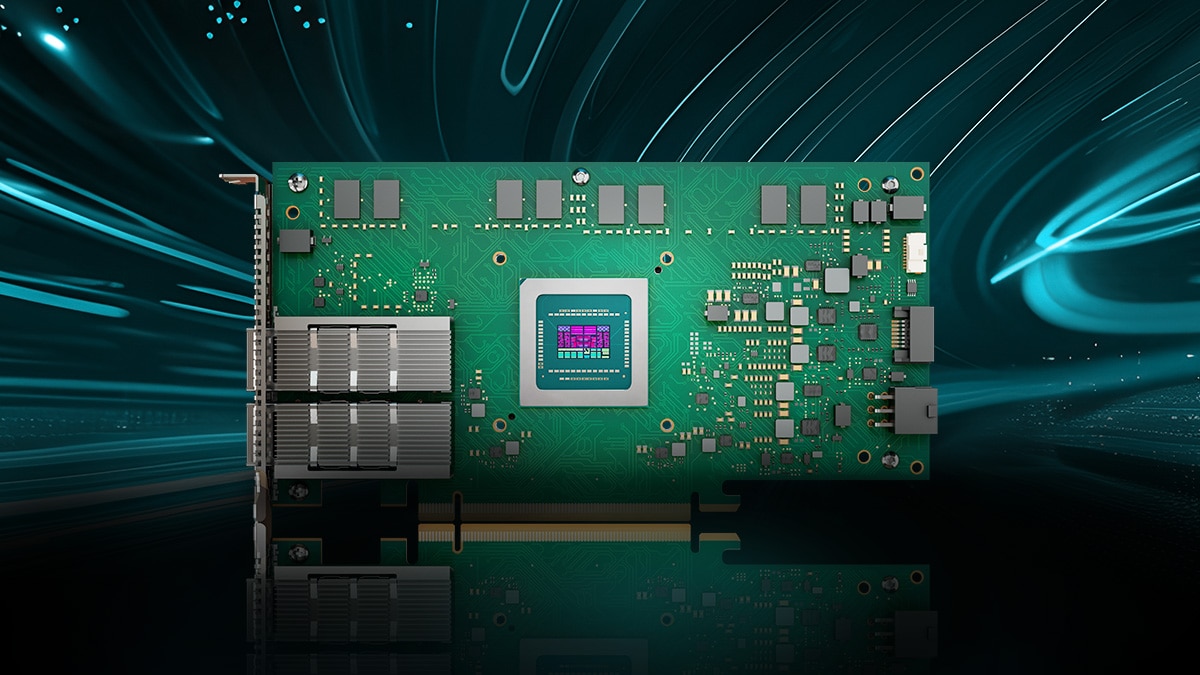

Enterprises, cloud providers, and research organizations need AI infrastructure that delivers results fast, not just parts to assemble. Today, AMD is introducing AMD Pensando™ Pollara 400 AI NIC–Ready Server Platforms: a growing ecosystem of server systems from leading partners that come preconfigured with the AMD Pensando™ Pollara 400 AI Network Interface Card to deliver high-performance, Ethernet-based AI networking out of the box for both front-end and back-end networks. By combining proven server designs, powerful AMD compute, and Pollara 400’s fully programmable 400G Ethernet, these platforms help organizations stand up scalable AI clusters faster and with greater confidence.

AMD Pensando™ Pollara 400 AI NIC–Ready Server Platforms bring together servers from leading partners with the AMD Pensando™ Pollara 400 AI NIC to power the communication cycles that AI workloads demand. These platforms give customers a consistent AI networking foundation across a broad ecosystem of server designs. Partners can offer dense GPU training nodes and high-throughput inference servers, which often combine AMD EPYC™ Server CPUs, AMD Instinct™ GPU accelerators, and AMD Pensando™ Pollara 400 AI NIC–based Ethernet fabrics. Unlike other AI NICs, the AMD Pensando™ Pollara 400 AI NIC is fully hardware and software programmable, meaning the AI NIC can be updated without a hardware overhaul as transport and congestion-control algorithms evolve. This flexibility allows the same server platform to be tuned over time for new AI workloads, changing business priorities, and new topologies.

Each AMD Pensando™ Pollara 400 AI NIC–Ready Server Platform is developed in collaboration with key ecosystem participants including Celestica, Cisco, Compal, Dell, Gigabyte, HPE, Ingrasys, Mitac, QCT, Supermicro, and Wistron, who bring their own strengths in system design, integration, and support. Server partners contribute configurations for training and inference, networking partners provide Ultra Ethernet–ready or RoCE-based fabrics, and software and orchestration partners help customers operationalize these systems at scale.

Within each platform, the AMD Pensando™ Pollara 400 AI NIC delivers the networking intelligence that AI workloads demand. Its P4-programmable pipeline enables customers to leverage advanced UEC features such as intelligent packet spray, out-of-order packet handling with in-order message delivery, selective retransmission, and path-aware congestion control. These capabilities help reduce AI job runtimes, improve effective throughput for collective operations, and increase network reliability by enabling fast fault detection and recovery.
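To make the receive-side ideas above more concrete, here is a minimal conceptual sketch in Python of out-of-order packet handling with in-order message delivery and selective retransmission. It is a toy model only: the `MessageReassembler` class, its methods, and the round-robin `spray` helper are hypothetical names invented for illustration, and nothing here represents the Pollara 400 pipeline, its P4 programs, or the UEC transport specification.

```python
from dataclasses import dataclass, field


def spray(num_packets: int, num_paths: int) -> list:
    """Assign each packet to a path round-robin (a simple stand-in for packet spray)."""
    return [seq % num_paths for seq in range(num_packets)]


@dataclass
class MessageReassembler:
    """Toy receiver: accepts packets in any order, delivers the message only when complete."""

    total_packets: int
    received: dict = field(default_factory=dict)  # sequence number -> payload

    def on_packet(self, seq: int, payload: bytes) -> None:
        # Packets sprayed over different paths can arrive in any order.
        # Out-of-range sequence numbers and duplicates are ignored.
        if 0 <= seq < self.total_packets and seq not in self.received:
            self.received[seq] = payload

    def missing(self) -> list:
        # Selective retransmission: only the gaps need to be requested again,
        # not every packet sent after the first loss.
        return [s for s in range(self.total_packets) if s not in self.received]

    def deliver(self):
        # In-order message delivery: release the payload only once all packets
        # are present, reassembled by sequence number.
        if self.missing():
            return None
        return b"".join(self.received[s] for s in range(self.total_packets))


if __name__ == "__main__":
    rx = MessageReassembler(total_packets=4)
    for seq, data in [(2, b"c"), (0, b"a"), (3, b"d")]:  # packet 1 is "lost"
        rx.on_packet(seq, data)
    print(rx.missing())   # sequence numbers to request again
    rx.on_packet(1, b"b")  # the retransmitted packet arrives
    print(rx.deliver())   # the complete message, in order
```

In this model, a single lost packet costs one retransmission rather than a replay of everything sent after it, which is the intuition behind the throughput and runtime benefits described above.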

Because the AMD Pensando™ Pollara 400 AI NIC is designed for open, standards-based Ethernet environments—including OCP 3.0 form factors and interoperability with a wide range of switches and optics—customers using these platforms can scale AI infrastructure while preserving choice. As the Ultra Ethernet Consortium and other ecosystem standards evolve, the AI NIC’s programmability provides a path to incorporate new transport protocols and optimizations into existing platforms without waiting for new hardware.

View our partner solutions catalog: AMD Pensando™ Pollara 400 AI NIC-Ready Server Platforms
