What is an AI NIC?

Dec 12, 2025

WHY DO WE NEED AI NICS?

Today’s AI workloads are increasingly demanding and require data centers optimized for generative, agentic, and physical AI. As Dr. Lisa Su noted in her AAI keynote, these workloads require synchronized GPU clusters for large language model (LLM) training and distributed inference. This shift demands a new approach to infrastructure: scale-out data centers.

Scale-out architectures connect multiple nodes of graphics processing unit (GPU) clusters, enabling them to work together as a unified system. These clusters must communicate constantly, sending massive volumes of data back and forth. Efficient physical and logical connectivity is essential for keeping them synchronized.

Ethernet is the modern standard for connecting GPU clusters, and nearly 90% of organizations are leveraging or planning to deploy Ethernet for their AI clusters, according to Enterprise Strategy Group’s recent analyst paper. However, traditional Ethernet wasn’t designed for the high-bandwidth, low-latency demands of AI workloads. AMD AI NICs are designed to meet those demands.

An AI NIC eases the burden on the network and optimizes its available bandwidth, promoting the scale-out of GPU-to-GPU connections. Additionally, an AI NIC’s ability to manage data transfer intelligently keeps communication efficient, with minimal latency and transmission delay.

WHAT CAN AI NICS DO?

Data centers are utilizing increasingly large GPU clusters. These clusters process immense amounts of data and often do so unpredictably, which can lead to network latency or to data packets being dropped (“lost”) in transit.

These delays can compound quickly. Imagine the lag from weak Wi-Fi, but scaled to millions of data packets moving across dozens of GPUs in a scale-out network. AI NICs mitigate these issues by intelligently rerouting traffic, reducing packet loss, and minimizing latency.

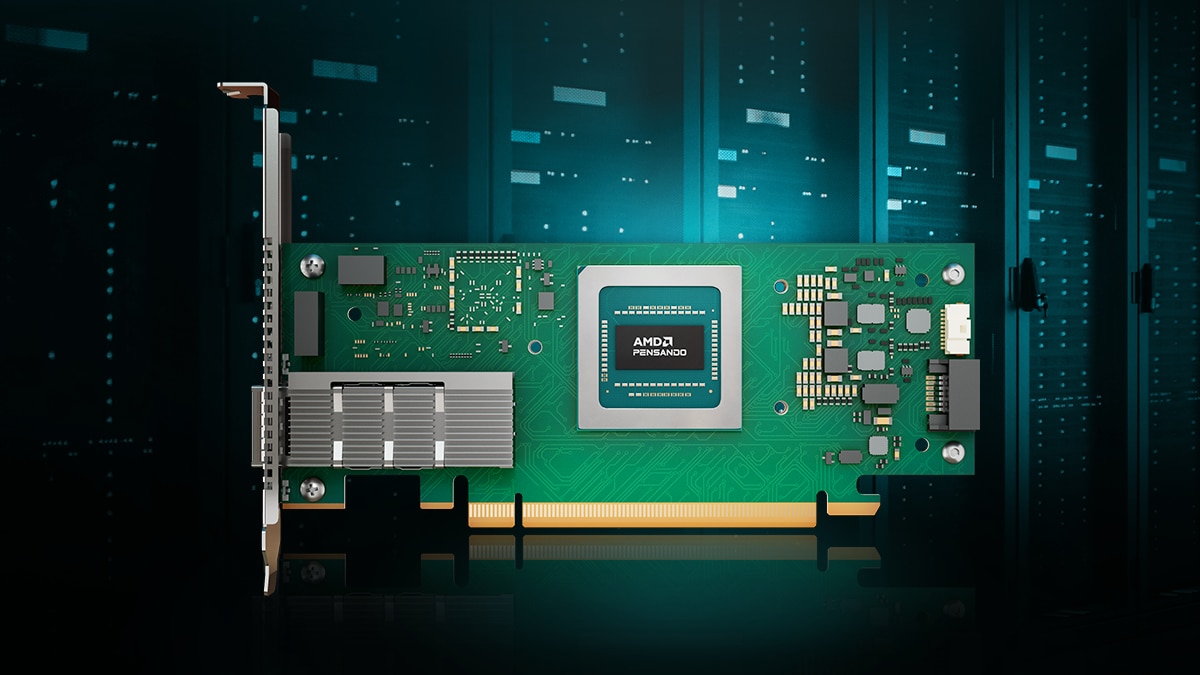

Even at these data volumes, AI NICs improve reliability by significantly reducing packet loss and latency, and their intelligent rerouting of traffic helps accelerate workloads. The AMD Pensando™ Pollara 400 AI NIC, for example, employs path-aware congestion avoidance, selective retransmission of data, and packet spray/multipathing to increase data throughput. Congestion and latency are inherent problems of large scale-out networks, and AI NICs serve to mitigate these issues and empower AI workloads.
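To make two of these techniques concrete, here is a minimal Python sketch. It is a toy model rather than the Pollara 400 implementation: the function names, the modulo stand-in for a flow hash, the round-robin spray, and the go-back-N baseline are all assumptions chosen for illustration. It compares per-path load when whole flows are pinned to one path versus sprayed packet by packet, and counts how many packets are resent after a loss with and without selective retransmission.

```python
def per_flow_path_load(flow_sizes, num_paths):
    """Pin every flow to one path (flow_id % num_paths stands in for a hash)."""
    load = [0] * num_paths
    for flow_id, size in enumerate(flow_sizes):
        load[flow_id % num_paths] += size
    return load


def packet_spray_load(flow_sizes, num_paths):
    """Spread individual packets round-robin across all paths."""
    load = [0] * num_paths
    next_path = 0
    for size in flow_sizes:
        for _ in range(size):
            load[next_path] += 1
            next_path = (next_path + 1) % num_paths
    return load


def go_back_n_resend(num_packets, lost):
    """Baseline: resend everything from the first lost sequence number on."""
    lost_in_window = [seq for seq in lost if 0 <= seq < num_packets]
    if not lost_in_window:
        return []
    return list(range(min(lost_in_window), num_packets))


def selective_resend(num_packets, lost):
    """Selective retransmission: resend only the packets that were lost."""
    return sorted({seq for seq in lost if 0 <= seq < num_packets})


# Hypothetical traffic: two large flows and three small ones over four paths.
flows = [1000, 10, 10, 10, 1000]
print(per_flow_path_load(flows, 4))         # [2000, 10, 10, 10]
print(packet_spray_load(flows, 4))          # [508, 508, 507, 507]

# Hypothetical loss: packets 3 and 57 out of 100 are dropped.
print(len(go_back_n_resend(100, {3, 57})))  # 97
print(selective_resend(100, {3, 57}))       # [3, 57]
```

In this toy case the two large flows collide on one path when flows are pinned, while spraying evens out the load. The trade-off is that sprayed packets can arrive out of order, so the receiving side has to tolerate reordering.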

The networking industry is continually evolving, and the ability to choose and adapt how data is transmitted keeps organizations from being constrained by legacy approaches. Typically, this is only possible with a configurable AI NIC that decides how and when to route data packets along the network path. A programmable AI NIC helps ensure compatibility with all the moving parts of a scale-out data center. This open, programmable approach provides the most flexibility as AI continues to develop rapidly, protecting large data center infrastructure investments.

WHEN ARE AI NICS USED?

AI NICs are crucial for efficiently processing large volumes of data in multi-node GPU clusters. Rather than training a model on a single GPU over an extended period, parallel GPU clusters allow the model to be trained in much less time. AI NICs facilitate the high-speed data transfer between GPUs that synchronous, large-scale model training across large datasets depends on.
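A rough back-of-the-envelope calculation shows why link speed matters for synchronous training. The sketch below assumes a ring all-reduce for gradient synchronization, which is one common collective algorithm and is not named in this article. It also assumes a hypothetical model with 1 billion parameters stored as 2-byte values, 8 GPUs, and a 400 Gb/s link per GPU. All numbers are illustrative, not measurements.

```python
def ring_allreduce_bytes_per_gpu(payload_bytes, num_gpus):
    """Bytes each GPU sends during one ring all-reduce of payload_bytes."""
    if num_gpus < 2:
        return 0.0  # a single GPU has nothing to synchronize
    return 2 * (num_gpus - 1) / num_gpus * payload_bytes


def transfer_seconds(num_bytes, link_gbps):
    """Ideal wire time; ignores protocol overhead, latency, and congestion."""
    return num_bytes * 8 / (link_gbps * 1e9)


# Hypothetical workload: 1 billion parameters, 2 bytes each, 8 GPUs.
gradient_bytes = 1_000_000_000 * 2
sent = ring_allreduce_bytes_per_gpu(gradient_bytes, 8)
print(sent)                         # 3500000000.0 bytes sent per GPU
print(transfer_seconds(sent, 400))  # roughly 0.07 s per synchronization step
```

Under these assumptions every training step spends about 70 ms on the wire just to synchronize gradients, before any congestion or retransmission. That cost repeats every step, so lost packets or uneven paths translate directly into idle GPU time.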

Once an AI model is online, having visibility into errors is essential to keeping the workload running. The reliability, availability, and serviceability (RAS) features of an AI NIC provide that visibility. Fault detection, for example, allows operators to identify and troubleshoot errors, improving job consistency and uptime for AI workloads. Better RAS in AI NICs means a more resilient network that is capable of handling large AI workloads and managing congestion.

Security is another significant network challenge that AI NICs help address. Scale-out networks process large amounts of data every minute, and AI NICs offer enhanced security, using robust encryption to protect sensitive information from potential cyber threats.

AI NICS IN ACTION

The AMD AI NIC™ adapter intelligently avoids congestion in data centers. View how some of its features actively work to improve performance HERE.

AI NICS: THE SOLUTION TO THE NETWORK

In the world of data processing, minimizing latency is essential, and AI NICs help to do exactly that. The AMD AI NIC™ addresses the network’s problems by offloading tasks from the GPU and CPU, applying path-aware congestion control, and facilitating fast, secure communication across GPU clusters. High levels of programmability and customization leave room for change, which is essential in an AI-driven, constantly adapting networking fabric.