The Most Advanced AMD AI Software Stack

Latest Algorithms and Models

Enhanced reasoning, attention algorithms, and sparse MoE for improved efficiency

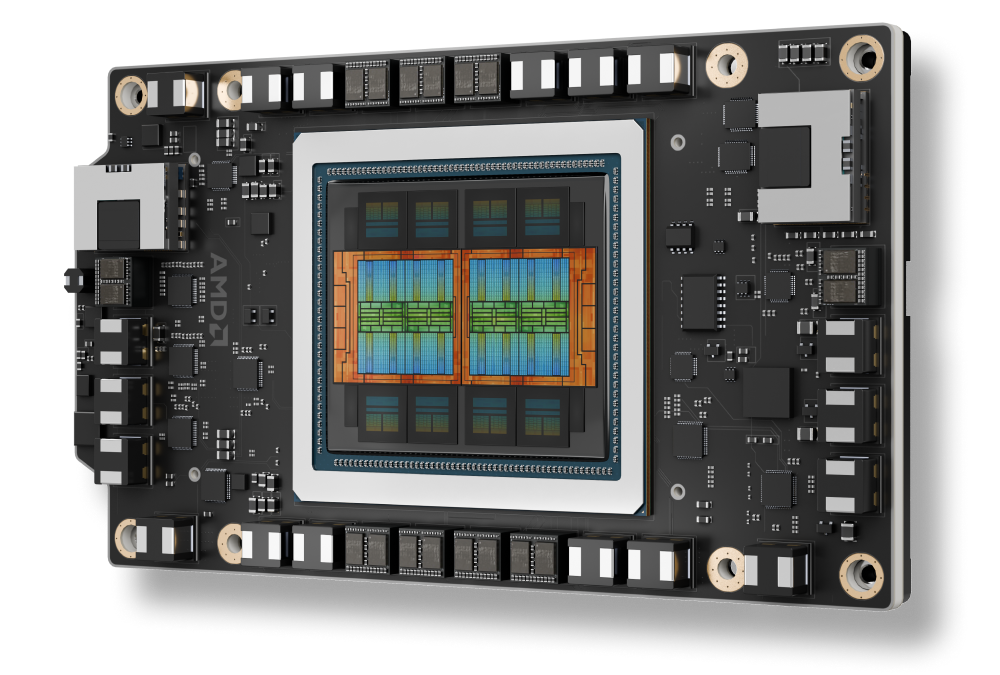

AMD Instinct™ MI350 Series Support

AMD CDNA™ 4 architecture, supporting new datatypes and advanced HBM

Advanced Features for Scaling AI

Seamless distributed inference, MoE training, reinforcement learning at scale

Enterprise-Ready AI Tools

Deploy and manage AI across clusters with orchestration and endpoint support

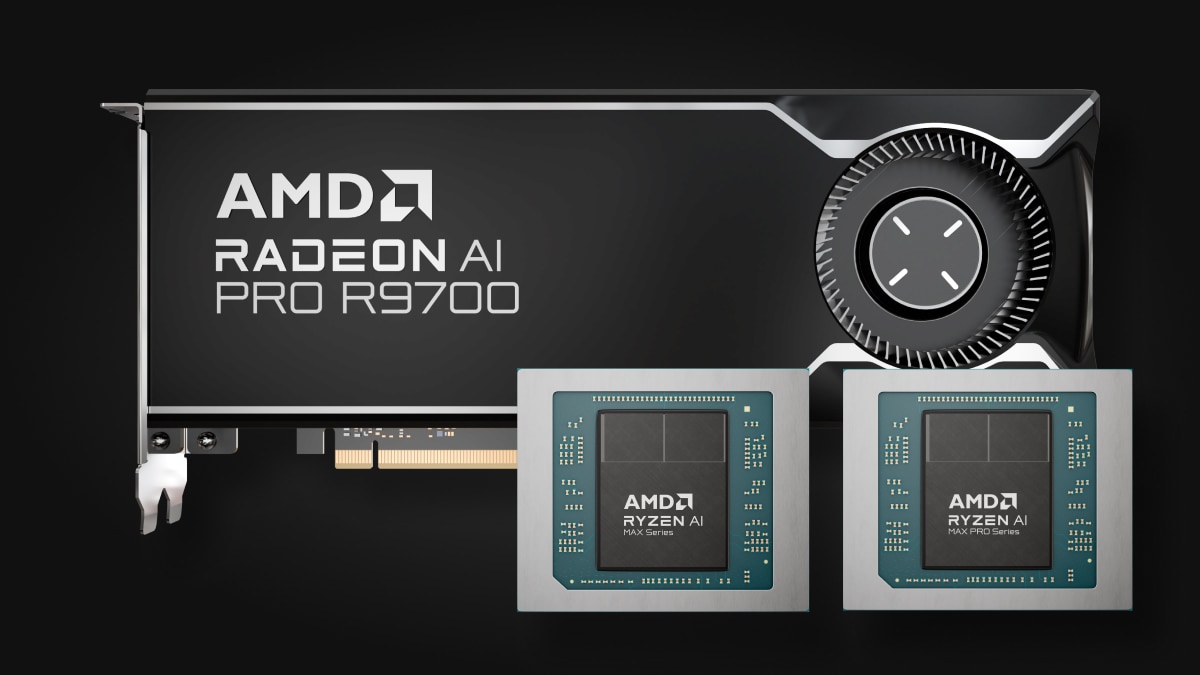

AI at the Endpoint

From Ryzen™ AI processors to Radeon™ graphics: versatile endpoint AI for any application

Generational Leap in Performance

ROCm 7 vs. ROCm 6: Inference¹ and Training²

AMD Instinct™ MI350 Series Support

Powering AMD Instinct™ MI350 Series GPUs

ROCm enables seamless integration of AMD Instinct MI350X platforms with open rack infrastructure, supporting rapid deployment and optimized AI performance at scale.

Scaling Enterprise AI

Distributed Inference with Open Ecosystem

With vLLM-d, DeepEP, SGLang, and GPU direct access, the ROCm software platform enables the highest-throughput serving at rack scale: across batches, across nodes, and across models.

ROCm for AI Lifecycle

ROCm software integrates with enterprise AI frameworks to provide a fully open-source, end-to-end workflow for production AI. It includes ROCm Enterprise AI and ROCm cluster management to simplify deployment and scaling.

AI at the Endpoint

Expanding ROCm Ecosystem Across AMD Ryzen™ AI Processor and AMD Radeon™ Graphics

The ROCm endpoint AI ecosystem is powered by TheRock, an efficient open-source build platform that delivers nightly releases of ROCm and PyTorch. Now supporting both Linux and Windows, it targets the latest Radeon RX 9000 Series and leading Ryzen AI Max products, providing a robust foundation for AI development and deployment.

A preview of PyTorch is now available, with Linux support for AMD Ryzen™ AI 300 Series* and Ryzen AI Max Series, and Windows support for AMD Ryzen AI 300 Series*, Ryzen AI Max Series, and all AMD Radeon™ RX 7000 and Radeon PRO W7000 Series (and newer).

*For a full list of supported products, visit our compatibility matrix page.

Get Started Today

Accelerate your AI/ML, high-performance computing, and data analytics workloads with the AMD Developer Cloud.

Stay Informed

Stay up to date with the latest ROCm news.

Footnotes

- MI300-080 - Testing by AMD Performance Labs as of May 15, 2025, measuring the inference performance in tokens per second (TPS) of AMD ROCm 6.x software, vLLM 0.3.3 vs. AMD ROCm 7.0 preview version SW, vLLM 0.8.5 on a system with (8) AMD Instinct MI300X GPUs running Llama 3.1-70B (TP2), Qwen 72B (TP2), and DeepSeek-R1 (FP16) models with batch sizes of 1-256 and sequence lengths of 128-204. Stated performance uplift is expressed as the average TPS over the (3) LLMs tested.

Hardware Configuration

1P AMD EPYC™ 9534 CPU server with 8x AMD Instinct™ MI300X (192GB, 750W) GPUs, Supermicro AS-8125GS-TNMR2, NPS1 (1 NUMA per socket), 1.5 TiB (24 DIMMs, 4800 MT/s memory, 64 GiB/DIMM), 4x 3.49TB Micron 7450 storage, BIOS version: 1.8

Software Configuration(s)

Ubuntu 22.04 LTS with Linux kernel 5.15.0-119-generic

Qwen 72B and Llama 3.1-70B: ROCm 7.0 preview version SW, PyTorch 2.7.0

DeepSeek-R1: ROCm 7.0 preview version SW, SGLang 0.4.6, PyTorch 2.6.0

vs.

Qwen 72B and Llama 3.1-70B: ROCm 6.x GA SW, PyTorch 2.7.0 and 2.1.1, respectively

DeepSeek-R1: ROCm 6.x GA SW, SGLang 0.4.1, PyTorch 2.5.0

Server manufacturers may vary configurations, yielding different results. Performance may vary based on configuration, software, vLLM version, and the use of the latest drivers and optimizations.

- MI300-081 - Testing conducted by AMD Performance Labs as of May 15, 2025, to measure the training performance (TFLOPS) of ROCm 7.0 preview version software and Megatron-LM on (8) AMD Instinct MI300X GPUs running Llama 2-70B (4K), Qwen1.5-14B, and Llama3.1-8B models in a custom Docker container, vs. a similarly configured system with AMD ROCm 6.0 software.

Hardware Configuration

1P AMD EPYC™ 9454 CPU, 8x AMD Instinct MI300X (192GB, 750W) GPUs, American Megatrends International LLC BIOS version: 1.8

Software Configuration

Ubuntu 22.04 LTS with Linux kernel 5.15.0-70-generic

ROCm 7.0, Megatron-LM, PyTorch 2.7.0

vs.

ROCm 6.0 public release SW, Megatron-LM code branches hanl/disable_te_llama2 for Llama 2-7B, guihong_dev for Llama 2-70B, renwuli/disable_te_qwen1.5 for Qwen1.5-14B, PyTorch 2.2

Server manufacturers may vary configurations, yielding different results. Performance may vary based on configuration, software, vLLM version, and the use of the latest drivers and optimizations.
