Leadership AI & HPC Acceleration

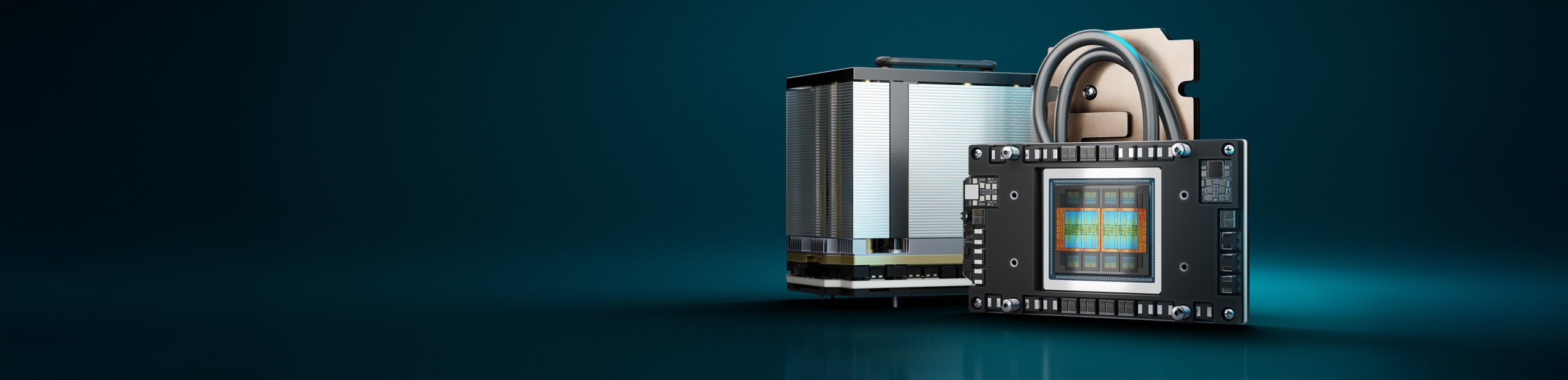

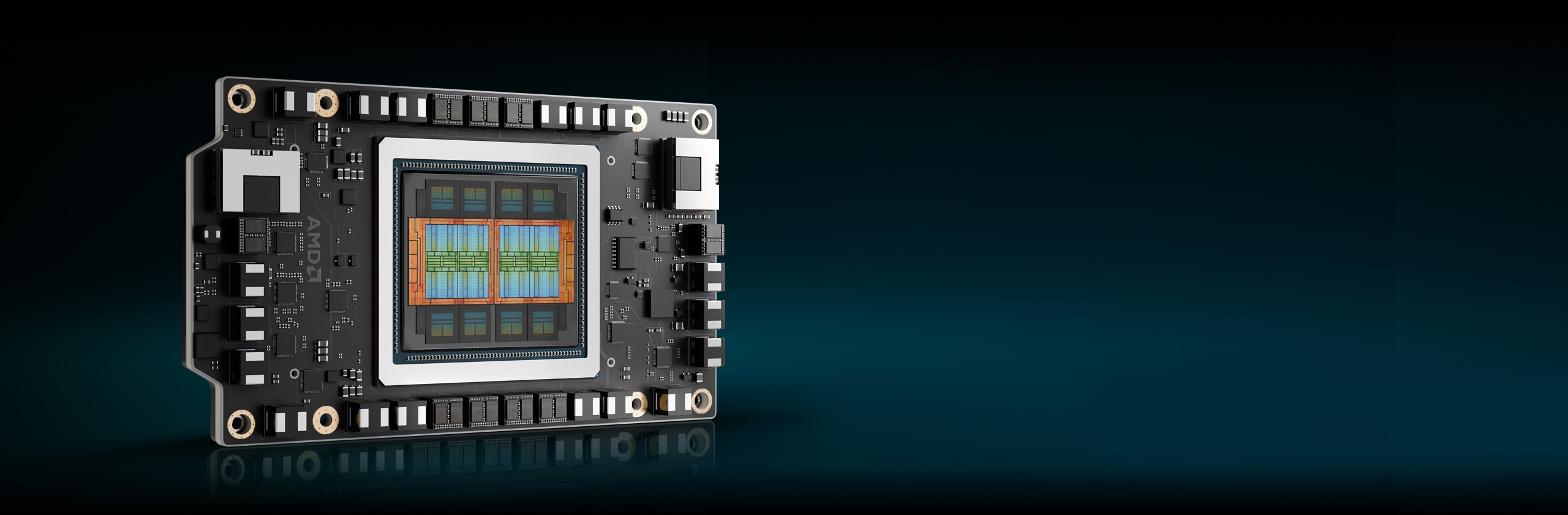

AMD Instinct™ MI350 Series GPUs set a new standard for Generative AI and high performance computing (HPC) in data centers. Built on the new cutting-edge 4th Gen AMD CDNA™ architecture, these GPUs deliver exceptional efficiency and performance for training massive AI models, high-speed inference, and complex HPC workloads like scientific simulations, data processing, and computational modeling.

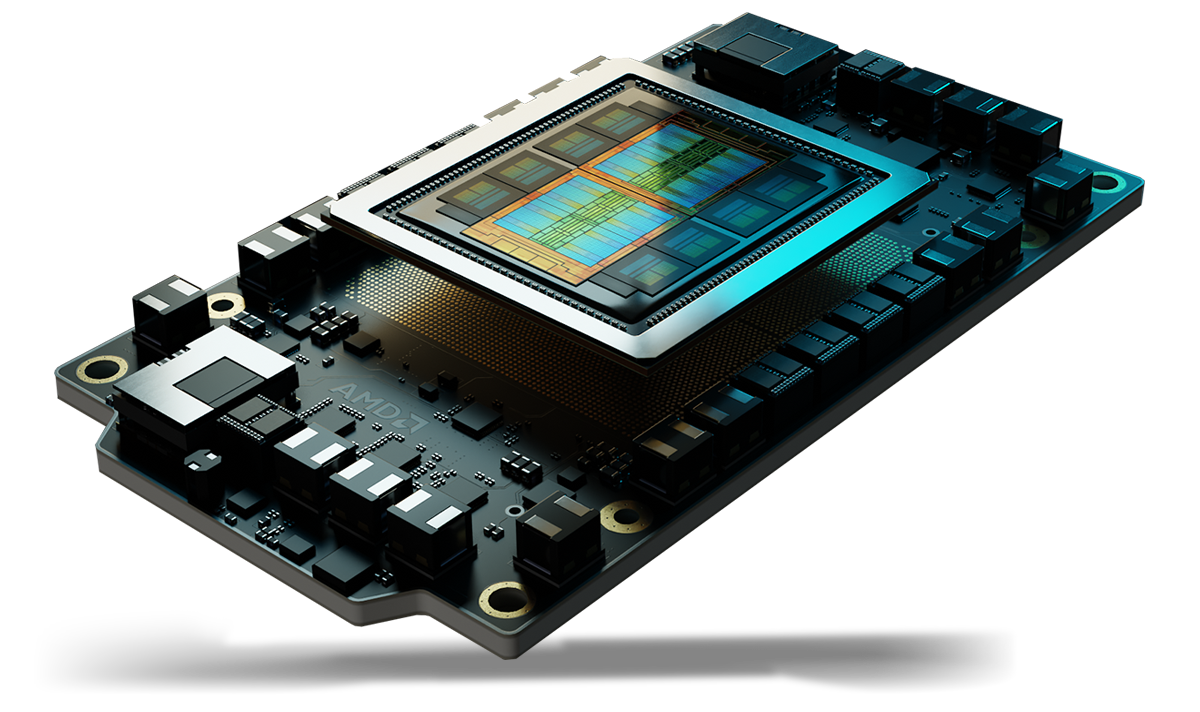

Under the Hood

The Ultimate AI and HPC Performance

Built on the cutting-edge 4th Gen AMD CDNA™ architecture, AMD Instinct™ MI350 Series GPUs feature powerful and energy-efficient cores, maximizing performance per watt to drive the next era of AI and HPC innovation.

Benefits

- Breakthrough AI Acceleration With Huge Memory

- Advanced Security for AI & HPC

- Seamless Deployment & AI Optimization

- Trusted by AI Leaders

Breakthrough AI Acceleration With Huge Memory

The AMD Instinct™ MI350 Series GPUs redefine AI acceleration with next-gen MXFP6 and MXFP4 datatype support, optimizing efficiency, bandwidth, and energy use for lightning-fast AI inference and training.

Designed to fuel performance of the most demanding AI models, Instinct MI350 GPUs boast a massive 288GB of HBM3E memory and 8TB/s bandwidth, delivering a huge leap in performance over previous generations.1

Advanced Security for AI & HPC

AMD Instinct™ MI350 Series GPUs help ensure trusted firmware, verify hardware integrity, enable secure multi-tenant GPU sharing, and encrypt GPU communication—helping enhance reliability, scalability, and data security for cloud AI and mission-critical workloads.

Seamless Deployment & AI Optimization

AMD Instinct™ MI350 Series GPUs help enable frictionless adoption with drop-in compatibility, while the AMD GPU Operator simplifies deployment and workload configuration in Kubernetes. Powered by the open AMD ROCm™ software stack, developers get Day 0 support for leading AI frameworks and models from OpenAI, Meta, PyTorch, Hugging Face, and more— helping ensure efficient, high-performance execution without vendor lock-in.

Trusted by AI Leaders

Industry leaders and innovators trust AMD Instinct™ GPUs for large-scale AI, powering models like Llama 405B and GPT. Broad AMD Instinct GPU adoption by CSPs & OEMs are helping to drive next-gen AI at scale.

Meet the Series

Explore AMD Instinct MI350 Series GPUs and AMD Instinct MI350 Series Platforms.

Meet the AMD Instinct™ MI350 Series GPUs

Built on 4th Gen AMD CDNA™ architecture, AMD Instinct™ MI350 Series GPUs deliver exceptional AI inference, training, and HPC workload performance with massive 288GB HBM3E memory, 8TB/s bandwidth, and expanded datatype support including MXFP6, MXFP4.

256 CUs

256 GPU Compute Units

288 GB

288 GB HBM3E Memory

8 TB/s

8 TB/s Peak Theoretical Memory Bandwidth

Specs Comparisons

- AI Performance

- HPC Performance

- Memory

AI Performance (Peak PFLOPs)

Up to 2.2X the AI performance vs. competitive accelerators2

FP16/BF16 Tensor / FP16/BF16 Matrix

(Sparsity)

FP8 Tensor / OCP-FP8 Matrix

(Sparsity)

FP6 Tensor / MXFP6 Matrix

B200 SXM5 180GB

MI355X OAM 288GB

HPC Performance (Peak TFLOPs)

Up to 2.1X the HPC performance vs. competitive accelerators3

B200 SXM5 180GB

MI355X OAM 288GB

Memory Capacity & Bandwidth

1.6X Memory Capacity vs. competitive accelerators1

B200 SXM5 180GB

MI355X OAM 288GB

AMD Instinct Platforms

The AMD Instinct MI350 Series Platforms integrate 8 fully connected MI355X or MI350X GPU OAM modules onto an industry standard OCP design via 4th Gen AMD Infinity Fabric™ technology, with an industry leadership 2.3TB HBM3E memory capacity for high throughput AI processing. These ready-to-deploy platforms now offer support for a variety of systems, from standard air cooled UBB-based servers to ultra dense Direct Liquid Cooled (DLC) platforms, helping to accelerate time-to-market and reduce development costs when adding AMD Instinct MI350 Series GPUs into existing AI rack and server infrastructures.

8 MI350 Series GPUs

Eight (8) MI355X or MI350X GPU OAM modules

2.3 TB

2.3 TB Total HBM3E Memory

64 TB/s

64 TB/s Peak Theoretical Aggregate Memory Bandwidth

AMD ROCm™ Software

AMD ROCm™ software includes a broad set of programming models, tools, compilers, libraries, and runtimes for AI models and HPC workloads targeting AMD Instinct GPUs.

Case Studies

Find Solutions

Experience AMD Instinct GPUs in the Cloud

Support your AI, HPC, and software development needs with programs supported by leading cloud service providers.

AMD Instinct GPU Partners and Server Solutions

AMD collaborates with leading Original Equipment Manufacturers (OEMs), and platform designers to offer a robust ecosystem of AMD Instinct GPU-powered solutions.

Resources

Stay Informed

Sign up to receive the latest data center news and server content.

Footnotes

- Calculations conducted by AMD Performance Labs as of May 22nd, 2025, based on current specifications and /or estimation. The AMD Instinct™ MI355X OAM accelerators have 288GB HBM3e memory capacity and 8 TB/s GPU peak theoretical memory bandwidth performance. The highest published results on the NVidia Hopper H200 (141GB) SXM GPU accelerator resulted in 141GB HBM3e memory capacity and 4.8 TB/s GPU memory bandwidth performance. https://nvdam.widen.net/s/nb5zzzsjdf/hpc-datasheet-sc23-h200-datasheet-3002446 The highest published results on the NVidia Blackwell HGX B200 (180GB) GPU accelerator resulted in 180GB HBM3e memory capacity and 7.7 TB/s GPU memory bandwidth performance. https://nvdam.widen.net/s/wwnsxrhm2w/blackwell-datasheet-3384703 The highest published results on the NVidia Grace Blackwell GB200 (186GB) GPU accelerator resulted in 186GB HBM3e memory capacity and 8 TB/s GPU memory bandwidth performance. https://nvdam.widen.net/s/wwnsxrhm2w/blackwell-datasheet-3384703 MI350-001

- Based on calculations by AMD Performance Labs in May 2025, to determine the peak theoretical precision performance for the AMD Instinct™ MI350X / MI355X GPUs, when comparing FP64, FP32, FP16, OCP-FP8, FP8, MXFP6, FP6, MXFP4, FP4, INT8, and bfloat16 datatypes with Vector, Matrix, or Tensor with Sparsity as applicable, vs. NVIDIA Blackwell B200 accelerator. Server manufacturers may vary configurations, yielding different results. MI350-009A

- Based on calculations by AMD Performance Labs in May 2025, to determine the peak theoretical precision performance for the AMD Instinct™ MI350X / MI355X GPUs, when comparing FP64 and FP32 with Vector, Matrix or Tensor as applicable, vs. NVIDIA Blackwell B200 accelerator. Results may vary based on server configuration, datatype, and workload. Performance may vary based on use of latest drivers and optimizations. MI350-019

- Based on calculations by AMD as of April 17, 2025, using the published memory specifications of the AMD Instinct MI350X / MI355X GPUs (288GB) vs MI300X (192GB) vs MI325X (256GB). Calculations performed with FP16 precision datatype at (2) bytes per parameter, to determine the minimum number of GPUs (based on memory size) required to run the following LLMs: OPT (130B parameters), GPT-3 (175B parameters), BLOOM (176B parameters), Gopher (280B parameters), PaLM 1 (340B parameters), Generic LM (420B, 500B, 520B, 1.047T parameters), Megatron-LM (530B parameters), LLaMA ( 405B parameters) and Samba (1T parameters). Results based on GPU memory size versus memory required by the model at defined parameters, plus 10% overhead. Server manufacturers may vary configurations, yielding different results. Results may vary based on GPU memory configuration, LLM size, and potential variance in GPU memory access or the server operating environment. *All data based on FP16 datatype. For FP8 = X2. For FP4 = X4. MI350-012

- Based on calculations by AMD Performance Labs in May 2025, for the 8 GPU AMD Instinct™ MI350X / MI355X Platforms to determine the peak theoretical precision performance when comparing FP64, FP32, FP16, OCP-FP8, FP8, MXFP6, FP6, MXFP4, FP4, and INT8 datatypes with Matrix, Tensor, Vector and Sparsity, as applicable vs. NVIDIA HGX Blackwell B200 accelerator platform. Results may vary based on configuration, datatype, and workload. MI350-010A

- Calculations conducted by AMD Performance Labs as of May 22nd, 2025, based on current specifications and /or estimation. The AMD Instinct™ MI355X OAM accelerators have 288GB HBM3e memory capacity and 8 TB/s GPU peak theoretical memory bandwidth performance. The highest published results on the NVidia Hopper H200 (141GB) SXM GPU accelerator resulted in 141GB HBM3e memory capacity and 4.8 TB/s GPU memory bandwidth performance. https://nvdam.widen.net/s/nb5zzzsjdf/hpc-datasheet-sc23-h200-datasheet-3002446 The highest published results on the NVidia Blackwell HGX B200 (180GB) GPU accelerator resulted in 180GB HBM3e memory capacity and 7.7 TB/s GPU memory bandwidth performance. https://nvdam.widen.net/s/wwnsxrhm2w/blackwell-datasheet-3384703 The highest published results on the NVidia Grace Blackwell GB200 (186GB) GPU accelerator resulted in 186GB HBM3e memory capacity and 8 TB/s GPU memory bandwidth performance. https://nvdam.widen.net/s/wwnsxrhm2w/blackwell-datasheet-3384703 MI350-001

- Based on calculations by AMD Performance Labs in May 2025, to determine the peak theoretical precision performance for the AMD Instinct™ MI350X / MI355X GPUs, when comparing FP64, FP32, FP16, OCP-FP8, FP8, MXFP6, FP6, MXFP4, FP4, INT8, and bfloat16 datatypes with Vector, Matrix, or Tensor with Sparsity as applicable, vs. NVIDIA Blackwell B200 accelerator. Server manufacturers may vary configurations, yielding different results. MI350-009A

- Based on calculations by AMD Performance Labs in May 2025, to determine the peak theoretical precision performance for the AMD Instinct™ MI350X / MI355X GPUs, when comparing FP64 and FP32 with Vector, Matrix or Tensor as applicable, vs. NVIDIA Blackwell B200 accelerator. Results may vary based on server configuration, datatype, and workload. Performance may vary based on use of latest drivers and optimizations. MI350-019

- Based on calculations by AMD as of April 17, 2025, using the published memory specifications of the AMD Instinct MI350X / MI355X GPUs (288GB) vs MI300X (192GB) vs MI325X (256GB). Calculations performed with FP16 precision datatype at (2) bytes per parameter, to determine the minimum number of GPUs (based on memory size) required to run the following LLMs: OPT (130B parameters), GPT-3 (175B parameters), BLOOM (176B parameters), Gopher (280B parameters), PaLM 1 (340B parameters), Generic LM (420B, 500B, 520B, 1.047T parameters), Megatron-LM (530B parameters), LLaMA ( 405B parameters) and Samba (1T parameters). Results based on GPU memory size versus memory required by the model at defined parameters, plus 10% overhead. Server manufacturers may vary configurations, yielding different results. Results may vary based on GPU memory configuration, LLM size, and potential variance in GPU memory access or the server operating environment. *All data based on FP16 datatype. For FP8 = X2. For FP4 = X4. MI350-012

- Based on calculations by AMD Performance Labs in May 2025, for the 8 GPU AMD Instinct™ MI350X / MI355X Platforms to determine the peak theoretical precision performance when comparing FP64, FP32, FP16, OCP-FP8, FP8, MXFP6, FP6, MXFP4, FP4, and INT8 datatypes with Matrix, Tensor, Vector and Sparsity, as applicable vs. NVIDIA HGX Blackwell B200 accelerator platform. Results may vary based on configuration, datatype, and workload. MI350-010A