Cost-Effective Inference for Enterprise AI

As the industry shifts from training models to running them, CPUs can pull double duty: run AI and general-purpose workloads side by side.

Deploy small and mid-size models on AMD EPYC™ 9005 server CPUs, on premises or in the cloud, and help maximize the value of your computing investments.

To avoid overprovisioning and get the best return on your AI investments, match your model size and latency requirements to the right hardware. The latest generations of AMD EPYC server CPUs can handle a range of AI tasks alongside general-purpose workloads. As model sizes grow, request volumes rise, and low latency becomes critical, GPUs become the more efficient and cost-effective choice.

| AI Inference Workload | CPUs | CPUs + PCIe-Based GPU | GPU Clusters |
|---|:-:|:-:|:-:|
| Document processing and classification | ✓ | | |
| Data mining and analytics | ✓ | | ✓ |
| Scientific simulations | ✓ | | |
| Translation | ✓ | | |
| Indexing | ✓ | | |
| Content moderation | ✓ | | |
| Predictive maintenance | ✓ | | ✓ |
| Virtual assistants | ✓ | ✓ | |
| Chatbots | ✓ | ✓ | |
| Expert agents | ✓ | ✓ | |
| Video captioning | ✓ | ✓ | |
| Fraud detection | | ✓ | ✓ |
| Decision-making | | ✓ | ✓ |
| Dynamic pricing | | ✓ | ✓ |
| Audio and video filtering | | ✓ | ✓ |
| Financial trading | | | ✓ |
| Telecommunications and networking | | | ✓ |
| Autonomous systems | | | ✓ |

✓ = good fit.

Depending on your workload requirements, either high-core-count CPUs alone or a combination of CPUs and GPUs works best for inference. Learn more about which infrastructure fits your model size and latency needs.

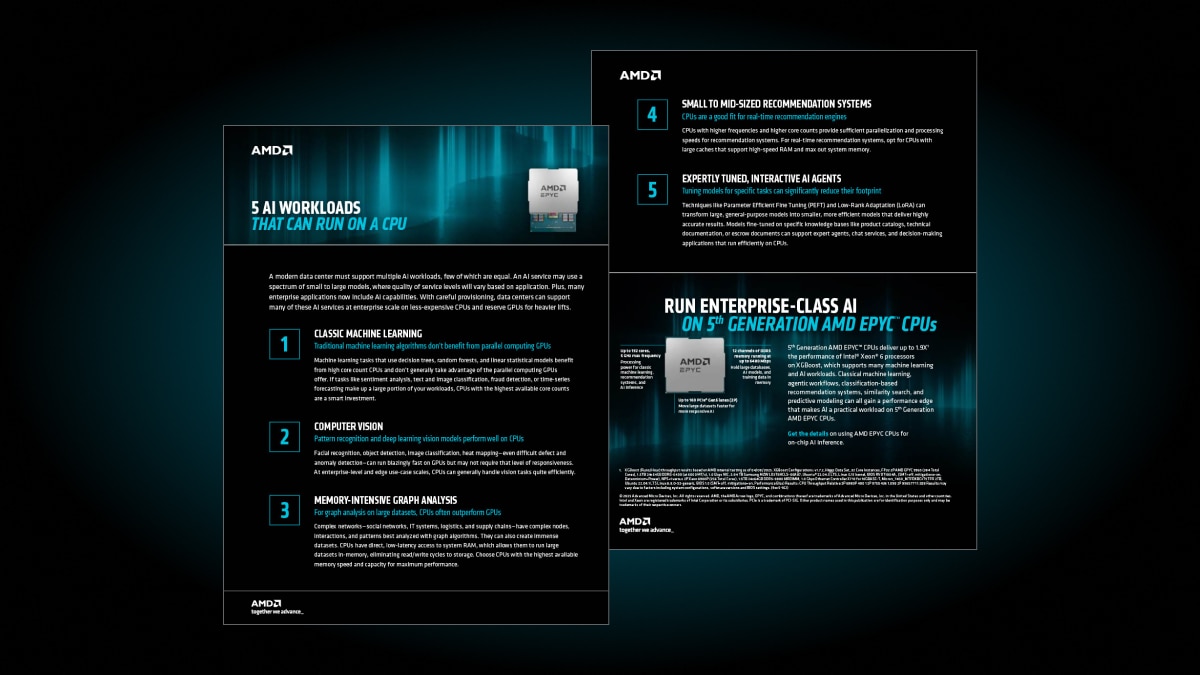

The latest AMD EPYC server CPUs can meet the performance requirements of a range of AI workloads, including classic machine learning, computer vision, and AI agents. Read about five popular workloads that run great on CPUs.

Whether deployed in a CPU-only server or used as a host for GPUs executing larger models, AMD EPYC server CPUs are designed with the latest open standard technologies to accelerate enterprise AI inference workloads.

Claims compare 5th Gen AMD EPYC 9965 server CPUs versus Intel Xeon 6980P.

[Figure: five performance comparison charts, 5th Gen AMD EPYC 9965 vs. Intel Xeon 6980P]

First, determine your performance needs. How fast do you need responses in terms of minutes, seconds, or milliseconds? How big are the models you’re running in terms of parameters? You may be able to meet performance requirements simply by upgrading to a 5th Gen AMD EPYC CPU, avoiding the cost of GPU hardware.

If you don’t need responses in real time, batch inference is cost-efficient for large-scale and long-term analysis, such as analyzing campaign performance or predicting maintenance needs. Real-time inference that supports interactive use cases like financial trading and autonomous systems may need GPU accelerators. CPUs alone are excellent for batch inference, while GPUs are best suited to real-time inference.
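To make the batch pattern concrete, here is a minimal Python sketch, assuming a hypothetical `predict_batch` callable that stands in for whatever model you deploy: records are collected and scored in fixed-size chunks instead of one request at a time. The names `batched`, `run_batch_inference`, and `predict_batch`, and the default batch size of 64, are illustrative assumptions, not part of any AMD API.

```python
from typing import Callable, Iterable, Iterator, List, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def batched(items: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive chunks of at most `batch_size` items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    batch: List[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # flush the final, possibly shorter, chunk
        yield batch


def run_batch_inference(
    records: Iterable[T],
    predict_batch: Callable[[List[T]], Sequence[R]],  # hypothetical model entry point
    batch_size: int = 64,  # illustrative default; tune per model and CPU
) -> List[R]:
    """Score every record offline, one chunk at a time, preserving input order."""
    results: List[R] = []
    for batch in batched(records, batch_size):
        outputs = predict_batch(batch)
        if len(outputs) != len(batch):
            raise RuntimeError("model returned a different number of outputs than inputs")
        results.extend(outputs)
    return results


if __name__ == "__main__":
    # Toy stand-in for a predictive-maintenance model: flag readings above a threshold.
    readings = [0.2, 0.9, 0.4, 0.95, 0.1]
    flags = run_batch_inference(readings, lambda b: [r > 0.8 for r in b], batch_size=2)
    print(flags)  # [False, True, False, True, False]
```

Larger batches generally amortize per-call overhead at the cost of longer waits for any individual record, which is why this pattern suits offline analysis better than interactive requests.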

CPUs alone offer enough performance for inference on models up to ~20 billion parameters and for mid-latency response times (seconds to minutes). This is sufficient for many AI assistants, chatbots, and agents. Consider adding GPU accelerators when models are larger or response times must be faster than this.
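The rule of thumb above can be expressed as a first-pass screening function. This is a minimal Python sketch, not an AMD sizing tool: `recommend_tier`, `Tier`, and both threshold constants are hypothetical names, the ~20-billion-parameter cutoff comes from the guidance above, and reading "seconds to minutes" as a latency budget of at least one second is an assumption. Benchmark your own model before committing to hardware.

```python
from enum import Enum

# Rough rules of thumb; both thresholds are approximations.
CPU_MAX_PARAMS_BILLIONS = 20.0  # "models up to ~20 billion parameters"
CPU_MIN_LATENCY_SECONDS = 1.0   # assumption: "seconds to minutes" read as a budget of >= ~1 s


class Tier(Enum):
    CPU_ONLY = "CPUs alone"
    CPU_PLUS_GPU = "CPUs + GPU accelerators"


def recommend_tier(params_billions: float, latency_budget_s: float) -> Tier:
    """First-pass hardware suggestion from model size and response-time budget."""
    if params_billions <= 0 or latency_budget_s <= 0:
        raise ValueError("model size and latency budget must be positive")
    fits_cpu_size = params_billions <= CPU_MAX_PARAMS_BILLIONS
    fits_cpu_latency = latency_budget_s >= CPU_MIN_LATENCY_SECONDS
    if fits_cpu_size and fits_cpu_latency:
        return Tier.CPU_ONLY
    # Larger models or sub-second budgets: consider adding GPU accelerators.
    return Tier.CPU_PLUS_GPU


if __name__ == "__main__":
    print(recommend_tier(8, 5.0).value)   # CPUs alone
    print(recommend_tier(70, 5.0).value)  # CPUs + GPU accelerators
    print(recommend_tier(8, 0.05).value)  # CPUs + GPU accelerators
```

The sketch deliberately ignores request volume and the GPU-cluster tier from the workload table; in practice, throughput targets and concurrency can also push a workload toward accelerators.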

The short answer is that it depends: peak performance varies widely with the workload and with the tuning expertise applied to it. That said, select 5th Gen AMD EPYC server CPUs outperform comparable Intel Xeon 6 processors in inference for many popular AI workloads, including large language models (DeepSeek-R1 671B),[3] medium language models (Llama 3.1 8B[4] and GPT-J 6B[6]), and small language models (Llama 3.2 1B).[5]

AMD EPYC server CPUs include AMD Infinity Guard, a set of silicon-based security features.[7] AMD Infinity Guard includes AMD Secure Encrypted Virtualization (AMD SEV), a widely adopted confidential computing technology that uses confidential virtual machines (VMs) to help protect data, AI models, and workloads at runtime.

Match your infrastructure needs to your AI ambitions. AMD offers the broadest AI portfolio, open standards-based platforms, and a powerful ecosystem—all backed by performance leadership.

With AMD ZenDNN and AMD ROCm™ software, developers can optimize their application performance while using their choice of frameworks.